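When processing audio uploaded by users, the file format differs from device to device and is hard to standardize. This is especially painful when the audio feeds an algorithm model, which usually expects one fixed format. This post presents two ways to solve the problem.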

Without a third-party library

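This approach uses the classes in the javax.sound.sampled package. Its drawback is that you must know the input audio's sample rate, bit depth, channel count, signedness and byte order in advance.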
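
Headerless PCM bytes carry no format information, which is why the source parameters must be supplied. Everything else follows from them: the frame size is channels × bytes per sample, the frame count is the byte count divided by the frame size, and the duration is the frame count divided by the frame rate. Below is a small sketch of that arithmetic using the real `javax.sound.sampled.AudioFormat` class. The class `PcmMath`, its helpers, and the 64000-byte buffer are illustrative assumptions.

```java
import javax.sound.sampled.AudioFormat;

public class PcmMath {

    /** Number of whole frames in a headerless PCM buffer of the given format. */
    static long frameCount(long pcmBytes, AudioFormat format) {
        // Frame size = channels * bytes per sample, e.g. 2 * 2 = 4 bytes for 16-bit stereo
        return pcmBytes / format.getFrameSize();
    }

    /** Duration in seconds implied by the buffer size and the format. */
    static double durationSeconds(long pcmBytes, AudioFormat format) {
        return frameCount(pcmBytes, format) / (double) format.getFrameRate();
    }

    public static void main(String[] args) {
        // Assumed source: 16 kHz, 16-bit, stereo, signed, little-endian
        AudioFormat stereo = new AudioFormat(16000f, 16, 2, true, false);
        // Target in this post: 16 kHz, 16-bit, mono, signed, little-endian
        AudioFormat mono = new AudioFormat(16000f, 16, 1, true, false);
        long bytes = 64000; // hypothetical buffer size

        System.out.println(frameCount(bytes, stereo));       // 16000
        System.out.println(durationSeconds(bytes, stereo));  // 1.0
        // The same audio as 16-bit mono needs half the bytes
        System.out.println(frameCount(bytes, stereo) * mono.getFrameSize()); // 32000
    }
}
```

If the frame count is hard-coded for one layout (for example stereo 16-bit) instead of being derived from `getFrameSize()`, it will be wrong for any other input format.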

Utility class

    import com.example.phoneme.constant.WavConstant;
    import lombok.extern.slf4j.Slf4j;

    import javax.sound.sampled.*;
    import java.io.*;
    import java.util.Arrays;

    @Slf4j
    public class WavUtils {

        /** Strips the WAV header, assuming the canonical 44-byte header. */
        public static byte[] toPCM(byte[] src) {
            if (src.length > 44) {
                return Arrays.copyOfRange(src, 44, src.length);
            }
            return new byte[0];
        }

        /**
         * Converts headerless PCM in the given source format to 16 kHz / mono / 16-bit
         * signed little-endian and returns the result as a complete WAV file.
         */
        public static byte[] convertTo16kHzMono16bitPCM(byte[] audioData, int sampleRate, int sampleSizeBits,
                                                        int channels, boolean signed, boolean bigEndian) {
            AudioFormat sourceFormat = new AudioFormat(sampleRate, sampleSizeBits, channels, signed, bigEndian);
            AudioFormat targetFormat = new AudioFormat(WavConstant.SAMPLE_RATE, WavConstant.BIT_DEPTH,
                    WavConstant.CHANNELS, WavConstant.SIGNED, WavConstant.BIG_ENDIAN);
            // Frame length = bytes / frame size (channels * bytes per sample)
            long frameLength = audioData.length / sourceFormat.getFrameSize();
            try (AudioInputStream source = new AudioInputStream(
                         new ByteArrayInputStream(audioData), sourceFormat, frameLength);
                 // Java Sound picks a converter for this format pair
                 AudioInputStream converted = AudioSystem.getAudioInputStream(targetFormat, source)) {
                // Buffer the converted samples: the converted stream's length may be unknown
                // (e.g. after resampling), but writing a WAV to an OutputStream needs it
                ByteArrayOutputStream pcm = new ByteArrayOutputStream();
                byte[] buf = new byte[4096];
                int n;
                while ((n = converted.read(buf)) != -1) {
                    pcm.write(buf, 0, n);
                }
                byte[] samples = pcm.toByteArray();
                AudioInputStream sized = new AudioInputStream(new ByteArrayInputStream(samples),
                        targetFormat, samples.length / targetFormat.getFrameSize());
                // Writing as WAVE prepends a WAV header to the converted samples
                ByteArrayOutputStream out = new ByteArrayOutputStream();
                AudioSystem.write(sized, AudioFileFormat.Type.WAVE, out);
                return out.toByteArray();
            } catch (IOException | IllegalArgumentException e) {
                // IllegalArgumentException: no converter exists for this format pair
                log.error("Audio conversion failed", e);
                return null;
            }
        }

        /** Checks that the bytes are a WAV file in the target format (despite its name, it checks audio). */
        public static boolean checkVideo(byte[] fileBytes) {
            try (InputStream inputStream = new ByteArrayInputStream(fileBytes)) {
                // Read the file format from the header
                AudioFileFormat audioFileFormat = AudioSystem.getAudioFileFormat(inputStream);
                if (audioFileFormat.getType() != AudioFileFormat.Type.WAVE) {
                    log.info("Not a WAV file");
                    return false;
                }
                AudioFormat audioFormat = audioFileFormat.getFormat();
                float sampleRate = audioFormat.getSampleRate();
                int channels = audioFormat.getChannels();
                int bitDepth = audioFormat.getSampleSizeInBits();
                log.info("Uploaded audio format: Sample Rate: {}, Channels: {}, Bit Depth: {}",
                        sampleRate, channels, bitDepth);
                if (sampleRate == WavConstant.SAMPLE_RATE
                        && channels == WavConstant.CHANNELS
                        && bitDepth == WavConstant.BIT_DEPTH) {
                    log.info("Check passed");
                    return true;
                }
                log.info("WAV file, but not in the required format");
                return false;
            } catch (UnsupportedAudioFileException | IOException e) {
                log.info("Not a WAV file");
                return false;
            }
        }
    }

Constants class

    public interface WavConstant {
        float SAMPLE_RATE = 16000.0f; // f suffix: a float field needs a float literal
        int CHANNELS = 1;
        int BIT_DEPTH = 16;
        boolean SIGNED = true;
        boolean BIG_ENDIAN = false;
    }
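
`toPCM` assumes the canonical 44-byte header. For the format defined by these constants, that header records a byte rate of 16000 × 1 × 16 / 8 = 32000 bytes/s and a block align of 2 bytes. Not every WAV file has exactly 44 header bytes, though: a file may carry extra chunks, such as a LIST metadata chunk, before `data`. The sketch below builds the canonical header and then finds the samples by walking the RIFF chunks instead of assuming a fixed offset. The names `canonicalHeader`, `findDataOffset`, and `withListChunk` are illustrative and do not come from the original.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

public class WavHeader {

    private static byte[] ascii(String s) {
        return s.getBytes(StandardCharsets.US_ASCII);
    }

    /** Canonical 44-byte PCM WAV header: "RIFF", a 16-byte "fmt " chunk, then the "data" chunk header. */
    static byte[] canonicalHeader(int sampleRate, int channels, int bitsPerSample, int dataLength) {
        int blockAlign = channels * bitsPerSample / 8;  // bytes per frame
        int byteRate = sampleRate * blockAlign;         // bytes per second
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put(ascii("RIFF")).putInt(36 + dataLength).put(ascii("WAVE"));
        b.put(ascii("fmt ")).putInt(16)
                .putShort((short) 1)                    // 1 = uncompressed PCM
                .putShort((short) channels)
                .putInt(sampleRate)
                .putInt(byteRate)
                .putShort((short) blockAlign)
                .putShort((short) bitsPerSample);
        b.put(ascii("data")).putInt(dataLength);
        return b.array();
    }

    /** Offset of the first sample byte, or -1 if no "data" chunk is found. */
    static int findDataOffset(byte[] wav) {
        ByteBuffer b = ByteBuffer.wrap(wav).order(ByteOrder.LITTLE_ENDIAN);
        int pos = 12;                                   // skip "RIFF", size, "WAVE"
        while (pos + 8 <= wav.length) {
            String id = new String(wav, pos, 4, StandardCharsets.US_ASCII);
            int size = b.getInt(pos + 4);
            if (id.equals("data")) {
                return pos + 8;
            }
            if (size < 0 || size > wav.length - pos - 8) {
                return -1;                              // corrupt or truncated chunk
            }
            pos += 8 + size + (size & 1);               // chunks are padded to an even length
        }
        return -1;
    }

    /** Hypothetical test input: inserts an empty "LIST"/"INFO" chunk between "fmt " and "data". */
    static byte[] withListChunk(byte[] header) {
        ByteBuffer b = ByteBuffer.allocate(header.length + 12).order(ByteOrder.LITTLE_ENDIAN);
        b.put(header, 0, 36);                           // RIFF header + fmt chunk
        b.put(ascii("LIST")).putInt(4).put(ascii("INFO"));
        b.put(header, 36, header.length - 36);          // data chunk header (and samples, if any)
        b.putInt(4, b.getInt(4) + 12);                  // keep the RIFF size consistent
        return b.array();
    }

    public static void main(String[] args) {
        // 16 kHz, mono, 16-bit; 32000 data bytes = one second of samples
        byte[] header = canonicalHeader(16000, 1, 16, 32000);
        System.out.println(header.length);                          // 44
        System.out.println(findDataOffset(header));                 // 44
        System.out.println(findDataOffset(withListChunk(header)));  // 56
    }
}
```

With an offset from `findDataOffset`, `Arrays.copyOfRange(src, offset, src.length)` extracts the samples in the same way that `toPCM` does, without relying on the fixed 44-byte header.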

Test class

    import java.nio.file.Files;
    import java.nio.file.Paths;

    @Test
    public void testTransform() throws IOException {
        // Read the whole file into memory
        byte[] fileBytes = Files.readAllBytes(Paths.get("/path/to/file"));
        boolean b1 = WavUtils.checkVideo(fileBytes);
        log.info("File meets the requirements: {}", b1);

        log.info("Starting conversion");
        // The source format must be known: here 16 kHz, 16-bit, 2 channels, signed, little-endian.
        // toPCM strips the header so that only samples are converted.
        byte[] bytes = WavUtils.convertTo16kHzMono16bitPCM(WavUtils.toPCM(fileBytes), 16000, 16, 2, true, false);
        boolean b2 = WavUtils.checkVideo(bytes);
        log.info("Converted file length in bytes: {}", bytes.length);
        log.info("File meets the requirements: {}", b2);
    }

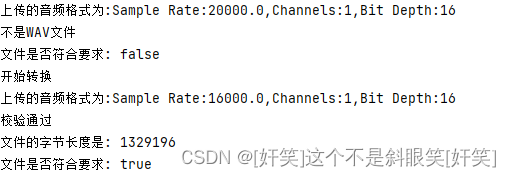

Output of the test run
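
When the upload is already a WAV file that Java Sound can parse, you can avoid the drawback of passing the format by hand. The header carries the source format, so `AudioSystem.getAudioInputStream(InputStream)` can read it. This is a minimal sketch under that assumption. The class and method names are illustrative, `TARGET` mirrors the values in `WavConstant`, and the conversion still depends on the JDK providing a converter for the requested change. Compressed uploads such as MP3 or AAC are generally not readable by the stock JDK, and that is where ffmpeg becomes useful.

```java
import javax.sound.sampled.*;
import java.io.*;

public class WavHeaderConvert {

    // Same target as WavConstant: 16 kHz, 16-bit, mono, signed, little-endian
    static final AudioFormat TARGET = new AudioFormat(16000f, 16, 1, true, false);

    /** Reads the source format from the WAV header, converts to TARGET and returns a WAV file. */
    static byte[] convertWav(byte[] wavBytes) throws IOException, UnsupportedAudioFileException {
        try (AudioInputStream source = AudioSystem.getAudioInputStream(new ByteArrayInputStream(wavBytes));
             AudioInputStream converted = AudioSystem.getAudioInputStream(TARGET, source)) {
            // Buffer the samples so the output length is known before writing the header
            ByteArrayOutputStream pcm = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            int n;
            while ((n = converted.read(buf)) != -1) {
                pcm.write(buf, 0, n);
            }
            byte[] samples = pcm.toByteArray();
            AudioInputStream sized = new AudioInputStream(new ByteArrayInputStream(samples),
                    TARGET, samples.length / TARGET.getFrameSize());
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            AudioSystem.write(sized, AudioFileFormat.Type.WAVE, out);
            return out.toByteArray();
        }
    }

    /** Test input: a stereo 16 kHz 16-bit WAV of silence built in memory. */
    static byte[] stereoWav(int frames) throws IOException {
        AudioFormat stereo = new AudioFormat(16000f, 16, 2, true, false);
        byte[] pcm = new byte[frames * stereo.getFrameSize()];
        AudioInputStream in = new AudioInputStream(new ByteArrayInputStream(pcm), stereo, frames);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        AudioSystem.write(in, AudioFileFormat.Type.WAVE, out);
        return out.toByteArray();
    }

    public static void main(String[] args) throws Exception {
        byte[] result = convertWav(stereoWav(16000)); // one second of stereo audio
        AudioFileFormat f = AudioSystem.getAudioFileFormat(new ByteArrayInputStream(result));
        System.out.println(f.getFormat());
        System.out.println(f.getFrameLength());
    }
}
```

The output can be passed straight to `checkVideo`, because it is a complete WAV file and not bare PCM.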

Using a third-party tool

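A more general solution is ffmpeg. The method here invokes it from the command line. The drawbacks are obvious: the code has little control over an external command, the approach depends on the environment and is hard to port, and intermediate files have to be written. The advantage is that audio processing becomes much more flexible.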
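
You can make the command-line approach a little more controllable by building the ffmpeg arguments as a list and passing them to `ProcessBuilder`. No shell then parses a command string, so file names containing spaces need no quoting. The helper below (`toPcmWavArgs`) is hypothetical and does not come from the original. It uses the standard ffmpeg options `-y` (overwrite without prompting), `-i`, `-vn` (drop video), `-ar` (sample rate), `-ac` (channel count), `-acodec pcm_s16le` (16-bit signed little-endian PCM) and `-f wav`. It only builds the list, so it runs without ffmpeg installed.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class FfmpegArgs {

    /** Hypothetical helper: arguments that extract the audio track as 16-bit PCM WAV. */
    static List<String> toPcmWavArgs(String input, int sampleRate, int channels, String output) {
        List<String> args = new ArrayList<>();
        args.add("ffmpeg");
        args.add("-y");                                       // overwrite output without prompting
        args.addAll(Arrays.asList("-i", input));
        args.add("-vn");                                      // drop any video stream
        args.addAll(Arrays.asList("-ar", String.valueOf(sampleRate)));
        args.addAll(Arrays.asList("-ac", String.valueOf(channels)));
        args.addAll(Arrays.asList("-acodec", "pcm_s16le"));  // 16-bit signed little-endian
        args.addAll(Arrays.asList("-f", "wav"));
        args.add(output);
        return args;
    }

    public static void main(String[] args) {
        // Hypothetical paths; the space in the input name needs no quoting
        List<String> cmd = toPcmWavArgs("my upload.m4a", 16000, 1, "/tmp/out.wav");
        System.out.println(cmd);
        // To run it: new ProcessBuilder(cmd).inheritIO().start().waitFor()
    }
}
```

For PCM output, the bit depth comes from the codec (`pcm_s16le`), not from a bitrate option.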

Utility class

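    import lombok.extern.slf4j.Slf4j;

    import java.io.IOException;
    import java.util.Arrays;
    import java.util.List;

    @Slf4j
    public class FfmpegUtil {

        /** Extracts the audio of flvPath into targetFilename; returns true if ffmpeg exits with code 0. */
        public static boolean toWav(String flvPath, int sampleRate, int channel, int bitrate,
                                    String fileType, String targetFilename) {
            // Pass the arguments as a list (no "sh -c"), so paths with spaces or shell characters are safe
            List<String> command = Arrays.asList(
                    "ffmpeg", "-i", flvPath, "-vn",
                    "-ar", String.valueOf(sampleRate),
                    "-ac", String.valueOf(channel),
                    "-ab", bitrate + "k",   // typically has no effect on uncompressed PCM output such as WAV
                    "-f", fileType, targetFilename);
            try {
                Process process = new ProcessBuilder(command)
                        .inheritIO()        // ffmpeg logs to stderr; an unread pipe can block the process
                        .start();
                // start() returns immediately; wait so the output file is complete before it is used
                int exitCode = process.waitFor();
                if (exitCode != 0) {
                    log.error("ffmpeg exited with code {}", exitCode);
                }
                return exitCode == 0;
            } catch (IOException e) {
                log.error("Failed to start ffmpeg", e);
                return false;
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }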

The test class is omitted here.

Summary

Each approach has its pros and cons; choose based on the actual situation.

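Calling the command line can tie you closely to the environment and make the code hard to port. Another option is to have a C++ engineer package the processing as a JNI library.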
