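Next, we match three things: the real-time gaze position, the knowledge points in the speech transcript, and the knowledge-point regions in the video frames.

The first step matches the speech-transcript knowledge points to the frame regions. We already know which knowledge point each of the teacher's sentences corresponds to, so for each time span we look up the region of the PPT slide that carries that knowledge point. This gives the region the student should be looking at. My teammate then handles the visualization (video generation and report generation).

The second step checks whether the gaze position falls inside that region. The proportion of successful matches measures how focused the student was during class.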
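The two steps can be sketched end to end as follows. This is a minimal illustration rather than the project's actual implementation: the function name `focus_ratio`, the `(t_ms, x, y)` gaze tuple layout, and the sample numbers are assumptions, and it presumes gaze coordinates and regions share one pixel coordinate system with times in milliseconds.

```python
def focus_ratio(gaze_samples, targets):
    """Share of scored gaze samples that land in an expected region.

    gaze_samples: iterable of (t_ms, x, y)              (assumed layout)
    targets: list of (start_ms, end_ms, (x1, y1, x2, y2), kp_id)
    """
    hits = 0
    total = 0
    for t_ms, x, y in gaze_samples:
        # Regions the student is expected to look at at this moment
        active = [box for start, end, box, _ in targets if start <= t_ms <= end]
        if not active:
            continue  # no knowledge point is being taught; sample is not scored
        total += 1
        if any(x1 <= x <= x2 and y1 <= y <= y2 for x1, y1, x2, y2 in active):
            hits += 1
    return hits / total if total else 0.0


# Made-up sample data for illustration only
targets = [
    (0, 1000, (0, 0, 100, 100), 'KP1'),
    (1001, 2000, (200, 200, 300, 300), 'KP2'),
]
gaze = [(100, 50, 50), (500, 150, 150), (1500, 250, 250), (3000, 0, 0)]
ratio = focus_ratio(gaze, targets)
print(f"focus ratio: {ratio:.2f}")
```

Samples that fall outside every spoken interval are skipped rather than counted as misses, which is one possible design choice; counting them as misses would lower the score during pauses in the lecture.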

# -*- coding: utf-8 -*-
"""
@Time : 2024/6/22 14:45
@Auth : Zhao Yishuo
@File : pre_match.py
@IDE : PyCharm
"""
import io
import re

import cv2
import pandas as pd
from matplotlib import pyplot as plt


# Parse voice match final data
def parse_voice_match_final(file_path):
    knowledge_points = []
    with open(file_path, 'r', encoding='utf-8') as file:
        for line in file:
            match = re.search(r'range: (\d+)-(\d+); kp_id: (\w+)', line)
            if match:
                start_time = int(match.group(1))
                end_time = int(match.group(2))
                kp_id = match.group(3)
                knowledge_points.append((start_time, end_time, kp_id))
    return knowledge_points


# Parse final_match_test.txt
def parse_final_match_test(file_path):
    ocr_data = []
    with open(file_path, 'r', encoding='utf-8') as file:
        timestamp = None
        for line in file:
            if 'Timestamp' in line:
                timestamp = int(line.split(': ')[1])
            elif 'Knowledge_point_id:' in line:
                match = re.search(r'\((\d+), (\d+), (\d+), (\d+)\): Knowledge_point_id: (\w+)', line)
                if match:
                    x1, y1, x2, y2 = map(int, match.groups()[:4])
                    kp_id = match.group(5)
                    ocr_data.append((timestamp, (x1, y1, x2, y2), kp_id))
    return ocr_data


# Match knowledge points with OCR/detection regions based on timestamps

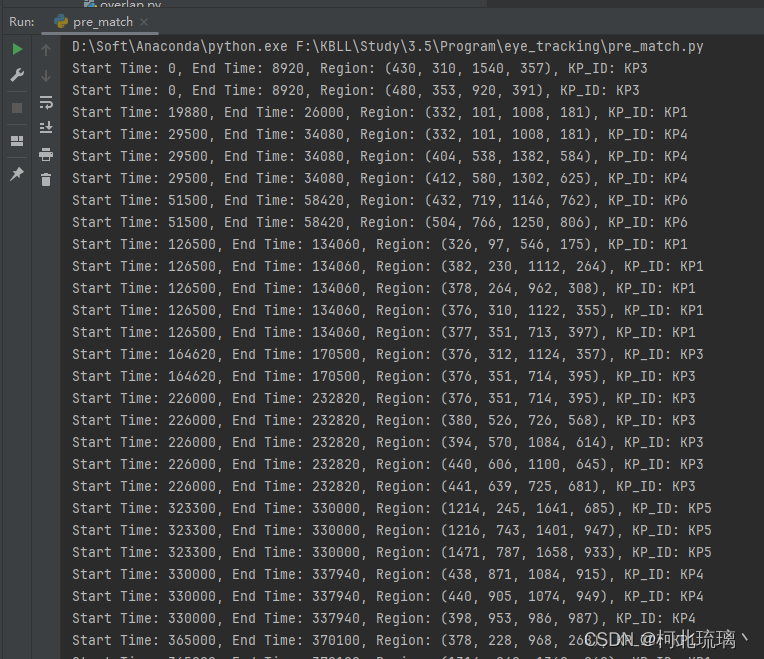

def match_knowledge_points(voice_data, ocr_data):
    matches = []
    for (start_time, end_time, kp_id) in voice_data:
        for (timestamp, region, ocr_kp_id) in ocr_data:
            if kp_id == ocr_kp_id and start_time <= timestamp <= end_time:
                matches.append((start_time, end_time, region, kp_id))
    return matches


# Mark regions on the video
def mark_video(input_video_path, output_video_path, matches):
    cap = cv2.VideoCapture(input_video_path)
    fps = cap.get(cv2.CAP_PROP_FPS)
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    out = cv2.VideoWriter(output_video_path, fourcc, fps, (frame_width, frame_height))

    frame_count = 0
    current_region = None
    current_kp_id = None
    region_end_time = None

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break
        timestamp = int(frame_count / fps * 1000)  # Convert frame count to milliseconds
        for match in matches:
            start_time, end_time, region, kp_id = match
            if start_time <= timestamp <= end_time:
                current_region = region
                current_kp_id = kp_id
                region_end_time = end_time
                break
        if current_region:
            cv2.rectangle(frame, (current_region[0], current_region[1]),
                          (current_region[2], current_region[3]), (0, 255, 0), 2)
            cv2.putText(frame, current_kp_id, (current_region[0], current_region[1] - 10),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)
        out.write(frame)
        frame_count += 1

    cap.release()
    out.release()


if __name__ == "__main__":
    # Paths to the files
    voice_match_final_path = 'voice_match_final.txt'
    final_match_test_path = 'final_match_test(1).txt'
    input_video_path = 'video_data/5.mp4'  # Path to the input video
    output_video_path = 'video_data/5_match.mp4'  # Path to save the output video with annotations

    # Parse the files
    voice_data = parse_voice_match_final(voice_match_final_path)
    ocr_data = parse_final_match_test(final_match_test_path)

    # Match the knowledge points with OCR/detection regions
    matches = match_knowledge_points(voice_data, ocr_data)

    # Print matches for debugging
    for match in matches:
        print(f"Start Time: {match[0]}, End Time: {match[1]}, Region: {match[2]}, KP_ID: {match[3]}")

    # Mark the video with matched regions
    # mark_video(input_video_path, output_video_path, matches)
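The two parsers in pre_match.py only accept lines that fit their regular expressions, so it helps to see what such lines look like. The sketch below runs the same two patterns on sample lines. The sample lines are made up to fit the patterns (they are not taken from the real `voice_match_final.txt` or `final_match_test(1).txt`), and the constant names are hypothetical.

```python
import re

# Same patterns as in parse_voice_match_final and parse_final_match_test
VOICE_PATTERN = re.compile(r'range: (\d+)-(\d+); kp_id: (\w+)')
REGION_PATTERN = re.compile(r'\((\d+), (\d+), (\d+), (\d+)\): Knowledge_point_id: (\w+)')

# Hypothetical sample lines, written only to satisfy the patterns above
sample_voice_line = 'range: 12000-15500; kp_id: KP3'
sample_region_line = '(120, 80, 640, 160): Knowledge_point_id: KP3'

# A spoken interval: start and end time plus the knowledge point ID
voice = VOICE_PATTERN.search(sample_voice_line)
start_time, end_time = int(voice.group(1)), int(voice.group(2))
kp_id = voice.group(3)

# A frame region: rectangle corners plus the knowledge point ID
region = REGION_PATTERN.search(sample_region_line)
box = tuple(map(int, region.groups()[:4]))
region_kp_id = region.group(5)

print(start_time, end_time, kp_id)
print(box, region_kp_id)
```

Because `mark_video` compares these interval bounds with a frame timestamp in milliseconds, the `range` values are presumably in milliseconds as well.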

# -*- coding: utf-8 -*-
"""
@Time : 2024/6/16 14:52
@Auth : Zhao Yishuo
@File : match.py
@IDE : PyCharm
"""
import os

import cv2
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
from sklearn.preprocessing import StandardScaler, MinMaxScaler

plt.rcParams['font.sans-serif'] = ['SimHei']

# Manually read and process the eye-tracking text file
eyedata_path = 'eye_output_16.txt'  # Path to the text file
data = []
with open(eyedata_path, 'r') as file:
    for line in file:
        line = line.strip()
        if ':' in line:  # Check that the line contains a colon
            key, value = line.split(':', 1)
            data.append([key.strip(), value.strip()])
        # else:
        #     print(f"Skipping malformed line: {line}")  # Log malformed lines
data = pd.DataFrame(data, columns=['Type', 'Value'])

# Clean the data
timestamps = data['Value'][data['Type'] == 'Timestamp'].astype(float).reset_index(drop=True)
videos = data['Value'][data['Type'] == 'Video'].reset_index(drop=True)
positions = data['Value'][data['Type'] == 'Relative Position'].str.extract(r'\[(.*?)\]')[0]  # Gaze positions
positions = positions.str.split(expand=True).astype(float).reset_index(drop=True)
positions[0] = positions[0].round()
positions[1] = (-positions[1]).round()

# Extract the first and second columns
data = positions.iloc[:, [0, 1]]

# Make sure the data is numeric
data = data.apply(pd.to_numeric, errors='coerce')
# print(type(data))
x_values = data[0].tolist()
y_values = data[1].tolist()
eye_pos = np.vstack([x_values, y_values]).T  # Convert the DataFrame columns to a NumPy array
eye_timestamps = np.array(timestamps.tolist())
# np.save('eye_positions.npy', eye_pos)
# np.save('eye_timestamps.npy', eye_timestamps)

# Load previously saved arrays (this overrides the values computed above)
eye_pos = np.load('eye_positions.npy')
eye_timestamps = np.load('eye_timestamps.npy')
# print(eye_pos,eye_timestamps)

text_path = 'final_match_test.txt'

import re


# Function to parse the text file and extract data
def parse_text_file(file_path):
    with open(file_path, 'r', encoding='utf-8') as file:
        content = file.read()

    # Regular expressions to match timestamps, OCR, and detection positions
    timestamp_pattern = re.compile(r'Timestamp: (\d+)')
    ocr_pattern = re.compile(r'OCR \d+: \((\d+), (\d+), (\d+), (\d+)\) \(Knowledge_point_id: KP\d+\) (.+)')
    detection_pattern = re.compile(r'Detection \d+ \(Knowledge_point_id: (KP\d+(?:, KP\d+)*)\): \((\d+), (\d+), (\d+), (\d+)\)')

    # Lists to store parsed data
    parsed_data = []

    # Current timestamp
    current_timestamp = None

    # Split content by lines
    lines = content.split('\n')
    for line in lines:
        # Check for a timestamp
        timestamp_match = timestamp_pattern.match(line)
        if timestamp_match:
            current_timestamp = int(timestamp_match.group(1))

        # Check for OCR match
        ocr_match = ocr_pattern.match(line)
        if ocr_match:
            x1, y1, x2, y2, ocr_text = ocr_match.groups()
            parsed_data.append((current_timestamp, ocr_text, (int(x1), int(y1), int(x2), int(y2))))

        # Check for detection match
        detection_match = detection_pattern.match(line)
        if detection_match:
            knowledge_points, x1, y1, x2, y2 = detection_match.groups()
            parsed_data.append((current_timestamp, f'Detection with {knowledge_points}', (int(x1), int(y1), int(x2), int(y2))))

    return parsed_data


# Parse the file and print the extracted data

parsed_data = parse_text_file(text_path)
text_timestamps = []
text_pos = []
for entry in parsed_data:
    # print(entry)
    text_timestamps.append(np.float32(entry[0]) / 1000)  # Milliseconds to seconds
    text_pos.append(np.array(entry[-1], dtype=np.float32))
text_timestamps = np.array(text_timestamps)
text_pos = np.array(text_pos)


def check_gaze_in_regions(gaze_timestamps, gaze_positions, region_timestamps, region_boxes):
    """Return (timestamp, gaze_position) for every gaze sample that falls inside a region.

    Each gaze sample is assigned to the latest region timestamp at or before it
    and tested against every rectangle recorded for that timestamp.
    Gaze and region timestamps are assumed to share the same unit and origin.
    """
    results = []
    region_times = np.unique(region_timestamps)  # Distinct timestamps, ascending
    for gaze_idx, gaze_time in enumerate(gaze_timestamps):
        # Find the region timestamp range that contains this gaze sample
        frame_idx = np.searchsorted(region_times, gaze_time, side='right') - 1
        if frame_idx < 0:
            continue  # Gaze sample precedes the first region timestamp
        timestamp = region_times[frame_idx]
        gaze_x, gaze_y = gaze_positions[gaze_idx]
        # Check if the gaze point is within any rectangle for this timestamp
        for x1, y1, x2, y2 in region_boxes[region_timestamps == timestamp]:
            if x1 <= gaze_x <= x2 and y1 <= gaze_y <= y2:
                results.append((float(timestamp), gaze_positions[gaze_idx]))
                break
    return results


results = check_gaze_in_regions(eye_timestamps, eye_pos, text_timestamps, text_pos)

# Print or process results
for result in results:
    print(result)
