1 The ffmpeg command line

Cutting a segment out of a video file can usually be done with a single ffmpeg command, for example:

    ffmpeg -ss 0.5 -t 3 -i a.mp4 -vcodec copy b.mp4

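Here -ss is placed before -i, which makes it an input option: ffmpeg seeks in the input file itself instead of reading and discarding everything before 0.5 s. For MP4, the seek looks up the index tables in the moov box and lands on the keyframe at or before 0.5 s (this description may not be precise). Accurate seeking (-accurate_seek, on by default) only trims the frames between that keyframe and 0.5 s when the stream is transcoded; with -vcodec copy the video starts at the keyframe.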

How does ffmpeg actually perform the cut for this command?

1.1 Command-line entry point

Source file:

fftools/ffmpeg.c

int main(int argc, char **argv)

{int i, ret;BenchmarkTimeStamps ti;init_dynload();register_exit(ffmpeg_cleanup);setvbuf(stderr,NULL,_IONBF,0); /* win32 runtime needs this */av_log_set_flags(AV_LOG_SKIP_REPEATED);parse_loglevel(argc, argv, options);if(argc>1 && !strcmp(argv[1], "-d")){run_as_daemon=1;av_log_set_callback(log_callback_null);argc--;argv++;}#if CONFIG_AVDEVICEavdevice_register_all();

#endifavformat_network_init();show_banner(argc, argv, options);/* parse options and open all input/output files */ret = ffmpeg_parse_options(argc, argv);if (ret < 0)exit_program(1);if (nb_output_files <= 0 && nb_input_files == 0) {show_usage();av_log(NULL, AV_LOG_WARNING, "Use -h to get full help or, even better, run 'man %s'\n", program_name);exit_program(1);}/* file converter / grab */if (nb_output_files <= 0) {av_log(NULL, AV_LOG_FATAL, "At least one output file must be specified\n");exit_program(1);}for (i = 0; i < nb_output_files; i++) {if (strcmp(output_files[i]->ctx->oformat->name, "rtp"))want_sdp = 0;}current_time = ti = get_benchmark_time_stamps();if (transcode() < 0)exit_program(1);if (do_benchmark) {int64_t utime, stime, rtime;current_time = get_benchmark_time_stamps();utime = current_time.user_usec - ti.user_usec;stime = current_time.sys_usec - ti.sys_usec;rtime = current_time.real_usec - ti.real_usec;av_log(NULL, AV_LOG_INFO,"bench: utime=%0.3fs stime=%0.3fs rtime=%0.3fs\n",utime / 1000000.0, stime / 1000000.0, rtime / 1000000.0);}av_log(NULL, AV_LOG_DEBUG, "%"PRIu64" frames successfully decoded, %"PRIu64" decoding errors\n",decode_error_stat[0], decode_error_stat[1]);if ((decode_error_stat[0] + decode_error_stat[1]) * max_error_rate < decode_error_stat[1])exit_program(69);exit_program(received_nb_signals ? 255 : main_return_code);return main_return_code;

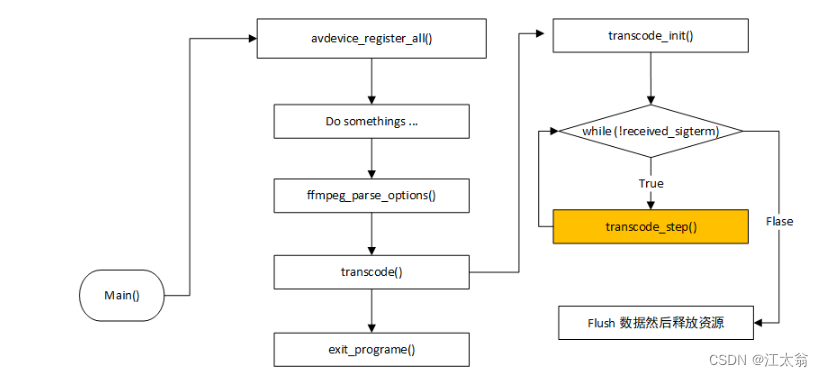

}1.2 执行流程

代码流程比较清晰 主要流程如下:

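1.3 Input context setup

ffmpeg stores the parsed command-line arguments, both input and output options, in an OptionParseContext, and then opens each input file.

Each input file's option group is parsed into an OptionsContext and passed to open_input_file, which determines the seek point and then calls avformat_seek_file: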

    static int open_input_file(OptionsContext *o, const char *filename)
    {
        InputFile *f;
        AVFormatContext *ic;
        AVInputFormat *file_iformat = NULL;
        int err, i, ret;
        int64_t timestamp;
        AVDictionary *unused_opts = NULL;
        AVDictionaryEntry *e = NULL;
        char *   video_codec_name = NULL;
        char *   audio_codec_name = NULL;
        char *subtitle_codec_name = NULL;
        char *    data_codec_name = NULL;
        int scan_all_pmts_set = 0;

        if (o->stop_time != INT64_MAX && o->recording_time != INT64_MAX) {
            o->stop_time = INT64_MAX;
            av_log(NULL, AV_LOG_WARNING, "-t and -to cannot be used together; using -t.\n");
        }

        if (o->stop_time != INT64_MAX && o->recording_time == INT64_MAX) {
            int64_t start_time = o->start_time == AV_NOPTS_VALUE ? 0 : o->start_time;
            if (o->stop_time <= start_time) {
                av_log(NULL, AV_LOG_ERROR, "-to value smaller than -ss; aborting.\n");
                exit_program(1);
            } else {
                o->recording_time = o->stop_time - start_time;
            }
        }

        if (o->format) {
            if (!(file_iformat = av_find_input_format(o->format))) {
                av_log(NULL, AV_LOG_FATAL, "Unknown input format: '%s'\n", o->format);
                exit_program(1);
            }
        }

        if (!strcmp(filename, "-"))
            filename = "pipe:";

        stdin_interaction &= strncmp(filename, "pipe:", 5) &&
                             strcmp(filename, "/dev/stdin");

        /* get default parameters from command line */
        ic = avformat_alloc_context();
        if (!ic) {
            print_error(filename, AVERROR(ENOMEM));
            exit_program(1);
        }
        if (o->nb_audio_sample_rate) {
            av_dict_set_int(&o->g->format_opts, "sample_rate", o->audio_sample_rate[o->nb_audio_sample_rate - 1].u.i, 0);
        }
        if (o->nb_audio_channels) {
            /* because we set audio_channels based on both the "ac" and
             * "channel_layout" options, we need to check that the specified
             * demuxer actually has the "channels" option before setting it */
            if (file_iformat && file_iformat->priv_class &&
                av_opt_find(&file_iformat->priv_class, "channels", NULL, 0,
                            AV_OPT_SEARCH_FAKE_OBJ)) {
                av_dict_set_int(&o->g->format_opts, "channels", o->audio_channels[o->nb_audio_channels - 1].u.i, 0);
            }
        }
        if (o->nb_frame_rates) {
            /* set the format-level framerate option;
             * this is important for video grabbers, e.g. x11 */
            if (file_iformat && file_iformat->priv_class &&
                av_opt_find(&file_iformat->priv_class, "framerate", NULL, 0,
                            AV_OPT_SEARCH_FAKE_OBJ)) {
                av_dict_set(&o->g->format_opts, "framerate",
                            o->frame_rates[o->nb_frame_rates - 1].u.str, 0);
            }
        }
        if (o->nb_frame_sizes) {
            av_dict_set(&o->g->format_opts, "video_size", o->frame_sizes[o->nb_frame_sizes - 1].u.str, 0);
        }
        if (o->nb_frame_pix_fmts)
            av_dict_set(&o->g->format_opts, "pixel_format", o->frame_pix_fmts[o->nb_frame_pix_fmts - 1].u.str, 0);

        MATCH_PER_TYPE_OPT(codec_names, str,    video_codec_name, ic, "v");
        MATCH_PER_TYPE_OPT(codec_names, str,    audio_codec_name, ic, "a");
        MATCH_PER_TYPE_OPT(codec_names, str, subtitle_codec_name, ic, "s");
        MATCH_PER_TYPE_OPT(codec_names, str,     data_codec_name, ic, "d");

        if (video_codec_name)
            ic->video_codec    = find_codec_or_die(video_codec_name   , AVMEDIA_TYPE_VIDEO   , 0);
        if (audio_codec_name)
            ic->audio_codec    = find_codec_or_die(audio_codec_name   , AVMEDIA_TYPE_AUDIO   , 0);
        if (subtitle_codec_name)
            ic->subtitle_codec = find_codec_or_die(subtitle_codec_name, AVMEDIA_TYPE_SUBTITLE, 0);
        if (data_codec_name)
            ic->data_codec     = find_codec_or_die(data_codec_name    , AVMEDIA_TYPE_DATA    , 0);

        ic->video_codec_id    = video_codec_name    ? ic->video_codec->id    : AV_CODEC_ID_NONE;
        ic->audio_codec_id    = audio_codec_name    ? ic->audio_codec->id    : AV_CODEC_ID_NONE;
        ic->subtitle_codec_id = subtitle_codec_name ? ic->subtitle_codec->id : AV_CODEC_ID_NONE;
        ic->data_codec_id     = data_codec_name     ? ic->data_codec->id     : AV_CODEC_ID_NONE;

        ic->flags |= AVFMT_FLAG_NONBLOCK;
        if (o->bitexact)
            ic->flags |= AVFMT_FLAG_BITEXACT;
        ic->interrupt_callback = int_cb;

        if (!av_dict_get(o->g->format_opts, "scan_all_pmts", NULL, AV_DICT_MATCH_CASE)) {
            av_dict_set(&o->g->format_opts, "scan_all_pmts", "1", AV_DICT_DONT_OVERWRITE);
            scan_all_pmts_set = 1;
        }
        /* open the input file with generic avformat function */
        err = avformat_open_input(&ic, filename, file_iformat, &o->g->format_opts);
        if (err < 0) {
            print_error(filename, err);
            if (err == AVERROR_PROTOCOL_NOT_FOUND)
                av_log(NULL, AV_LOG_ERROR, "Did you mean file:%s?\n", filename);
            exit_program(1);
        }
        if (scan_all_pmts_set)
            av_dict_set(&o->g->format_opts, "scan_all_pmts", NULL, AV_DICT_MATCH_CASE);
        remove_avoptions(&o->g->format_opts, o->g->codec_opts);
        assert_avoptions(o->g->format_opts);

        /* apply forced codec ids */
        for (i = 0; i < ic->nb_streams; i++)
            choose_decoder(o, ic, ic->streams[i]);

        if (find_stream_info) {
            AVDictionary **opts = setup_find_stream_info_opts(ic, o->g->codec_opts);
            int orig_nb_streams = ic->nb_streams;

            /* If not enough info to get the stream parameters, we decode the
               first frames to get it. (used in mpeg case for example) */
            ret = avformat_find_stream_info(ic, opts);

            for (i = 0; i < orig_nb_streams; i++)
                av_dict_free(&opts[i]);
            av_freep(&opts);

            if (ret < 0) {
                av_log(NULL, AV_LOG_FATAL, "%s: could not find codec parameters\n", filename);
                if (ic->nb_streams == 0) {
                    avformat_close_input(&ic);
                    exit_program(1);
                }
            }
        }

        if (o->start_time != AV_NOPTS_VALUE && o->start_time_eof != AV_NOPTS_VALUE) {
            av_log(NULL, AV_LOG_WARNING, "Cannot use -ss and -sseof both, using -ss for %s\n", filename);
            o->start_time_eof = AV_NOPTS_VALUE;
        }

        if (o->start_time_eof != AV_NOPTS_VALUE) {
            if (o->start_time_eof >= 0) {
                av_log(NULL, AV_LOG_ERROR, "-sseof value must be negative; aborting\n");
                exit_program(1);
            }
            if (ic->duration > 0) {
                o->start_time = o->start_time_eof + ic->duration;
                if (o->start_time < 0) {
                    av_log(NULL, AV_LOG_WARNING, "-sseof value seeks to before start of file %s; ignored\n", filename);
                    o->start_time = AV_NOPTS_VALUE;
                }
            } else
                av_log(NULL, AV_LOG_WARNING, "Cannot use -sseof, duration of %s not known\n", filename);
        }
        timestamp = (o->start_time == AV_NOPTS_VALUE) ? 0 : o->start_time;
        /* add the stream start time */
        if (!o->seek_timestamp && ic->start_time != AV_NOPTS_VALUE)
            timestamp += ic->start_time;

        /* if seeking requested, we execute it */
        if (o->start_time != AV_NOPTS_VALUE) {
            int64_t seek_timestamp = timestamp;

            if (!(ic->iformat->flags & AVFMT_SEEK_TO_PTS)) {
                int dts_heuristic = 0;
                for (i = 0; i < ic->nb_streams; i++) {
                    const AVCodecParameters *par = ic->streams[i]->codecpar;
                    if (par->video_delay) {
                        dts_heuristic = 1;
                        break;
                    }
                }
                if (dts_heuristic) {
                    seek_timestamp -= 3*AV_TIME_BASE / 23;
                }
            }
            ret = avformat_seek_file(ic, -1, INT64_MIN, seek_timestamp, seek_timestamp, 0);
            if (ret < 0) {
                av_log(NULL, AV_LOG_WARNING, "%s: could not seek to position %0.3f\n",
                       filename, (double)timestamp / AV_TIME_BASE);
            }
        }

        /* update the current parameters so that they match the one of the input stream */
        add_input_streams(o, ic);

        /* dump the file content */
        av_dump_format(ic, nb_input_files, filename, 0);

        GROW_ARRAY(input_files, nb_input_files);
        f = av_mallocz(sizeof(*f));
        if (!f)
            exit_program(1);
        input_files[nb_input_files - 1] = f;

        f->ctx             = ic;
        f->ist_index       = nb_input_streams - ic->nb_streams;
        f->start_time      = o->start_time;
        f->recording_time  = o->recording_time;
        f->input_ts_offset = o->input_ts_offset;
        f->ts_offset       = o->input_ts_offset - (copy_ts ? (start_at_zero && ic->start_time != AV_NOPTS_VALUE ? ic->start_time : 0) : timestamp);
        f->nb_streams      = ic->nb_streams;
        f->rate_emu        = o->rate_emu;
        f->accurate_seek   = o->accurate_seek;
        f->loop            = o->loop;
        f->duration        = 0;
        f->time_base       = (AVRational){ 1, 1 };
    #if HAVE_THREADS
        f->thread_queue_size = o->thread_queue_size > 0 ? o->thread_queue_size : 8;
    #endif

        /* check if all codec options have been used */
        unused_opts = strip_specifiers(o->g->codec_opts);
        for (i = f->ist_index; i < nb_input_streams; i++) {
            e = NULL;
            while ((e = av_dict_get(input_streams[i]->decoder_opts, "", e,
                                    AV_DICT_IGNORE_SUFFIX)))
                av_dict_set(&unused_opts, e->key, NULL, 0);
        }

        e = NULL;
        while ((e = av_dict_get(unused_opts, "", e, AV_DICT_IGNORE_SUFFIX))) {
            const AVClass *class = avcodec_get_class();
            const AVOption *option = av_opt_find(&class, e->key, NULL, 0,
                                                 AV_OPT_SEARCH_CHILDREN | AV_OPT_SEARCH_FAKE_OBJ);
            const AVClass *fclass = avformat_get_class();
            const AVOption *foption = av_opt_find(&fclass, e->key, NULL, 0,
                                                  AV_OPT_SEARCH_CHILDREN | AV_OPT_SEARCH_FAKE_OBJ);
            if (!option || foption)
                continue;

            if (!(option->flags & AV_OPT_FLAG_DECODING_PARAM)) {
                av_log(NULL, AV_LOG_ERROR, "Codec AVOption %s (%s) specified for "
                       "input file #%d (%s) is not a decoding option.\n", e->key,
                       option->help ? option->help : "", nb_input_files - 1,
                       filename);
                exit_program(1);
            }

            av_log(NULL, AV_LOG_WARNING, "Codec AVOption %s (%s) specified for "
                   "input file #%d (%s) has not been used for any stream. The most "
                   "likely reason is either wrong type (e.g. a video option with "
                   "no video streams) or that it is a private option of some decoder "
                   "which was not actually used for any stream.\n", e->key,
                   option->help ? option->help : "", nb_input_files - 1, filename);
        }
        av_dict_free(&unused_opts);

        for (i = 0; i < o->nb_dump_attachment; i++) {
            int j;

            for (j = 0; j < ic->nb_streams; j++) {
                AVStream *st = ic->streams[j];

                if (check_stream_specifier(ic, st, o->dump_attachment[i].specifier) == 1)
                    dump_attachment(st, o->dump_attachment[i].u.str);
            }
        }

        input_stream_potentially_available = 1;

        return 0;
    }
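The timestamp arithmetic in open_input_file can be isolated into a small standalone model. The sketch below is a simplification under stated assumptions, not ffmpeg code: the helper names (seconds_to_us, resolve_recording_time, input_seek_timestamp) are hypothetical, times are plain int64_t values in AV_TIME_BASE units (microseconds), INT64_MAX means "option not given" as in the original, and AV_NOPTS_VALUE is redefined locally with the same value as libavutil's. It reproduces the -t/-to resolution and the timestamp handed to avformat_seek_file, including the 3*AV_TIME_BASE/23 backoff applied when the demuxer lacks AVFMT_SEEK_TO_PTS and some stream has video_delay.

```c
#include <assert.h>
#include <stdint.h>

#define AV_TIME_BASE   1000000
#define AV_NOPTS_VALUE INT64_MIN   /* same value as libavutil's AV_NOPTS_VALUE */

/* Hypothetical helper: decimal seconds -> AV_TIME_BASE units, rounded to nearest.
 * ffmpeg itself parses -ss/-t with av_parse_time(), which also accepts HH:MM:SS. */
static int64_t seconds_to_us(double seconds)
{
    return (int64_t)(seconds * AV_TIME_BASE + (seconds >= 0 ? 0.5 : -0.5));
}

/* Mirrors the -t / -to handling at the top of open_input_file().
 * INT64_MAX means "option not given". Returns -1 where ffmpeg would abort. */
static int resolve_recording_time(int64_t start_time, int64_t stop_time,
                                  int64_t *recording_time)
{
    if (stop_time != INT64_MAX && *recording_time != INT64_MAX)
        stop_time = INT64_MAX;                  /* -t wins over -to */
    if (stop_time != INT64_MAX && *recording_time == INT64_MAX) {
        int64_t start = start_time == AV_NOPTS_VALUE ? 0 : start_time;
        if (stop_time <= start)
            return -1;                          /* "-to value smaller than -ss" */
        *recording_time = stop_time - start;
    }
    return 0;
}

/* Mirrors how open_input_file() computes the ts passed to avformat_seek_file()
 * when -ss was given. */
static int64_t input_seek_timestamp(int64_t start_time, int64_t file_start_time,
                                    int seek_timestamp_opt, int demuxer_seeks_to_pts,
                                    int any_stream_has_video_delay)
{
    int64_t ts;

    assert(start_time != AV_NOPTS_VALUE);       /* only reached when -ss is set */
    ts = start_time;
    if (!seek_timestamp_opt && file_start_time != AV_NOPTS_VALUE)
        ts += file_start_time;                  /* -ss is relative to the file start */
    if (!demuxer_seeks_to_pts && any_stream_has_video_delay)
        ts -= 3 * AV_TIME_BASE / 23;            /* the dts_heuristic backoff */
    return ts;
}
```

For the MP4 command from section 1 (-ss 0.5 -t 3), the mov demuxer sets AVFMT_SEEK_TO_PTS, so no backoff applies and, assuming the file's start_time is 0, avformat_seek_file receives 500000.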

1.4 avformat_seek_file

    int avformat_seek_file(AVFormatContext *s, int stream_index, int64_t min_ts,
                           int64_t ts, int64_t max_ts, int flags)
    {
        if (min_ts > ts || max_ts < ts)
            return -1;
        if (stream_index < -1 || stream_index >= (int)s->nb_streams)
            return AVERROR(EINVAL);

        if (s->seek2any > 0)
            flags |= AVSEEK_FLAG_ANY;
        flags &= ~AVSEEK_FLAG_BACKWARD;

        if (s->iformat->read_seek2) {
            int ret;
            ff_read_frame_flush(s);

            if (stream_index == -1 && s->nb_streams == 1) {
                AVRational time_base = s->streams[0]->time_base;
                ts = av_rescale_q(ts, AV_TIME_BASE_Q, time_base);
                min_ts = av_rescale_rnd(min_ts, time_base.den,
                                        time_base.num * (int64_t)AV_TIME_BASE,
                                        AV_ROUND_UP   | AV_ROUND_PASS_MINMAX);
                max_ts = av_rescale_rnd(max_ts, time_base.den,
                                        time_base.num * (int64_t)AV_TIME_BASE,
                                        AV_ROUND_DOWN | AV_ROUND_PASS_MINMAX);
                stream_index = 0;
            }

            ret = s->iformat->read_seek2(s, stream_index, min_ts,
                                         ts, max_ts, flags);

            if (ret >= 0)
                ret = avformat_queue_attached_pictures(s);
            return ret;
        }

        if (s->iformat->read_timestamp) {
            // try to seek via read_timestamp()
        }

        // Fall back on old API if new is not implemented but old is.
        // Note the old API has somewhat different semantics.
        if (s->iformat->read_seek || 1) {
            int dir = (ts - (uint64_t)min_ts > (uint64_t)max_ts - ts ? AVSEEK_FLAG_BACKWARD : 0);
            int ret = av_seek_frame(s, stream_index, ts, flags | dir);
            if (ret < 0 && ts != min_ts && max_ts != ts) {
                ret = av_seek_frame(s, stream_index, dir ? max_ts : min_ts, flags | dir);
                if (ret >= 0)
                    ret = av_seek_frame(s, stream_index, ts, flags | (dir ^ AVSEEK_FLAG_BACKWARD));
            }
            return ret;
        }

        // try some generic seek like seek_frame_generic() but with new ts semantics
        return -1; //unreachable
    }

Most demuxers in ffmpeg implement the older read_seek callback rather than read_seek2, so avformat_seek_file normally falls back to av_seek_frame (the condition "s->iformat->read_seek || 1" is always true, so this fallback runs whenever read_seek2 is absent). The input file here is MP4, which is handled by the mov demuxer, so the call chain is:

av_seek_frame -> seek_frame_internal -> read_seek -> mov_read_seek

    AVInputFormat ff_mov_demuxer = {
        .name           = "mov,mp4,m4a,3gp,3g2,mj2",
        .long_name      = NULL_IF_CONFIG_SMALL("QuickTime / MOV"),
        .priv_class     = &mov_class,
        .priv_data_size = sizeof(MOVContext),
        .extensions     = "mov,mp4,m4a,3gp,3g2,mj2",
        .read_probe     = mov_probe,
        .read_header    = mov_read_header,
        .read_packet    = mov_read_packet,
        .read_close     = mov_read_close,
        .read_seek      = mov_read_seek,
        .flags          = AVFMT_NO_BYTE_SEEK | AVFMT_SEEK_TO_PTS,
    };
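Two small pieces of arithmetic happen before mov_read_seek sees the timestamp: avformat_seek_file picks a direction from (min_ts, ts, max_ts), and seek_frame_internal, when stream_index is -1, picks a default stream and rescales the AV_TIME_BASE timestamp into that stream's time_base with av_rescale (round to nearest). The sketch below reimplements both in plain C. The function names are hypothetical, and the rescale helper only handles non-negative values small enough not to overflow, whereas av_rescale handles the full int64_t range. The time bases used in the checks (1/12800, 1/90000, 1/30) are assumed example values.

```c
#include <assert.h>
#include <stdint.h>

#define AV_TIME_BASE         1000000
#define AVSEEK_FLAG_BACKWARD 1          /* same value as in libavformat/avformat.h */

/* Same expression as the fallback branch of avformat_seek_file(): seek backward
 * when ts is farther from min_ts than from max_ts (unsigned math avoids overflow). */
static int seek_direction(int64_t min_ts, int64_t ts, int64_t max_ts)
{
    return ts - (uint64_t)min_ts > (uint64_t)max_ts - ts ? AVSEEK_FLAG_BACKWARD : 0;
}

/* Round-to-nearest ts * tb_den / (AV_TIME_BASE * tb_num), the value av_rescale()
 * produces in seek_frame_internal(); assumes ts >= 0 and no overflow. */
static int64_t us_to_stream_tb(int64_t ts, int tb_num, int tb_den)
{
    int64_t c = (int64_t)AV_TIME_BASE * tb_num;

    assert(ts >= 0 && tb_num > 0 && tb_den > 0);
    return (ts * tb_den + c / 2) / c;
}
```

With the call made by open_input_file, avformat_seek_file(ic, -1, INT64_MIN, seek_timestamp, seek_timestamp, 0), the direction is always AVSEEK_FLAG_BACKWARD. That is why the stream-copied output starts at a keyframe at or before the -ss time rather than after it.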

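The function that actually determines the seek position is ff_index_search_timestamp: mov_read_seek searches the sample index built from the moov box through av_index_search_timestamp, which calls ff_index_search_timestamp. The search rule depends on flags.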

    int ff_index_search_timestamp(const AVIndexEntry *entries, int nb_entries,
                                  int64_t wanted_timestamp, int flags)
    {
        int a, b, m;
        int64_t timestamp;

        a = -1;
        b = nb_entries;

        // Optimize appending index entries at the end.
        if (b && entries[b - 1].timestamp < wanted_timestamp)
            a = b - 1;

        while (b - a > 1) {
            m = (a + b) >> 1;

            // Search for the next non-discarded packet.
            while ((entries[m].flags & AVINDEX_DISCARD_FRAME) && m < b && m < nb_entries - 1) {
                m++;
                if (m == b && entries[m].timestamp >= wanted_timestamp) {
                    m = b - 1;
                    break;
                }
            }

            timestamp = entries[m].timestamp;
            if (timestamp >= wanted_timestamp)
                b = m;
            if (timestamp <= wanted_timestamp)
                a = m;
        }
        m = (flags & AVSEEK_FLAG_BACKWARD) ? a : b;

        if (!(flags & AVSEEK_FLAG_ANY))
            while (m >= 0 && m < nb_entries &&
                   !(entries[m].flags & AVINDEX_KEYFRAME))
                m += (flags & AVSEEK_FLAG_BACKWARD) ? -1 : 1;

        if (m == nb_entries)
            return -1;
        return m;
    }

The flags (from libavformat/avformat.h):

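    #define AVSEEK_FLAG_BACKWARD 1 ///< seek backward
    #define AVSEEK_FLAG_BYTE     2 ///< seeking based on position in bytes
    #define AVSEEK_FLAG_ANY      4 ///< seek to any frame, even non-keyframes
    #define AVSEEK_FLAG_FRAME    8 ///< seeking based on frame number

AVSEEK_FLAG_BACKWARD seeks backward: ff_index_search_timestamp takes the last index entry whose timestamp is <= the target instead of the first entry whose timestamp is >= the target. AVSEEK_FLAG_BYTE treats the timestamp as a byte offset and jumps to that position in the file. AVSEEK_FLAG_ANY allows landing on any frame, so the search does not move on to a neighboring keyframe; without it, the result is moved in the search direction until a keyframe is found. AVSEEK_FLAG_FRAME interprets the timestamp as a frame number.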
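The search rule can be tried out on a toy index. The sketch below is a simplified copy of the logic in ff_index_search_timestamp: it drops the AVINDEX_DISCARD_FRAME skipping and the append optimization, and it uses a hypothetical IndexEntry struct holding only timestamp and flags (the real AVIndexEntry also stores pos, size and min_distance). The flag values match avformat.h.

```c
#include <assert.h>
#include <stdint.h>

#define AVSEEK_FLAG_BACKWARD 1
#define AVSEEK_FLAG_ANY      4
#define AVINDEX_KEYFRAME     0x0001

/* Hypothetical, trimmed-down index entry. */
typedef struct {
    int64_t timestamp;
    int     flags;
} IndexEntry;

/* Simplified model of ff_index_search_timestamp(); entries must be sorted. */
static int index_search(const IndexEntry *e, int n, int64_t wanted, int flags)
{
    int a = -1, b = n, m;

    assert(n >= 0);
    /* Binary search: afterwards e[a] is the last entry <= wanted
     * and e[b] is the first entry >= wanted. */
    while (b - a > 1) {
        m = (a + b) >> 1;
        if (e[m].timestamp >= wanted)
            b = m;
        if (e[m].timestamp <= wanted)
            a = m;
    }
    m = (flags & AVSEEK_FLAG_BACKWARD) ? a : b;

    /* Without ANY, keep walking in the search direction to a keyframe. */
    if (!(flags & AVSEEK_FLAG_ANY))
        while (m >= 0 && m < n && !(e[m].flags & AVINDEX_KEYFRAME))
            m += (flags & AVSEEK_FLAG_BACKWARD) ? -1 : 1;

    return m == n ? -1 : m;
}
```

With keyframes at 0, 3000 and 6000 and a target of 4500, BACKWARD yields the keyframe at 3000, a forward search yields 6000, and BACKWARD | ANY yields the non-keyframe at 4000.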

2 TS

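TS files can be analyzed the same way. In libavformat/mpegts.c the mpegts demuxer does not define read_seek; it provides read_timestamp instead: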

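    AVInputFormat ff_mpegts_demuxer = {
        .name           = "mpegts",
        .long_name      = NULL_IF_CONFIG_SMALL("MPEG-TS (MPEG-2 Transport Stream)"),
        .priv_data_size = sizeof(MpegTSContext),
        .read_probe     = mpegts_probe,
        .read_header    = mpegts_read_header,
        .read_packet    = mpegts_read_packet,
        .read_close     = mpegts_read_close,
        .read_timestamp = mpegts_get_dts,
        .flags          = AVFMT_SHOW_IDS | AVFMT_TS_DISCONT,
        .priv_class     = &mpegts_class,
    };

Because read_seek is missing but read_timestamp is present (and AVFMT_NOBINSEARCH is not set), seek_frame_internal falls through to ff_seek_frame_binary. TS is a streaming format without an index table, so initially there are no index_entries to consult, and ff_gen_search is called to search for the byte position (pos) that corresponds to the target timestamp: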

    int ff_seek_frame_binary(AVFormatContext *s, int stream_index,
                             int64_t target_ts, int flags)
    {
        const AVInputFormat *avif = s->iformat;
        int64_t av_uninit(pos_min), av_uninit(pos_max), pos, pos_limit;
        int64_t ts_min, ts_max, ts;
        int index;
        int64_t ret;
        AVStream *st;

        if (stream_index < 0)
            return -1;

        av_log(s, AV_LOG_TRACE, "read_seek: %d %s\n", stream_index, av_ts2str(target_ts));

        ts_max =
        ts_min = AV_NOPTS_VALUE;
        pos_limit = -1; // GCC falsely says it may be uninitialized.

        st = s->streams[stream_index];
        if (st->index_entries) {
            AVIndexEntry *e;

            /* FIXME: Whole function must be checked for non-keyframe entries in
             * index case, especially read_timestamp(). */
            index = av_index_search_timestamp(st, target_ts,
                                              flags | AVSEEK_FLAG_BACKWARD);
            index = FFMAX(index, 0);
            e     = &st->index_entries[index];

            if (e->timestamp <= target_ts || e->pos == e->min_distance) {
                pos_min = e->pos;
                ts_min  = e->timestamp;
                av_log(s, AV_LOG_TRACE, "using cached pos_min=0x%"PRIx64" dts_min=%s\n",
                       pos_min, av_ts2str(ts_min));
            } else {
                av_assert1(index == 0);
            }

            index = av_index_search_timestamp(st, target_ts,
                                              flags & ~AVSEEK_FLAG_BACKWARD);
            av_assert0(index < st->nb_index_entries);
            if (index >= 0) {
                e = &st->index_entries[index];
                av_assert1(e->timestamp >= target_ts);
                pos_max   = e->pos;
                ts_max    = e->timestamp;
                pos_limit = pos_max - e->min_distance;
                av_log(s, AV_LOG_TRACE, "using cached pos_max=0x%"PRIx64" pos_limit=0x%"PRIx64
                       " dts_max=%s\n", pos_max, pos_limit, av_ts2str(ts_max));
            }
        }

        pos = ff_gen_search(s, stream_index, target_ts, pos_min, pos_max, pos_limit,
                            ts_min, ts_max, flags, &ts, avif->read_timestamp);
        if (pos < 0)
            return -1;

        /* do the seek */
        if ((ret = avio_seek(s->pb, pos, SEEK_SET)) < 0)
            return ret;

        ff_read_frame_flush(s);
        ff_update_cur_dts(s, st, ts);

        return 0;
    }

Note avif->read_timestamp: for mpegts it is mpegts_get_dts. It maps a byte position to a timestamp, not the other way round: starting at *ppos it reads packets until it finds one from the requested stream that carries a dts, stores that packet's position back into *ppos and returns the dts. ff_gen_search calls it repeatedly to home in on the position whose dts matches target_ts. As a side effect, every packet it reads is added to the stream's index with av_add_index_entry, so later seeks can start from the cached index_entries.
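The core idea of searching by byte position with a pos-to-dts callback can be shown with a plain bisection over a synthetic file. This is a deliberately simplified stand-in, not ff_gen_search itself (which also interpolates between known (pos, ts) pairs, steps linearly near the end, and honours AVSEEK_FLAG_BACKWARD). The toy stream, in which packet i sits at byte i*188 and carries dts i*3600, and all names below are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define TS_PACKET_SIZE 188
#define NO_TS INT64_MIN                /* stands in for AV_NOPTS_VALUE */

/* pos -> dts callback in the spirit of read_timestamp(): it may move *pos
 * forward to the packet it actually read. */
typedef int64_t (*read_ts_fn)(void *opaque, int64_t *pos);

/* Hypothetical toy file: packet i starts at byte i*188 and has dts i*dts_step. */
typedef struct {
    int64_t nb_packets;
    int64_t dts_step;
} ToyTs;

static int64_t toy_read_ts(void *opaque, int64_t *pos)
{
    const ToyTs *t = opaque;
    int64_t idx = (*pos + TS_PACKET_SIZE - 1) / TS_PACKET_SIZE; /* next packet start */

    if (idx >= t->nb_packets)
        return NO_TS;
    *pos = idx * TS_PACKET_SIZE;
    return idx * t->dts_step;
}

/* Bisection over packet-aligned positions. Invariant: dts(lo) <= target, and hi
 * is either the end of the file or a position whose dts is > target.
 * Returns the last packet position whose dts <= target. */
static int64_t bisect_pos(void *opaque, read_ts_fn read_ts,
                          int64_t lo, int64_t hi, int64_t target)
{
    assert(lo % TS_PACKET_SIZE == 0 && hi % TS_PACKET_SIZE == 0 && lo < hi);
    while (hi - lo > TS_PACKET_SIZE) {
        /* aligned midpoint strictly between lo and hi */
        int64_t mid = lo + (hi - lo) / TS_PACKET_SIZE / 2 * TS_PACKET_SIZE;
        int64_t dts = read_ts(opaque, &mid);

        if (dts == NO_TS || dts > target)
            hi = mid;
        else
            lo = mid;
    }
    return lo;
}
```

With 100 toy packets and a target of 45000 (0.5 s in the 90 kHz MPEG-TS clock), the search ends on packet 12 (dts 43200) at byte 2256, the last packet that does not pass the target.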

    static int64_t mpegts_get_dts(AVFormatContext *s, int stream_index,
                                  int64_t *ppos, int64_t pos_limit)
    {
        MpegTSContext *ts = s->priv_data;
        int64_t pos;
        int pos47 = ts->pos47_full % ts->raw_packet_size;
        pos = ((*ppos + ts->raw_packet_size - 1 - pos47) / ts->raw_packet_size) * ts->raw_packet_size + pos47;
        ff_read_frame_flush(s);
        if (avio_seek(s->pb, pos, SEEK_SET) < 0)
            return AV_NOPTS_VALUE;
        while (pos < pos_limit) {
            int ret;
            AVPacket pkt;
            av_init_packet(&pkt);
            ret = av_read_frame(s, &pkt);
            if (ret < 0)
                return AV_NOPTS_VALUE;
            if (pkt.dts != AV_NOPTS_VALUE && pkt.pos >= 0) {
                ff_reduce_index(s, pkt.stream_index);
                av_add_index_entry(s->streams[pkt.stream_index], pkt.pos, pkt.dts, 0, 0, AVINDEX_KEYFRAME /* FIXME keyframe? */);
                if (pkt.stream_index == stream_index && pkt.pos >= *ppos) {
                    int64_t dts = pkt.dts;
                    *ppos = pkt.pos;
                    av_packet_unref(&pkt);
                    return dts;
                }
            }
            pos = pkt.pos;
            av_packet_unref(&pkt);
        }

        return AV_NOPTS_VALUE;
    }
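The first lines of mpegts_get_dts round *ppos up to the next packet boundary before reading. pos47_full records the byte offset of a previously seen 0x47 sync byte, so pos47 = pos47_full % raw_packet_size is the phase of the packet grid: 0 for a plain 188-byte TS that starts with a sync byte, and 4 for 192-byte M2TS packets, where a 4-byte prefix precedes each sync byte. The standalone function below copies that arithmetic; its name is hypothetical and it assumes non-negative positions.

```c
#include <assert.h>
#include <stdint.h>

/* Same arithmetic as the start of mpegts_get_dts(): return the first position
 * >= ppos of the form pos47 + k * raw_packet_size. Assumes ppos >= 0. */
static int64_t align_to_packet(int64_t ppos, int64_t pos47_full, int raw_packet_size)
{
    int pos47;

    assert(ppos >= 0 && raw_packet_size > 0);
    pos47 = (int)(pos47_full % raw_packet_size);
    return ((ppos + raw_packet_size - 1 - pos47) / raw_packet_size) * raw_packet_size + pos47;
}
```

For example, with 188-byte packets and pos47 = 0, a probe at byte 200 is moved to 376 (the start of the third packet), while a probe that is already on a boundary stays where it is.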

To be continued.
