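Introduction

Many deep learning methods for low-light image enhancement are based on Retinex theory. However, the Retinex model does not account for the corruptions hidden in dark regions or those introduced by the light-up process. In addition, these methods usually require a tedious multi-stage training pipeline and rely on convolutional neural networks, which are limited in capturing long-range dependencies.

This paper proposes a simple yet principled one-stage Retinex-based framework (ORF). ORF first estimates the illumination information to light up the low-light image and then restores the corruptions to produce the enhanced image. We design an Illumination-Guided Transformer (IGT) that uses illumination representations to direct the modeling of non-local interactions between regions with different lighting conditions. Plugging IGT into ORF yields our algorithm, Retinexformer.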
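To make the light-up step concrete, here is a minimal sketch of the Retinex idea that an image is the element-wise product of reflectance and illumination. It is not Retinexformer's learned estimator: the function name `light_up`, the channel-maximum illumination estimate, and the `gamma` exponent are assumptions chosen for illustration, and the sketch leaves out the corruption-restoration stage entirely.

```python
import numpy as np


def light_up(image: np.ndarray, gamma: float = 0.5, eps: float = 1e-3):
    """Brighten an RGB image in [0, 1] with a hand-crafted Retinex-style rule.

    Assumption: illumination is approximated by the per-pixel channel maximum,
    a classic heuristic rather than the learned estimator used by Retinexformer.
    """
    # Retinex model: image = reflectance * illumination (element-wise).
    illumination = image.max(axis=2, keepdims=True)  # shape (H, W, 1)
    # Dividing by illumination**gamma (0 < gamma < 1) lifts dark pixels while
    # keeping the result within [0, 1]; eps guards against division by zero.
    lit = image / np.maximum(illumination, eps) ** gamma
    return np.clip(lit, 0.0, 1.0), illumination


dark = np.full((4, 4, 3), 0.04)  # a uniformly dark toy image
lit, illum = light_up(dark)
print(lit[0, 0], illum.shape)
```

A rule like this brightens the image but also amplifies whatever noise sits in the dark regions, which is the kind of corruption the restoration stage of ORF is meant to handle.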

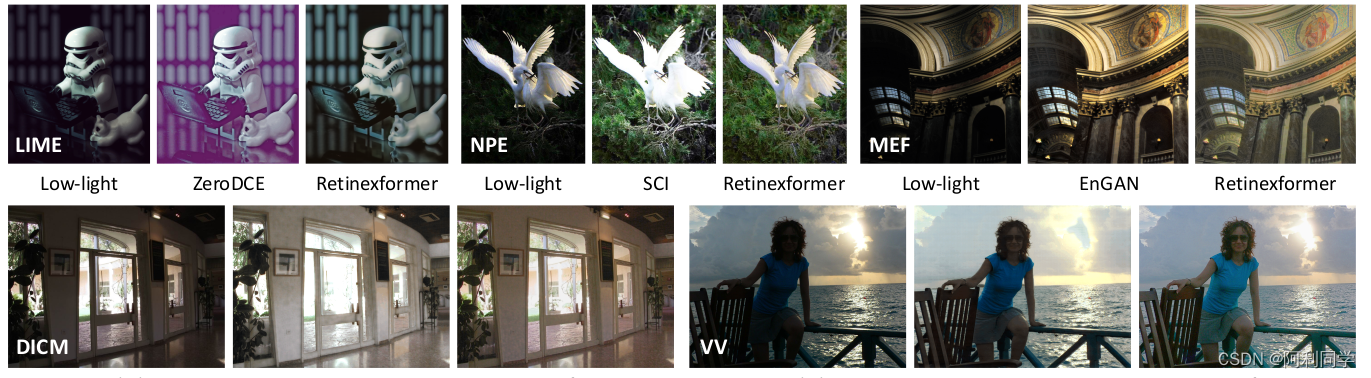

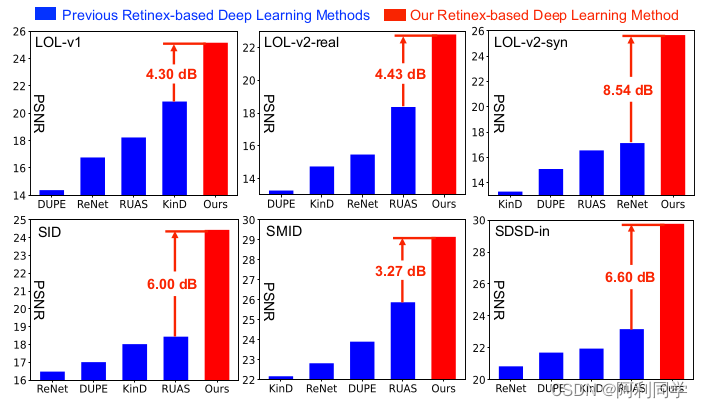

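Comprehensive quantitative and qualitative experiments show that Retinexformer significantly outperforms existing methods on 13 benchmarks. A user study and an application to low-light object detection also reveal the potential practical value of the method.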

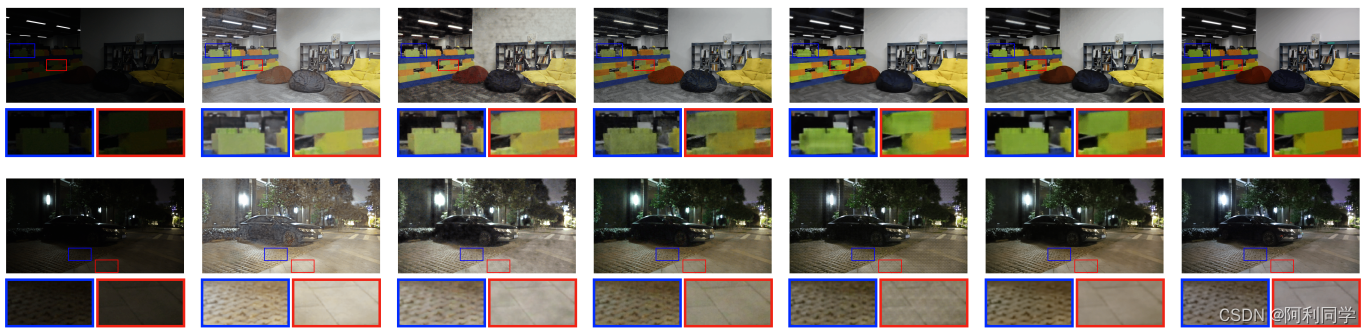

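Results

As the figure above shows, low-light enhancement markedly improves both the recall and the confidence scores of object detection. Image enhancement is therefore important for computer vision tasks such as object detection and object tracking.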

Running the code

1. Create the environment

- Create a Conda environment

conda create -n Retinexformer python=3.7

conda activate Retinexformer

- Install dependencies

conda install pytorch=1.11 torchvision cudatoolkit=11.3 -c pytorch

pip install matplotlib scikit-learn scikit-image opencv-python yacs joblib natsort h5py tqdm tensorboard

pip install einops gdown addict future lmdb numpy pyyaml requests scipy yapf lpips

- Install BasicSR

python setup.py develop --no_cuda_ext

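The steps above create and configure the Retinexformer environment. First, create a Conda environment named Retinexformer and activate it. Next, install the required dependencies with conda and pip, including PyTorch, matplotlib, and scikit-learn. Finally, run setup.py with python to install BasicSR. Once these steps are complete, the Retinexformer environment is ready to use.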
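After installation, it can help to confirm that the packages are importable from the new environment. The sketch below uses only the standard library. The helper name `check_packages` is hypothetical, and the import names listed are assumptions about how each package is imported (for example `cv2` for opencv-python and `sklearn` for scikit-learn), which differ from the pip package names.

```python
import importlib.util


def check_packages(module_names):
    """Return {module_name: True/False} depending on whether it can be found."""
    return {name: importlib.util.find_spec(name) is not None
            for name in module_names}


# Import names for some of the dependencies installed above.
# Assumption: BasicSR is importable as "basicsr" after setup.py develop.
modules = ["torch", "torchvision", "cv2", "skimage", "sklearn",
           "yaml", "einops", "lmdb", "lpips", "basicsr"]

for name, found in check_packages(modules).items():
    print(f"{name:12s} {'ok' if found else 'MISSING'}")
```

Run it with the Retinexformer environment activated; any line marked MISSING points to a package that still needs to be installed.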

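2. Prepare the datasets

Download the following datasets:

LOL-v1 Baidu Disk (code: cyh2), Google Drive

LOL-v2 Baidu Disk (code: cyh2), Google Drive

SID Baidu Disk (code: gplv), Google Drive

SMID Baidu Disk (code: btux), Google Drive

SDSD-indoor Baidu Disk (code: jo1v), Google Drive

SDSD-outdoor Baidu Disk (code: uibk), Google Drive

MIT-Adobe FiveK Baidu Disk (code: cyh2), Google Drive, Official website

Please process the MIT-Adobe FiveK dataset following the sRGB setting.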

Then organize these datasets as follows:

|--data
|   |--LOLv1
|   |   |--Train
|   |   |   |--input
|   |   |   |   |--100.png
|   |   |   |   |--101.png
|   |   |   |   ...
|   |   |   |--target
|   |   |   |   |--100.png
|   |   |   |   |--101.png
|   |   |   |   ...
|   |   |--Test
|   |   |   |--input
|   |   |   |   |--111.png
|   |   |   |   |--146.png
|   |   |   |   ...
|   |   |   |--target
|   |   |   |   |--111.png
|   |   |   |   |--146.png
|   |   |   |   ...
|   |--LOLv2
|   |   |--Real_captured
|   |   |   |--Train
|   |   |   |   |--Low
|   |   |   |   |   |--00001.png
|   |   |   |   |   |--00002.png
|   |   |   |   |   ...
|   |   |   |   |--Normal
|   |   |   |   |   |--00001.png
|   |   |   |   |   |--00002.png
|   |   |   |   |   ...
|   |   |   |--Test
|   |   |   |   |--Low
|   |   |   |   |   |--00690.png
|   |   |   |   |   |--00691.png
|   |   |   |   |   ...
|   |   |   |   |--Normal
|   |   |   |   |   |--00690.png
|   |   |   |   |   |--00691.png
|   |   |   |   |   ...
|   |   |--Synthetic
|   |   |   |--Train
|   |   |   |   |--Low
|   |   |   |   |   |--r000da54ft.png
|   |   |   |   |   |--r02e1abe2t.png
|   |   |   |   |   ...
|   |   |   |   |--Normal
|   |   |   |   |   |--r000da54ft.png
|   |   |   |   |   |--r02e1abe2t.png
|   |   |   |   |   ...
|   |   |   |--Test
|   |   |   |   |--Low
|   |   |   |   |   |--r00816405t.png
|   |   |   |   |   |--r02189767t.png
|   |   |   |   |   ...
|   |   |   |   |--Normal
|   |   |   |   |   |--r00816405t.png
|   |   |   |   |   |--r02189767t.png
|   |   |   |   |   ...
|   |--SDSD
|   |   |--indoor_static_np
|   |   |   |--input
|   |   |   |   |--pair1
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   |--pair2
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   ...
|   |   |   |--GT
|   |   |   |   |--pair1
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   |--pair2
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   ...
|   |   |--outdoor_static_np
|   |   |   |--input
|   |   |   |   |--MVI_0898
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   |--MVI_0918
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   ...
|   |   |   |--GT
|   |   |   |   |--MVI_0898
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   |--MVI_0918
|   |   |   |   |   |--0001.npy
|   |   |   |   |   |--0002.npy
|   |   |   |   |   ...
|   |   |   |   ...
|   |--SID
|   |   |--short_sid2
|   |   |   |--00001
|   |   |   |   |--00001_00_0.04s.npy
|   |   |   |   |--00001_00_0.1s.npy
|   |   |   |   |--00001_01_0.04s.npy
|   |   |   |   |--00001_01_0.1s.npy
|   |   |   |   ...
|   |   |   |--00002
|   |   |   |   |--00002_00_0.04s.npy
|   |   |   |   |--00002_00_0.1s.npy
|   |   |   |   |--00002_01_0.04s.npy
|   |   |   |   |--00002_01_0.1s.npy
|   |   |   |   ...
|   |   |   ...
|   |   |--long_sid2
|   |   |   |--00001
|   |   |   |   |--00001_00_0.04s.npy
|   |   |   |   |--00001_00_0.1s.npy
|   |   |   |   |--00001_01_0.04s.npy
|   |   |   |   |--00001_01_0.1s.npy
|   |   |   |   ...
|   |   |   |--00002
|   |   |   |   |--00002_00_0.04s.npy
|   |   |   |   |--00002_00_0.1s.npy
|   |   |   |   |--00002_01_0.04s.npy
|   |   |   |   |--00002_01_0.1s.npy
|   |   |   |   ...
|   |   |   ...
|   |--SMID
|   |   |--SMID_LQ_np
|   |   |   |--0001
|   |   |   |   |--0001.npy
|   |   |   |   |--0002.npy
|   |   |   |   ...
|   |   |   |--0002
|   |   |   |   |--0001.npy
|   |   |   |   |--0002.npy
|   |   |   |   ...
|   |   |   ...
|   |   |--SMID_Long_np
|   |   |   |--0001
|   |   |   |   |--0001.npy
|   |   |   |   |--0002.npy
|   |   |   |   ...
|   |   |   |--0002
|   |   |   |   |--0001.npy
|   |   |   |   |--0002.npy
|   |   |   |   ...
|   |   |   ...
|   |--FiveK
|   |   |--train
|   |   |   |--input
|   |   |   |   |--a0099-kme_264.jpg
|   |   |   |   |--a0101-kme_610.jpg
|   |   |   |   ...
|   |   |   |--target
|   |   |   |   |--a0099-kme_264.jpg
|   |   |   |   |--a0101-kme_610.jpg
|   |   |   |   ...
|   |   |--test
|   |   |   |--input
|   |   |   |   |--a4574-DSC_0038.jpg
|   |   |   |   |--a4576-DSC_0217.jpg
|   |   |   |   ...
|   |   |   |--target
|   |   |   |   |--a4574-DSC_0038.jpg
|   |   |   |   |--a4576-DSC_0217.jpg
|   |   |   |   ...
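Before testing or training, a quick check that every input image has a matching target can catch extraction mistakes. The sketch below does this for the LOLv1 layout shown above. The helper name `unpaired_files` is hypothetical; only the `data/LOLv1/Train|Test/input|target` folder names come from the directory tree.

```python
from pathlib import Path


def unpaired_files(split_dir):
    """Return sorted file names present in only one of input/ and target/."""
    split_dir = Path(split_dir)
    inputs = {p.name for p in (split_dir / "input").iterdir() if p.is_file()}
    targets = {p.name for p in (split_dir / "target").iterdir() if p.is_file()}
    # Symmetric difference: names missing from either side.
    return sorted(inputs ^ targets)


if __name__ == "__main__":
    for split in ("Train", "Test"):
        folder = Path("data") / "LOLv1" / split
        if folder.is_dir():
            print(split, "unpaired:", unpaired_files(folder))
        else:
            print(folder, "not found")
```

An empty list for both splits means the pairs line up. The same idea extends to the other datasets by swapping in their folder names, such as `Low` and `Normal` for LOLv2.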

3. Testing

Download the pretrained models from Baidu Disk (code: cyh2) or Google Drive and put them in a folder named pretrained_weights.

Example test commands:

# LOL-v1

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_LOL_v1.yml --weights pretrained_weights/LOL_v1.pth --dataset LOL_v1

# LOL-v2-real

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_LOL_v2_real.yml --weights pretrained_weights/LOL_v2_real.pth --dataset LOL_v2_real

# LOL-v2-synthetic

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_LOL_v2_synthetic.yml --weights pretrained_weights/LOL_v2_synthetic.pth --dataset LOL_v2_synthetic

# SID

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_SID.yml --weights pretrained_weights/SID.pth --dataset SID

# SMID

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_SMID.yml --weights pretrained_weights/SMID.pth --dataset SMID

# SDSD-indoor

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_SDSD_indoor.yml --weights pretrained_weights/SDSD_indoor.pth --dataset SDSD_indoor

# SDSD-outdoor

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_SDSD_outdoor.yml --weights pretrained_weights/SDSD_outdoor.pth --dataset SDSD_outdoor

# FiveK

python3 Enhancement/test_from_dataset.py --opt Options/RetinexFormer_FiveK.yml --weights pretrained_weights/FiveK.pth --dataset FiveK
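The test commands share one pattern: the option file, the weight file, and the `--dataset` flag all use the same dataset name. As a sketch, the commands can be generated from a list of names. The helper `build_test_command` is hypothetical, and the paths assume the script is run from the repository root.

```python
DATASETS = ["LOL_v1", "LOL_v2_real", "LOL_v2_synthetic", "SID",
            "SMID", "SDSD_indoor", "SDSD_outdoor", "FiveK"]


def build_test_command(name):
    """Build the argument list for testing one dataset, following the pattern above."""
    return [
        "python3", "Enhancement/test_from_dataset.py",
        "--opt", f"Options/RetinexFormer_{name}.yml",
        "--weights", f"pretrained_weights/{name}.pth",
        "--dataset", name,
    ]


for name in DATASETS:
    # None of the arguments contain spaces, so a plain join is safe to paste.
    print(" ".join(build_test_command(name)))
```

To run the commands instead of printing them, each argument list can be passed to `subprocess.run(cmd, check=True)`.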

4. Training

# LOL-v1

python3 basicsr/train.py --opt Options/RetinexFormer_LOL_v1.yml

# LOL-v2-real

python3 basicsr/train.py --opt Options/RetinexFormer_LOL_v2_real.yml

# LOL-v2-synthetic

python3 basicsr/train.py --opt Options/RetinexFormer_LOL_v2_synthetic.yml

Download links are also provided for the LIME, NPE, MEF, DICM, and VV datasets, which have no ground truth: Baidu Disk (code: cyh2) or Google Drive.

Training logs are available from Baidu Disk (code: cyh2) or Google Drive.

# SID

python3 basicsr/train.py --opt Options/RetinexFormer_SID.yml

# SMID

python3 basicsr/train.py --opt Options/RetinexFormer_SMID.yml

# SDSD-indoor

python3 basicsr/train.py --opt Options/RetinexFormer_SDSD_indoor.yml

# SDSD-outdoor

python3 basicsr/train.py --opt Options/RetinexFormer_SDSD_outdoor.yml


# FiveK

python3 basicsr/train.py --opt Options/RetinexFormer_FiveK.yml

5. Comparison of image quality metrics
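The specific metrics are not listed in this section. One metric commonly reported for image enhancement is PSNR, and the following is a minimal sketch of it under the assumption that both images are arrays scaled to [0, 1]. The function name `psnr` and the `max_value` parameter are illustrative and are not taken from the Retinexformer code.

```python
import numpy as np


def psnr(enhanced, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    enhanced = np.asarray(enhanced, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((enhanced - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


target = np.full((8, 8, 3), 0.5)
enhanced = target + 0.1  # constant error of 0.1, so MSE = 0.01
print(round(psnr(enhanced, target), 2))  # 20.0
```

Higher PSNR means the enhanced image is closer to the ground truth, so it applies only to datasets that provide target images.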
