Workflow:

- View details for the bme_gpu nodes

scontrol show node bme_gpu[12-23]

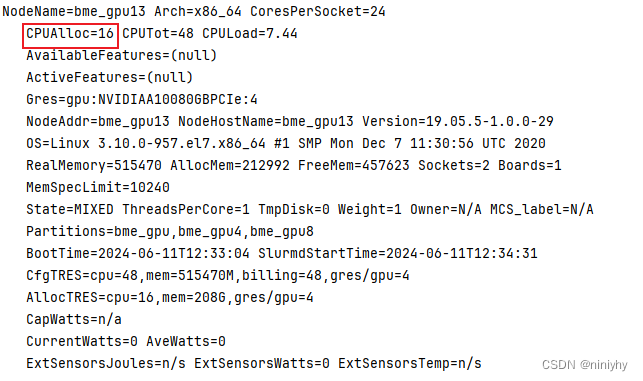

The node below appears to have fewer resources allocated.
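Reading allocation off the raw `scontrol show node` output by eye is error-prone, so the check can be condensed into one line per node. The following is a minimal sketch, not a Slurm feature: `gpu_alloc_summary` is a hypothetical helper name, and it assumes the cluster reports GPUs as `gres/gpu=N` inside the `CfgTRES` and `AllocTRES` fields, which depends on the site configuration.

```shell
#!/bin/bash
# Summarize allocated/total GPUs per node.
# Reads `scontrol show node -o` (one line per node) on stdin.
# gpu_alloc_summary is a hypothetical helper, not part of Slurm.
# Assumption: GPUs show up as gres/gpu=N in CfgTRES and AllocTRES.
gpu_alloc_summary() {
    awk '{
        name = ""; total = 0; used = 0
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^NodeName=/) {
                name = substr($i, 10)                      # strip "NodeName="
            } else if ($i ~ /^CfgTRES=/ && match($i, /gres\/gpu=[0-9]+/)) {
                total = substr($i, RSTART + 9, RLENGTH - 9) # digits after "gres/gpu="
            } else if ($i ~ /^AllocTRES=/ && match($i, /gres\/gpu=[0-9]+/)) {
                used = substr($i, RSTART + 9, RLENGTH - 9)
            }
        }
        if (name != "") print name, used "/" total
    }'
}

# On the cluster (requires Slurm):
# scontrol show node -o "bme_gpu[12-23]" | gpu_alloc_summary
```

A node whose `AllocTRES` carries no `gres/gpu` entry is reported with 0 GPUs in use.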

- Check for idle nodes in bme_gpu

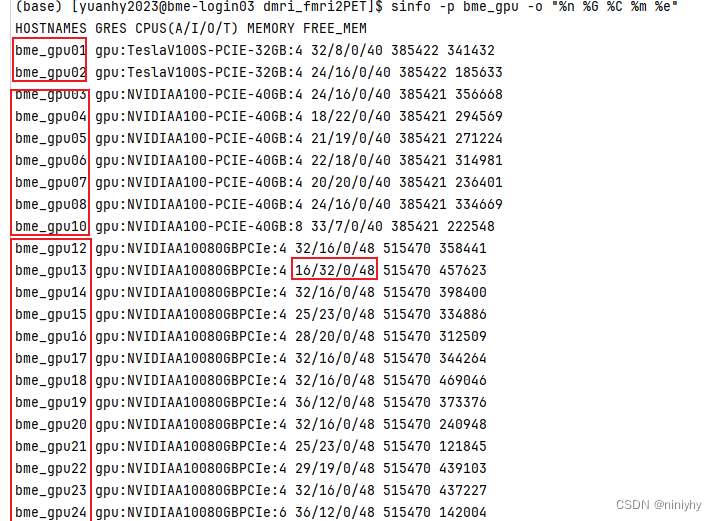

sinfo -p bme_gpu -o "%n %G %C %m %e"

Among the nodes with NVIDIAA10080GBPCIe cards, bme_gpu13 appears to have the least allocated.
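The comparison can also be done by sorting the `sinfo` output instead of scanning it by eye. This is a rough sketch: `rank_idle_nodes` is a hypothetical helper name, and it ranks only by idle CPUs and free memory, because the `%G` column shows configured GPUs rather than GPUs in use.

```shell
#!/bin/bash
# Rank nodes by idle CPU count, then by free memory.
# Reads `sinfo -h -o "%n %G %C %m %e"` lines on stdin.
# rank_idle_nodes is a hypothetical helper, not a Slurm command.
rank_idle_nodes() {
    local gres_pattern="${1:-.}"   # optional regex matched against the GRES column
    awk -v pat="$gres_pattern" '
        $2 ~ pat {
            split($3, cpu, "/")    # CPUS(A/I/O/T): cpu[1]=allocated, cpu[2]=idle
            print cpu[2], $5, $1   # idle CPUs, free memory (MB), hostname
        }' | sort -k1,1nr -k2,2nr
}

# On the cluster (requires Slurm; -h suppresses the header line):
# sinfo -h -p bme_gpu -o "%n %G %C %m %e" | rank_idle_nodes NVIDIAA10080GBPCIe | head -n 3
```

The first line of the result is the candidate for `--nodelist`. It is still worth confirming GPU availability with `scontrol show node` before submitting.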

- Select this node in the job script

#!/bin/bash

#SBATCH -J ratio25

#SBATCH -N 1

#SBATCH -p bme_gpu

#SBATCH -n 8

#SBATCH --time=04:10:00

#SBATCH --gres=gpu:NVIDIAA10080GBPCIe:1

#SBATCH --nodelist=bme_gpu13

#SBATCH -o %j.out

#SBATCH -e %j.out

echo ${SLURM_JOB_NODELIST}

echo start on $(date)

source activate py38

python train_withXYZ_remote.py

echo end on $(date)

# View details for nodes bme_gpu12 through bme_gpu23

scontrol show node bme_gpu[12-23]

# Or view all nodes that have NVIDIAA10080GBPCIe GPUs

scontrol show node | grep -A 10 "NodeName=bme_gpu1[2-9]\|NodeName=bme_gpu2[0-3]"

# View all jobs in the bme_gpu partition

squeue -p bme_gpu -o "%.18i %.9P %.8j %.8u %.2t %.10M %.6D %R"

# View node usage in the bme_gpu partition

sinfo -p bme_gpu -o "%n %f %G %C %m"

# View GPU usage on all nodes

scontrol show node | grep -E "NodeName|Gres=|AllocTRES"

# View idle nodes

(base) [yuanhy2023@bme-login03 dmri_fmri2PET]$ sinfo -p bme_gpu -o "%n %G %C %m %e"

HOSTNAMES GRES CPUS(A/I/O/T) MEMORY FREE_MEM

bme_gpu01 gpu:TeslaV100S-PCIE-32GB:4 32/8/0/40 385422 340886

bme_gpu02 gpu:TeslaV100S-PCIE-32GB:4 24/16/0/40 385422 190333

bme_gpu03 gpu:NVIDIAA100-PCIE-40GB:4 24/16/0/40 385421 356811

bme_gpu04 gpu:NVIDIAA100-PCIE-40GB:4 18/22/0/40 385421 308029

bme_gpu05 gpu:NVIDIAA100-PCIE-40GB:4 21/19/0/40 385421 221302

bme_gpu06 gpu:NVIDIAA100-PCIE-40GB:4 22/18/0/40 385421 329626

bme_gpu07 gpu:NVIDIAA100-PCIE-40GB:4 20/20/0/40 385421 272223

bme_gpu08 gpu:NVIDIAA100-PCIE-40GB:4 24/16/0/40 385421 334960

bme_gpu10 gpu:NVIDIAA100-PCIE-40GB:8 33/7/0/40 385421 222243

bme_gpu12 gpu:NVIDIAA10080GBPCIe:4 32/16/0/48 515470 356842

bme_gpu13 gpu:NVIDIAA10080GBPCIe:4 16/32/0/48 515470 458384

bme_gpu14 gpu:NVIDIAA10080GBPCIe:4 32/16/0/48 515470 398798

bme_gpu15 gpu:NVIDIAA10080GBPCIe:4 25/23/0/48 515470 347636

bme_gpu16 gpu:NVIDIAA10080GBPCIe:4 28/20/0/48 515470 312912

bme_gpu17 gpu:NVIDIAA10080GBPCIe:4 32/16/0/48 515470 361716

bme_gpu18 gpu:NVIDIAA10080GBPCIe:4 32/16/0/48 515470 469081

bme_gpu19 gpu:NVIDIAA10080GBPCIe:4 36/12/0/48 515470 387393

bme_gpu20 gpu:NVIDIAA10080GBPCIe:4 32/16/0/48 515470 241189

bme_gpu21 gpu:NVIDIAA10080GBPCIe:4 25/23/0/48 515470 121542

bme_gpu22 gpu:NVIDIAA10080GBPCIe:4 29/19/0/48 515470 440023

bme_gpu23 gpu:NVIDIAA10080GBPCIe:4 32/16/0/48 515470 439940

bme_gpu24 gpu:NVIDIAA10080GBPCIe:6 36/12/0/48 515470 141288
