[Multi-Object Tracking] TrackFormer: a sentence-by-sentence translation that took three days!!!

TrackFormer: Multi-Object Tracking with Transformers

Abstract

The challenging task of multi-object tracking (MOT) requires simultaneous reasoning about track initialization, identity, and spatio-temporal trajectories. We formulate this task as a frame-to-frame set prediction problem and introduce TrackFormer, an end-to-end trainable MOT approach based on an encoder-decoder Transformer architecture. Our model achieves data association between frames via attention by evolving a set of track predictions through a video sequence. The Transformer decoder initializes new tracks from static object queries and autoregressively follows existing tracks in space and time with the conceptually new and identity preserving track queries. Both query types benefit from self- and encoder-decoder attention on global frame-level features, thereby omitting any additional graph optimization or modeling of motion and/or appearance. TrackFormer introduces a new tracking-by-attention paradigm and while simple in its design is able to achieve state-of-the-art performance on the task of multi-object tracking (MOT17 and MOT20) and segmentation (MOTS20).

1. Introduction

Humans need to focus their attention to track objects in space and time, for example, when playing a game of tennis, golf, or pong. This challenge is only increased when tracking not one, but multiple objects, in crowded and real-world scenarios. Following this analogy, we demonstrate the effectiveness of Transformer [51] attention for the task of multi-object tracking (MOT) in videos.

The goal in MOT is to follow the trajectories of a set of objects, e.g., pedestrians, while keeping their identities discriminated as they are moving throughout a video sequence. Due to the advances in image-level object detection [7, 39], most approaches follow the two-step tracking-by-detection paradigm: (i) detecting objects in individual video frames and (ii) associating sets of detections between frames and thereby creating individual object tracks over time. Traditional tracking-by-detection methods associate detections via temporally sparse [23, 26] or dense [19, 22] graph optimization, or apply convolutional neural networks to predict matching scores between detections [8, 24].

Recent works [4, 6, 29, 69] suggest a variation of the traditional paradigm, coined tracking-by-regression [12]. In this approach, the object detector not only provides frame-wise detections, but replaces the data association step with a continuous regression of each track to the changing position of its object. These approaches achieve track association implicitly, but provide top performance only by relying either on additional graph optimization [6, 29] or motion and appearance models [4]. This is largely due to the isolated and local bounding box regression, which lacks any notion of object identity or global communication between tracks.

We present a first straightforward instantiation of tracking-by-attention, TrackFormer, an end-to-end trainable Transformer [51] encoder-decoder architecture. It encodes frame-level features from a convolutional neural network (CNN) [18] and decodes queries into bounding boxes associated with identities. The data association is performed through the novel and simple concept of track queries. Each query represents an object and follows it in space and time over the course of a video sequence in an autoregressive fashion. New objects entering the scene are detected by static object queries as in [7, 71] and subsequently transform to future track queries. At each frame, the encoder-decoder computes attention between the input image features and the track as well as object queries, and outputs bounding boxes with assigned identities. Thereby, TrackFormer performs tracking-by-attention and achieves detection and data association jointly without relying on any additional track matching, graph optimization, or explicit modeling of motion and/or appearance. In contrast to tracking-by-detection/regression, our approach detects and associates tracks simultaneously in a single step via attention (and not regression). TrackFormer extends the recently proposed set prediction objective for object detection [7, 48, 71] to multi-object tracking.

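Summary: TrackFormer performs detection and data association purely through attention, rather than through the usual association machinery such as Hungarian matching or Kalman filtering.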

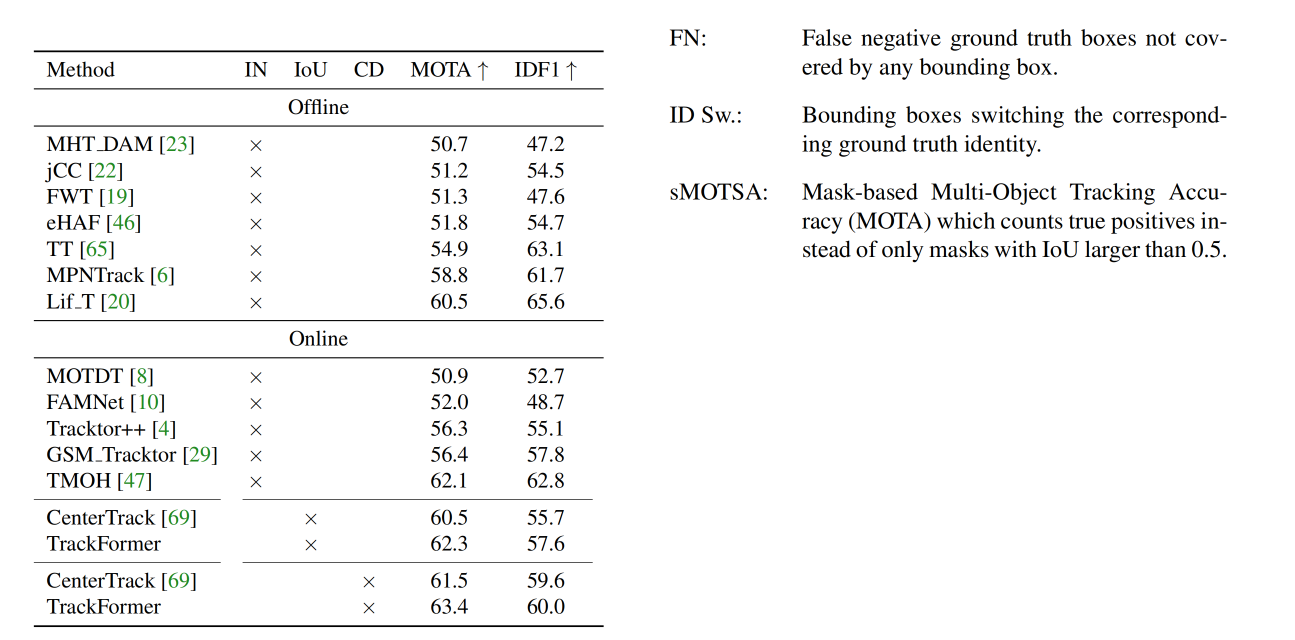

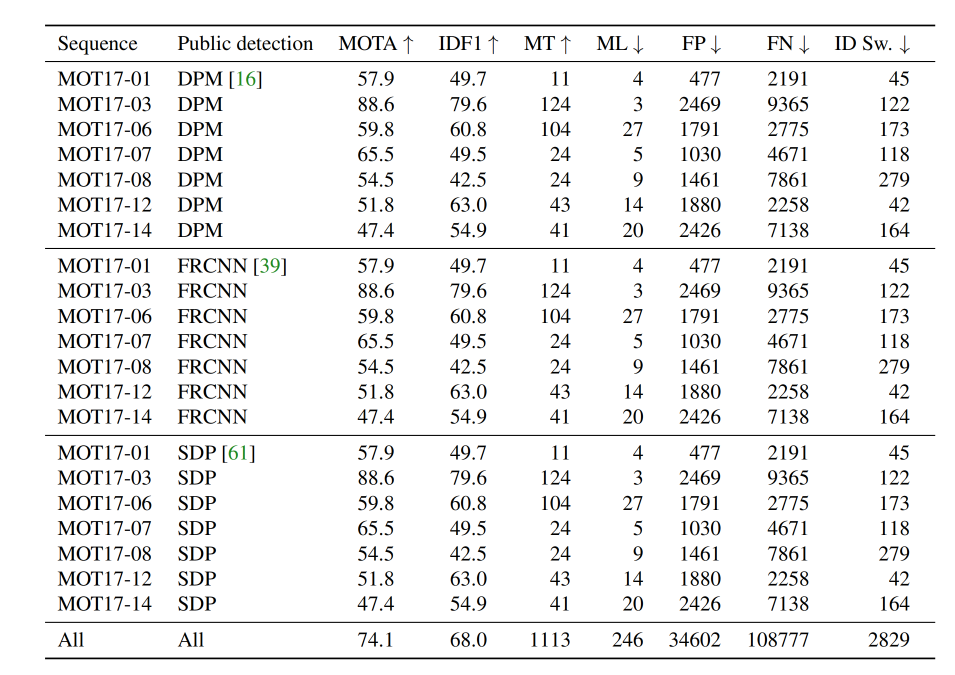

We evaluate TrackFormer on the MOT17 [30] and MOT20 [13] benchmarks, where it achieves state-of-the-art performance for public and private detections. Furthermore, we demonstrate the extension with a mask prediction head and show state-of-the-art results on the Multi-Object Tracking and Segmentation (MOTS20) challenge [52]. We hope this simple yet powerful baseline will inspire researchers to explore the potential of the tracking-by-attention paradigm. In summary, we make the following contributions:

- An end-to-end trainable multi-object tracking approach which achieves detection and data association in a new tracking-by-attention paradigm.

- The concept of autoregressive track queries which embed an object's spatial position and identity, thereby tracking it in space and time.

- New state-of-the-art results on three challenging multi-object tracking (MOT17 and MOT20) and segmentation (MOTS20) benchmarks.

2. Related work

TBD:

Tracking-by-regression refrains from associating detections between frames but instead accomplishes tracking by regressing past object locations to their new positions in the current frame. Previous efforts [4, 15] use regression heads on region-pooled object features. In [69], objects are represented as center points which allow for an association by a distance-based greedy matching algorithm. To overcome their lacking notion of object identity and global track reasoning, additional re-identification and motion models [4], as well as traditional [29] and learned [6] graph methods have been necessary to achieve top performance.

Tracking-by-segmentation

This line of work not only predicts object masks but leverages the pixel-level information to mitigate issues with crowdedness and ambiguous backgrounds. Prior attempts used category-agnostic image segmentation [31], applied Mask R-CNN [17] with 3D convolutions [52], mask pooling layers [38], or represented objects as unordered point clouds [58] and cost volumes [57]. However, the scarcity of annotated MOT segmentation data makes modern approaches still rely on bounding boxes.

In contrast, TrackFormer casts the entire tracking objective into a single set prediction problem, applying attention not only for the association step. It jointly reasons about track initialization, identity, and spatio-temporal trajectories. We only rely on feature-level attention and avoid additional graph optimization and appearance/motion models.

3. TrackFormer

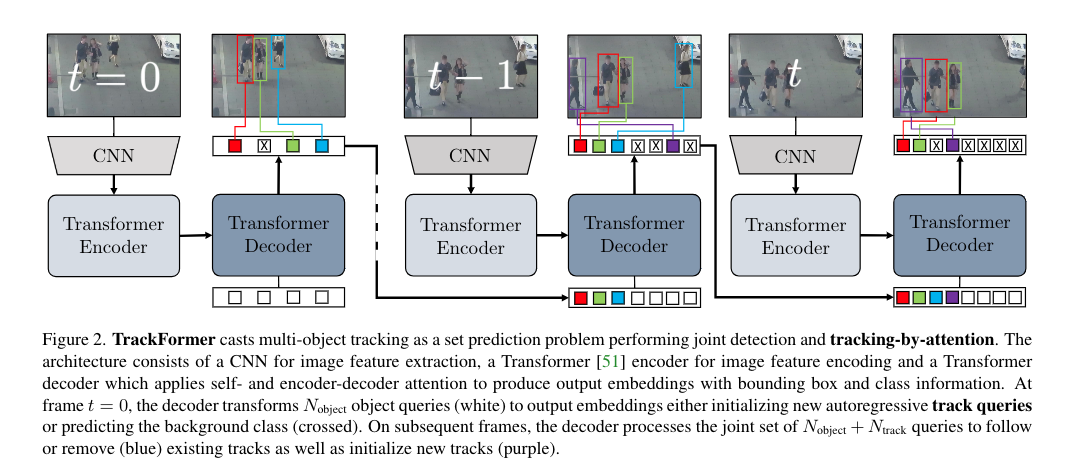

We present TrackFormer, an **end-to-end trainable multi-object tracking (MOT) approach based on an encoder-decoder Transformer [51] architecture**. This section describes how we cast MOT as a set prediction problem and introduce the new tracking-by-attention paradigm. Furthermore, we explain the concept of track queries and their application for frame-to-frame data association.

3.1 MOT as a set prediction problem

Given a video sequence with $K$ individual object identities, MOT describes the task of generating ordered tracks $T_k = (b^k_{t_1}, b^k_{t_2}, \dots)$ with bounding boxes $b_t$ and track identities $k$. The subset $(t_1, t_2, \dots)$ of total frames $T$ indicates the time span between an object entering and leaving the scene. These include all frames for which an object is occluded by either the background or other objects.

In order to cast MOT as a set prediction problem, we leverage an encoder-decoder Transformer architecture. Our model performs online tracking and yields per-frame object bounding boxes and class predictions associated with identities in four consecutive steps:

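- Frame-level feature extraction with a common CNN backbone, e.g., ResNet-50 [18].

- Encoding of frame features with self-attention in a Transformer encoder.

- Decoding of queries with self- and encoder-decoder attention in a Transformer decoder.

- Mapping of queries to box and class predictions using multilayer perceptrons (MLP).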

Objects are implicitly represented in the decoder queries, which are embeddings used by the decoder to output bounding box coordinates and class predictions. The decoder alternates between two types of attention: (i) self-attention over all queries, which allows for joint reasoning about the objects in a scene and (ii) encoder-decoder attention, which gives queries global access to the visual information of the encoded features. The output embeddings accumulate bounding box and class information over multiple decoding layers. The permutation invariance of Transformers requires additive feature and object encodings for the frame features and decoder queries, respectively.
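To make the two attention types concrete, here is a minimal pure-Python sketch of one heavily simplified decoder layer. It is an illustration rather than the paper's implementation: the function names (`attend`, `decoder_layer`) and the toy 2-dimensional vectors are assumptions, and learned projections, multiple heads, layer normalization, feed-forward blocks, and the additive encodings are all left out.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Scaled dot-product attention: each query becomes a weighted mix of values."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

def decoder_layer(queries, frame_features):
    """One simplified layer: self-attention, then encoder-decoder attention."""
    # (i) self-attention: all queries exchange information with each other
    mixed = attend(queries, queries, queries)
    queries = [[a + b for a, b in zip(q, m)] for q, m in zip(queries, mixed)]
    # (ii) encoder-decoder attention: every query reads the encoded frame features
    seen = attend(queries, frame_features, frame_features)
    return [[a + b for a, b in zip(q, s)] for q, s in zip(queries, seen)]

# Toy inputs (hypothetical values): two queries, three encoded feature vectors.
queries = [[1.0, 0.0], [0.0, 1.0]]
frame_features = [[0.5, 0.5], [1.0, -1.0], [-1.0, 1.0]]
updated = decoder_layer(queries, frame_features)
```

Stacking several such layers is what lets the output embeddings accumulate box and class information, as described above.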

3.2 Tracking-by-attention with queries

The total set of output embeddings is initialized with two types of query encodings: (i) static object queries, which allow the model to initialize tracks at any frame of the video, and (ii) autoregressive track queries, which are responsible for tracking objects across frames.

The simultaneous decoding of object and track queries allows our model to perform detection and tracking in a unified way, thereby introducing a new tracking-by-attention paradigm. Different tracking-by-X approaches are defined by their key component responsible for track generation. For tracking-by-detection, the tracking is performed by computing/modelling distances between frame-wise object detections. The tracking-by-regression paradigm also performs object detection, but tracks are generated by regressing each object box to its new position in the current frame. Technically, our TrackFormer also performs regression in the mapping of object embeddings with MLPs. However, the actual track association happens earlier via attention in the Transformer decoder. A detailed architecture overview which illustrates the integration of track and object queries into the Transformer decoder is shown in the appendix.

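Track initialization

New objects appearing in the scene are detected by a fixed number of object output embeddings, each initialized with a static and learned object encoding referred to as an object query [7]. Intuitively, each object query learns to predict objects with certain spatial properties, such as bounding box size and position. The decoder self-attention relies on the object encoding to avoid duplicate detections and to reason about spatial and categorical relations of objects. The number of object queries should exceed the maximum number of objects per frame.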

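Track queries

In order to achieve frame-to-frame track generation, we introduce the concept of track queries to the decoder. Track queries follow objects through a video sequence carrying over their identity information while adapting to their changing position in an autoregressive manner.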

For this purpose, each new object detection initializes a track query with the corresponding output embedding of the previous frame. The Transformer encoder-decoder performs attention on frame features and decoder queries, continuously updating the instance-specific representation of an object's identity and location in each track query embedding. Self-attention over the joint set of both query types allows for the detection of new objects while simultaneously avoiding re-detection of already tracked objects.
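The hand-over from object queries to track queries can be sketched as a per-frame loop. This is a toy illustration under stated assumptions, not the authors' code: `track_frame`, `Output`, `fake_decode`, and the thresholds `sigma_object` and `sigma_track` (with their values) are hypothetical names introduced here, and the decoder is replaced by a stub that returns pre-made outputs.

```python
from dataclasses import dataclass
from itertools import count

@dataclass
class Output:
    embedding: tuple   # output embedding produced by the decoder for one query
    box: tuple         # (x, y, w, h)
    score: float       # classification score

def track_frame(decode, frame, object_queries, track_queries, next_id,
                sigma_object=0.9, sigma_track=0.5):
    """Process one frame; return (boxes per identity, track queries for next frame)."""
    ids = list(track_queries)
    # Track queries and static object queries are decoded jointly.
    queries = [track_queries[i] for i in ids] + list(object_queries)
    outputs = decode(frame, queries)            # one Output per query, same order
    results, next_queries = {}, {}
    # A track query keeps its identity while its score stays high enough.
    for track_id, out in zip(ids, outputs[:len(ids)]):
        if out.score > sigma_track:
            results[track_id] = out.box
            next_queries[track_id] = out.embedding
    # A confident object query starts a new track; its output embedding
    # becomes the track query for the following frame.
    for out in outputs[len(ids):]:
        if out.score > sigma_object:
            track_id = next(next_id)
            results[track_id] = out.box
            next_queries[track_id] = out.embedding
    return results, next_queries

def fake_decode(frame, queries):
    """Stub decoder: the 'frame' already holds one Output per query."""
    assert len(frame) == len(queries)
    return frame

object_queries = [("obj0",), ("obj1",)]
next_id = count()
frame1 = [Output(("e1",), (0, 0, 10, 10), 0.95),
          Output(("e2",), (5, 5, 10, 10), 0.20)]
results1, track_queries = track_frame(fake_decode, frame1, object_queries, {}, next_id)
frame2 = [Output(("e1b",), (1, 0, 10, 10), 0.80),   # track query for identity 0
          Output(("e3",), (20, 20, 5, 5), 0.97),    # object query: new object
          Output(("e4",), (5, 5, 10, 10), 0.10)]    # object query: nothing found
results2, track_queries = track_frame(fake_decode, frame2, object_queries,
                                      track_queries, next_id)
```

In this toy run, identity 0 is created in the first frame and followed into the second, where a second object enters and receives identity 1. No explicit matching step appears anywhere in the loop; the identity simply travels with the query.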

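The ability to decode an arbitrary number of track queries allows for an attention-based short-term re-identification process. We keep decoding previously removed track queries for a maximum number of track-reid frames. During this patience window, track queries are considered to be inactive and do not contribute to the trajectory until a classification score higher than $\sigma_{\text{track}}$ triggers a re-identification. The spatial information embedded into each track query prevents their application for long-term occlusions with large object movement.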
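The patience window can be illustrated with a small bookkeeping function. This is a sketch under assumptions: `update_inactive`, `sigma_reid`, `max_reid_frames`, and their default values are hypothetical and only mirror the behaviour described in the paragraph above.

```python
def update_inactive(inactive, scores, sigma_reid=0.6, max_reid_frames=5):
    """Advance the patience window by one frame.

    inactive: dict track_id -> number of frames already spent inactive
    scores:   dict track_id -> classification score decoded in this frame
    Returns (reactivated ids, dict of tracks that stay inactive).
    """
    reactivated, still_inactive = [], {}
    for track_id, age in inactive.items():
        if scores.get(track_id, 0.0) > sigma_reid:
            reactivated.append(track_id)          # re-identified: track resumes
        elif age + 1 < max_reid_frames:
            still_inactive[track_id] = age + 1    # keep decoding it next frame
        # otherwise the patience window has expired and the query is dropped
    return reactivated, still_inactive

# Track 7 reappears with a high score; track 8 has used up its window.
reactivated, waiting = update_inactive({7: 0, 8: 4}, {7: 0.9, 8: 0.1})
```

While a query sits in `waiting`, it would still be decoded every frame but would not add boxes to its trajectory.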

3.3. TrackFormer training

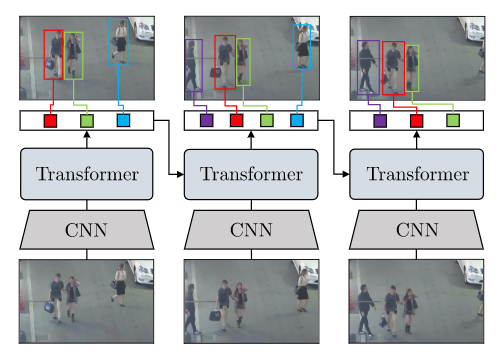

For track queries to work in interaction with object queries and follow objects to the next frame, TrackFormer requires dedicated frame-to-frame tracking training. As indicated in Figure 2, we train on two adjacent frames and optimize the entire MOT objective at once. The loss for frame $t$ measures the set prediction of all output embeddings $N = N_{\text{object}} + N_{\text{track}}$ with respect to the ground truth objects in terms of class and bounding box prediction.
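A set prediction loss first needs an assignment between predictions and ground truth objects. The sketch below shows one generic way to do this, in the spirit of the set prediction objective for detection [7] that the introduction says TrackFormer extends. Everything specific here is an assumption for illustration: the cost terms and their equal weighting, the names `pair_cost` and `match`, and the brute-force search, which only works for tiny sets (practical implementations use the Hungarian algorithm). How the paper assigns ground truth to track queries is not covered.

```python
from itertools import permutations

def pair_cost(pred, gt):
    """Toy cost of assigning one prediction to one ground truth object."""
    class_cost = 1.0 - pred["probs"][gt["label"]]                      # class term
    box_cost = sum(abs(p - g) for p, g in zip(pred["box"], gt["box"]))  # L1 box term
    return class_cost + box_cost

def match(preds, gts):
    """Brute-force minimum-cost one-to-one assignment (gt index -> pred index)."""
    assert len(preds) >= len(gts), "need at least as many predictions as objects"
    best, best_cost = None, float("inf")
    for perm in permutations(range(len(preds)), len(gts)):
        cost = sum(pair_cost(preds[p], gts[g]) for g, p in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    return dict(enumerate(best)), best_cost

# Three output embeddings, two ground truth objects (hypothetical values).
preds = [
    {"probs": [0.1, 0.9], "box": (0.5, 0.5, 0.2, 0.2)},
    {"probs": [0.8, 0.2], "box": (0.1, 0.1, 0.1, 0.1)},
    {"probs": [0.3, 0.7], "box": (0.9, 0.9, 0.1, 0.1)},
]
gts = [
    {"label": 0, "box": (0.1, 0.1, 0.1, 0.1)},
    {"label": 1, "box": (0.5, 0.5, 0.2, 0.2)},
]
assignment, total_cost = match(preds, gts)
```

Predictions left unmatched, such as the third one here, would typically be trained towards a background class.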

Set prediction loss in two steps: detection + tracking

4. Experiments
