论文地址:YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information

GitHub:WongKinYiu/yolov9: Implementation of paper - YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information (github.com)

一、摘要

今天的深度学习方法侧重于如何设计最合适的目标函数,从而使模型的预测结果能够最接近真实标签。同时,设计一个合适的体系结构,可以获取足够的信息进行预测。现有的方法忽略了输入数据在进行分层特征提取和空间转换时,会丢失大量信息。

本文研究数据通过深度网络传输时数据丢失的重要问题,即信息瓶颈和可逆函数。并且提出了可编程梯度信息(PGI)的概念,以应对深度网络实现多个目标所需的各种变化。PGI可以为目标任务提供完整的输入信息来计算目标函数,从而获得可靠的梯度信息来更新网络权值。

此外,还设计了一种基于梯度路径规划的新型轻量级网络体系结构——广义高效层聚合网络(GELAN)。GELAN的架构证实了PGI在轻量级模型上获得了更好的结果。并且基于MS COCO数据集的目标检测上验证了所提出的GELAN和PGI。结果表明,GELAN只使用传统的卷积算子来比基于深度卷积的先进方法获得更好的参数利用。

PGI可用于从轻量级到大型的各种模型。可以用来获得完整的信息,因此,从头开始训练的模型可以比使用大数据集预先训练的最先进的模型获得更好的结果。

二、配置YOLOv9

2.1、下载yolov9

git clone https://github.com/WongKinYiu/yolov9.git2.2、创建conda环境

conda create -n yolov9 python==3.8然后切换到yolov9目录下,找到requirements.txt文件(yolov9需要的库)

# 1、激活yolov9

conda activate yolov9# 2、切换地址

cd yolov9# 3、安装相关库

pip install -r requirements.txt注意:本文忽略了anaconda的安装和cuda、cudnn的安装过程

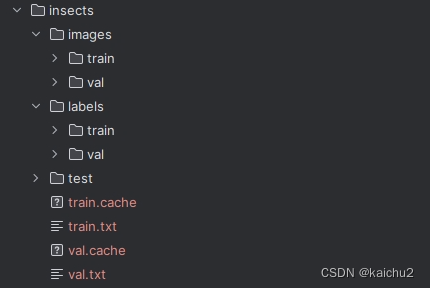

三、数据划分

环境都配置完成之后,开始对测试集进行划分,本文选择了一个insect测试集(包含7个类别['Leconte','Boerner','linnaeus','armandi','coleoptera','acuminatus','Linnaeus'])如下图所示

images:训练集和验证集

labels:训练集和验证集的标签

train.txt和val.txt:需要生成的列表(对于每个图片的地址)

test:测试集

train.cache和val.cache:训练过程中生成的

3.1、数据可视化

看下标签

<annotation><folder>xxx</folder><filename>1.jpeg</filename><path>/home/fion/桌面/xxx/1.jpeg</path><source><database>Unknown</database></source><size><width>1344</width><height>1344</height><depth>3</depth></size><segmented>0</segmented><object><name>Leconte</name><pose>Unspecified</pose><truncated>0</truncated><difficult>0</difficult><bndbox><xmin>473</xmin><ymin>578</ymin><xmax>612</xmax><ymax>727</ymax></bndbox></object><object><name>Boerner</name><pose>Unspecified</pose><truncated>0</truncated><difficult>0</difficult><bndbox><xmin>822</xmin><ymin>505</ymin><xmax>948</xmax><ymax>639</ymax></bndbox></object><object><name>linnaeus</name><pose>Unspecified</pose><truncated>0</truncated><difficult>0</difficult><bndbox><xmin>607</xmin><ymin>781</ymin><xmax>690</xmax><ymax>842</ymax></bndbox></object><object><name>armandi</name><pose>Unspecified</pose><truncated>0</truncated><difficult>0</difficult><bndbox><xmin>756</xmin><ymin>786</ymin><xmax>841</xmax><ymax>856</ymax></bndbox></object><object><name>coleoptera</name><pose>Unspecified</pose><truncated>0</truncated><difficult>0</difficult><bndbox><xmin>624</xmin><ymin>488</ymin><xmax>711</xmax><ymax>554</ymax></bndbox></object>

</annotation>xml文件解析:

xml.etree.ElementTree库在Python中提供了多种处理XML数据的方法。以下是一些常见的用法:

1. 解析XML字符串:

使用ET.fromstring(xml_string)可以从一个XML格式的字符串创建一个元素对象,并作为树的根节点。2. 解析XML文件:

使用ET.parse(file_path)可以加载并解析一个XML文件,返回一个树的根节点。3. 获取元素标签和属性:

使用element.tag获取元素的标签名称;使用`element.attrib`获取元素的属性字典。4. 查找子元素:

使用element.find(tag)查找第一个匹配的子元素;使用`element.findall(tag)`查找所有匹配的子元素。5. 遍历元素:

使用`element.iter()`或`element.iter(tag)`可以迭代遍历元素的所有后代元素。等等......

这些是`xml.etree.ElementTree`库的一些基本用法,涵盖了解析、查询、修改和创建XML数据的常见操作。

import numpy as np

import os,cv2,systry:import xml.etree.cElementTree as ET

except ImportError:import xml.etree.ElementTree as ETdef plot_image():# 数据的类别INSECT_NAMES = ['Leconte','Boerner','linnaeus','armandi','coleoptera','acuminatus','Linnaeus']# 不同类别给不同颜色COLOR_NAMES = {'Leconte':(255,0,0),'Boerner':(0,255,0),'linnaeus':(0,0,255),'armandi':(0,0,0),'coleoptera':(255,255,0),'acuminatus':(148,0,211),'Linnaeus':(255,255,255)}img_dir = r"E:\YOLO_insects\insects\train\images"xml_dir = r"E:\YOLO_insects\insects\train\annotations\xmls"save_dir = r"E:\YOLO_insects\insects\imshow"if not os.path.exists(save_dir):os.makedirs(save_dir)for i in os.listdir(img_dir):img_path = os.path.join(img_dir, i)xml_path = os.path.join(xml_dir, i.split(".")[0]+".xml")img = cv2.imread(img_path)root = ET.parse(xml_path).getroot()for obj in root.findall('object'):difficult = obj.find('difficult').textcls = obj.find('name').textsize = root.find('size')w = int(size.find('width').text)h = int(size.find('height').text)if cls not in INSECT_NAMES or int(difficult) == 1:continuecls_id = INSECT_NAMES.index(cls)xml_box = obj.find('bndbox')b = (float(xml_box.find('xmin').text), float(xml_box.find('xmax').text),float(xml_box.find('ymin').text), float(xml_box.find('ymax').text))print(f"{cls}")cv2.rectangle(img, (int(b[0]),int(b[2])),(int(b[1]),int(b[3])), COLOR_NAMES[str(cls)],3)cv2.putText(img, cls,(int(b[0]),int(b[2])-5),cv2.FONT_HERSHEY_SIMPLEX,0.5,COLOR_NAMES[str(cls)],2,cv2.LINE_AA)cv2.imwrite(os.path.join(save_dir,i), img)

3.2、测试集标签转换

因为本文测试集已划分好了train、val和test,因此下面跳过了数据集划分部分,下面看下如何生成yolo所需的标签格式

代码部分:

import numpy as np

import os,cv2,systry:import xml.etree.cElementTree as ET

except ImportError:import xml.etree.ElementTree as ETdef convert(size, box):dw = 1. / (size[0])dh = 1. / (size[1])x = (box[0] + box[1]) / 2.0 - 1y = (box[2] + box[3]) / 2.0 - 1w = box[1] - box[0]h = box[3] - box[2]x = x * dww = w * dwy = y * dhh = h * dhreturn x, y, w, hdef generate_labels():train_dir = r'E:\YOLO_insects\insects\val\annotations\xmls'train_xml = './insects/labels/val'if not os.path.exists(train_xml):os.makedirs(train_xml,exist_ok=True)num = 0classes = ['Leconte','Boerner','linnaeus','armandi','coleoptera','acuminatus','Linnaeus']for file in os.listdir(train_dir):xml_path = os.path.join(train_dir,file)outfile = open(os.path.join(train_xml,file.split(".xml")[0]+".txt"),'w',encoding="utf8")num +=1print(xml_path)content = ET.parse(xml_path)root = content.getroot()size = root.find('size')w = int(size.find('width').text)h = int(size.find('height').text)channel = int(size.find('depth').text)print(f"size: {w}x{h}, channel: {channel}")for obj in root.iter('object'):difficult = obj.find('difficult').textcls = obj.find('name').text# classes.append(cls) # 可以查看有哪些类if cls not in classes or int(difficult) == 1:continuecls_id = classes.index(cls)xml_box = obj.find('bndbox')b = (float(xml_box.find('xmin').text), float(xml_box.find('xmax').text),float(xml_box.find('ymin').text),float(xml_box.find('ymax').text))b1, b2, b3, b4 = b# 标注越界修正if b2 > w:b2 = wif b4 > h:b4 = hb = (b1, b2, b3, b4)bbox = convert((w,h),b)print(bbox)outfile.write(str(cls_id) + " " + " ".join([str(i) for i in bbox]) + "\n")print(f"total_num:{num}")标签生成之后,生成测试列表

def produce_txt(input_dir=r'E:\yolov9\data\insects\images\val', output_file=r"E:\yolov9\data\insects\val.txt"):with open(output_file, 'w') as f:for root, dirs, files in os.walk(input_dir):for file in files:file_path = os.path.join(root, file)f.write(file_path + '\n')四、开始训练

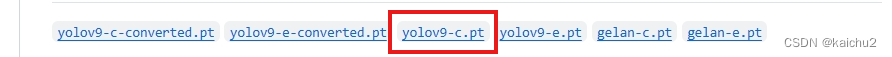

4.1、在github里下载yolov9-c.pt文件

存放位置随意

4.2、创建对应的yaml配置文件

yolov9/data/insect.yaml

path: E:\yolov9\data\insects # dataset root dir

train: train.txt # train images (relative to 'path')

val: val.txt # val images (relative to 'path')# Classes

names: ['Leconte','Boerner','linnaeus','armandi','coleoptera','acuminatus','Linnaeus']4.3、修改yolov9-c.yaml文件中的类别数目

# YOLOv9# parameters

nc: 7 # number of classes

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple

#activation: nn.LeakyReLU(0.1)

#activation: nn.ReLU()# anchors

anchors: 34.4、配置train_dual.py参数

| --weights:设置下载好的yolov9-c.pt文件地址 |

| --cfg:选择yolov9-c.yaml |

| --data:自己创建的配置文件 |

| --imgsz:图像的尺寸:我这里图设置320,也可以设置640 |

| --batch-size:批次大小,太大可能跑不了 |

| --epoch:训练epoch次数 |

| 其他参数都是选择默认值 |

def parse_opt(known=False):parser = argparse.ArgumentParser()# parser.add_argument('--weights', type=str, default=ROOT / 'yolo.pt', help='initial weights path')# parser.add_argument('--cfg', type=str, default='', help='model.yaml path')parser.add_argument('--weights', type=str, default='weights/yolov9-c.pt', help='initial weights path')parser.add_argument('--cfg', type=str, default='models/detect/yolov9-c.yaml', help='model.yaml path')parser.add_argument('--data', type=str, default='data/insect.yaml', help='dataset.yaml path')parser.add_argument('--hyp', type=str, default='data/hyps/hyp.scratch-high.yaml', help='hyperparameters path')parser.add_argument('--epochs', type=int, default=20, help='total training epochs')parser.add_argument('--batch-size', type=int, default=8, help='total batch size for all GPUs, -1 for autobatch')parser.add_argument('--imgsz', '--img', '--img-size', type=int, default=320, help='train, val image size (pixels)')parser.add_argument('--rect', action='store_true', help='rectangular training')parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')parser.add_argument('--noval', action='store_true', help='only validate final epoch')parser.add_argument('--noautoanchor', action='store_true', help='disable AutoAnchor')parser.add_argument('--noplots', action='store_true', help='save no plot files')parser.add_argument('--evolve', type=int, nargs='?', const=300, help='evolve hyperparameters for x generations')parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')parser.add_argument('--cache', type=str, nargs='?', const='ram', help='image --cache ram/disk')parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')parser.add_argument('--single-cls', action='store_true', help='train multi-class data as single-class')parser.add_argument('--optimizer', type=str, choices=['SGD', 'Adam', 'AdamW', 'LION'], default='SGD', help='optimizer')parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')parser.add_argument('--workers', type=int, default=0, help='max dataloader workers (per RANK in DDP mode)')parser.add_argument('--project', default=ROOT / 'runs/train', help='save to project/name')parser.add_argument('--name', default='exp', help='save to project/name')parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')parser.add_argument('--quad', action='store_true', help='quad dataloader')parser.add_argument('--cos-lr', action='store_true', help='cosine LR scheduler')parser.add_argument('--flat-cos-lr', action='store_true', help='flat cosine LR scheduler')parser.add_argument('--fixed-lr', action='store_true', help='fixed LR scheduler')parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')parser.add_argument('--patience', type=int, default=10, help='EarlyStopping patience (epochs without improvement)')parser.add_argument('--freeze', nargs='+', type=int, default=[0], help='Freeze layers: backbone=10, first3=0 1 2')parser.add_argument('--save-period', type=int, default=-1, help='Save checkpoint every x epochs (disabled if < 1)')parser.add_argument('--seed', type=int, default=0, help='Global training seed')parser.add_argument('--local_rank', type=int, default=-1, help='Automatic DDP Multi-GPU argument, do not modify')parser.add_argument('--min-items', type=int, default=0, help='Experimental')parser.add_argument('--close-mosaic', type=int, default=0, help='Experimental')# Logger argumentsparser.add_argument('--entity', default=None, help='Entity')parser.add_argument('--upload_dataset', nargs='?', const=True, default=False, help='Upload data, "val" option')parser.add_argument('--bbox_interval', type=int, default=-1, help='Set bounding-box image logging interval')parser.add_argument('--artifact_alias', type=str, default='latest', help='Version of dataset artifact to use')return parser.parse_known_args()[0] if known else parser.parse_args()4.5、开始训练

直接运行:train_dual.py

16 epochs completed in 6.767 hours.

Optimizer stripped from runs\train\exp3\weights\last.pt, 102.8MB

Optimizer stripped from runs\train\exp3\weights\best.pt, 102.8MBValidating runs\train\exp3\weights\best.pt...

Fusing layers...

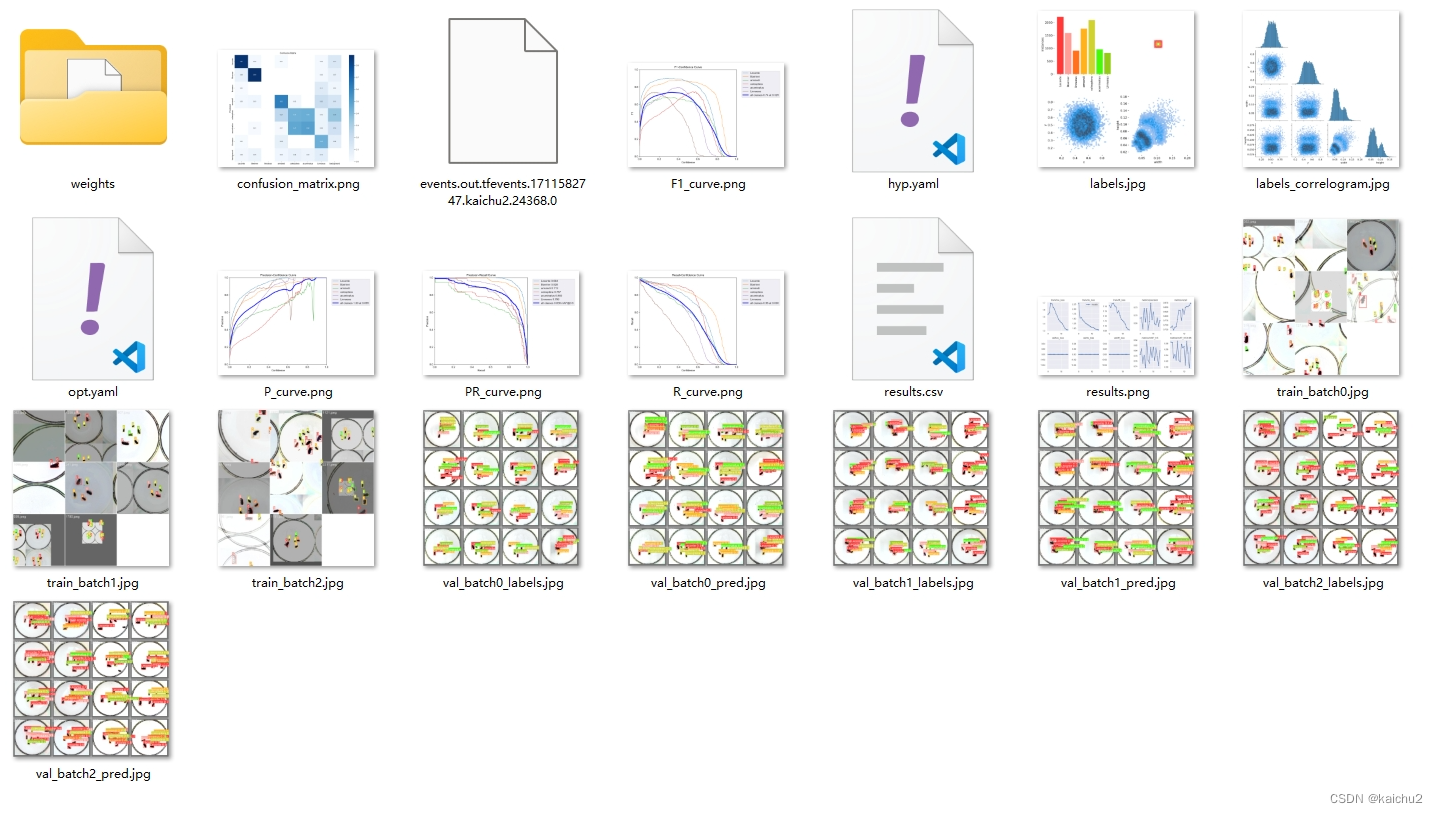

yolov9-c summary: 604 layers, 50712138 parameters, 0 gradients, 236.7 GFLOPsClass Images Instances P R mAP50 mAP50-95: 100%|██████████| 16/16 01:11all 245 1856 0.743 0.782 0.836 0.588Leconte 245 594 0.92 0.871 0.964 0.714Boerner 245 318 0.85 0.893 0.928 0.722armandi 245 231 0.611 0.754 0.712 0.497coleoptera 245 186 0.437 0.909 0.757 0.456acuminatus 245 235 0.763 0.8 0.86 0.555Linnaeus 245 292 0.877 0.464 0.796 0.584训练过程中在runs/train目录下生成中间文件,如下图

五、模型测试

5.1、修改detect_dual.py文件

| --weights:训练好的模型,选择best.pt |

| --source:设置测试图片地址,或者采用电脑摄像头,设置为0时 |

| --data:自己创建的insect.yaml配置文件 |

| --imgsz:这里选择320,和训练时保持一致 |

| 其他参数选择默认配置 |

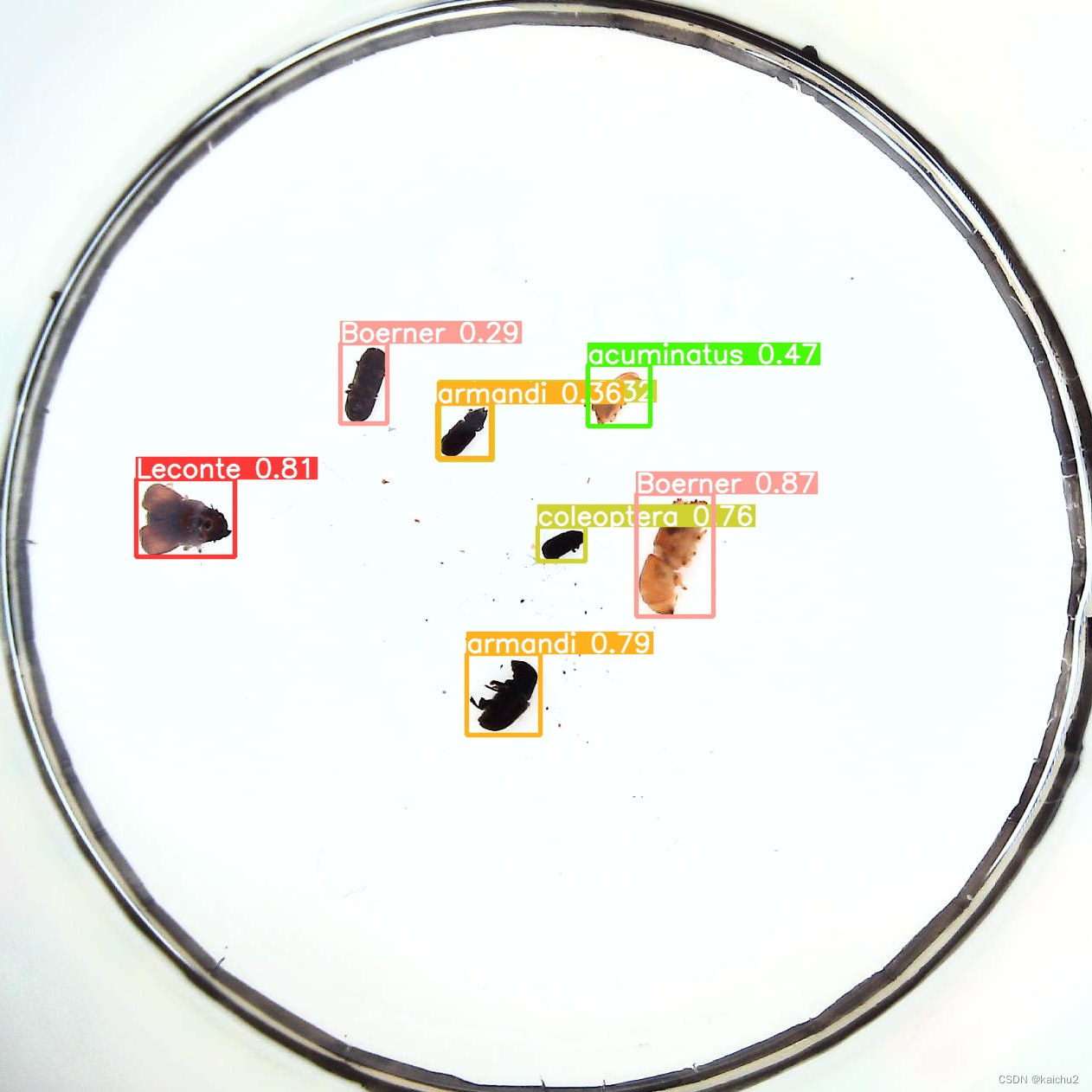

def parse_opt():parser = argparse.ArgumentParser()parser.add_argument('--weights', nargs='+', type=str, default=ROOT / 'runs/train/exp3/weights/best.pt', help='model path or triton URL')# parser.add_argument('--source', type=str, default=ROOT / 'E:\yolov9\data\insects\\test', help='file/dir/URL/glob/screen/0(webcam)')parser.add_argument('--source', type=str, default=ROOT / 'E:\YOLO_insects\insects\\test\images',help='file/dir/URL/glob/screen/0(webcam)')parser.add_argument('--data', type=str, default=ROOT / 'data/insect.yaml', help='(optional) dataset.yaml path')parser.add_argument('--imgsz', '--img', '--img-size', nargs='+', type=int, default=[320], help='inference size h,w')parser.add_argument('--conf-thres', type=float, default=0.25, help='confidence threshold')parser.add_argument('--iou-thres', type=float, default=0.45, help='NMS IoU threshold')parser.add_argument('--max-det', type=int, default=1000, help='maximum detections per image')parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')parser.add_argument('--view-img', action='store_true', help='show results')parser.add_argument('--save-txt', action='store_true', help='save results to *.txt')parser.add_argument('--save-conf', action='store_true', help='save confidences in --save-txt labels')parser.add_argument('--save-crop', action='store_true', help='save cropped prediction boxes')parser.add_argument('--nosave', action='store_true', help='do not save images/videos')parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --classes 0, or --classes 0 2 3')parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')parser.add_argument('--augment', action='store_true', help='augmented inference')parser.add_argument('--visualize', action='store_true', help='visualize features')parser.add_argument('--update', action='store_true', help='update all models')parser.add_argument('--project', default=ROOT / 'runs/detect', help='save results to project/name')parser.add_argument('--name', default='exp', help='save results to project/name')parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')parser.add_argument('--line-thickness', default=3, type=int, help='bounding box thickness (pixels)')parser.add_argument('--hide-labels', default=False, action='store_true', help='hide labels')parser.add_argument('--hide-conf', default=False, action='store_true', help='hide confidences')parser.add_argument('--half', action='store_true', help='use FP16 half-precision inference')parser.add_argument('--dnn', action='store_true', help='use OpenCV DNN for ONNX inference')parser.add_argument('--vid-stride', type=int, default=1, help='video frame-rate stride')opt = parser.parse_args()opt.imgsz *= 2 if len(opt.imgsz) == 1 else 1 # expandprint_args(vars(opt))return opt在runs\detect\exp目录下生成对应的测试效果图

六、遇到的问题

AttributeError: 'FreeTypeFont' object has no attribute 'getsize'这个问题 主要是:

'FreeTypeFont' object has no attribute 'getsize', is caused by a compatibility problem with the new version of Pillow.

# 1、查看pillow的版本

conda list# 2、卸载高版本安装9.5版本

pip uninstall pillowpip install pillow==9.5参考博客:

1、YOLOv9目标识别——详细记录训练环境配置与训练自己的数据集_yolov9训练自己的数据集-CSDN博客

2、YOLOv9如何训练自己的数据集(NEU-DET为案列)_yolov9训练自己的数据集-CSDN博客

)

)

![[flask]http请求//获取请求头信息+客户端信息](http://pic.xiahunao.cn/[flask]http请求//获取请求头信息+客户端信息)

)

)