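MediaPipe provides real-time face detection at 200-300 frames per second. It extracts the face bounding boxes in each frame, which downstream applications build on: face attribute recognition, facial expression recognition, landmark detection, 3D reconstruction, augmented reality, AI makeup, and so on.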

Code: google/mediapipe: Cross-platform, customizable ML solutions for live and streaming media. (github.com)

Theory

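The output is a collection of detected faces. Each face is represented as a detection proto message that contains a bounding box and 6 keypoints: right eye, left eye, nose tip, mouth center, right ear (tragion), and left ear (tragion). The bounding box consists of xmin and width, both normalized to [0.0, 1.0] by the image width, and ymin and height, both normalized to [0.0, 1.0] by the image height. Each keypoint consists of x and y, normalized to [0.0, 1.0] by the image width and height respectively.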
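To crop with OpenCV or draw on the frame, these normalized values must be scaled back to pixels. Scale x-values by the image width and y-values by the image height. The sketch below shows one way to do this. RelativeBox is a hypothetical stand-in that mirrors the fields of MediaPipe's detection.location_data.relative_bounding_box, so the helpers can be tried without a camera. With real results, you would pass that field directly, and pass the entries of detection.location_data.relative_keypoints for the keypoints.

```python
from dataclasses import dataclass
from typing import Tuple

# Order of the 6 face-detection keypoints, as listed in the text above.
KEYPOINT_NAMES = ("right_eye", "left_eye", "nose_tip",
                  "mouth_center", "right_ear", "left_ear")


@dataclass
class RelativeBox:
    """Hypothetical stand-in with the same fields as relative_bounding_box."""
    xmin: float
    ymin: float
    width: float
    height: float


def _clamp(v: int, lo: int, hi: int) -> int:
    return max(lo, min(v, hi))


def box_to_pixels(box, image_width: int, image_height: int) -> Tuple[int, int, int, int]:
    """Return (x0, y0, x1, y1) in pixels: x scaled by width, y scaled by height."""
    x0 = int(box.xmin * image_width)
    y0 = int(box.ymin * image_height)
    x1 = int((box.xmin + box.width) * image_width)
    y1 = int((box.ymin + box.height) * image_height)
    # A face touching the frame border can yield values slightly outside
    # [0, 1]; clamp the corners so they are usable as slice bounds.
    return (_clamp(x0, 0, image_width), _clamp(y0, 0, image_height),
            _clamp(x1, 0, image_width), _clamp(y1, 0, image_height))


def keypoint_to_pixels(x: float, y: float,
                       image_width: int, image_height: int) -> Tuple[int, int]:
    """Scale one normalized keypoint to a valid pixel index (column, row)."""
    return (_clamp(int(x * image_width), 0, image_width - 1),
            _clamp(int(y * image_height), 0, image_height - 1))


if __name__ == "__main__":
    box = RelativeBox(xmin=0.25, ymin=0.25, width=0.5, height=0.5)
    print(box_to_pixels(box, 640, 480))          # (160, 120, 480, 360)
    print(keypoint_to_pixels(0.5, 0.5, 640, 480))  # (320, 240)
```

The box corners are clamped to the image size because they are meant as exclusive slice ends. Keypoints are clamped to size minus one because they index a single pixel.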

```python
import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection
mp_drawing = mp.solutions.drawing_utils

# For webcam input:
cap = cv2.VideoCapture(0)
# model_selection=0: short-range model (faces within about 2 m of the camera);
# model_selection=1: full-range model (faces within about 5 m).
with mp_face_detection.FaceDetection(
        model_selection=0, min_detection_confidence=0.5) as face_detection:
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            print("Ignoring empty camera frame.")
            # If loading a video, use 'break' instead of 'continue'.
            continue

        # To improve performance, optionally mark the image as not writeable to
        # pass by reference.
        image.flags.writeable = False
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # MediaPipe expects RGB
        results = face_detection.process(image)

        # Draw the face detection annotations on the image.
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)  # back to BGR for OpenCV
        if results.detections:
            for detection in results.detections:
                mp_drawing.draw_detection(image, detection)

        # Flip the image horizontally for a selfie-view display.
        cv2.imshow('MediaPipe Face Detection', cv2.flip(image, 1))
        if cv2.waitKey(5) & 0xFF == 27:  # press Esc to quit
            break

cap.release()
cv2.destroyAllWindows()
```

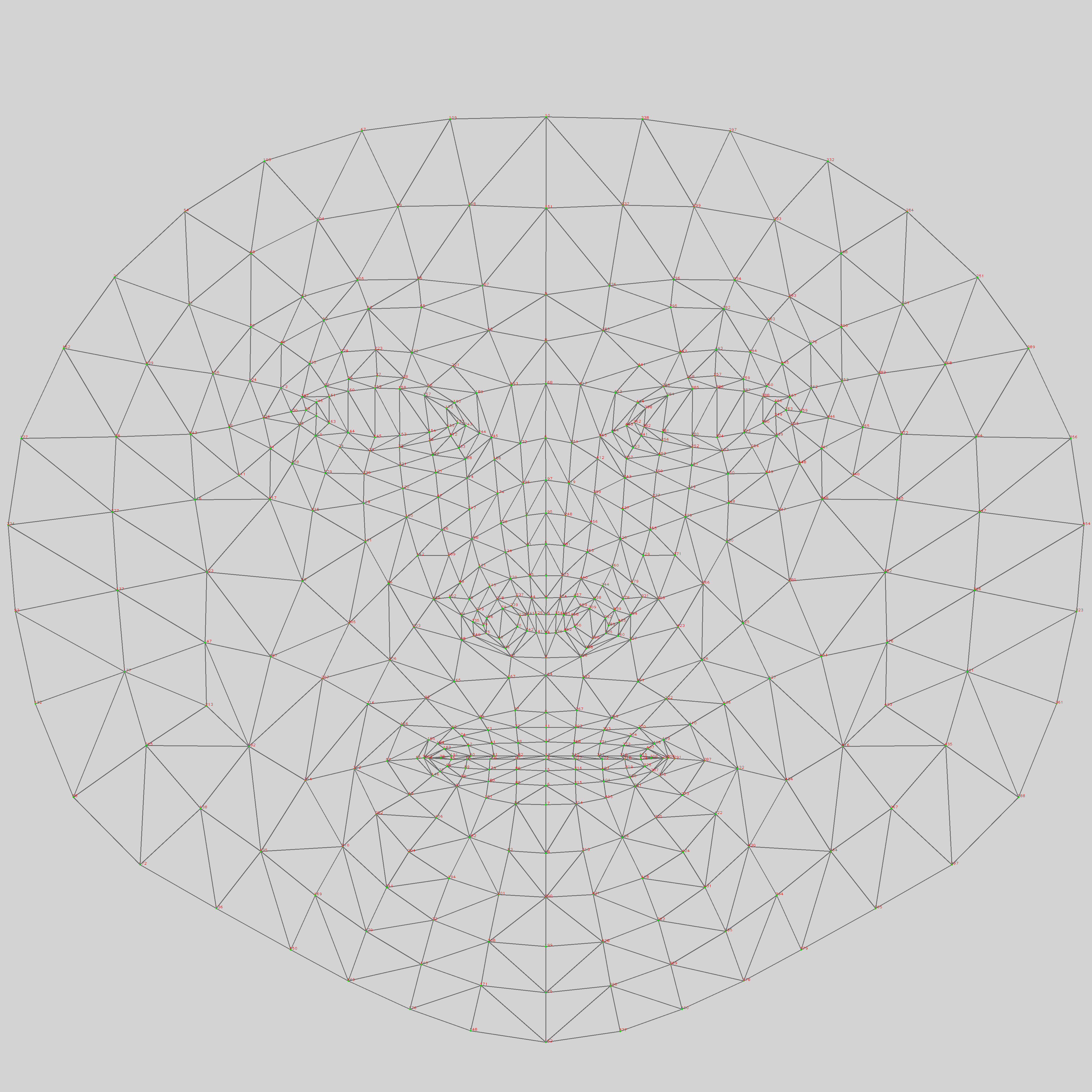

468 face landmarks (MediaPipe Face Mesh)

Diffusion Video Editing

Paper: Speech Driven Video Editing via an Audio-Conditioned Diffusion Model

Video preprocessing

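Each video frame needs a rectangular region of the face masked out. MediaPipe is used to extract facial landmarks that locate the jaw. From this information, the rectangular part of the face covering the region below the nose is masked, as shown in Figure 1. The mask is computed at run time in the data loader and applied to each frame.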
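Below is a minimal sketch of this masking step under stated assumptions. The landmarks are assumed to already be in pixel coordinates as an N x 2 array, and the caller chooses which landmark is the nose and which ones trace the jaw. The function name mask_below_nose and both index arguments are hypothetical, since the text does not give landmark indices. The rectangle rule used here runs from the nose height down to the lowest jaw point, between the outermost jaw points. It is one plausible reading of the description, not the paper's code.

```python
import numpy as np


def mask_below_nose(frame: np.ndarray, landmarks: np.ndarray,
                    nose_idx: int, jaw_idx) -> np.ndarray:
    """Return a copy of frame with the rectangle below the nose set to 0.

    frame:     H x W x C image array.
    landmarks: N x 2 array of (x, y) pixel coordinates.
    nose_idx:  index of the nose landmark (hypothetical, caller's choice).
    jaw_idx:   indices of landmarks along the jawline (hypothetical).
    """
    h, w = frame.shape[:2]
    jaw = landmarks[list(jaw_idx)]
    # Horizontal extent: outermost jaw points.
    x0 = int(np.clip(jaw[:, 0].min(), 0, w - 1))
    x1 = int(np.clip(jaw[:, 0].max(), 0, w - 1))
    # Vertical extent: nose height down to the lowest jaw point.
    y0 = int(np.clip(landmarks[nose_idx, 1], 0, h - 1))
    y1 = int(np.clip(jaw[:, 1].max(), 0, h - 1))
    masked = frame.copy()  # leave the caller's frame untouched
    masked[y0:y1 + 1, x0:x1 + 1] = 0  # inclusive rectangle
    return masked


if __name__ == "__main__":
    frame = np.full((10, 10, 3), 255, dtype=np.uint8)
    # Toy landmarks: index 0 plays the nose, 1-3 play the jaw.
    landmarks = np.array([[5, 3], [2, 8], [8, 8], [5, 9]])
    out = mask_below_nose(frame, landmarks, nose_idx=0, jaw_idx=[1, 2, 3])
    print(int((out == 0).all(axis=2).sum()))  # 49 masked pixels (7 x 7)
```

The function needs only NumPy, so a call like this could sit in a dataset's per-item loading method. That matches the description of computing the mask on the fly in the data loader instead of storing pre-masked frames.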

Reference: "Let's Learn MediaPipe (1): Face and Facial Feature Detection" (一起来学MediaPipe(一)人脸及五官定位检测), Alibaba Cloud Developer Community (aliyun.com)
