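1. Introduction

In this deployment, all ELK services run inside a k8s cluster: filebeat, logstash, elasticsearch, and kibana. elasticsearch is deployed as a cluster, and all services use version 7.17.10.

2. Deployment

Deploy the elasticsearch cluster

Before deploying the elasticsearch cluster, tune the host kernel settings. This must be done on every k8s node, otherwise the deployment fails (elasticsearch's bootstrap check requires vm.max_map_count to be at least 262144).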

vi /etc/sysctl.conf

vm.max_map_count=262144

Reload the configuration so that it takes effect

sysctl -p
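As a quick sanity check after reloading, the live value can be compared against the required minimum. The sketch below is not from the original setup: the function name `map_count_ok` is made up for illustration, and it assumes a Linux node where `/proc/sys/vm/max_map_count` is readable.

```shell
# Hypothetical helper: succeeds when a vm.max_map_count value meets the
# 262144 minimum configured above.
REQUIRED_MAX_MAP_COUNT=262144

map_count_ok() {
  # $1: current value of vm.max_map_count
  [ "$1" -ge "$REQUIRED_MAX_MAP_COUNT" ]
}

# Read the live value from procfs when it is available (Linux only).
if [ -r /proc/sys/vm/max_map_count ]; then
  current=$(cat /proc/sys/vm/max_map_count)
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current: OK"
  else
    echo "vm.max_map_count=$current: too low, expected >= $REQUIRED_MAX_MAP_COUNT"
  fi
fi
```

Running this on each node before deploying makes it easier to spot a node that was missed.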

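The following steps can be run on any master node of the k8s cluster

Create a directory for the YAML files

mkdir -p /opt/elk && cd /opt/elk

The es cluster is deployed behind a headless service. It needs PVs to store the cluster data, Services to provide access, and a StatefulSet to run the es nodes.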

Create the YAML files for the headless Service and for the externally reachable NodePort Service

vi es-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: elk
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: db
    - port: 9300
      name: inter

vi es-service-nodeport.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-nodeport
  namespace: elk
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  type: NodePort
  ports:
    - port: 9200
      name: db
      nodePort: 30017
    - port: 9300
      name: inter
      nodePort: 30018

Create the PV YAML file (NFS shared storage is used here)

vi es-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv1
spec:
  storageClassName: es-pv  # storage class name, referenced by the StatefulSet's volume claim
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volume2/k8s-data/es/es-pv1
    server: 10.1.13.99
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv2
spec:
  storageClassName: es-pv  # storage class name, referenced by the StatefulSet's volume claim
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volume2/k8s-data/es/es-pv2
    server: 10.1.13.99
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv3
spec:
  storageClassName: es-pv  # storage class name, referenced by the StatefulSet's volume claim
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /volume2/k8s-data/es/es-pv3
    server: 10.1.13.99

Create the StatefulSet YAML file

vi es-setafulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
  namespace: elk
  labels:
    app: elasticsearch
spec:
  podManagementPolicy: Parallel
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      # Tolerates the control-plane taint so pods can be scheduled onto master nodes; can be removed
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: NoSchedule
      containers:
        - image: elasticsearch:7.17.10
          name: elasticsearch
          resources:
            limits:
              cpu: 1
              memory: 2Gi
            requests:
              cpu: 0.5
              memory: 500Mi
          env:
            - name: network.host
              value: "_site_"
            - name: node.name
              value: "${HOSTNAME}"
            # Legacy setting: deprecated and ignored by elasticsearch 7.x
            - name: discovery.zen.minimum_master_nodes
              value: "2"
            # Tells a node joining the cluster where to discover the other nodes. It should list
            # host names or IPs of some nodes in the cluster; the headless service addresses are used here
            - name: discovery.seed_hosts
              value: "elasticsearch-0.elasticsearch.elk.svc.cluster.local,elasticsearch-1.elasticsearch.elk.svc.cluster.local,elasticsearch-2.elasticsearch.elk.svc.cluster.local"
            # Used only when a brand-new cluster bootstraps: lists the master-eligible node names
            # that take part in the first master election
            - name: cluster.initial_master_nodes
              value: "elasticsearch-0,elasticsearch-1,elasticsearch-2"
            - name: cluster.name
              value: "es-cluster"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
          ports:
            - containerPort: 9200
              name: db
              protocol: TCP
            - name: inter
              containerPort: 9300
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
  volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        storageClassName: "es-pv"
        accessModes: [ "ReadWriteMany" ]
        resources:
          requests:
            storage: 30Gi

Create the namespace for the elk services

kubectl create namespace elk

Create the resources from the YAML files

kubectl create -f es-pv.yaml

kubectl create -f es-service-nodeport.yaml

kubectl create -f es-service.yaml

kubectl create -f es-setafulset.yaml
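The `discovery.seed_hosts` value in the StatefulSet follows the stable DNS name that a headless Service gives each StatefulSet pod: `<pod>.<service>.<namespace>.svc.cluster.local`. If the replica count ever changes, the list can be regenerated instead of typed by hand. A minimal sketch, assuming the `cluster.local` cluster domain used above; `es_seed_hosts` is a hypothetical helper name.

```shell
# Hypothetical helper: build a discovery.seed_hosts value for a StatefulSet
# that is governed by a headless Service.
es_seed_hosts() {
  # $1: StatefulSet name, $2: headless Service name, $3: namespace, $4: replicas
  hosts=""
  i=0
  while [ "$i" -lt "$4" ]; do
    # Append a comma only when the list already has an entry.
    hosts="${hosts:+$hosts,}$1-$i.$2.$3.svc.cluster.local"
    i=$((i + 1))
  done
  printf '%s\n' "$hosts"
}

# Prints the same three-host list that the StatefulSet above uses.
es_seed_hosts elasticsearch elasticsearch elk 3
```

The node names in `cluster.initial_master_nodes` are the short pod names (`elasticsearch-0` and so on), so only the seed host list needs the full DNS suffix.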

Check that the es pods started correctly

kubectl get pod -n elk
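With several pods in the namespace, it can help to print only the ones that are not ready yet. A rough sketch, assuming the default column layout of `kubectl get pod --no-headers` (NAME, READY, STATUS, and so on); `unhealthy_pods` is a hypothetical helper name.

```shell
# Hypothetical helper: read `kubectl get pod --no-headers` output on stdin and
# print the names of pods that are not Running or not fully ready.
unhealthy_pods() {
  awk '{
    split($2, ready, "/")   # READY column looks like "1/1"
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage on a machine with kubectl access (not executed here):
#   kubectl get pod -n elk --no-headers | unhealthy_pods
```

An empty result means every pod in the namespace is Running with all containers ready.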

Check that the elasticsearch cluster is healthy

http://10.1.60.119:30017/_cluster/state/master_node,nodes?pretty

The response should list all three es nodes in the cluster

The elasticsearch cluster deployment is complete
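The same check can be scripted by counting the nodes that elasticsearch reports through its `_cat/nodes` API. This is an illustrative sketch, not part of the original steps: `count_nodes` and `es_node_count` are hypothetical names, and it assumes `curl` is installed and the NodePort address above is reachable.

```shell
# Hypothetical helper: count the non-empty lines on stdin
# (one line per node in `_cat/nodes?h=name` output).
count_nodes() {
  awk 'NF { n++ } END { print n + 0 }'
}

# Hypothetical helper: ask the cluster at host:port ($1) how many nodes joined.
es_node_count() {
  curl -s "http://$1/_cat/nodes?h=name" | count_nodes
}

# Usage (not executed here):
#   [ "$(es_node_count 10.1.60.119:30017)" -eq 3 ] && echo "all 3 nodes joined"
```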

Deploy the kibana service

kibana is deployed with a Deployment controller and exposed externally through a Service

Create the Deployment YAML file

vi kibana-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: elk
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: NoSchedule
      containers:
        - name: kibana
          image: kibana:7.17.10
          resources:
            limits:
              cpu: 1
              memory: 1G
            requests:
              cpu: 0.5
              memory: 500Mi
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch:9200
          ports:
            - containerPort: 5601
              protocol: TCP

Create the Service YAML file

vi kibana-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: elk
spec:
  ports:
    - port: 5601
      protocol: TCP
      targetPort: 5601
      nodePort: 30019
  type: NodePort
  selector:
    app: kibana

Create the resources from the YAML files

kubectl create -f kibana-service.yaml

kubectl create -f kibana-deployment.yaml

Check that kibana is running

kubectl get pod -n elk

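Deploy the logstash service

logstash is also deployed with a Deployment controller. It needs a ConfigMap to hold the logstash configuration and a Service so that other workloads can reach it

Edit the ConfigMap YAML file

vi logstash-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-configmap
  namespace: elk
  labels:
    app: logstash
data:
  logstash.conf: |
    input {
      beats {
        port => 5044    # port that receives the collected logs
        # codec => "json"
      }
    }
    filter {
    }
    output {
      # The commented-out stdout block prints collected events to logstash's own log,
      # which is mainly useful for testing what the collected logs contain
      # stdout {
      #   codec => rubydebug
      # }
      elasticsearch {
        hosts => "elasticsearch:9200"
        index => "nginx-%{+YYYY.MM.dd}"
      }
    }

Edit the Deployment YAML file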

vi logstash-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: elk
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
        - name: logstash
          image: logstash:7.17.10
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 5044
          volumeMounts:
            - name: config-volume
              mountPath: /usr/share/logstash/pipeline/
      volumes:
        - name: config-volume
          configMap:
            name: logstash-configmap
            items:
              - key: logstash.conf
                path: logstash.conf

Edit the Service YAML file (I only collect logs from services deployed inside k8s, so logstash is not exposed externally)

vi logstash-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: logstash
  namespace: elk
spec:
  ports:
    - port: 5044
      targetPort: 5044
      protocol: TCP
  selector:
    app: logstash
  type: ClusterIP

Create the resources from the YAML files

kubectl create -f logstash-configmap.yaml

kubectl create -f logstash-service.yaml

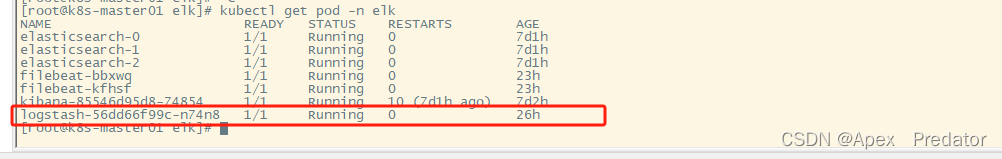

kubectl create -f logstash-deployment.yaml

Check that the logstash service started correctly

kubectl get pod -n elk
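The `index => "nginx-%{+YYYY.MM.dd}"` setting in the logstash ConfigMap writes to one index per day, named from each event's `@timestamp` in UTC. To predict which index a given day's logs land in, for instance when listing indices, a small helper can build the name. This is an illustrative sketch; `nginx_index_for` is a hypothetical name and the date in the usage line is only an example.

```shell
# Hypothetical helper: map a date (YYYY-MM-DD) to the daily index name that
# the "nginx-%{+YYYY.MM.dd}" pattern produces.
nginx_index_for() {
  # $1: date as YYYY-MM-DD
  printf 'nginx-%s\n' "$(printf '%s' "$1" | sed 's/-/./g')"
}

# Today's index name, using UTC to match logstash's date formatting.
nginx_index_for "$(date -u +%Y-%m-%d)"

# Usage against the es NodePort (not executed here):
#   curl -s "http://10.1.60.119:30017/_cat/indices/$(nginx_index_for 2024-01-31)?v"
```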

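Deploy the filebeat service

filebeat is deployed as a DaemonSet on all worker nodes of the k8s cluster to collect container logs. It also uses a ConfigMap to hold its configuration, and it needs RBAC permissions: filebeat's autodiscover feature queries the k8s API to find the pods whose logs it should collect, so its service account must be granted access.

Edit the RBAC YAML file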

vi filebeat-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: elk
  labels:
    app: filebeat
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    app: filebeat
rules:
  - apiGroups: [""]
    resources: ["namespaces", "pods", "nodes"]  # resources the account may access
    verbs: ["get", "list", "watch"]             # operations the account may perform
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
  - kind: ServiceAccount
    name: filebeat
    namespace: elk
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io

Edit the ConfigMap YAML file

vi filebeat-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: elk
data:
  filebeat.yml: |
    filebeat.autodiscover:          # use filebeat's autodiscover feature
      providers:
        - type: kubernetes          # kubernetes provider
          templates:                # templates that select what to collect
            - condition:
                and:
                  - or:
                      - equals:
                          kubernetes.labels:   # select pods by label
                            app: foundation
                      - equals:
                          kubernetes.labels:
                            app: api-gateway
                  - equals:                    # select pods by namespace
                      kubernetes.namespace: java-service
              config:               # log input, using the k8s log paths
                - type: container
                  symlinks: true
                  paths:            # build the path from variables so that only the matching pod's logs are collected
                    - /var/log/containers/${data.kubernetes.pod.name}_${data.kubernetes.namespace}_${data.kubernetes.container.name}-*.log
    output.logstash:
      hosts: ['logstash:5044']

For more on filebeat's k8s autodiscover, see the official Elastic documentation, which describes many more kubernetes fields that can be used

Reference: Autodiscover | Filebeat Reference [8.12] | Elastic
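The `paths` entry in the filebeat configuration relies on the naming scheme of the symlinks under `/var/log/containers`: `<pod name>_<namespace>_<container name>-<container id>.log`. The sketch below expands the same pattern by hand, which can be handy for checking on a node that matching files exist. `container_log_glob` is a hypothetical helper, and the pod name in the usage line is a made-up example.

```shell
# Hypothetical helper: build the glob that the autodiscover template expands
# to for one container.
container_log_glob() {
  # $1: pod name, $2: namespace, $3: container name
  printf '/var/log/containers/%s_%s_%s-*.log\n' "$1" "$2" "$3"
}

# Usage on a worker node (pod name is a made-up example, not executed here):
#   ls -l $(container_log_glob foundation-7d9c5b6f4-abcde java-service foundation)
```

If the `ls` finds nothing for a pod that is running on that node, the label or namespace condition is usually not the problem; the path pattern or the host mounts are the first things to check.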

Edit the DaemonSet YAML file

vi filebeat-daemonset.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: elk
  labels:
    app: filebeat
spec:
  selector:
    matchLabels:
      app: filebeat
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
        - name: filebeat
          image: elastic/filebeat:7.17.10
          args: ["-c", "/etc/filebeat.yml", "-e"]
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          securityContext:
            runAsUser: 0
          resources:
            limits:
              cpu: 200m
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - name: config
              mountPath: /etc/filebeat.yml
              readOnly: true
              subPath: filebeat.yml
            # Three log paths are mounted because the files under /var/log/containers
            # are symlinks to files in other directories
            - name: log
              mountPath: /var/log/containers
              readOnly: true
            # Target of the /var/log/containers symlinks; these are still symlinks, not the real files
            - name: pod-log
              mountPath: /var/log/pods
              readOnly: true
            # The real log files (with the Docker runtime); without all three mounts filebeat cannot read the log contents
            - name: containers-log
              mountPath: /var/lib/docker/containers
              readOnly: true
      volumes:
        - name: config
          configMap:
            defaultMode: 0600
            name: filebeat-config
        - name: log
          hostPath:
            path: /var/log/containers
        - name: pod-log
          hostPath:
            path: /var/log/pods
        - name: containers-log
          hostPath:
            path: /var/lib/docker/containers

Create the resources from the YAML files

kubectl create -f filebeat-rbac.yaml

kubectl create -f filebeat-configmap.yaml

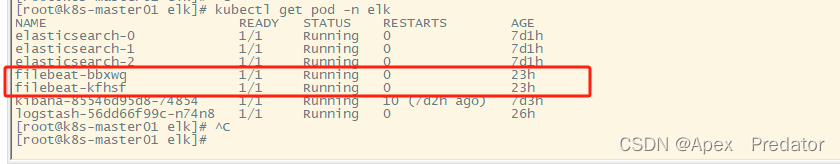

kubectl create -f filebeat-daemonset.yaml

Check that the filebeat service started correctly

kubectl get pod -n elk
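If the filebeat pods run but collect nothing, one thing worth ruling out is the RBAC grant. `kubectl auth can-i` can impersonate the service account and report whether each permission from the ClusterRole is actually in effect. A minimal sketch, assuming the account running kubectl is allowed to impersonate service accounts; `sa_subject` and `check_filebeat_rbac` are hypothetical helper names.

```shell
# Hypothetical helper: build the user name that k8s assigns to a service account.
sa_subject() {
  # $1: namespace, $2: service account name
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: print yes/no for every verb and resource in the
# filebeat ClusterRole, evaluated as the filebeat service account.
check_filebeat_rbac() {
  for resource in namespaces pods nodes; do
    for verb in get list watch; do
      printf '%s %s: ' "$verb" "$resource"
      kubectl auth can-i "$verb" "$resource" --as="$(sa_subject elk filebeat)"
    done
  done
}

# Usage on a machine with kubectl access (not executed here):
#   check_filebeat_rbac
```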

This completes the deployment of the elk services inside the k8s cluster
