一个视频:https://www.bilibili.com/video/BV1mb4y1y7EB/?spm_id_from=333.337.search-card.all.click&vd_source=7a1a0bc74158c6993c7355c5490fc600

这里有个视频,讲解得更加生动形象一些

总得来说,词袋模型(Bow, bag-of-words) 是最简单的 “文本 —> 矢量”(把文本转为矢量) 模型

二元分类和多元分类的两个例子放在末尾

以下是 Claude3 的解释,我们慢慢看

The bag-of-words model is a simplifying representation used in natural language processing (NLP). In this representation, a text (such as a sentence or a document) is represented as an unordered collection of words, disregarding grammar and word order.

bag-of-words 模型是 NLP领域 里的一个简化表示方法。在这种表示方法里,一个文本(比如一个句子或者是一个文档) 会被表示成一个乱序的单词集合,无视里面的语法和单词顺序。

Here’s how it works:

- A vocabulary of known words is created from the corpus of texts.

- Each text is represented as a vector, with one dimension for each word in the vocabulary.

- If a word appears in the text, its value in the vector is non-zero (typically 1 or a number representing frequency); otherwise, it is zero.

For example, if the vocabulary is [“hello”, “goodbye”, “computer”], then the sentence “hello hello computer” would be represented by the vector [2, 0, 1].

如下,是 bag-of-words 的工作方法:

- 一堆已知单词的词汇表是从语料库里创建出来的

- 每一个文本都可以使用一个向量表示,词汇表中的每一个单词都可以使用一个一维向量来表示

- 如果一个单词在文本中出现,它在矢量中的值是 non-zero,否则就是 0。

举个例子,如果词汇表是 [“hello”, “goodbye”, “computer”],那么这句 “hello hello computer” 就可以用矢量 [2, 0, 1] 表示

The advantages of this model are its simplicity and the fact that it allows for straightforward application of techniques from fields like machine learning and information retrieval.

However, the bag-of-words model has several shortcomings:

- It disregards word order and grammar, which can be very important in natural language.

- It assumes that all words are equally important, ignoring semantics.

- For large vocabularies, the resulting vector can be very high-dimensional and sparse.

Despite these limitations, the bag-of-words model can serve as a baseline for more advanced NLP techniques and may still have some uses in simple applications.

这个模型的优点是:很简单,并且它允许直接应用来自机器学习和信息检索等领域的技术。

然而,bag-of-words 模型也有几个缺点:

- 它忽略了单词的顺序和语法结构,而这在自然语言中是非常重要的。 (单词向量无法展示单词顺序和语法结构)

- 它假设所有单词同等重要,忽视了词义语义信息。 (假设所有单词同等重要)

- 对于大型词汇表,生成的向量往往是非常高维且稀疏的。 (大型词汇表中,向量高维且稀疏)

尽管有这些限制,bag-of-words 模型仍然可以作为一些先进的 NLP技术的 baseline,并且在一些简单的应用中都是有用例的

In modern NLP systems, more advanced techniques like word embeddings (e.g., Word2Vec, GloVe, BERT) are commonly used to capture richer semantic and syntactic information.

在现代NLP系统中,通常采用更先进的技术,如词嵌入(例如Word2Vec、GloVe、BERT)来捕获更丰富的语义和语法信息。

二元分类和多元分类的两个例子放在末尾

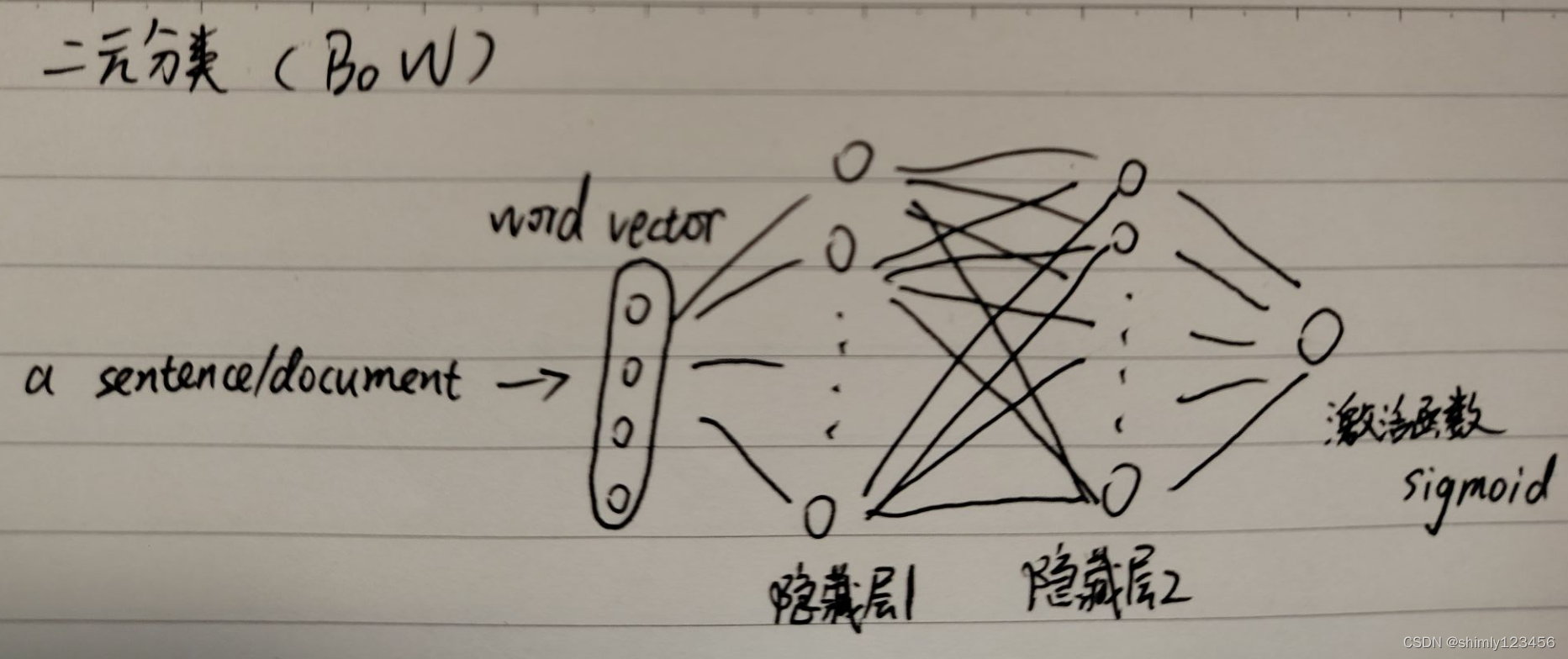

二元分类:

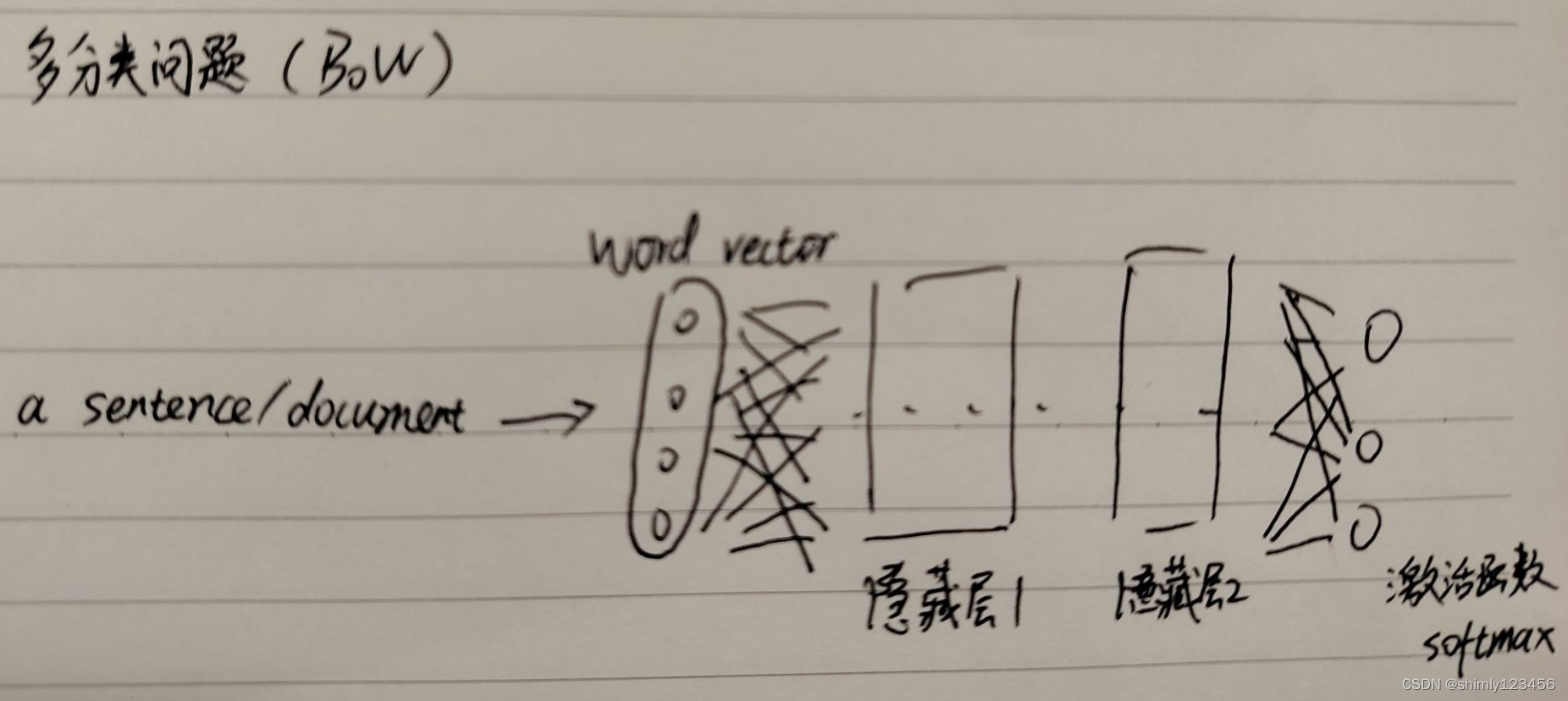

多元分类:

)

)

)

)

)

内部时钟定时与外部时钟 TIM小总结】)

)

![[蓝桥杯 2021 省 AB2] 完全平方数](http://pic.xiahunao.cn/[蓝桥杯 2021 省 AB2] 完全平方数)

)