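Contents

1. Understanding Docker Networking

1.1 Run a tomcat container

1.2 View the container's internal network address

1.3 Test connectivity

2. How It Works

2.1 View the network interfaces

2.2 Start another container and check the interfaces

2.3 Test whether tomcat01 and tomcat02 can ping each other

2.4 Removing a container removes its veth pair

2.5 Conclusion

3. --link

3.1 Pinging between two containers by name fails

3.2 Solution

3.2.1 Create a new tomcat container with the --link option

3.2.2 Communicate between tomcat03 and tomcat02

3.3.3 View Docker network information

3.3.4 Investigation

3.4.5 Conclusion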

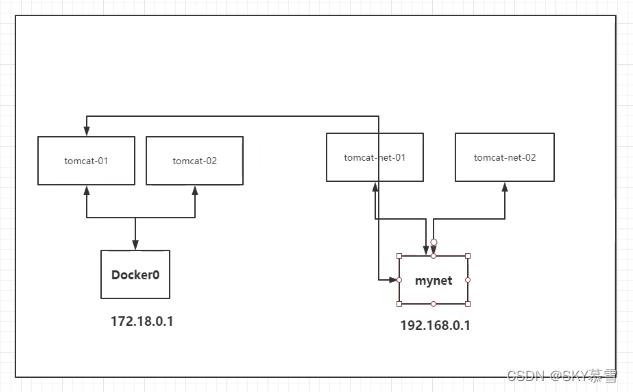

4. User-Defined Networks

4.1 Test

4.2 Create two containers and inspect the custom network again

4.3 Communication between the two containers

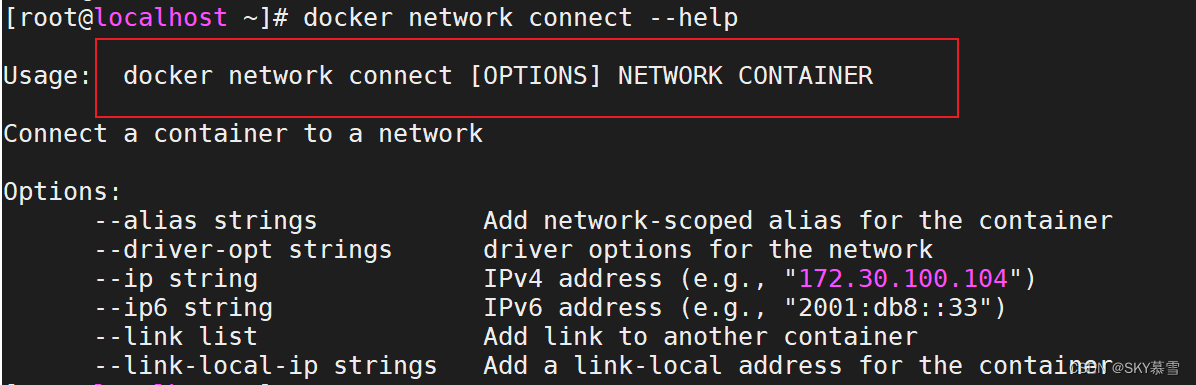

5. Connecting Networks

5.1 Connect tomcat01 to mynet

5.2 Test connectivity

1. Understanding Docker Networking

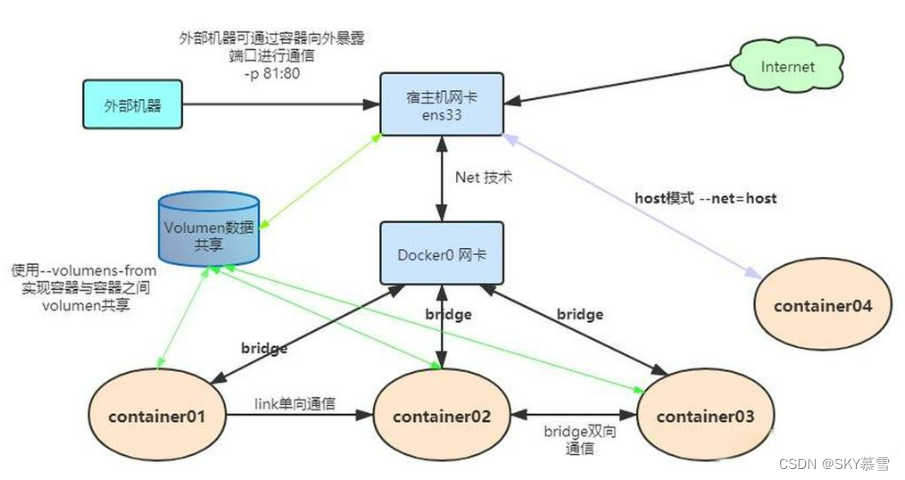

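In Docker, the network is the key component that connects container instances: it lets containers communicate with one another and with the outside world. Docker provides several network modes and drivers to suit different scenarios.

Configuring and using Docker networking properly enables communication and data sharing between containers while preserving their security and isolation.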

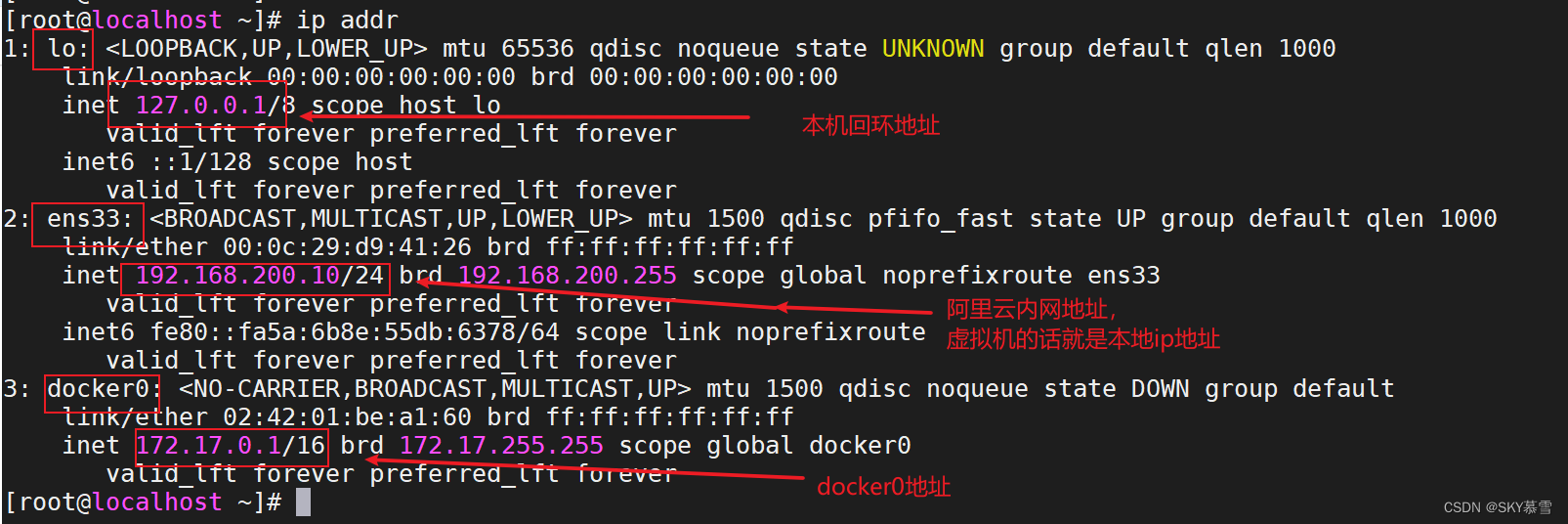

View the host's network interfaces

1.1 Run a tomcat container

[root@localhost ~]# docker run -d -p 8085:8080 --name tomcat01 tomcat

Unable to find image 'tomcat:latest' locally

latest: Pulling from library/tomcat

0e29546d541c: Pull complete

9b829c73b52b: Pull complete

cb5b7ae36172: Pull complete

6494e4811622: Pull complete

668f6fcc5fa5: Pull complete

dc120c3e0290: Pull complete

8f7c0eebb7b1: Pull complete

77b694f83996: Pull complete

0f611256ec3a: Pull complete

4f25def12f23: Pull complete

Digest: sha256:9dee185c3b161cdfede1f5e35e8b56ebc9de88ed3a79526939701f3537a52324

Status: Downloaded newer image for tomcat:latest

bd6a51c579d1dd64812f60e009c61f2ffe91a2434d088fe6749a07ed6b16e1aa

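1.2 View the container's internal network address

When a container starts, it receives an IP address assigned by Docker.

# If the container does not have the ip command, install it first

[root@localhost ~]# docker exec -it tomcat01 sh -c "apt-get update && apt-get install -y iproute2"

# View the container's internal network address

[root@localhost ~]# docker exec -it tomcat01 ip addr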

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
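The container's address can also be read from the host without installing anything inside the container. The sketch below wraps docker inspect with a Go template; the helper name container_ip is hypothetical, and it assumes the container is attached to a single network (with several networks, the addresses would be printed back to back).

```shell
# container_ip is a hypothetical helper name, not part of Docker.
# It asks the Docker daemon for the container's IP address instead of
# running `ip addr` inside the container.
container_ip() {
  # $1 = container name or ID
  docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Usage (needs a running Docker daemon):
#   container_ip tomcat01
```

For tomcat01 this should report the same address that ip addr shows for eth0.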

1.3 Test connectivity

Ping the container from the Linux host; the ping succeeds.

[root@localhost ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.096 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.063 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.051 ms

^C

--- 172.17.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.051/0.070/0.096/0.019 ms

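2. How It Works

2.1 View the network interfaces

Every time a container is started, Docker assigns it an IP address. As soon as Docker is installed, the host gets a docker0 interface that works in bridge mode, and containers are attached to it with veth-pair technology.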

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:d9:41:26 brd ff:ff:ff:ff:ff:ff
    inet 192.168.200.10/24 brd 192.168.200.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::fa5a:6b8e:55db:6378/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:01:be:a1:60 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:1ff:febe:a160/64 scope link
       valid_lft forever preferred_lft forever

5: veth4a3fbdb@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 6e:5e:99:86:6b:25 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::6c5e:99ff:fe86:6b25/64 scope link
       valid_lft forever preferred_lft forever

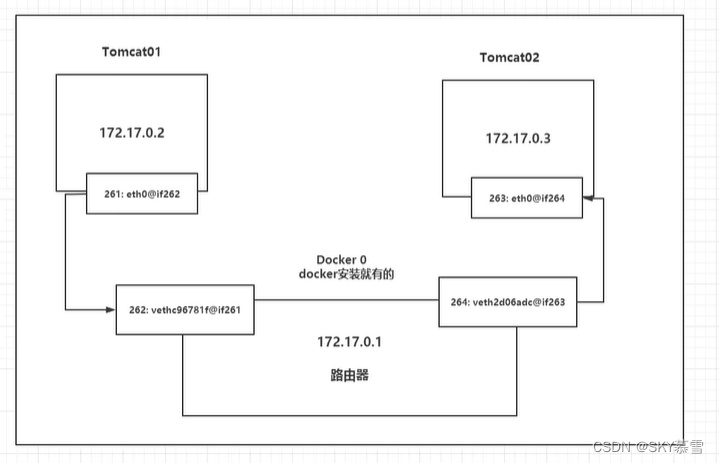

2.2 Start another container and check the interfaces

Another pair of interfaces appears.

# Create the tomcat02 container

[root@localhost ~]# docker run -d -P --name tomcat02 tomcat

d55f7582c96b12181485f5c31448c6a0d682ee9b39129652c4cfd9c3b03968a5

# Check again

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:d9:41:26 brd ff:ff:ff:ff:ff:ff
    inet 192.168.200.10/24 brd 192.168.200.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::fa5a:6b8e:55db:6378/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:01:be:a1:60 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:1ff:febe:a160/64 scope link
       valid_lft forever preferred_lft forever

5: veth4a3fbdb@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 6e:5e:99:86:6b:25 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::6c5e:99ff:fe86:6b25/64 scope link
       valid_lft forever preferred_lft forever

7: veth347eeeb@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether c6:52:9e:89:1d:e4 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::c452:9eff:fe89:1de4/64 scope link
       valid_lft forever preferred_lft forever

# View the IP address inside the container

[root@localhost ~]# docker exec -it tomcat02 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

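The interfaces that come with each container always appear in pairs.

A veth pair is a pair of virtual device interfaces that always exist together: one end of each interface connects to the protocol stack, and the two interfaces are connected to each other.

Because of this property, a veth pair acts as a bridge that links virtual network devices.

OpenStack, the connections between Docker containers, and OVS connections all use veth-pair technology.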
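The pairing is visible in the interface names: in an entry written as N: name@ifM, N is the interface's own index and M is the index of its peer. As a small sketch, the hypothetical helper below (the name veth_pairs is an assumption for illustration) extracts both numbers from ip addr output so that the host side and the container side can be matched up.

```shell
# veth_pairs is a hypothetical helper: it reads `ip addr` output on stdin and
# prints "index peer_index name" for every interface written as name@ifN.
veth_pairs() {
  sed -n 's/^\([0-9][0-9]*\): \([^@: ]*\)@if\([0-9][0-9]*\):.*/\1 \3 \2/p'
}

# Usage:
#   ip addr | veth_pairs                          # host side
#   docker exec tomcat01 ip addr | veth_pairs     # container side
```

Applied to the output shown earlier, the host line for veth4a3fbdb yields index 5 with peer 4, and tomcat01's eth0 yields index 4 with peer 5, so the two are the ends of one pair.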

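2.3 Test whether tomcat01 and tomcat02 can ping each other

# If the ping command is missing, install it first

[root@localhost ~]# docker exec -it tomcat01 sh -c "apt-get update && apt-get install -y iputils-ping"

[root@localhost ~]# docker exec -it tomcat02 sh -c "apt-get update && apt-get install -y iputils-ping"

Ping tomcat01 from tomcat02; the two containers can communicate.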

[root@localhost ~]# docker exec -it tomcat02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.131 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.099 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.122 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.094 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.055 ms

^C

--- 172.17.0.2 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4000ms

rtt min/avg/max/mdev = 0.055/0.100/0.131/0.026 ms

2.4 Removing a container removes its veth pair

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:d9:41:26 brd ff:ff:ff:ff:ff:ff
    inet 192.168.200.10/24 brd 192.168.200.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::fa5a:6b8e:55db:6378/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:01:be:a1:60 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:1ff:febe:a160/64 scope link
       valid_lft forever preferred_lft forever

5: veth4a3fbdb@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 6e:5e:99:86:6b:25 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::6c5e:99ff:fe86:6b25/64 scope link
       valid_lft forever preferred_lft forever

7: veth347eeeb@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether c6:52:9e:89:1d:e4 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::c452:9eff:fe89:1de4/64 scope link
       valid_lft forever preferred_lft forever

[root@localhost ~]# docker rm -f tomcat01

tomcat01

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:d9:41:26 brd ff:ff:ff:ff:ff:ff
    inet 192.168.200.10/24 brd 192.168.200.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::fa5a:6b8e:55db:6378/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:01:be:a1:60 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:1ff:febe:a160/64 scope link
       valid_lft forever preferred_lft forever

7: veth347eeeb@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether c6:52:9e:89:1d:e4 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::c452:9eff:fe89:1de4/64 scope link
       valid_lft forever preferred_lft forever

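2.5 Conclusion

Containers can ping one another.

tomcat01 and tomcat02 share the same bridge, docker0, which acts as their common router.

Unless a network is specified, every container is attached to docker0, and Docker assigns it an available IP address by default.

Summary

Docker uses Linux bridging; on the host, docker0 is the bridge that the containers attach to.

All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently (for example, when passing files over the internal network).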
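To make the idea of "an available IP on docker0" concrete: every address Docker handed out in this section lies inside docker0's subnet, 172.17.0.0/16. The following sketch checks subnet membership with plain shell arithmetic; the helper names ip_to_int and in_subnet are hypothetical, and the code is written for bash.

```shell
# Hypothetical helpers (not Docker commands), written for bash.

# Convert a dotted-quad IPv4 address into a single integer.
ip_to_int() {
  local IFS=.
  set -- $1          # split the address into its four octets
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside network $2 (CIDR form, e.g. 172.17.0.0/16).
in_subnet() {
  local addr net bits mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# tomcat01's address is on the docker0 subnet; the host's ens33 address is not.
in_subnet 172.17.0.2 172.17.0.0/16 && echo "172.17.0.2 is on docker0's subnet"
in_subnet 192.168.200.10 172.17.0.0/16 || echo "192.168.200.10 is not"
```

Two containers whose addresses pass the same check sit on the same bridge and can reach each other directly, which is what the ping test in 2.3 showed.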

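3. --link

Goal: reach a container by name, so that a change of IP address does not force the project to be reconfigured or restarted.

3.1 Pinging between two containers by name fails

tomcat01 was removed in 2.4, so it has been started again before this test (note its new container ID).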

[root@localhost ~]# docker ps

CONTAINER ID   IMAGE     COMMAND             CREATED         STATUS         PORTS                                         NAMES
b914c96e16f9   tomcat    "catalina.sh run"   9 seconds ago   Up 9 seconds   0.0.0.0:32770->8080/tcp, :::32770->8080/tcp   tomcat01
d55f7582c96b   tomcat    "catalina.sh run"   14 hours ago    Up 14 hours    0.0.0.0:32768->8080/tcp, :::32768->8080/tcp   tomcat02

[root@localhost ~]# docker exec -it tomcat02 ping tomcat01

ping: tomcat01: Name or service not known

3.2 Solution

3.2.1 Create a new tomcat container with the --link option

[root@localhost ~]# docker run -d -P --name tomcat03 --link tomcat02 tomcat

633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0

If the container has no ping command, install it:

[root@localhost ~]# docker exec -it tomcat03 apt-get update

[root@localhost ~]# docker exec -it tomcat03 apt-get install iputils-ping

3.2.2 Communicate between tomcat03 and tomcat02

[root@localhost ~]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.105 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.099 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.087 ms

^C

--- tomcat02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.087/0.097/0.105/0.007 ms

Using --link solves the name-based connectivity problem.

Now try the reverse direction, from tomcat02 to tomcat03. It does not work:

[root@localhost ~]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

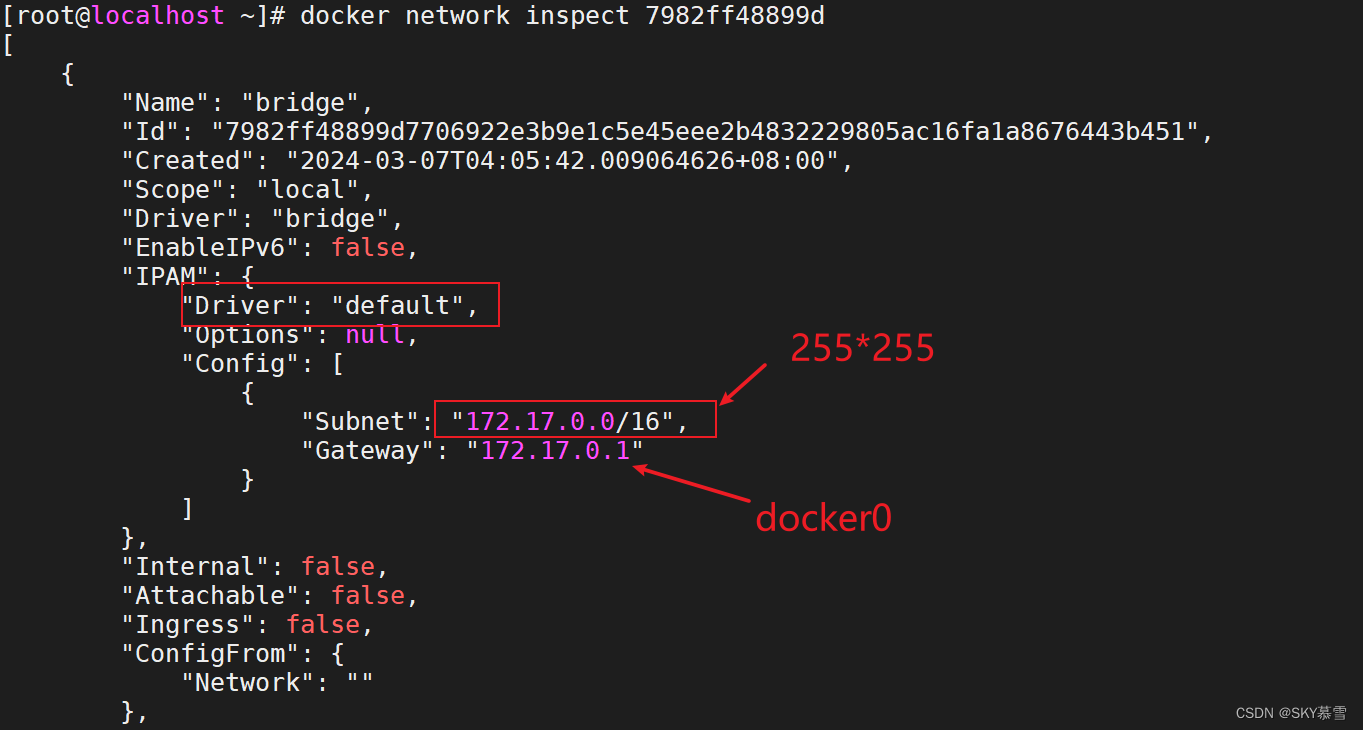

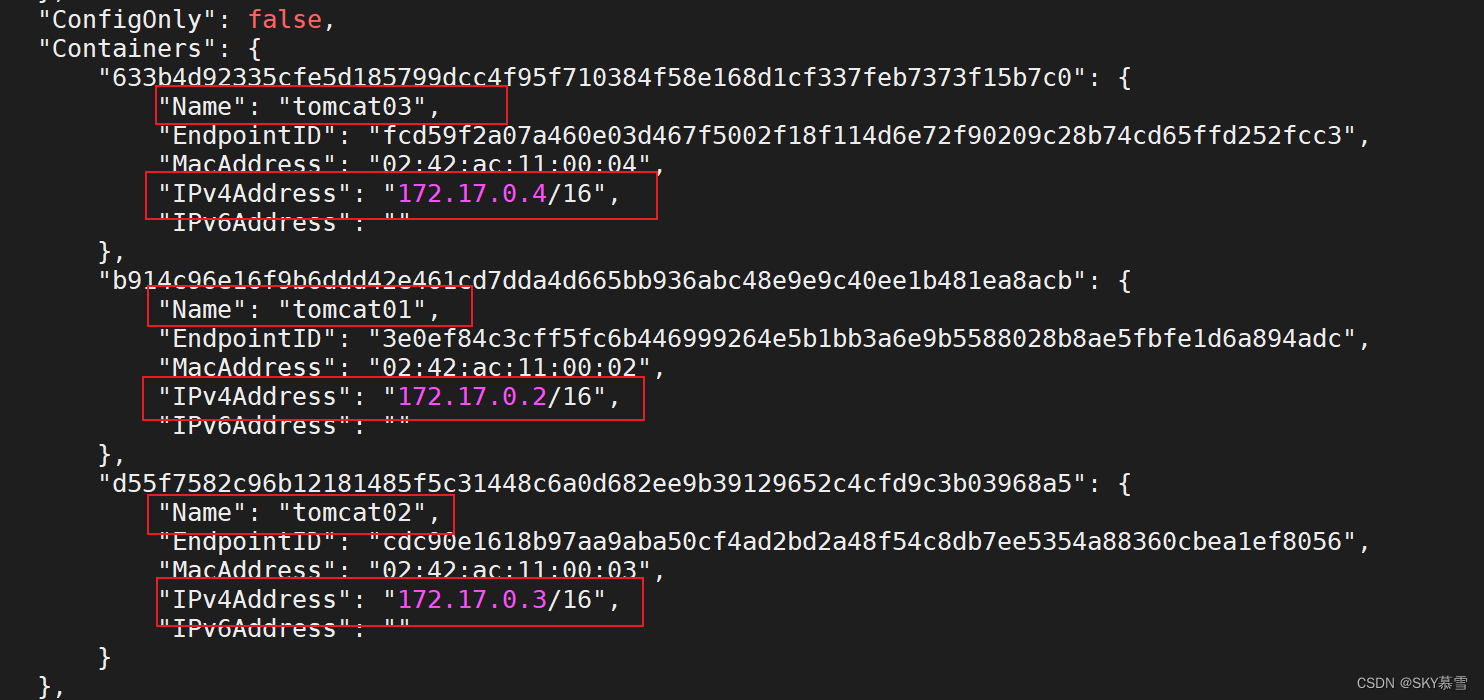

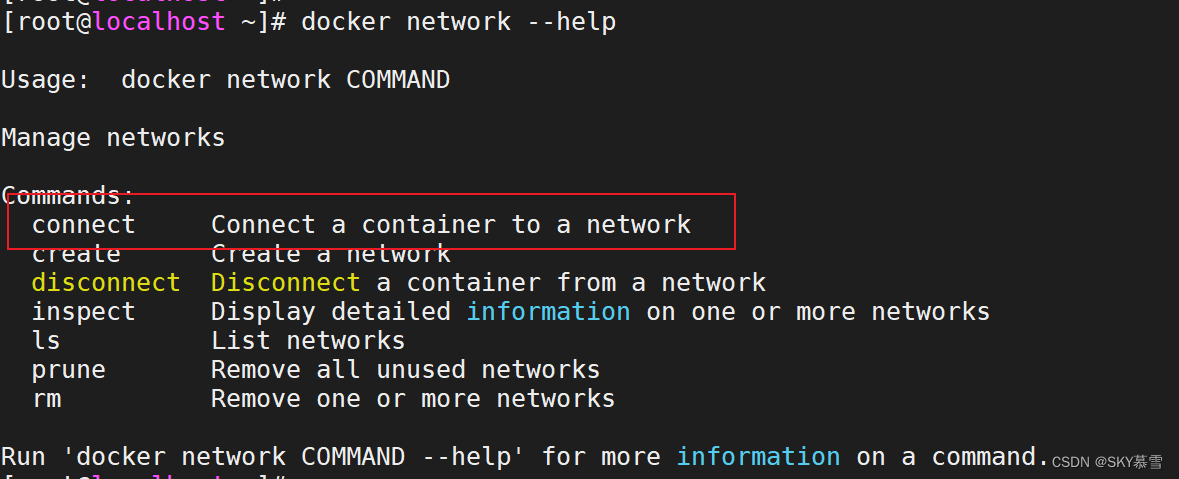

3.3.3 View Docker network information

[root@localhost ~]# docker network --help

Usage:  docker network COMMAND

Manage networks

Commands:
  connect     Connect a container to a network
  create      Create a network
  disconnect  Disconnect a container from a network
  inspect     Display detailed information on one or more networks
  ls          List networks
  prune       Remove all unused networks
  rm          Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

7982ff48899d bridge bridge local

2681eab69678 host host local

858371f13c83 none null local

[root@localhost ~]# docker network inspect 7982ff48899d

[
    {
        "Name": "bridge",
        "Id": "7982ff48899d7706922e3b9e1c5e45eee2b4832229805ac16fa1a8676443b451",
        "Created": "2024-03-07T04:05:42.009064626+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0": {
                "Name": "tomcat03",
                "EndpointID": "fcd59f2a07a460e03d467f5002f18f114d6e72f90209c28b74cd65ffd252fcc3",
                "MacAddress": "02:42:ac:11:00:04",
                "IPv4Address": "172.17.0.4/16",
                "IPv6Address": ""
            },
            "b914c96e16f9b6ddd42e461cd7dda4d665bb936abc48e9e9c40ee1b481ea8acb": {
                "Name": "tomcat01",
                "EndpointID": "3e0ef84c3cff5fc6b446999264e5b1bb3a6e9b5588028b8ae5fbfe1d6a894adc",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            },
            "d55f7582c96b12181485f5c31448c6a0d682ee9b39129652c4cfd9c3b03968a5": {
                "Name": "tomcat02",
                "EndpointID": "cdc90e1618b97aa9aba50cf4ad2bd2a48f54c8db7ee5354a88360cbea1ef8056",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]

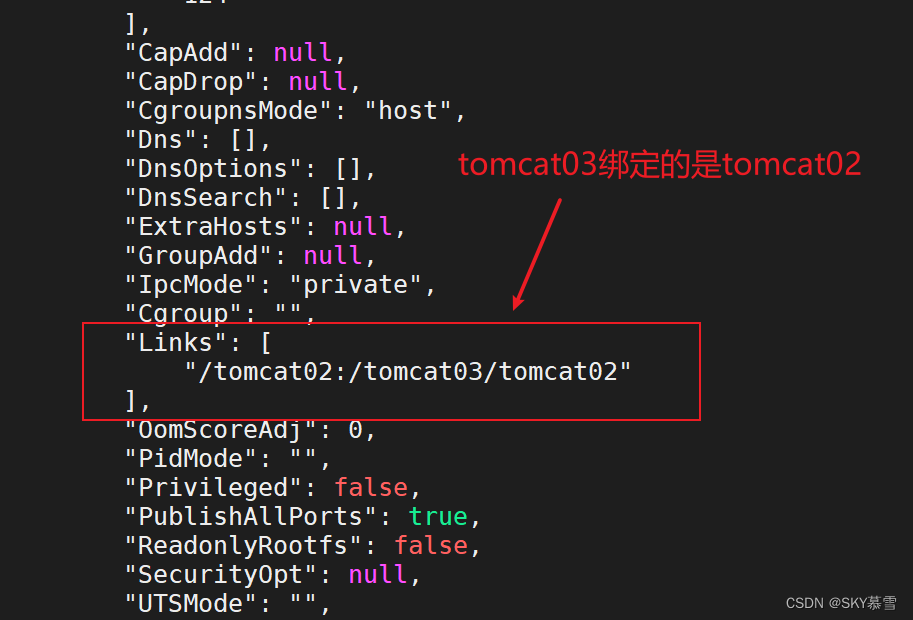

3.3.4 Investigation

Inspect the tomcat03 container:

[root@localhost ~]# docker ps

CONTAINER ID   IMAGE     COMMAND             CREATED          STATUS          PORTS                                         NAMES
633b4d92335c   tomcat    "catalina.sh run"   14 minutes ago   Up 14 minutes   0.0.0.0:32771->8080/tcp, :::32771->8080/tcp   tomcat03
b914c96e16f9   tomcat    "catalina.sh run"   20 minutes ago   Up 20 minutes   0.0.0.0:32770->8080/tcp, :::32770->8080/tcp   tomcat01
d55f7582c96b   tomcat    "catalina.sh run"   15 hours ago     Up 15 hours     0.0.0.0:32768->8080/tcp, :::32768->8080/tcp   tomcat02

[root@localhost ~]# docker inspect 633b4d92335c

[{"Id": "633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0","Created": "2024-03-07T11:51:49.679486273Z","Path": "catalina.sh","Args": ["run"],"State": {"Status": "running","Running": true,"Paused": false,"Restarting": false,"OOMKilled": false,"Dead": false,"Pid": 13973,"ExitCode": 0,"Error": "","StartedAt": "2024-03-07T11:51:49.966799121Z","FinishedAt": "0001-01-01T00:00:00Z"},"Image": "sha256:fb5657adc892ed15910445588404c798b57f741e9921ff3c1f1abe01dbb56906","ResolvConfPath": "/var/lib/docker/containers/633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0/resolv.conf","HostnamePath": "/var/lib/docker/containers/633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0/hostname","HostsPath": "/var/lib/docker/containers/633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0/hosts","LogPath": "/var/lib/docker/containers/633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0/633b4d92335cfe5d185799dcc4f95f710384f58e168d1cf337feb7373f15b7c0-json.log","Name": "/tomcat03","RestartCount": 0,"Driver": "overlay2","Platform": "linux","MountLabel": "","ProcessLabel": "","AppArmorProfile": "","ExecIDs": null,"HostConfig": {"Binds": null,"ContainerIDFile": "","LogConfig": {"Type": "json-file","Config": {}},"NetworkMode": "default","PortBindings": {},"RestartPolicy": {"Name": "no","MaximumRetryCount": 0},"AutoRemove": false,"VolumeDriver": "","VolumesFrom": null,"ConsoleSize": [29,124],"CapAdd": null,"CapDrop": null,"CgroupnsMode": "host","Dns": [],"DnsOptions": [],"DnsSearch": [],"ExtraHosts": null,"GroupAdd": null,"IpcMode": "private","Cgroup": "","Links": ["/tomcat02:/tomcat03/tomcat02"],"OomScoreAdj": 0,"PidMode": "","Privileged": false,"PublishAllPorts": true,"ReadonlyRootfs": false,"SecurityOpt": null,"UTSMode": "","UsernsMode": "","ShmSize": 67108864,"Runtime": "runc","Isolation": "","CpuShares": 0,"Memory": 0,"NanoCpus": 0,"CgroupParent": "","BlkioWeight": 0,"BlkioWeightDevice": [],"BlkioDeviceReadBps": 
[],"BlkioDeviceWriteBps": [],"BlkioDeviceReadIOps": [],"BlkioDeviceWriteIOps": [],"CpuPeriod": 0,"CpuQuota": 0,"CpuRealtimePeriod": 0,"CpuRealtimeRuntime": 0,"CpusetCpus": "","CpusetMems": "","Devices": [],"DeviceCgroupRules": null,"DeviceRequests": null,"MemoryReservation": 0,"MemorySwap": 0,"MemorySwappiness": null,"OomKillDisable": false,"PidsLimit": null,"Ulimits": [],"CpuCount": 0,"CpuPercent": 0,"IOMaximumIOps": 0,"IOMaximumBandwidth": 0,"MaskedPaths": ["/proc/asound","/proc/acpi","/proc/kcore","/proc/keys","/proc/latency_stats","/proc/timer_list","/proc/timer_stats","/proc/sched_debug","/proc/scsi","/sys/firmware","/sys/devices/virtual/powercap"],"ReadonlyPaths": ["/proc/bus","/proc/fs","/proc/irq","/proc/sys","/proc/sysrq-trigger"]},"GraphDriver": {"Data": {"LowerDir": "/var/lib/docker/overlay2/0371313c2f4c07786b72a697dde07d132cc559ce66cc4c61a000bf0437f0ff41-init/diff:/var/lib/docker/overlay2/52ea3533a7a1d49ab4349f208b73400f8b0e836d484831a305df6350465b09e8/diff:/var/lib/docker/overlay2/9b8346259dd2171266b109b6ad175ff3304c32ae94af2b44be6d3f25be3ef849/diff:/var/lib/docker/overlay2/42e19ff7b72661fb7a6b85e8f5ccaca6074ec89bb802eeb88267babb4a510ed0/diff:/var/lib/docker/overlay2/25aeedf4ede35428f7d14e9f34d358aee6511ebd75cf47cae11a123e833c2a75/diff:/var/lib/docker/overlay2/c2dc2d7f1609c2755d4160d23e6837d369530bdae2fe4f3abb132d927ab64553/diff:/var/lib/docker/overlay2/57adcc688b0df46b0b55da08bf0ee899665b1ea7947ee44ad734c0fc581b537e/diff:/var/lib/docker/overlay2/08312de53167dc50ffa768172b9217c174e008aa5700eea4a9db227c94f4f00f/diff:/var/lib/docker/overlay2/47c43aa930e9cd570cbc1201d052800e4464ec9493a556dd65defc727ed69192/diff:/var/lib/docker/overlay2/444ab0cfda2a7d32c91a9da3cf238886d1fc808dcaf935d8fec5ac5fa6a6fa8f/diff:/var/lib/docker/overlay2/3fa1fb97f7bdeb837a2b97de72fd5ec08ac07175da9b99a94e411dc9811f9422/diff","MergedDir": "/var/lib/docker/overlay2/0371313c2f4c07786b72a697dde07d132cc559ce66cc4c61a000bf0437f0ff41/merged","UpperDir": 
"/var/lib/docker/overlay2/0371313c2f4c07786b72a697dde07d132cc559ce66cc4c61a000bf0437f0ff41/diff","WorkDir": "/var/lib/docker/overlay2/0371313c2f4c07786b72a697dde07d132cc559ce66cc4c61a000bf0437f0ff41/work"},"Name": "overlay2"},"Mounts": [],"Config": {"Hostname": "633b4d92335c","Domainname": "","User": "","AttachStdin": false,"AttachStdout": false,"AttachStderr": false,"ExposedPorts": {"8080/tcp": {}},"Tty": false,"OpenStdin": false,"StdinOnce": false,"Env": ["PATH=/usr/local/tomcat/bin:/usr/local/openjdk-11/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin","JAVA_HOME=/usr/local/openjdk-11","LANG=C.UTF-8","JAVA_VERSION=11.0.13","CATALINA_HOME=/usr/local/tomcat","TOMCAT_NATIVE_LIBDIR=/usr/local/tomcat/native-jni-lib","LD_LIBRARY_PATH=/usr/local/tomcat/native-jni-lib","GPG_KEYS=A9C5DF4D22E99998D9875A5110C01C5A2F6059E7","TOMCAT_MAJOR=10","TOMCAT_VERSION=10.0.14","TOMCAT_SHA512=c2d2ad5ed17f7284e3aac5415774a8ef35434f14dbd9a87bc7230d8bfdbe9aa1258b97a59fa5c4030e4c973e4d93d29d20e40b6254347dbb66fae269ff4a61a5"],"Cmd": ["catalina.sh","run"],"Image": "tomcat","Volumes": null,"WorkingDir": "/usr/local/tomcat","Entrypoint": null,"OnBuild": null,"Labels": {}},"NetworkSettings": {"Bridge": "","SandboxID": "09b1e8b94fbd606b8471ebe394135a88e3e6a17137a2340e0f572ce34d08c384","SandboxKey": "/var/run/docker/netns/09b1e8b94fbd","Ports": {"8080/tcp": [{"HostIp": "0.0.0.0","HostPort": "32771"},{"HostIp": "::","HostPort": "32771"}]},"HairpinMode": false,"LinkLocalIPv6Address": "","LinkLocalIPv6PrefixLen": 0,"SecondaryIPAddresses": null,"SecondaryIPv6Addresses": null,"EndpointID": "fcd59f2a07a460e03d467f5002f18f114d6e72f90209c28b74cd65ffd252fcc3","Gateway": "172.17.0.1","GlobalIPv6Address": "","GlobalIPv6PrefixLen": 0,"IPAddress": "172.17.0.4","IPPrefixLen": 16,"IPv6Gateway": "","MacAddress": "02:42:ac:11:00:04","Networks": {"bridge": {"IPAMConfig": null,"Links": null,"Aliases": null,"MacAddress": "02:42:ac:11:00:04","NetworkID": 
"7982ff48899d7706922e3b9e1c5e45eee2b4832229805ac16fa1a8676443b451","EndpointID": "fcd59f2a07a460e03d467f5002f18f114d6e72f90209c28b74cd65ffd252fcc3","Gateway": "172.17.0.1","IPAddress": "172.17.0.4","IPPrefixLen": 16,"IPv6Gateway": "","GlobalIPv6Address": "","GlobalIPv6PrefixLen": 0,"DriverOpts": null,"DNSNames": null}}}}

]

# Look at the hosts file to see how --link works

[root@localhost ~]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 d55f7582c96b

172.17.0.4 633b4d92335c

[root@localhost ~]# docker ps

CONTAINER ID   IMAGE     COMMAND             CREATED          STATUS          PORTS                                         NAMES
633b4d92335c   tomcat    "catalina.sh run"   17 minutes ago   Up 17 minutes   0.0.0.0:32771->8080/tcp, :::32771->8080/tcp   tomcat03
b914c96e16f9   tomcat    "catalina.sh run"   23 minutes ago   Up 23 minutes   0.0.0.0:32770->8080/tcp, :::32770->8080/tcp   tomcat01
d55f7582c96b   tomcat    "catalina.sh run"   15 hours ago     Up 15 hours     0.0.0.0:32768->8080/tcp, :::32768->8080/tcp   tomcat02

3.4.5 Conclusion

--link simply adds one entry to the container's /etc/hosts file: 172.17.0.3 tomcat02 d55f7582c96b. Nothing is added on the tomcat02 side, which is why the reverse lookup fails.

--link is no longer recommended!

Use a user-defined network instead of docker0!

docker0 does not support access by container name!
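Because --link works purely through /etc/hosts, the lookup it enables can be imitated by hand. The sketch below is a hypothetical helper (the name hosts_lookup is an assumption) that resolves a name against hosts-format text, which shows why tomcat03 can find tomcat02 while a name that has no entry resolves to nothing.

```shell
# hosts_lookup is a hypothetical helper: it reads hosts-format text on stdin
# and prints the address of the first entry that lists the given name.
hosts_lookup() {
  # $1 = host name or alias to look up
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }                  # skip comment lines
    { for (i = 2; i <= NF; i++)                # fields after the address are names
        if ($i == name) { print $1; exit } }
  '
}

# Usage:
#   docker exec tomcat03 cat /etc/hosts | hosts_lookup tomcat02
```

Run against the hosts file shown in 3.3.4, the lookup for tomcat02 matches the 172.17.0.3 entry, while a lookup for tomcat01 prints nothing because no line lists that name.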

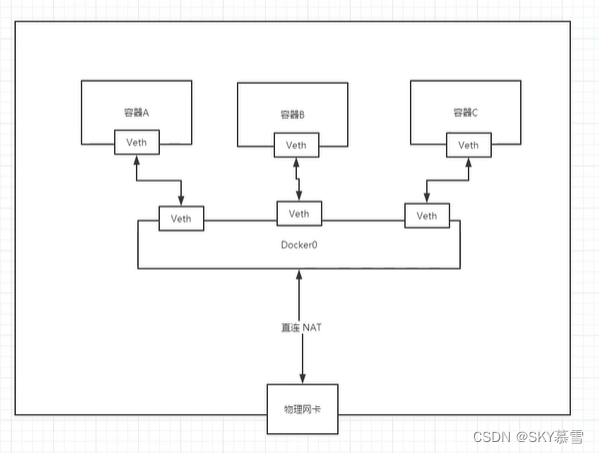

4. User-Defined Networks

List all Docker networks

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

7982ff48899d bridge bridge local

2681eab69678 host host local

858371f13c83 none null local

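Network modes

bridge: bridged networking through Docker (the default; networks you create yourself also use bridge mode)

none: no networking is configured

host: share the host's network stack

container: share another container's network stack (rarely used and very limited)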
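Each mode is selected with the --net (or --network) option of docker run. As a minimal sketch, the hypothetical helper below maps the mode names from the list above to the option they correspond to; the helper name network_option and the container name tomcat04 in the usage line are assumptions for illustration.

```shell
# network_option is a hypothetical helper that prints the `docker run`
# network option for one of the modes described above.
# $1 = mode, $2 = target container name (only used by the container mode)
network_option() {
  case "$1" in
    bridge)    echo "--network bridge" ;;
    none)      echo "--network none" ;;
    host)      echo "--network host" ;;
    container) echo "--network container:$2" ;;
    *)         echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

# Usage (needs a running Docker daemon; the option is left unquoted on
# purpose so that it splits into two arguments):
#   docker run -d --name tomcat04 $(network_option container tomcat01) tomcat
```

In container mode the new container has no interface of its own; it reuses the network stack of the container it names.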

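4.1 Test

Containers started earlier without a network option implicitly used --net bridge, which is docker0.

Characteristics of docker0: it is the default network, container names cannot be resolved on it, and --link can be used to connect containers.

We can define our own network instead!

--driver bridge: use the bridge driver

--subnet 192.168.0.0/16: the subnet

--gateway 192.168.0.1: the gateway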

[root@localhost ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

40d77be7a79d7dc13a3b5b3cd46234be47276159ad2da8daf264903739780edc

[root@localhost ~]#

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

7982ff48899d bridge bridge local

2681eab69678 host host local

40d77be7a79d mynet bridge local

858371f13c83 none null local

[root@localhost ~]#

Inspect the newly created network

[root@localhost ~]# docker network inspect mynet

[
    {
        "Name": "mynet",
        "Id": "40d77be7a79d7dc13a3b5b3cd46234be47276159ad2da8daf264903739780edc",
        "Created": "2024-03-07T20:27:57.115095419+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {},
        "Options": {},
        "Labels": {}
    }
]
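Running docker network create a second time with the same name fails because the network already exists. The sketch below is one way to make the step repeatable; the helper name ensure_network is hypothetical, and it relies only on the docker network inspect and docker network create commands already used in this section.

```shell
# ensure_network is a hypothetical helper: it creates a bridge network only
# if no network with that name exists yet.
# $1 = network name, $2 = subnet (CIDR), $3 = gateway
ensure_network() {
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  else
    docker network create --driver bridge --subnet "$2" --gateway "$3" "$1"
  fi
}

# Usage (needs a running Docker daemon):
#   ensure_network mynet 192.168.0.0/16 192.168.0.1
```

The existence check uses the exit status of docker network inspect, so the helper can be called from a setup script any number of times.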

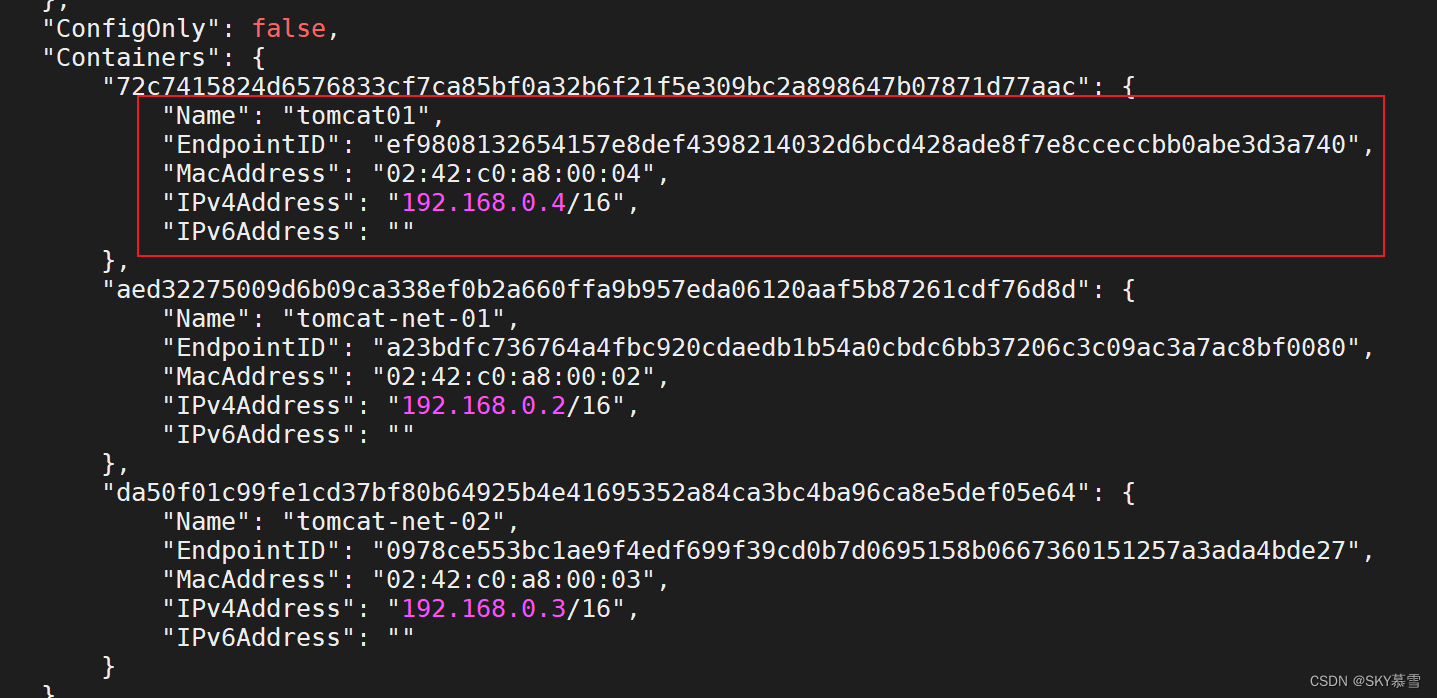

4.2 Create two containers and inspect the custom network again

[root@localhost ~]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

aed32275009d6b09ca338ef0b2a660ffa9b957eda06120aaf5b87261cdf76d8d

[root@localhost ~]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

da50f01c99fe1cd37bf80b64925b4e41695352a84ca3bc4ba96ca8e5def05e64

[root@localhost ~]#

[root@localhost ~]#

[root@localhost ~]# docker network inspect mynet

[
    {
        "Name": "mynet",
        "Id": "40d77be7a79d7dc13a3b5b3cd46234be47276159ad2da8daf264903739780edc",
        "Created": "2024-03-07T20:27:57.115095419+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "aed32275009d6b09ca338ef0b2a660ffa9b957eda06120aaf5b87261cdf76d8d": {
                "Name": "tomcat-net-01",
                "EndpointID": "a23bdfc736764a4fbc920cdaedb1b54a0cbdc6bb37206c3c09ac3a7ac8bf0080",
                "MacAddress": "02:42:c0:a8:00:02",
                "IPv4Address": "192.168.0.2/16",
                "IPv6Address": ""
            },
            "da50f01c99fe1cd37bf80b64925b4e41695352a84ca3bc4ba96ca8e5def05e64": {
                "Name": "tomcat-net-02",
                "EndpointID": "0978ce553bc1ae9f4edf699f39cd0b7d0695158b0667360151257a3ada4bde27",
                "MacAddress": "02:42:c0:a8:00:03",
                "IPv4Address": "192.168.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]

4.3 Communication between the two containers

[root@localhost ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

OCI runtime exec failed: exec failed: unable to start container process: exec: "ping": executable file not found in $PATH: unknown

# If the error above appears, run the following; ping must be installed in each container

[root@localhost ~]# docker exec -it tomcat-net-01 apt-get update

[root@localhost ~]# docker exec -it tomcat-net-01 apt-get install -y iputils-ping

[root@localhost ~]# docker exec -it tomcat-net-02 apt-get update

[root@localhost ~]# docker exec -it tomcat-net-02 apt-get install -y iputils-ping

Container names can now be pinged without --link!

# Ping the other container's IP address from tomcat-net-01

[root@localhost ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.067 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.083 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.054 ms

^C

--- 192.168.0.3 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.054/0.068/0.083/0.011 ms

# Communicate using the two container names

[root@localhost ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.057 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.098 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.089 ms

^C

--- tomcat-net-02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2001ms

rtt min/avg/max/mdev = 0.057/0.081/0.098/0.017 ms

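On a user-defined network, Docker maintains the mapping between container names and addresses for us. This is the recommended way to set up networking!

Benefits

redis: different clusters use different networks, which keeps each cluster isolated and healthy

mysql: different clusters use different networks, which keeps each cluster isolated and healthy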
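Name resolution on a user-defined network is handled by a DNS server embedded in Docker rather than by /etc/hosts entries. On such networks the container's /etc/resolv.conf normally points at 127.0.0.11; the sketch below uses a hypothetical helper, nameservers, to pull the configured servers out of resolv.conf text so that this can be checked on a given setup.

```shell
# nameservers is a hypothetical helper: it reads resolv.conf-format text on
# stdin and prints the address of every configured DNS server.
nameservers() {
  awk '$1 == "nameserver" { print $2 }'
}

# Usage (needs a running Docker daemon):
#   docker exec tomcat-net-01 cat /etc/resolv.conf | nameservers
```

Comparing the result for a container on mynet with one on docker0 shows where the difference in name resolution comes from.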

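5. Connecting Networks

5.1 Connect tomcat01 to mynet

After connecting, tomcat01 is also attached to the mynet network.

One container, two IP addresses.

This is similar to an Alibaba Cloud server, which has both a public IP and a private IP.

[root@localhost ~]# docker network connect mynet tomcat01

[root@localhost ~]# docker network inspect mynet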

[
    {
        "Name": "mynet",
        "Id": "40d77be7a79d7dc13a3b5b3cd46234be47276159ad2da8daf264903739780edc",
        "Created": "2024-03-07T20:27:57.115095419+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "72c7415824d6576833cf7ca85bf0a32b6f21f5e309bc2a898647b07871d77aac": {
                "Name": "tomcat01",
                "EndpointID": "ef9808132654157e8def4398214032d6bcd428ade8f7e8cceccbb0abe3d3a740",
                "MacAddress": "02:42:c0:a8:00:04",
                "IPv4Address": "192.168.0.4/16",
                "IPv6Address": ""
            },
            "aed32275009d6b09ca338ef0b2a660ffa9b957eda06120aaf5b87261cdf76d8d": {
                "Name": "tomcat-net-01",
                "EndpointID": "a23bdfc736764a4fbc920cdaedb1b54a0cbdc6bb37206c3c09ac3a7ac8bf0080",
                "MacAddress": "02:42:c0:a8:00:02",
                "IPv4Address": "192.168.0.2/16",
                "IPv6Address": ""
            },
            "da50f01c99fe1cd37bf80b64925b4e41695352a84ca3bc4ba96ca8e5def05e64": {
                "Name": "tomcat-net-02",
                "EndpointID": "0978ce553bc1ae9f4edf699f39cd0b7d0695158b0667360151257a3ada4bde27",
                "MacAddress": "02:42:c0:a8:00:03",
                "IPv4Address": "192.168.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]

5.2 Test connectivity

[root@localhost ~]# docker exec -it tomcat01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.071 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.070 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.083 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.060 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=5 ttl=64 time=0.057 ms

^C

--- tomcat-net-02 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4004ms

rtt min/avg/max/mdev = 0.057/0.068/0.083/0.009 ms

[root@localhost ~]#

[root@localhost ~]#

[root@localhost ~]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.185 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.060 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.060 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=4 ttl=64 time=0.063 ms

^C

--- tomcat-net-01 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3001ms

rtt min/avg/max/mdev = 0.060/0.092/0.185/0.053 ms

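tomcat01 can now communicate with both containers. If tomcat02 also needs to reach them, it must be connected to mynet in the same way.

Conclusion: to reach containers on another network, attach the container to that network with docker network connect!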
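To see the "one container, two IP addresses" result directly, the container can be asked which networks it is attached to. This is a sketch using docker inspect with a Go template; the helper name container_networks is hypothetical.

```shell
# container_networks is a hypothetical helper: it prints one line per network
# the container is attached to, in the form "<network> <ip address>".
container_networks() {
  # $1 = container name or ID
  docker inspect -f '{{range $name, $net := .NetworkSettings.Networks}}{{printf "%s %s\n" $name $net.IPAddress}}{{end}}' "$1"
}

# Usage (needs a running Docker daemon):
#   container_networks tomcat01
#   docker network disconnect mynet tomcat01   # detach again when no longer needed
```

After the docker network connect step, tomcat01 should be listed once for bridge and once for mynet.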
