This note records how to use tf.GradientTape and its gradient() method.

import tensorflow as tf

import numpy as np
print(tf.__version__)
# Every computation involving the parameters you want gradients for must be recorded inside a GradientTape

# Basic pattern: with tf.GradientTape() as tape:
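The recording rule can be checked with a small sketch (not part of the original note; the names `v`, `c`, `y_outside` and the other variables are illustrative). An operation run outside the `with` block is not recorded, a constant is tracked only after `tape.watch`, and a trainable `tf.Variable` is watched automatically. In each unrecorded case `gradient()` returns `None`. The tape is `persistent` only because `gradient()` is called more than once.

```python
import tensorflow as tf

x = tf.constant(2.0)
v = tf.Variable(1.0)   # trainable Variables are watched automatically
c = tf.constant(1.0)   # constants are only tracked after tape.watch()

# Computed outside any tape: this multiplication is not recorded
y_outside = x * v

with tf.GradientTape(persistent=True) as tape:
    y_var = x * v      # v is watched automatically
    y_const = x * c    # c is NOT watched (no tape.watch call)

grad_var = tape.gradient(y_var, v)          # d(x*v)/dv = x = 2.0
grad_const = tape.gradient(y_const, c)      # None: c was never watched
grad_outside = tape.gradient(y_outside, v)  # None: y_outside was not recorded
del tape  # release the resources held by the persistent tape

print(grad_var)
print(grad_const)
print(grad_outside)
```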

w = tf.constant(1.)
x = tf.constant(2.)
with tf.GradientTape() as tape:
    tape.watch([w])  # constants are not tracked by default, so watch w explicitly
    y = x * w

# Use gradient() to compute the gradient of y with respect to w

grad = tape.gradient(y, [w])

print("Gradient of W:")

print(grad)

# Gradient with respect to a vector

W = tf.convert_to_tensor([8., 7., 6.])

X = tf.convert_to_tensor([3., 3., 3.])

with tf.GradientTape() as tape:
    tape.watch(X)
    Y = X * W

grads = tape.gradient(Y, X)

print("Gradient of X:")

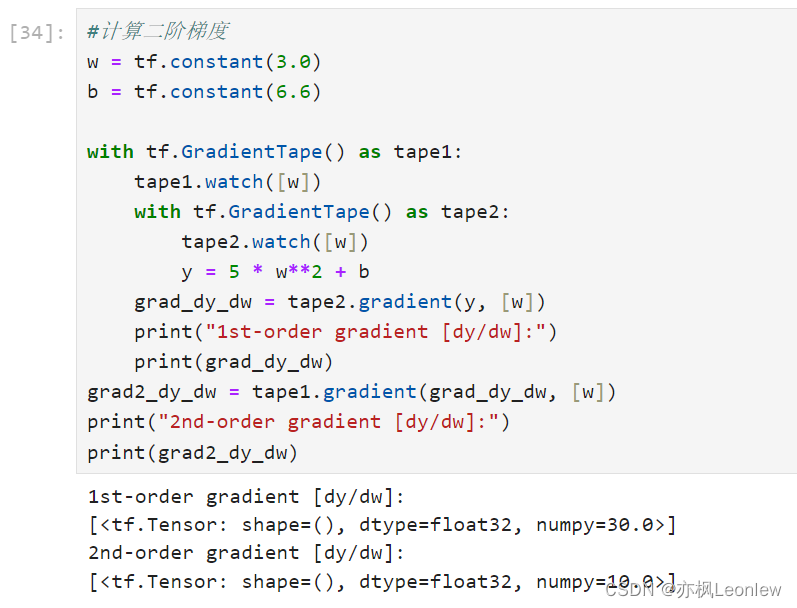

print(grads)#计算二阶梯度

w = tf.constant(3.0)

b = tf.constant(6.6)with tf.GradientTape() as tape1:tape1.watch([w])with tf.GradientTape() as tape2:tape2.watch([w])y = 5 * w**2 + bgrad_dy_dw = tape2.gradient(y, [w])print("1st-order gradient [dy/dw]:")print(grad_dy_dw)

grad2_dy_dw = tape1.gradient(grad_dy_dw, [w])

print("2nd-order gradient [dy/dw]:")

print(grad2_dy_dw)运行结果:
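The printed values can be checked by hand. For a non-scalar target such as `Y`, `tape.gradient` differentiates the sum of the target's elements, which is why the vector result has the same shape as `X`:

$$y = x\,w \;\Rightarrow\; \frac{\partial y}{\partial w} = x = 2$$

$$\frac{\partial}{\partial X_j}\sum_i X_i W_i = W_j \;\Rightarrow\; [8,\ 7,\ 6]$$

$$y = 5w^2 + b \;\Rightarrow\; \frac{dy}{dw} = 10w = 30,\qquad \frac{d^2y}{dw^2} = 10 \quad (w = 3)$$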

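If the full matrix of partial derivatives dY_i/dX_j is needed instead of the summed gradient, `GradientTape.jacobian` can be used. A minimal sketch, reusing the values from the vector example (`grad_sum` and `jac` are illustrative names); the tape is made `persistent` only because it is queried twice:

```python
import tensorflow as tf

W = tf.constant([8., 7., 6.])
X = tf.constant([3., 3., 3.])

with tf.GradientTape(persistent=True) as tape:
    tape.watch(X)
    Y = X * W

# gradient() differentiates sum(Y): one value per element of X
grad_sum = tape.gradient(Y, X)

# jacobian() keeps every dY_i/dX_j: a 3x3 matrix
jac = tape.jacobian(Y, X)
del tape  # release the persistent tape

print(grad_sum)  # values: [8. 7. 6.]
print(jac)       # diagonal matrix with 8, 7, 6 on the diagonal
```

Since Y_i depends only on X_i, the Jacobian is diagonal, and summing each of its columns gives back the result of `gradient()`.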