Attention

Attention was first formulated between two different entities (in seq2seq, a decoder state attending over the encoder states).

Paper: https://arxiv.org/pdf/1703.03906.pdf

seq2seq repository: https://github.com/google/seq2seq

How the score is computed:

Additive attention, e.g. Bahdanau attention:

$$\boldsymbol{v}_a^{\top} \tanh \left(\boldsymbol{W}_{\mathbf{1}} \boldsymbol{h}_t+\boldsymbol{W}_{\mathbf{2}} \overline{\boldsymbol{h}}_s\right)$$
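As a rough illustration of the additive score, here is a minimal NumPy sketch. The function name `additive_score`, the dimensions, and the random weights are assumptions made for this example only; they do not come from the paper or the seq2seq repository.

```python
import numpy as np

def additive_score(h_t, h_s, W1, W2, v_a):
    """Additive score v_a^T tanh(W1 h_t + W2 h_s) for every source state.

    h_t: (d,) decoder state; h_s: (S, d) encoder states.
    W1, W2: (d_a, d); v_a: (d_a,). Returns (S,) raw scores.
    """
    # Broadcasting adds the projected decoder state to each projected source state.
    hidden = np.tanh(W1 @ h_t + h_s @ W2.T)  # (S, d_a)
    return hidden @ v_a                      # (S,)

# Hypothetical sizes and random parameters, for illustration only.
rng = np.random.default_rng(0)
d, d_a, S = 4, 3, 5
h_t = rng.normal(size=d)
h_s = rng.normal(size=(S, d))
W1 = rng.normal(size=(d_a, d))
W2 = rng.normal(size=(d_a, d))
v_a = rng.normal(size=d_a)

scores = additive_score(h_t, h_s, W1, W2, v_a)

# A softmax over the source positions turns the scores into attention weights.
weights = np.exp(scores - scores.max())
weights /= weights.sum()
print(weights)
```

The scores are unnormalized; the softmax at the end is what turns them into a distribution over source positions.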

Multiplicative attention, e.g. Luong attention:

$$\operatorname{score}\left(\boldsymbol{h}_{t}, \overline{\boldsymbol{h}}_{s}\right)=\left\{\begin{array}{ll} \boldsymbol{h}_{t}^{\top} \overline{\boldsymbol{h}}_{s} & \text { dot } \\ \boldsymbol{h}_{t}^{\top} \boldsymbol{W}_{a} \overline{\boldsymbol{h}}_{s} & \text { general } \\ \boldsymbol{v}_{a}^{\top} \tanh \left(\boldsymbol{W}_{a}\left[\boldsymbol{h}_{t} ; \overline{\boldsymbol{h}}_{s}\right]\right) & \text { concat } \end{array}\right.$$

Source paper: https://arxiv.org/pdf/1508.04025.pdf
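The three Luong scoring variants can be sketched in NumPy as follows. The function name `luong_score`, the shapes, and the random parameters are assumptions for illustration; this is not the reference implementation from the paper.

```python
import numpy as np

def luong_score(h_t, h_s, method, W_a=None, v_a=None):
    """Score one decoder state h_t (d,) against all source states h_s (S, d)."""
    if method == "dot":
        # h_t^T h_s for every source position
        return h_s @ h_t
    if method == "general":
        # h_t^T W_a h_s, with W_a of shape (d, d)
        return h_s @ (W_a.T @ h_t)
    if method == "concat":
        # v_a^T tanh(W_a [h_t; h_s]), with W_a of shape (d_a, 2d)
        h_t_tiled = np.broadcast_to(h_t, h_s.shape)
        cat = np.concatenate([h_t_tiled, h_s], axis=-1)  # (S, 2d)
        return np.tanh(cat @ W_a.T) @ v_a
    raise ValueError(f"unknown method: {method}")

# Hypothetical sizes and random parameters, for illustration only.
rng = np.random.default_rng(0)
d, d_a, S = 4, 3, 5
h_t = rng.normal(size=d)
h_s = rng.normal(size=(S, d))
W_g = rng.normal(size=(d, d))
W_c = rng.normal(size=(d_a, 2 * d))
v_a = rng.normal(size=d_a)

dot = luong_score(h_t, h_s, "dot")
general = luong_score(h_t, h_s, "general", W_a=W_g)
concat = luong_score(h_t, h_s, "concat", W_a=W_c, v_a=v_a)
print(dot.shape, general.shape, concat.shape)
```

Note that `dot` needs no parameters at all, while `concat` has the same form as the additive score applied to the concatenated states.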

From Attention to SelfAttention

Self Attention

The paper “Attention is All You Need” proposes Multi-Head Self-Attention, which is built on Scaled Dot-Product Attention:

$$\operatorname{Attention}(Q, K, V)=\operatorname{softmax}\left(\frac{Q K^T}{\sqrt{d_k}}\right) V$$

Source paper: https://arxiv.org/pdf/1706.03762.pdf
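The formula maps almost line by line onto NumPy. The sketch below assumes unbatched 2-D inputs and omits masking and dropout; the helper names are chosen for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (T_q, d_k), K: (T_k, d_k), V: (T_k, d_v)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (T_q, T_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

# Hypothetical shapes, for illustration only.
rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 8))
K = rng.normal(size=(5, 8))
V = rng.normal(size=(5, 6))

out, weights = scaled_dot_product_attention(Q, K, V)
print(out.shape, weights.shape)
```

For self-attention, `Q`, `K`, and `V` are all derived from the same sequence.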

Scaled

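The purpose of scaling is to keep the dot products from growing too large. If they are too large, the softmax output saturates to nearly 0 or 1 and is no longer "soft".

Source: https://kexue.fm/archives/4765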
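A small numerical experiment makes the saturation effect visible. It assumes query and key entries drawn from a standard normal distribution, so the raw dot products have a variance of roughly d_k; the sizes below are arbitrary choices for this sketch.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

# Hypothetical setup: one query against ten keys with d_k = 512.
rng = np.random.default_rng(0)
d_k, n_keys = 512, 10
q = rng.normal(size=d_k)
K = rng.normal(size=(n_keys, d_k))

raw = K @ q                           # dot products with standard deviation near sqrt(d_k)
unscaled = softmax(raw)               # tends toward a one-hot distribution
scaled = softmax(raw / np.sqrt(d_k))  # scores brought back to roughly unit variance

print("largest weight without scaling:", unscaled.max())
print("largest weight with scaling:   ", scaled.max())
```

Dividing the scores by a constant greater than 1 can only lower the largest softmax weight, so the scaled distribution is always at least as spread out as the unscaled one.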

Multi-Head

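Multi-Head can be understood as several attention modules running in parallel, with the hope that different modules "attend" to different places, similar to the kernels of a CNN. In the words of the paper:

Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions.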

$$\begin{aligned} \operatorname{MultiHead}(Q, K, V) & =\operatorname{Concat}\left(\operatorname{head}_1, \ldots, \operatorname{head}_{\mathrm{h}}\right) W^O \\ \text { where } \operatorname{head}_{\mathrm{i}} & =\operatorname{Attention}\left(Q W_i^Q, K W_i^K, V W_i^V\right) \end{aligned}$$

Source paper: https://arxiv.org/pdf/1706.03762.pdf
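The MultiHead formula can be sketched with an explicit loop over heads. This is an unbatched NumPy illustration under assumed shapes (per-head weights stacked along the first axis); real implementations usually fuse the heads into a single tensor operation.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o):
    """Q, K, V: (T, d_model).
    W_q, W_k, W_v: (h, d_model, d_head); W_o: (h * d_head, d_model).
    """
    heads = []
    for Wq_i, Wk_i, Wv_i in zip(W_q, W_k, W_v):
        # head_i = Attention(Q W_i^Q, K W_i^K, V W_i^V)
        q, k, v = Q @ Wq_i, K @ Wk_i, V @ Wv_i
        scores = q @ k.T / np.sqrt(q.shape[-1])
        heads.append(softmax(scores) @ v)      # (T, d_head)
    # Concat(head_1, ..., head_h) W^O
    return np.concatenate(heads, axis=-1) @ W_o

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
T, d_model, h, d_head = 4, 8, 2, 4
X = rng.normal(size=(T, d_model))
W_q = rng.normal(size=(h, d_model, d_head))
W_k = rng.normal(size=(h, d_model, d_head))
W_v = rng.normal(size=(h, d_model, d_head))
W_o = rng.normal(size=(h * d_head, d_model))

out = multi_head_attention(X, X, X, W_q, W_k, W_v, W_o)  # self-attention: Q = K = V = X
print(out.shape)
```

Each head works in its own d_head-dimensional subspace, which is what lets different heads attend to different positions.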

Code Practice

Attention

Imports

from dataclasses import dataclass

import torch
import torch.nn as nn
import torch.nn.functional as F

from selfattention import SelfAttention

We use a single core SelfAttention module (which supports both single-head and multi-head) to study and understand the attention mechanism.

class Model(nn.Module):
    def __init__(self, config):
        super().__init__()
        self.config = config
        self.emb = nn.Embedding(config.vocab_size, config.hidden_dim)
        self.attn = SelfAttention(config)
        self.fc = nn.Linear(config.hidden_dim, config.num_labels)

    def forward(self, x):
        batch_size, seq_len = x.shape
        h = self.emb(x)
        attn_score, h = self.attn(h)
        # Average-pool over the sequence dimension: (B, T, H) -> (B, H, 1)
        h = F.avg_pool1d(h.permute(0, 2, 1), seq_len, 1)
        h = h.squeeze(-1)
        logits = self.fc(h)
        return attn_score, logits

@dataclass
class Config:
    vocab_size: int = 5000
    hidden_dim: int = 512
    num_heads: int = 16
    head_dim: int = 32
    dropout: float = 0.1
    num_labels: int = 2
    max_seq_len: int = 512
    num_epochs: int = 10

config = Config(5000, 512, 16, 32, 0.1, 2)
model = Model(config)
x = torch.randint(0, 5000, (3, 30))
x.shape
# torch.Size([3, 30])

attn, logits = model(x)
attn.shape, logits.shape
# (torch.Size([3, 16, 30, 30]), torch.Size([3, 2]))

Data

import pandas as pd
from sklearn.model_selection import train_test_split

file_path = "./data/ChnSentiCorp_htl_all.csv"
df = pd.read_csv(file_path)
df = df.dropna()
df.head(), df.shape
'''
(   label  review
 0      1  距离川沙公路较近,但是公交指示不对,如果是"蔡陆线"的话,会非常麻烦.建议用别的路线.房间较...
 1      1  商务大床房,房间很大,床有2M宽,整体感觉经济实惠不错!
 2      1  早餐太差,无论去多少人,那边也不加食品的。酒店应该重视一下这个问题了。房间本身很好。
 3      1  宾馆在小街道上,不大好找,但还好北京热心同胞很多~宾馆设施跟介绍的差不多,房间很小,确实挺小...
 4      1  CBD中心,周围没什么店铺,说5星有点勉强.不知道为什么卫生间没有电吹风,
 (7765, 2))
'''

df.label.value_counts()
'''
label
1    5322
0    2443
Name: count, dtype: int64
'''

The classes are imbalanced, so we simply downsample the positive class to 2,500 reviews.

df = pd.concat([df[df.label==1].sample(2500), df[df.label==0]])
df.shape
# (4943, 2)

df.label.value_counts()
'''
label
1    2500
0    2443
Name: count, dtype: int64
'''
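The same downsampling idea can be written without pandas. The sketch below uses only the standard library; the function name `downsample`, the toy rows, and the fixed seed are assumptions for illustration (the original `sample(2500)` call does not fix a seed).

```python
import random
from collections import Counter

def downsample(rows, label_key, majority_label, n_keep, seed=0):
    """Keep n_keep random rows of the majority class and all other rows."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    majority = [r for r in rows if r[label_key] == majority_label]
    rest = [r for r in rows if r[label_key] != majority_label]
    return rng.sample(majority, n_keep) + rest

# Toy data: 8 positive and 3 negative rows (hypothetical).
rows = [{"label": 1} for _ in range(8)] + [{"label": 0} for _ in range(3)]
balanced = downsample(rows, "label", majority_label=1, n_keep=4)
counts = Counter(r["label"] for r in balanced)
print(counts)
```

Downsampling discards data from the larger class; it is the simplest way to balance the labels, at the cost of training on fewer examples.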

from tokenizer import Tokenizer

tokenizer = Tokenizer(config.vocab_size, config.max_seq_len)
tokenizer.build_vocab(df.review)
tokenizer(["你好", "你好呀"])
'''
tensor([[3233,    0],
        [3233,  955]])
'''

def collate_batch(batch):
    label_list, text_list = [], []
    for v in batch:
        _label = v["label"]
        _text = v["text"]
        label_list.append(_label)
        text_list.append(_text)
    # Tokenize the whole batch at once; labels become a LongTensor
    inputs = tokenizer(text_list)
    labels = torch.LongTensor(label_list)
    return inputs, labels

from dataset import Dataset

ds = Dataset()
ds.build(df, "review", "label")
len(ds), ds[0]
'''
(4943,
 {'text': '1、酒店环境不错,地理位置佳。到十全街、凤凰街挺方便的。属于闹中取静的那种。2、客房里设施齐全、干净,比较方便。卫浴设施也挺好的。插头挺多的,很好。房间很干净,也挺温馨的。3、自助餐还可以。美中不足之处:1、卫生纸的质量比较差。2、饭店里最好可以提供(自助)洗衣、干衣的服务。3、房间里的小冰箱效果不好,声音也比较响。反正不用,我就关掉了。总体感觉不错,性价比高,下次还来住。',
  'label': 1})
'''

train_ds, test_ds = train_test_split(ds, test_size=0.2)
train_ds, valid_ds = train_test_split(train_ds, test_size=0.1)
len(train_ds), len(valid_ds), len(test_ds)
# (3558, 396, 989)

from torch.utils.data import DataLoader

BATCH_SIZE = 8
train_dl = DataLoader(train_ds, batch_size=BATCH_SIZE, collate_fn=collate_batch)
valid_dl = DataLoader(valid_ds, batch_size=BATCH_SIZE, collate_fn=collate_batch)
test_dl = DataLoader(test_ds, batch_size=BATCH_SIZE, collate_fn=collate_batch)
len(train_dl), len(valid_dl), len(test_dl)
# (445, 50, 124)

# Grab the first batch to inspect shapes and dtypes
for v in train_dl:
    break
v[0].shape, v[1].shape, v[0].dtype, v[1].dtype
# (torch.Size([8, 225]), torch.Size([8]), torch.int64, torch.int64)

Training

from trainer import train, test

NUM_EPOCHS = 10
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# A small single-head model: hidden_dim=64, num_heads=1, head_dim=64
config = Config(5000, 64, 1, 64, 0.1, 2)
model = Model(config)
model.to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=1e-3)

train(model, optimizer, train_dl, valid_dl, config)
test(model, test_dl)

'''

Epoch [1/10]

Iter: 445, Train Loss: 0.52, Train Acc: 0.75, Val Loss: 0.52, Val Acc: 0.74

Epoch [2/10]

Iter: 890, Train Loss: 0.51, Train Acc: 0.75, Val Loss: 0.51, Val Acc: 0.76

Epoch [3/10]

Iter: 1335, Train Loss: 0.50, Train Acc: 0.62, Val Loss: 0.52, Val Acc: 0.78

Epoch [4/10]

Iter: 1780, Train Loss: 0.51, Train Acc: 0.62, Val Loss: 0.49, Val Acc: 0.79

Epoch [5/10]

Iter: 2225, Train Loss: 0.56, Train Acc: 0.62, Val Loss: 0.45, Val Acc: 0.81

Epoch [6/10]

Iter: 2670, Train Loss: 0.64, Train Acc: 0.88, Val Loss: 0.41, Val Acc: 0.82

Epoch [7/10]

Iter: 3115, Train Loss: 0.58, Train Acc: 0.88, Val Loss: 0.38, Val Acc: 0.84

Epoch [8/10]

Iter: 3560, Train Loss: 0.52, Train Acc: 0.75, Val Loss: 0.36, Val Acc: 0.85

Epoch [9/10]

Iter: 4005, Train Loss: 0.45, Train Acc: 0.75, Val Loss: 0.37, Val Acc: 0.86

Epoch [10/10]

Iter: 4450, Train Loss: 0.34, Train Acc: 0.88, Val Loss: 0.38, Val Acc: 0.87

0.8554189267686065

'''

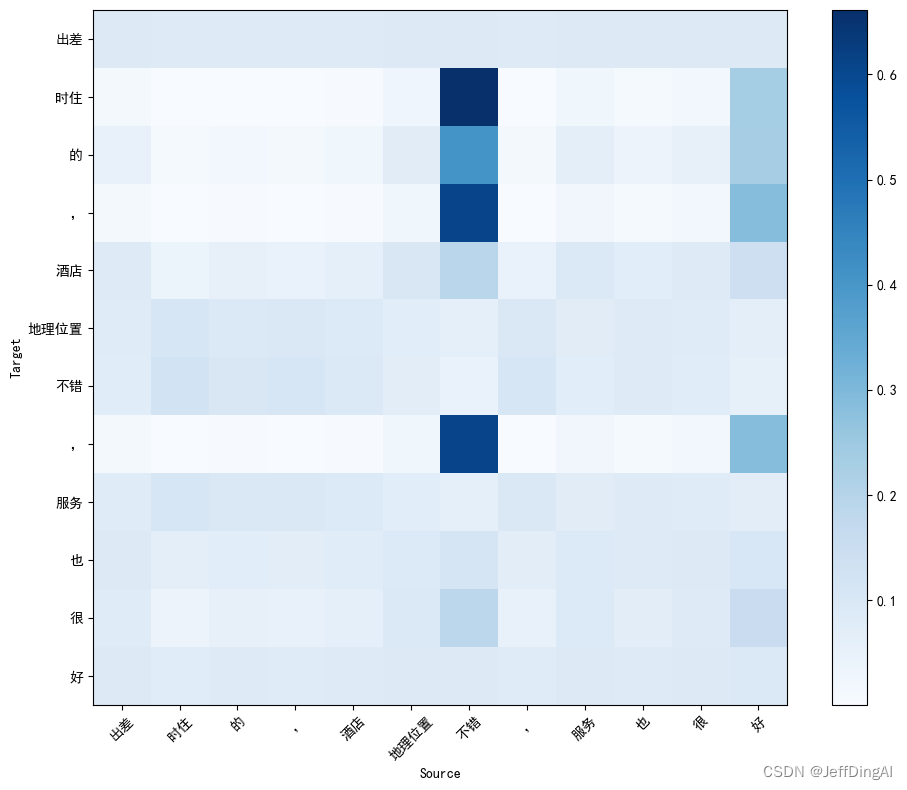

Inference

from inference import infer, plot_attention
import numpy as np

# Pick a random test sample of at most 20 characters
sample = np.random.choice(test_ds)
while len(sample["text"]) > 20:
    sample = np.random.choice(test_ds)
print(sample)

inp = sample["text"]
inputs = tokenizer(inp)
attn, prob = infer(model, inputs.to(device))
# Attention matrix of the first sample, first head
attn_prob = attn[0, 0, :, :].cpu().numpy()
tokens = tokenizer.tokenize(inp)
tokens, prob
'''
{'text': '买东西方便!不错的选择!大家也要选择', 'label': 1}
(['买', '东西', '方便', '!', '不错', '的', '选择', '!', '大家', '也', '要', '选择'], 1)
'''

plot_attention(attn_prob, tokens, tokens)

tokenizer.get_freq_of("不")
# 2659

LLM

Prepare the repository

git clone https://github.com/hscspring/llama.np

cd llama.np/

Download the model

# Download the model from https://hf-mirror.com/karpathy/tinyllamas/tree/main
# and place it in the llama.np directory

import os

# Set the environment variable
os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com'

# Download the model
os.system('huggingface-cli download --resume-download karpathy/tinyllamas --local-dir /root/datawhale/sora_learn/datawhale/attention-llm/llm/llama.np')

Convert the format

python convert_bin_llama_to_np.py stories15M.bin

Generate

python main.py "Once upon"

LLaMA

from config import ModelArgs
from model import Llama
from tokenizer import Tokenizer
import numpy as np

args = ModelArgs(288, 6, 6, 6, 32000, None, 256)

token_model_path = "./tokenizer.model.np"
model_path = "./stories15M.model.npz"

tok = Tokenizer(token_model_path)
llama = Llama(model_path, args)

prompt = "Once upon"
ids = tok.encode(prompt)
input_ids = np.array([ids], dtype=np.int32)
token_num = input_ids.shape[1]

print(prompt, end="")
for ids in llama.generate(input_ids, args.max_seq_len, True, 1.0, 0.9, 0):
    output_ids = ids[0].tolist()
    # Stop once an end-of-sequence or begin-of-sequence token is produced
    if output_ids[-1] in [tok.eos_id, tok.bos_id]:
        break
    output_text = tok.decode(output_ids)
    print(output_text, end="")

Output

**Once uponong end, there wa a girl named Sophie. She wa three year old and loved to explore. She had a different blouse with lot of bright colour.

One day, Sophie wa walking in the park with her mommy. She saw a shiny pedal on the ground. It wa a shiny one, so she picked it up and wa so excited to have found it.

“Mommy, look! I found a new pedal!” she said.

“That’ great, Sophie,” her mommy said. “I know, it look very exciting!”

But then Sophie noticed that her reflection in the bright blade of the pedal seemed very distant. She started to worry.

“Mommy, what’ wrong?” Sophie asked.

“It’ too far away to come back,” her mommy replied.

“Let’ go look for it,” Sophie said.

So they started to look for the pedal. They walked a bit further and eventually, they found it! Sophie wa so happy.

“Mommy, thi pedal i so interesting!” Sophie said.

Her mommy smiled and said, “Ye**
