Problem

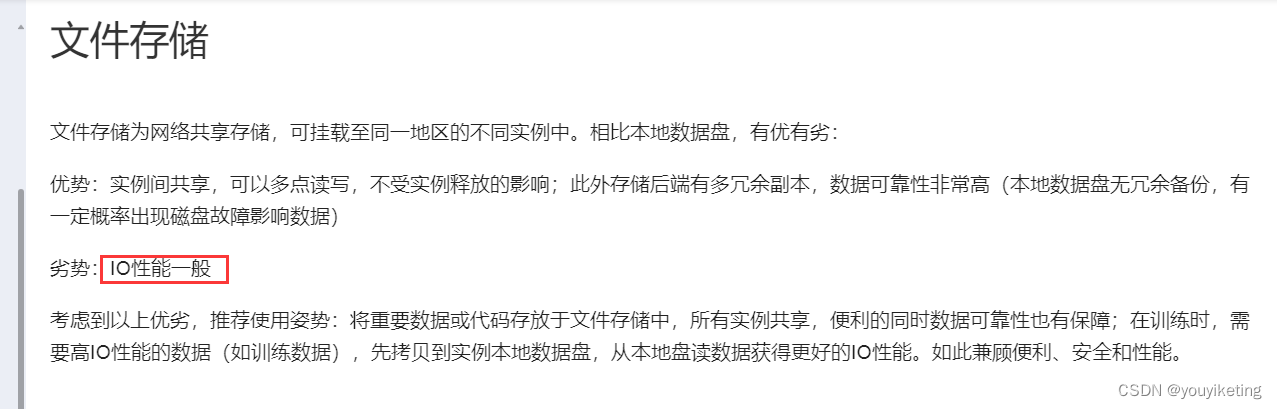

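My lab's servers were short on resources, so I rented GPUs on AutoDL myself. AutoDL offers network shared storage that can be mounted on different instances in the same region: once an instance created in that region boots, the file storage is mounted at /root/autodl-fs on the instance, so data can be shared between instances.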

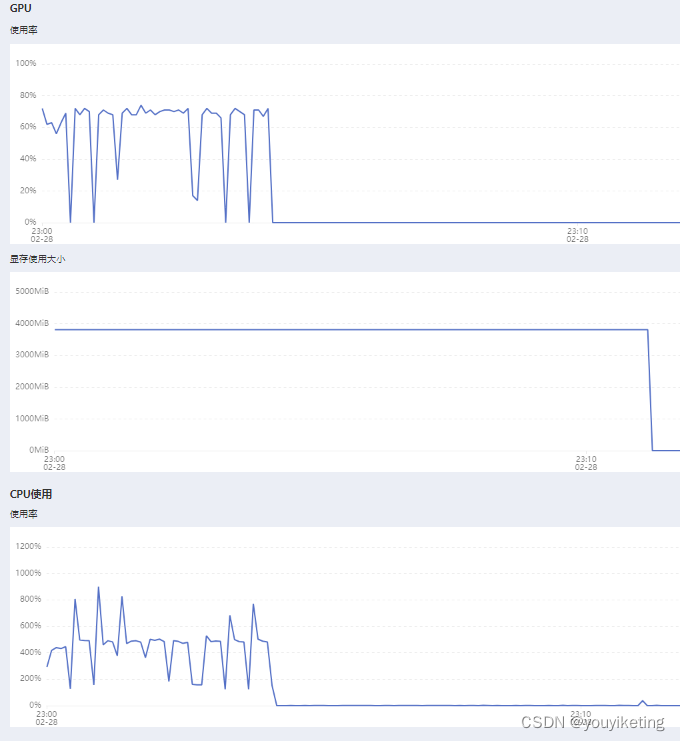

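So when the GPU I had been using is taken by someone else, I can reach the data and code on the shared storage directly from a newly rented GPU, which saves the hassle of copying files back and forth. For that reason I had been editing my model directly on the shared disk. Recently, however, while I was reproducing a model, training kept hanging at some epoch. Monitoring the server showed GPU and CPU utilization suddenly dropping to 0 while the process still held its GPU memory, and the following thread exceptions appeared during training: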

The output of several TensorBoard writer threads was interleaved in the log (Thread-3, Thread-5, Thread-8 and Thread-9 are named). Each thread printed the same traceback:

    Traceback (most recent call last):
      File "/root/miniconda3/lib/python3.8/threading.py", line 932, in _bootstrap_inner
        self.run()
      File "/root/miniconda3/lib/python3.8/site-packages/tensorboard/summary/writer/event_file_writer.py", line 233, in run
        self._record_writer.write(data)
      File "/root/miniconda3/lib/python3.8/site-packages/tensorboard/summary/writer/record_writer.py", line 40, in write
        self._writer.write(header + header_crc + data + footer_crc)
      File "/root/miniconda3/lib/python3.8/site-packages/tensorboard/compat/tensorflow_stub/io/gfile.py", line 766, in write
        self.fs.append(self.filename, file_content, self.binary_mode)
      File "/root/miniconda3/lib/python3.8/site-packages/tensorboard/compat/tensorflow_stub/io/gfile.py", line 160, in append
        self._write(filename, file_content, "ab" if binary_mode else "a")
      File "/root/miniconda3/lib/python3.8/site-packages/tensorboard/compat/tensorflow_stub/io/gfile.py", line 164, in _write
        with io.open(filename, mode, encoding=encoding) as f:

Each traceback ended with a FileNotFoundError for a different event file:

    FileNotFoundError: [Errno 2] No such file or directory: b'runs/Feb28_22-30-35_autodl-container-b8bc118052-8d77dd6aCombined_hinet_pretrain100_debug_MSG_imgPatchSE_mean/Total_Loss_Total Loss/events.out.tfevents.1709130663.autodl-container-b8bc118052-8d77dd6a.1871.1steg'
    FileNotFoundError: [Errno 2] No such file or directory: b'runs/Feb28_22-30-35_autodl-container-b8bc118052-8d77dd6aCombined_hinet_pretrain100_debug_MSG_imgPatchSE_mean/error_msg_average bit error/events.out.tfevents.1709130663.autodl-container-b8bc118052-8d77dd6a.1871.6steg'
    FileNotFoundError: [Errno 2] No such file or directory: b'runs/Feb28_22-30-35_autodl-container-b8bc118052-8d77dd6aCombined_hinet_pretrain100_debug_MSG_imgPatchSE_mean/acc_msg_average accuracy/events.out.tfevents.1709130663.autodl-container-b8bc118052-8d77dd6a.1871.7steg'
    FileNotFoundError: [Errno 2] No such file or directory: b'runs/Feb28_22-30-35_autodl-container-b8bc118052-8d77dd6aCombined_hinet_pretrain100_debug_MSG_imgPatchSE_mean/rs_loss_reconstruct_secret loss/events.out.tfevents.1709130663.autodl-container-b8bc118052-8d77dd6a.1871.3steg'
    FileNotFoundError: [Errno 2] No such file or directory: b'runs/Feb28_22-30-35_autodl-container-b8bc118052-8d77dd6aCombined_hinet_pretrain100_debug_MSG_imgPatchSE_mean/steg_loss_embedded loss/events.out.tfevents.1709130663.autodl-container-b8bc118052-8d77dd6a.1871.2steg'

Solution

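After a lot of head-scratching and searching online, I suspected the problem lay in TensorBoard's I/O calls. I then checked AutoDL's help documentation on network shared storage, and sure enough! The shared disk does allow sharing between instances, and it is redundantly backed up, which keeps our code safe. (I ran into this once: I had just finished changing my code and was training a model when the server suddenly reported that it was offline for maintenance and told me to contact customer service... Luckily my program was on the shared storage, so nothing was lost.) Its I/O performance, however, is only mediocre, and that affects model training.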
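One way to check the I/O suspicion yourself is to time many small appends on each disk. The sketch below is only an illustration, not anything from AutoDL's documentation: the helper name `time_small_appends` is made up, and `/root/autodl-tmp` is assumed to be the local data disk path on your instance. It reopens the file in append mode for every write, which is the access pattern visible in the traceback (`io.open(filename, "ab")`).

```python
import os
import tempfile
import time


def time_small_appends(directory, n_writes=200, payload_size=4096):
    """Append small chunks to a scratch file in `directory`; return elapsed seconds.

    Hypothetical helper for illustration only.
    """
    payload = b"x" * payload_size
    fd, path = tempfile.mkstemp(dir=directory, suffix=".iotest")
    os.close(fd)
    start = time.perf_counter()
    try:
        for _ in range(n_writes):
            # Reopen in append mode for every write, as the TensorBoard
            # writer in the traceback does.
            with open(path, "ab") as f:
                f.write(payload)
                f.flush()
                os.fsync(f.fileno())  # force the data out to the storage backend
        return time.perf_counter() - start
    finally:
        os.remove(path)  # clean up the scratch file


if __name__ == "__main__":
    # Both paths are assumptions; adjust them to your own instance.
    for d in ("/root/autodl-fs", "/root/autodl-tmp"):
        if os.path.isdir(d):
            print(f"{d}: {time_small_appends(d):.3f} s")
```

If the shared mount comes out much slower than the local disk, that is at least consistent with the explanation above, although a micro-benchmark like this cannot prove what caused the hang.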

Afterwards, I copied the program to the instance's local data disk, and the mysterious training stalls went away!
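If you still want the shared storage as a backup, one option is to write logs to the local disk during training and copy them to the shared mount only afterwards. This is a minimal sketch under that assumption, not what I actually ran: `sync_logs` is a hypothetical helper, and in a real run you would point your TensorBoard writer's log directory at a folder on the local data disk. The demo uses temporary directories as stand-ins for the two disks.

```python
import os
import shutil
import tempfile


def sync_logs(local_dir, shared_dir):
    """Copy everything under local_dir into shared_dir (hypothetical helper).

    Existing files with the same name are overwritten; requires Python 3.8+
    for dirs_exist_ok.
    """
    shutil.copytree(local_dir, shared_dir, dirs_exist_ok=True)


if __name__ == "__main__":
    # Temporary directories stand in for the local data disk and the shared mount.
    with tempfile.TemporaryDirectory() as local_root, \
            tempfile.TemporaryDirectory() as shared_root:
        run_dir = os.path.join(local_root, "runs", "demo")
        os.makedirs(run_dir)
        # Placeholder file in place of a real TensorBoard event file.
        with open(os.path.join(run_dir, "events.out.tfevents.demo"), "wb") as f:
            f.write(b"placeholder")
        # Training would write to local_root; sync once it has finished.
        sync_logs(os.path.join(local_root, "runs"),
                  os.path.join(shared_root, "runs"))
        print(os.listdir(os.path.join(shared_root, "runs", "demo")))
```

Calling `sync_logs` once per epoch or at the end of training keeps the frequent small writes on the fast local disk while the shared disk still receives a copy.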
