point_pillar_fcooper

- PointPillarFCooper

- PointPillars

- PillarVFE

- PFNLayer

- PointPillarScatter

- BaseBEVBackbone

- DownsampleConv

- DoubleConv

- SpatialFusion

- 检测头

(紧扣PointPillarFCooper的框架结构,一点一点看代码)

PointPillarFCooper

# -*- coding: utf-8 -*-

# Author: Runsheng Xu <rxx3386@ucla.edu>

# License: TDG-Attribution-NonCommercial-NoDistrib

import pprintimport torch.nn as nnfrom opencood.models.sub_modules.pillar_vfe import PillarVFE

from opencood.models.sub_modules.point_pillar_scatter import PointPillarScatter

from opencood.models.sub_modules.base_bev_backbone import BaseBEVBackbone

from opencood.models.sub_modules.downsample_conv import DownsampleConv

from opencood.models.sub_modules.naive_compress import NaiveCompressor

from opencood.models.fuse_modules.f_cooper_fuse import SpatialFusionclass PointPillarFCooper(nn.Module):"""F-Cooper implementation with point pillar backbone."""def __init__(self, args):super(PointPillarFCooper, self).__init__()print("args: ")pprint.pprint(args)self.max_cav = args['max_cav']# PIllar VFE Voxel Feature Encodingself.pillar_vfe = PillarVFE(args['pillar_vfe'],num_point_features=4,voxel_size=args['voxel_size'],point_cloud_range=args['lidar_range'])self.scatter = PointPillarScatter(args['point_pillar_scatter'])self.backbone = BaseBEVBackbone(args['base_bev_backbone'], 64)# used to downsample the feature map for efficient computationself.shrink_flag = Falseif 'shrink_header' in args:self.shrink_flag = Trueself.shrink_conv = DownsampleConv(args['shrink_header'])self.compression = Falseif args['compression'] > 0:self.compression = Trueself.naive_compressor = NaiveCompressor(256, args['compression'])self.fusion_net = SpatialFusion()self.cls_head = nn.Conv2d(128 * 2, args['anchor_number'],kernel_size=1)self.reg_head = nn.Conv2d(128 * 2, 7 * args['anchor_number'],kernel_size=1)if args['backbone_fix']:self.backbone_fix()- args: 其实就是从hypes_yaml配置文件里传来的参数

args:

{'anchor_number': 2,'backbone_fix': False,'base_bev_backbone': {'layer_nums': [3, 5, 8],'layer_strides': [2, 2, 2],'num_filters': [64, 128, 256],'num_upsample_filter': [128, 128, 128],'upsample_strides': [1, 2, 4]},'compression': 0,'lidar_range': [-140.8, -40, -3, 140.8, 40, 1],'max_cav': 5,'pillar_vfe': {'num_filters': [64],'use_absolute_xyz': True,'use_norm': True,'with_distance': False},'point_pillar_scatter': {'grid_size': array([704, 200, 1], dtype=int64),'num_features': 64},'shrink_header': {'dim': [256],'input_dim': 384,'kernal_size': [1],'padding': [0],'stride': [1]},'voxel_size': [0.4, 0.4, 4]} def backbone_fix(self):"""Fix the parameters of backbone during finetune on timedelay。"""for p in self.pillar_vfe.parameters():p.requires_grad = Falsefor p in self.scatter.parameters():p.requires_grad = Falsefor p in self.backbone.parameters():p.requires_grad = Falseif self.compression:for p in self.naive_compressor.parameters():p.requires_grad = Falseif self.shrink_flag:for p in self.shrink_conv.parameters():p.requires_grad = Falsefor p in self.cls_head.parameters():p.requires_grad = Falsefor p in self.reg_head.parameters():p.requires_grad = False

backbone_fix 方法用于在模型微调过程中固定骨干网络的参数,以避免它们被更新。

遍历了模型中各个需要固定参数的组件,并将它们的 requires_grad 属性设置为 False,这意味着这些参数不会被优化器更新。

我们来看 forward 方法:

def forward(self, data_dict):voxel_features = data_dict['processed_lidar']['voxel_features']voxel_coords = data_dict['processed_lidar']['voxel_coords']voxel_num_points = data_dict['processed_lidar']['voxel_num_points']record_len = data_dict['record_len']batch_dict = {'voxel_features': voxel_features,'voxel_coords': voxel_coords,'voxel_num_points': voxel_num_points,'record_len': record_len}# n, 4 -> n, cbatch_dict = self.pillar_vfe(batch_dict)# n, c -> N, C, H, Wbatch_dict = self.scatter(batch_dict)batch_dict = self.backbone(batch_dict)spatial_features_2d = batch_dict['spatial_features_2d']# downsample feature to reduce memoryif self.shrink_flag:spatial_features_2d = self.shrink_conv(spatial_features_2d)# compressorif self.compression:spatial_features_2d = self.naive_compressor(spatial_features_2d)fused_feature = self.fusion_net(spatial_features_2d, record_len)psm = self.cls_head(fused_feature)rm = self.reg_head(fused_feature)output_dict = {'psm': psm,'rm': rm}return output_dict

forward 方法定义了模型的前向传播过程。它接受一个数据字典作为输入,包含了经过处理的点云数据。

首先,从输入字典中提取出点云特征、体素坐标、体素点数等信息。

然后,依次将数据通过 pillar_vfe、scatter 和 backbone 这几个模块进行处理,得到了一个包含了空间特征的张量 spatial_features_2d。

如果启用了特征图的下采样(shrink_flag 为 True),则对 spatial_features_2d 进行下采样。

如果启用了特征压缩(compression 为 True),则对 spatial_features_2d 进行压缩。

最后,将压缩后的特征通过 fusion_net 进行融合,并通过 cls_head 和 reg_head 进行分类和回归,得到预测结果。

整个 forward 方法实现了模型的数据流动过程,从输入数据到最终输出结果的计算过程。

- PointPillarsFcooper结构

PointPillarFCooper((pillar_vfe): PillarVFE((pfn_layers): ModuleList((0): PFNLayer((linear): Linear(in_features=10, out_features=64, bias=False)(norm): BatchNorm1d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True))))(scatter): PointPillarScatter()(backbone): BaseBEVBackbone((blocks): ModuleList((0): Sequential((0): ZeroPad2d(padding=(1, 1, 1, 1), value=0.0)(1): Conv2d(64, 64, kernel_size=(3, 3), stride=(2, 2), bias=False)(2): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(3): ReLU()(4): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(5): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(6): ReLU()(7): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(8): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(9): ReLU()(10): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(11): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(12): ReLU())(1): Sequential((0): ZeroPad2d(padding=(1, 1, 1, 1), value=0.0)(1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), bias=False)(2): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(3): ReLU()(4): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(5): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(6): ReLU()(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(8): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(9): ReLU()(10): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(11): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(12): ReLU()(13): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(14): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(15): ReLU()(16): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(17): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(18): ReLU())(2): Sequential((0): ZeroPad2d(padding=(1, 1, 1, 1), value=0.0)(1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), bias=False)(2): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(3): ReLU()(4): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(5): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(6): ReLU()(7): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(8): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(9): ReLU()(10): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(11): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(12): ReLU()(13): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(14): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(15): ReLU()(16): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(17): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(18): ReLU()(19): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(20): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(21): ReLU()(22): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(23): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(24): ReLU()(25): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(26): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(27): ReLU()))(deblocks): ModuleList((0): Sequential((0): ConvTranspose2d(64, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(2): ReLU())(1): Sequential((0): ConvTranspose2d(128, 128, kernel_size=(2, 2), stride=(2, 2), bias=False)(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(2): ReLU())(2): Sequential((0): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(4, 4), bias=False)(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)(2): ReLU())))(shrink_conv): DownsampleConv((layers): ModuleList((0): DoubleConv((double_conv): Sequential((0): Conv2d(384, 256, kernel_size=(1, 1), stride=(1, 1))(1): ReLU(inplace=True)(2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(3): ReLU(inplace=True)))))(fusion_net): SpatialFusion()(cls_head): Conv2d(256, 2, kernel_size=(1, 1), stride=(1, 1))(reg_head): Conv2d(256, 14, kernel_size=(1, 1), stride=(1, 1))

)PointPillars

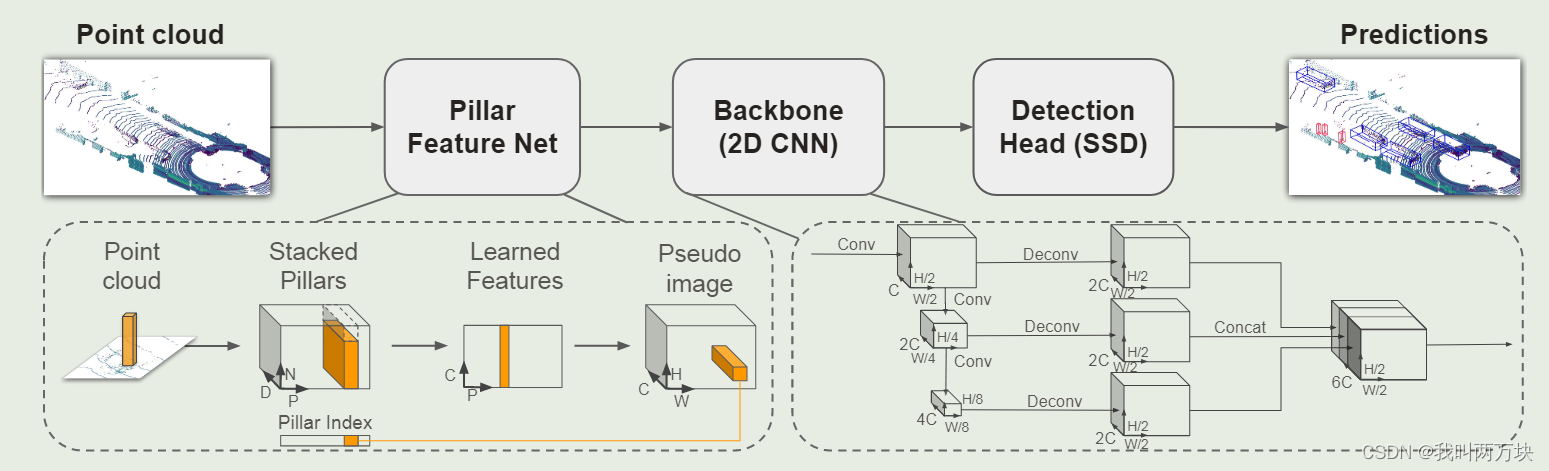

网络overview:网络的主要组成部分是PFN、Backbone和 SSD 检测头。原始点云被转换为堆叠的柱子张量和柱子索引张量。编码器使用堆叠的柱子来学习一组特征,这些特征可以分散回卷积神经网络的 2D 伪图像。检测头使用来自主干的特征来预测对象的 3D 边界框。请注意:在这里,我们展示了汽车网络的骨干维度。

PillarVFE

就是 voxel feature encoder:先对点云进行特征提取

VFE由PFNLayer(Pillar Feature Net)组成

- model_cfg

{'num_filters': [64],'use_absolute_xyz': True,'use_norm': True,'with_distance': False},

class PillarVFE(nn.Module):def __init__(self, model_cfg, num_point_features, voxel_size,point_cloud_range):super().__init__()self.model_cfg = model_cfgself.use_norm = self.model_cfg['use_norm']self.with_distance = self.model_cfg['with_distance']self.use_absolute_xyz = self.model_cfg['use_absolute_xyz']num_point_features += 6 if self.use_absolute_xyz else 3if self.with_distance:num_point_features += 1self.num_filters = self.model_cfg['num_filters']assert len(self.num_filters) > 0num_filters = [num_point_features] + list(self.num_filters)pfn_layers = []for i in range(len(num_filters) - 1):in_filters = num_filters[i]out_filters = num_filters[i + 1]pfn_layers.append(PFNLayer(in_filters, out_filters, self.use_norm,last_layer=(i >= len(num_filters) - 2)))self.pfn_layers = nn.ModuleList(pfn_layers)self.voxel_x = voxel_size[0]self.voxel_y = voxel_size[1]self.voxel_z = voxel_size[2]self.x_offset = self.voxel_x / 2 + point_cloud_range[0]self.y_offset = self.voxel_y / 2 + point_cloud_range[1]self.z_offset = self.voxel_z / 2 + point_cloud_range[2]

PFNLayer

这里只是一个全连接+归一化(好像和原来的算法有出入)

class PFNLayer(nn.Module):def __init__(self,in_channels,out_channels,use_norm=True,last_layer=False):super().__init__()self.last_vfe = last_layerself.use_norm = use_normif not self.last_vfe:out_channels = out_channels // 2if self.use_norm:self.linear = nn.Linear(in_channels, out_channels, bias=False)self.norm = nn.BatchNorm1d(out_channels, eps=1e-3, momentum=0.01)else:self.linear = nn.Linear(in_channels, out_channels, bias=True)self.part = 50000

PointPillarScatter

主要作用就是三维点云压缩成bev(鸟瞰图)

class PointPillarScatter(nn.Module):def __init__(self, model_cfg):super().__init__()self.model_cfg = model_cfgself.num_bev_features = self.model_cfg['num_features']self.nx, self.ny, self.nz = model_cfg['grid_size']assert self.nz == 1

- model_cfg:

{'grid_size': array([704, 200, 1], dtype=int64),'num_features': 64}

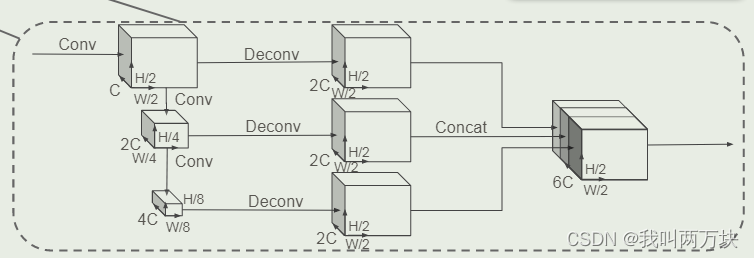

BaseBEVBackbone

参考这个图

3 * Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

↓

5 * Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

↓

8 * Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

3、5、8对应着layer_nums

- model_cfg

{'layer_nums': [3, 5, 8],'layer_strides': [2, 2, 2],'num_filters': [64, 128, 256],'num_upsample_filter': [128, 128, 128],'upsample_strides': [1, 2, 4]},

class BaseBEVBackbone(nn.Module):def __init__(self, model_cfg, input_channels):super().__init__()self.model_cfg = model_cfgif 'layer_nums' in self.model_cfg:assert len(self.model_cfg['layer_nums']) == \len(self.model_cfg['layer_strides']) == \len(self.model_cfg['num_filters'])layer_nums = self.model_cfg['layer_nums']layer_strides = self.model_cfg['layer_strides']num_filters = self.model_cfg['num_filters']else:layer_nums = layer_strides = num_filters = []if 'upsample_strides' in self.model_cfg:assert len(self.model_cfg['upsample_strides']) \== len(self.model_cfg['num_upsample_filter'])num_upsample_filters = self.model_cfg['num_upsample_filter']upsample_strides = self.model_cfg['upsample_strides']else:upsample_strides = num_upsample_filters = []num_levels = len(layer_nums) # len(layer_nums)个Sequentialc_in_list = [input_channels, *num_filters[:-1]]self.blocks = nn.ModuleList()self.deblocks = nn.ModuleList()for idx in range(num_levels):cur_layers = [nn.ZeroPad2d(1),nn.Conv2d(c_in_list[idx], num_filters[idx], kernel_size=3,stride=layer_strides[idx], padding=0, bias=False),nn.BatchNorm2d(num_filters[idx], eps=1e-3, momentum=0.01),nn.ReLU()]for k in range(layer_nums[idx]): # 每个Sequential里有多少个以下结构cur_layers.extend([nn.Conv2d(num_filters[idx], num_filters[idx],kernel_size=3, padding=1, bias=False),nn.BatchNorm2d(num_filters[idx], eps=1e-3, momentum=0.01),nn.ReLU()])self.blocks.append(nn.Sequential(*cur_layers))# 以下是deblock模块if len(upsample_strides) > 0:stride = upsample_strides[idx]if stride >= 1:self.deblocks.append(nn.Sequential(nn.ConvTranspose2d(num_filters[idx], num_upsample_filters[idx],upsample_strides[idx],stride=upsample_strides[idx], bias=False),nn.BatchNorm2d(num_upsample_filters[idx],eps=1e-3, momentum=0.01),nn.ReLU()))else:stride = np.round(1 / stride).astype(np.int)self.deblocks.append(nn.Sequential(nn.Conv2d(num_filters[idx], num_upsample_filters[idx],stride,stride=stride, bias=False),nn.BatchNorm2d(num_upsample_filters[idx], eps=1e-3,momentum=0.01),nn.ReLU()))c_in = sum(num_upsample_filters)if len(upsample_strides) > num_levels:self.deblocks.append(nn.Sequential(nn.ConvTranspose2d(c_in, c_in, upsample_strides[-1],stride=upsample_strides[-1], bias=False),nn.BatchNorm2d(c_in, eps=1e-3, momentum=0.01),nn.ReLU(),))self.num_bev_features = c_in

DownsampleConv

其实就是下采样(用了几个DoubleConv)

主要作用就是

- 降低计算成本: 在深度神经网络中,参数量和计算量通常会随着输入数据的尺寸增加而增加。通过下采样,可以降低每个层的输入数据的尺寸,从而降低网络的计算成本。

- 减少过拟合: 下采样可以通过减少输入数据的维度和数量来减少模型的复杂性,从而有助于降低过拟合的风险。过拟合是指模型在训练数据上表现良好,但在测试数据上表现较差的现象。

- 提高模型的泛化能力: 通过减少输入数据的空间分辨率,下采样有助于模型学习更加抽象和通用的特征,从而提高了模型对于不同数据的泛化能力。

- 加速训练和推理过程: 由于下采样可以降低网络的计算成本,因此可以加快模型的训练和推理过程。这对于处理大规模数据和实时应用特别有用。

class DownsampleConv(nn.Module):def __init__(self, config):super(DownsampleConv, self).__init__()self.layers = nn.ModuleList([])input_dim = config['input_dim']for (ksize, dim, stride, padding) in zip(config['kernal_size'],config['dim'],config['stride'],config['padding']):self.layers.append(DoubleConv(input_dim,dim,kernel_size=ksize,stride=stride,padding=padding))input_dim = dimconfig参数

{'dim': [256],'input_dim': 384,'kernal_size': [1],'padding': [0],'stride': [1]},

DoubleConv

其实就是两层卷积

class DoubleConv(nn.Module):"""Double convoltuionArgs:in_channels: input channel numout_channels: output channel num"""def __init__(self, in_channels, out_channels, kernel_size,stride, padding):super().__init__()self.double_conv = nn.Sequential(nn.Conv2d(in_channels, out_channels, kernel_size=kernel_size,stride=stride, padding=padding),nn.ReLU(inplace=True),nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1),nn.ReLU(inplace=True))

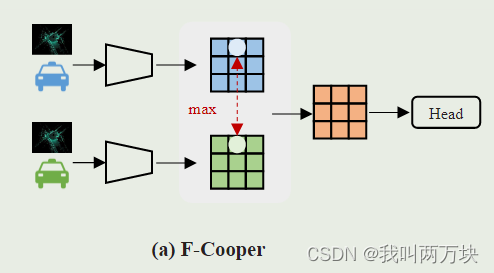

SpatialFusion

其实就是取最大来进行融合特征

class SpatialFusion(nn.Module):def __init__(self):super(SpatialFusion, self).__init__()def regroup(self, x, record_len):cum_sum_len = torch.cumsum(record_len, dim=0)split_x = torch.tensor_split(x, cum_sum_len[:-1].cpu())return split_xdef forward(self, x, record_len):# x: B, C, H, W, split x:[(B1, C, W, H), (B2, C, W, H)]split_x = self.regroup(x, record_len)out = []for xx in split_x:xx = torch.max(xx, dim=0, keepdim=True)[0]out.append(xx)return torch.cat(out, dim=0)

检测头

(cls_head): Conv2d(256, 2, kernel_size=(1, 1), stride=(1, 1))

(reg_head): Conv2d(256, 14, kernel_size=(1, 1), stride=(1, 1))

)

)

)

)