Installing Prometheus in a container environment, with WeCom (企业微信) alerting and Grafana dashboards

Install Prometheus (the monitoring server)

[root@Prometheus-Grafana prometheus]# mkdir /prometheus

[root@Prometheus-Grafana prometheus]# docker run -d --name test -P prom/prometheus

[root@Prometheus-Grafana prometheus]# docker cp test:/etc/prometheus/prometheus.yml /prometheus

[root@Prometheus-Grafana prometheus]# docker rm -f test

# The temporary test container only serves to copy out the default prometheus.yml. With --net host the container shares the host's network stack, so no -p 9090:9090 mapping is needed.

[root@Prometheus-Grafana prometheus]# docker run -d --name prometheus --net host -v /prometheus/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus

[root@Prometheus-Grafana prometheus]# pwd

/prometheus

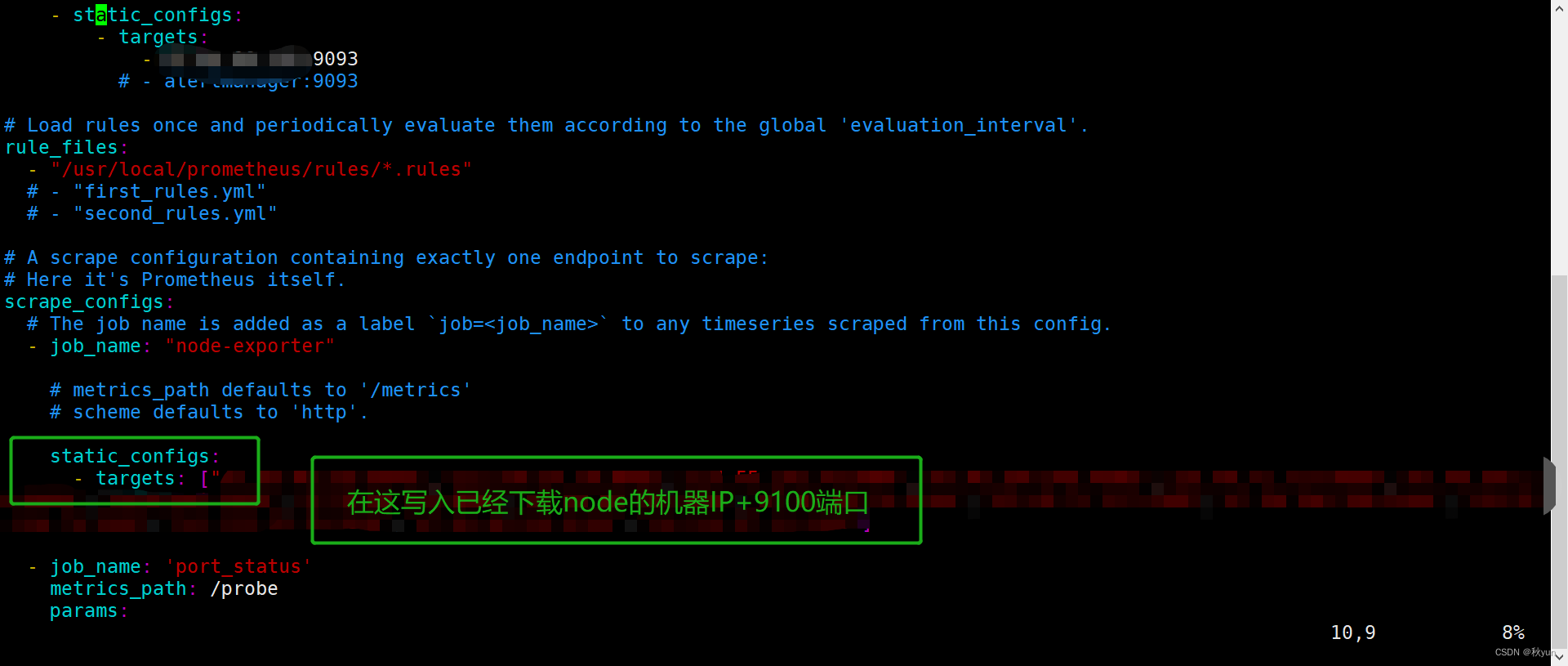

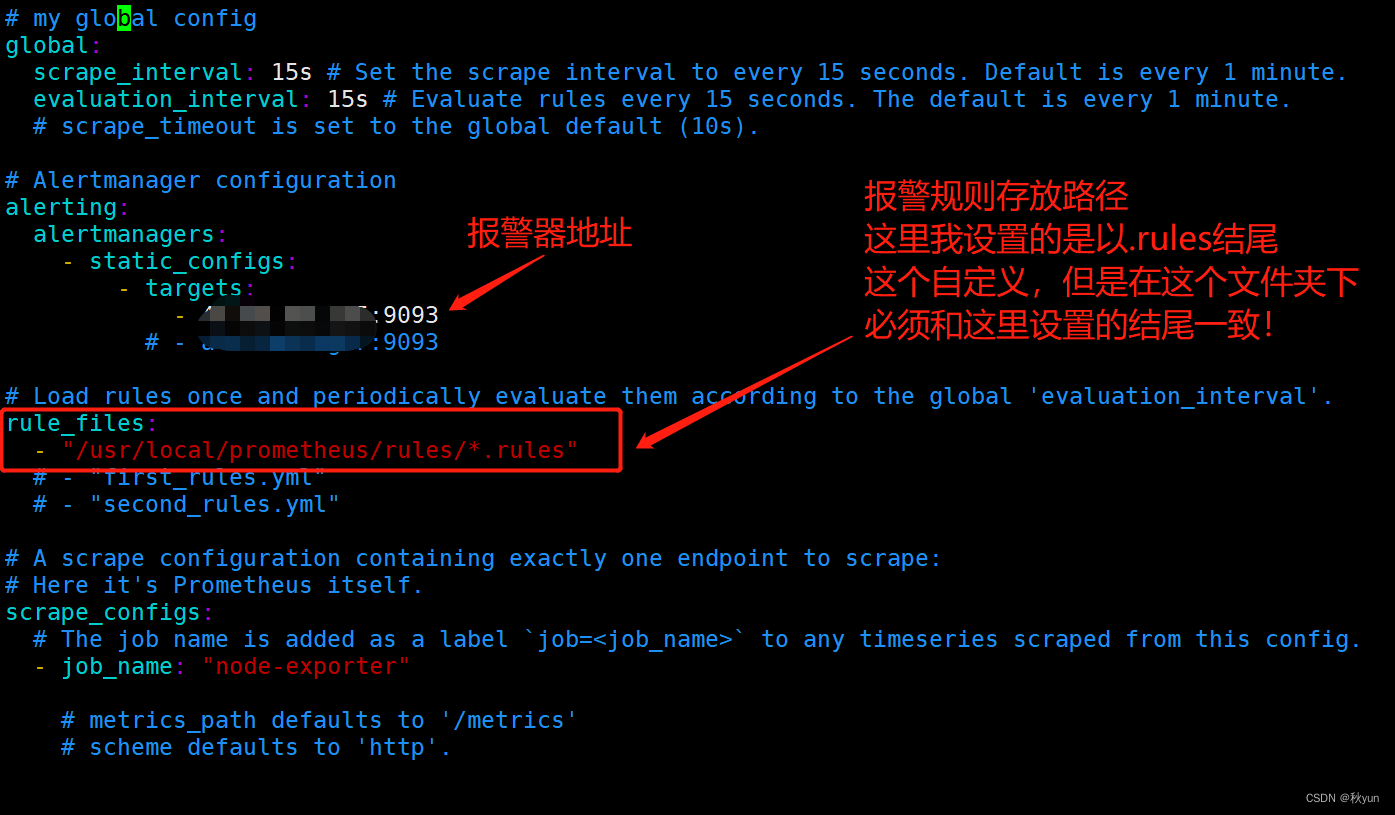

[root@Prometheus-Grafana prometheus]# vim prometheus.yml

Restart Prometheus after editing the file:

[root@Prometheus-Grafana prometheus]# docker restart prometheus

Then open http://<IP>:9090 in a browser.
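Before opening the browser, the server can also be checked from the command line, since Prometheus serves a health endpoint at /-/healthy. The following is a minimal sketch that assumes curl is installed; the helper names prom_health_url and check_prometheus are made up for this example.

```shell
# Build the URL of Prometheus's built-in health endpoint.
# $1 = host or IP, $2 = port (defaults to 9090, the port used above).
prom_health_url() {
  local host="$1" port="${2:-9090}"
  printf 'http://%s:%s/-/healthy\n' "$host" "$port"
}

# Return 0 if the endpoint answers with a 2xx status, non-zero otherwise.
# This function needs network access and is not called by the sketch itself.
check_prometheus() {
  curl --silent --fail --max-time 5 --output /dev/null "$(prom_health_url "$@")"
}
```

For example, `check_prometheus 127.0.0.1 && echo "Prometheus is up"` run on the Prometheus host should print the message once the container is running.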

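Install node-exporter (collects host hardware metrics)

// Run this on every host

docker run -d --name node_exporter -v /proc:/host/proc -v /sys:/host/sys -v /:/rootfs --net=host prom/node-exporter --path.procfs /host/proc --path.sysfs /host/sys --collector.filesystem.ignored-mount-points "^/(sys|proc|dev|host|etc)($|/)" --web.listen-address :9100

// 9100 is node-exporter's default port. The container is named node_exporter because the alert test later in this article stops and starts it by that name.

--path.procfs, --path.sysfs: tell node-exporter to read the host's /proc and /sys through the /host prefix mounted into the container

--collector.filesystem.ignored-mount-points: mount points to exclude from the filesystem metrics (recent node-exporter releases rename this flag to --collector.filesystem.mount-points-exclude)

--net=host: replaces port mapping. Normally a service on container port 80 is only reachable after publishing it with -p; with --net=host the container shares the host's network and port 80 is reachable directly.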

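Once node-exporter is running, edit the prometheus.yml configuration file so that Prometheus scrapes it.

# Restart Prometheus

docker restart prometheus

# prometheus.yml is Prometheus's configuration file, so every change to it only takes effect after a restart.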
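The text does not show what to add to prometheus.yml, so here is one possible shape of the entry. This sketch prints a scrape job for node-exporter; the job name node-exporter is chosen because the rule files later in this article select on job="node-exporter", while the helper name node_exporter_job and the IP addresses are placeholders invented for the example.

```shell
# Print a scrape job for node-exporter; every argument is one host IP.
# Paste the output under the existing `scrape_configs:` key of prometheus.yml.
node_exporter_job() {
  echo "  - job_name: 'node-exporter'"
  echo "    static_configs:"
  echo "      - targets:"
  local ip
  for ip in "$@"; do
    echo "          - '${ip}:9100'"
  done
}

# Example with two placeholder addresses (replace them with your own hosts).
node_exporter_job 192.168.1.10 192.168.1.11
```

After pasting the output into the file, `docker exec prometheus promtool check config /etc/prometheus/prometheus.yml` should report whether the file still parses (promtool ships in the prom/prometheus image), which is worth doing before the restart.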

Install cAdvisor (collects container metrics)

docker run \
--volume=/:/rootfs:ro \
--volume=/var/run:/var/run:rw \
--volume=/sys:/sys:ro \
--volume=/var/lib/docker/:/var/lib/docker:ro \
--volume=/dev/disk/:/dev/disk:ro \
--publish=8080:8080 \
--detach=true \
--name=cadvisor \
google/cadvisor:latest

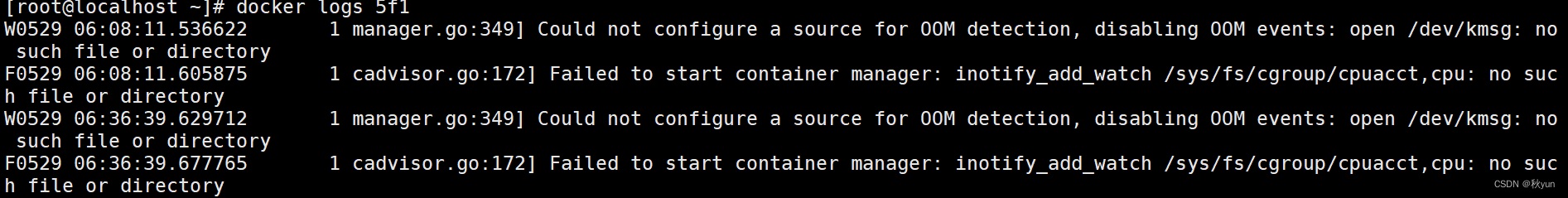

Note: if the container fails to start and docker ps does not list it, work through the fix below.

The container did not start.

# In that case:

[root@localhost ~]# mount -o remount,rw '/sys/fs/cgroup'

[root@localhost ~]# ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu

[root@localhost ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f16fb0b2a91 google/cadvisor "/usr/bin/cadvisor..." 29 minutes ago Exited (255) 32 seconds ago cadvisor

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@localhost ~]# docker start 5f1

5f1

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f16fb0b2a91 google/cadvisor "/usr/bin/cadvisor..." 29 minutes ago Up Less than a second cadvisor

# The first two commands are the actual fix. The error occurs because the cgroup filesystem is mounted read-only.

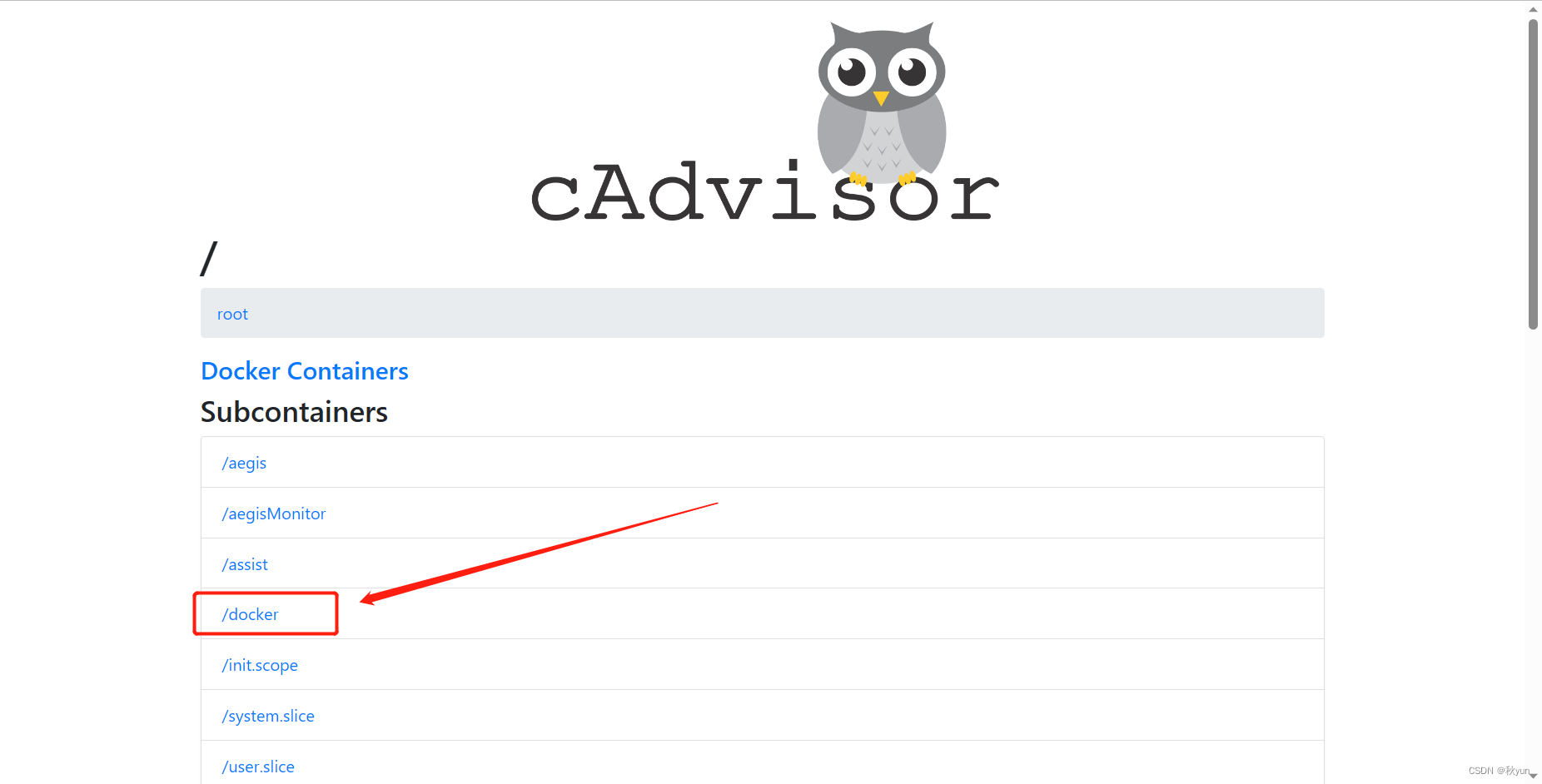

# Once the container is running, open http://<IP>:8080 to view cAdvisor

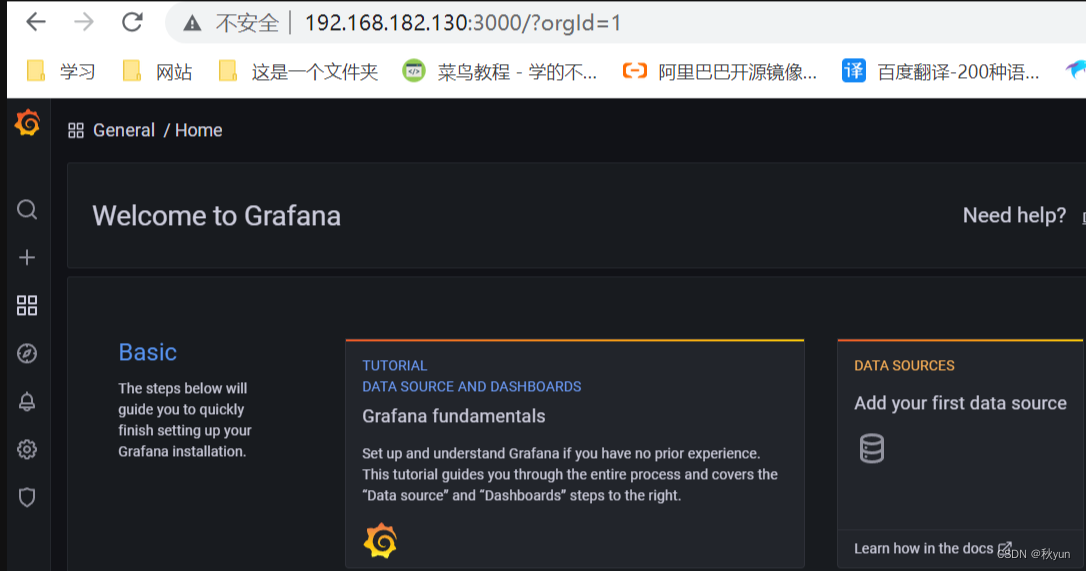
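Besides the web page, cAdvisor exposes its data in Prometheus text format at /metrics on the same port. As a rough check that container metrics are really being produced, the sketch below counts the container_* samples in such a page. The helper name count_container_metrics is invented for this example, and curl is assumed to be available for the usage line.

```shell
# Count the container_* samples in a Prometheus text-format metrics page
# read from stdin. Comment lines start with '#', so the regex skips them.
# `|| true` keeps the exit status at 0 when grep finds nothing and prints 0.
count_container_metrics() {
  grep -c '^container_' || true
}
```

Usage: `curl -s http://<IP>:8080/metrics | count_container_metrics`. A result of 0 suggests cAdvisor is up but cannot see the containers, which usually points back at the volume mounts.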

Install Grafana (dashboards)

mkdir /grafana

chmod 777 -R /grafana

docker run -d -p 3000:3000 --name grafana -v /grafana:/var/lib/grafana -e "GF_SECURITY_ADMIN_PASSWORD=123.com" grafana/grafana

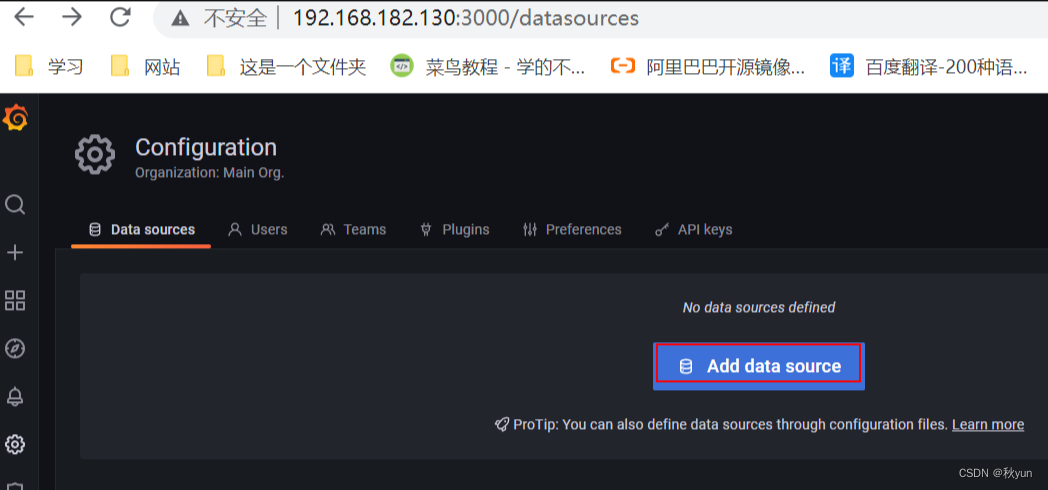

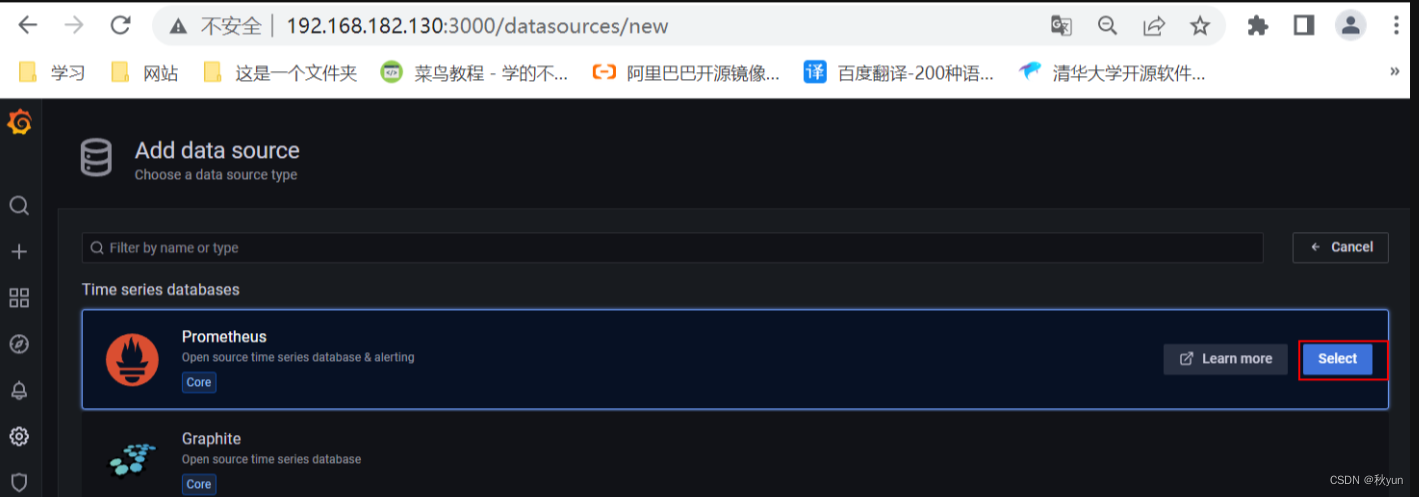

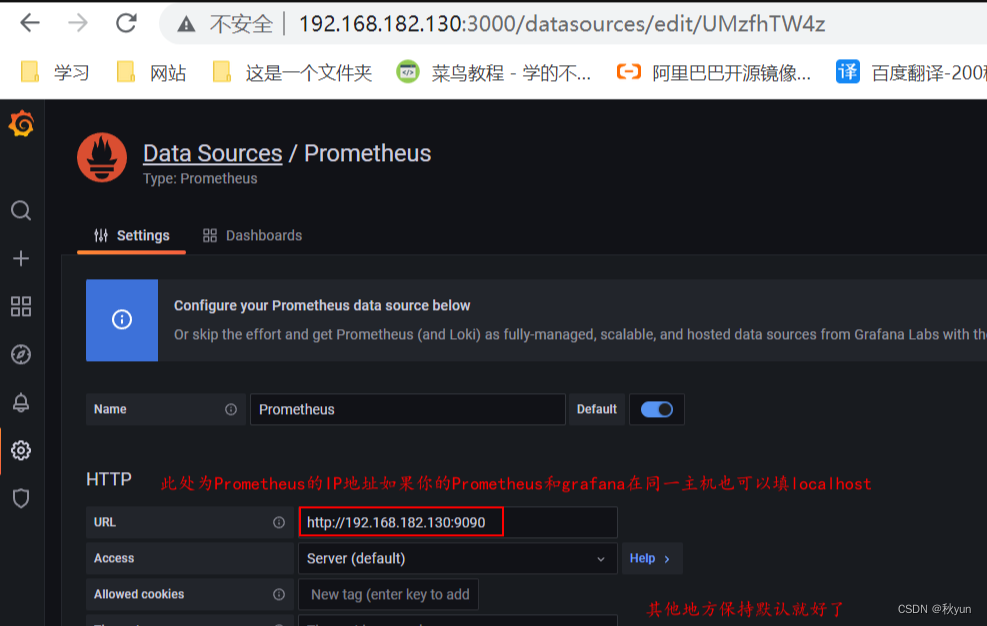

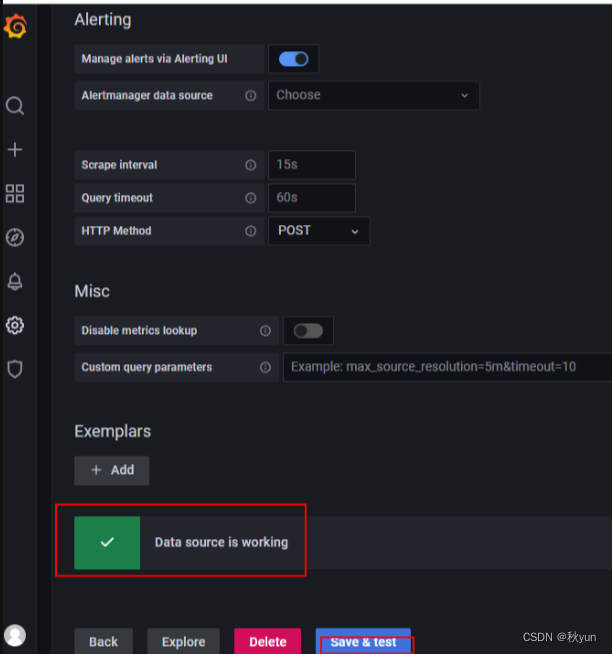

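// Add a data source

Note: this is an easy place to go wrong. Do not enter port 8080 or 9100; the data source must point at Prometheus itself on port 9090. Follow the screenshots below.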
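The same data source can also be created without the UI through Grafana's HTTP API (POST /api/datasources). The sketch below only builds the JSON body; the helper name grafana_datasource_json and the data source name "Prometheus" are choices made for this example, not something the article prescribes.

```shell
# Print the JSON body for Grafana's "create data source" API.
# $1 = Prometheus host or IP. The port is fixed to 9090 on purpose:
# Grafana queries Prometheus itself, not the exporters on 9100 or 8080.
grafana_datasource_json() {
  printf '{"name":"Prometheus","type":"prometheus","access":"proxy","url":"http://%s:9090","isDefault":true}\n' "$1"
}
```

Usage, assuming the admin password set in the docker run command above: `grafana_datasource_json <Prometheus-IP> | curl -s -u admin:123.com -H 'Content-Type: application/json' -d @- http://<Grafana-IP>:3000/api/datasources`.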

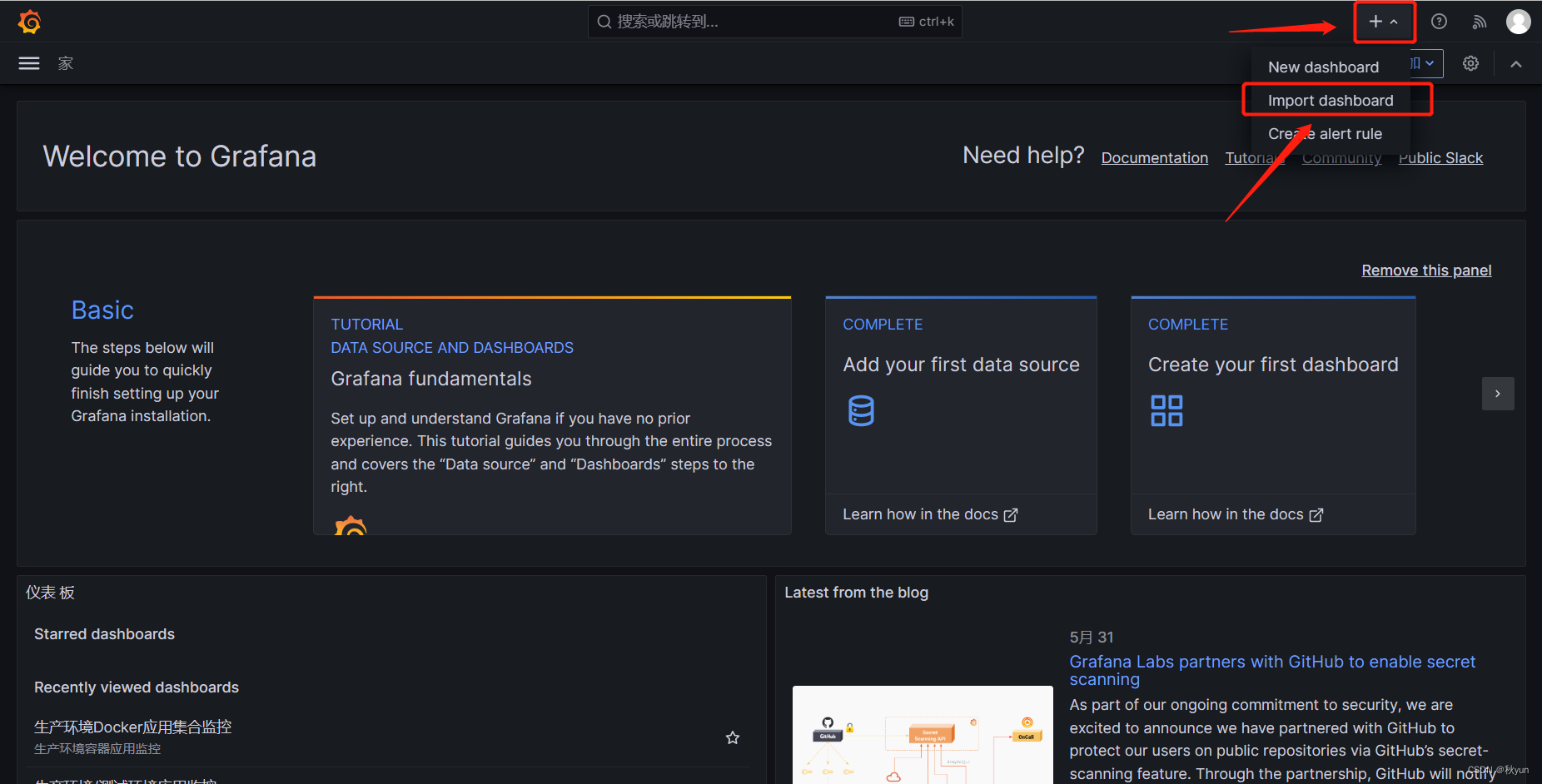

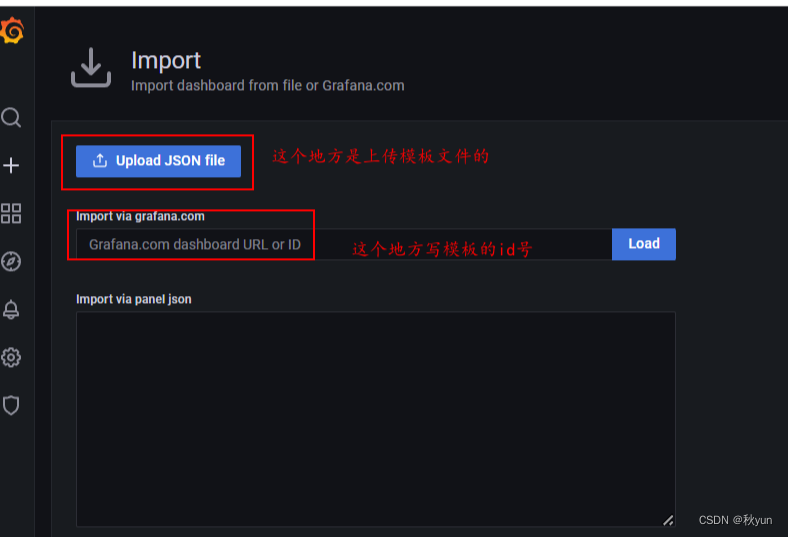

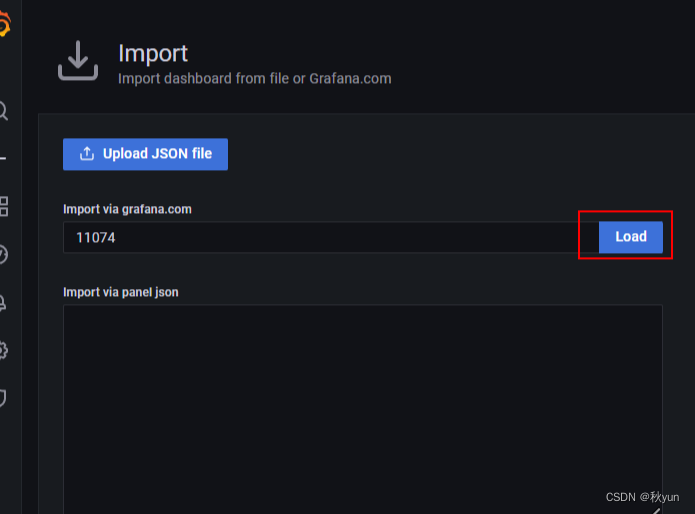

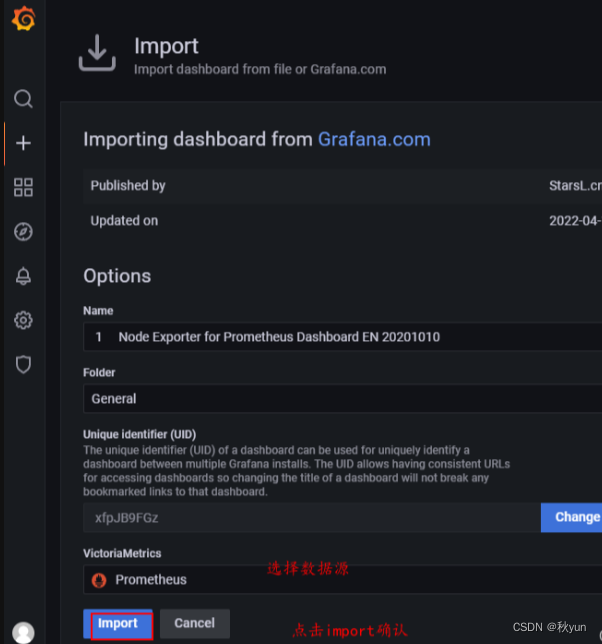

这里直接套用grafana的模板

https://grafana.com/grafana/dashboards/11074-node-exporter-for-prometheus-dashboard-en-v20201010/

#有两种方式使用模板

1 通过模板的id号进行导入

2将模板下载到本地再进行导入

#这里推荐直接输入ID号

这是最终展示的监控硬件画面

因为我们要看的是容器的信息,所以找一个和容器相关的模板导入。

通过关键字搜索相关模板

https://grafana.com/grafana/dashboards/10619-docker-host-container-overview/

操作和上面导入模板操作一样,也是导入刚刚配置的数据源

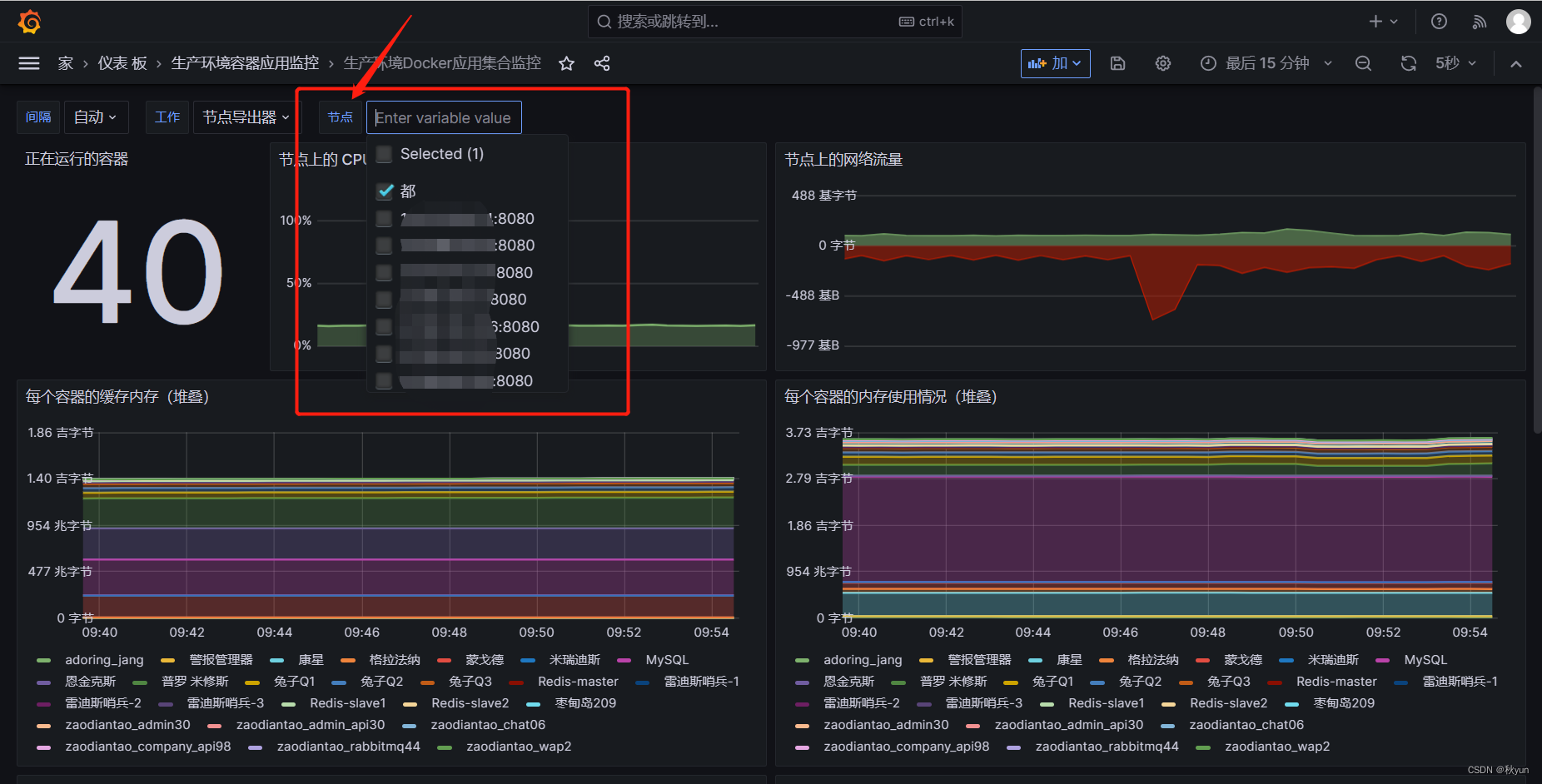

这是最终展示的页面

在这里也可以去选择节点

做完以上监控以及展示我们的步骤已经进行了一半了,接下来就是去配置监控报警项

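Install Alertmanager (alerting)

[root@prometheus ~]# mkdir /alertmanager    // create a data directory

[root@prometheus ~]# docker run -d --name test -p 9093:9093 prom/alertmanager

# The temporary container is only used to obtain the default alertmanager.yml

[root@prometheus ~]# docker cp test:/etc/alertmanager/alertmanager.yml /alertmanager/

[root@prometheus ~]# docker rm -f test

[root@prometheus ~]# docker run -d --name alertmanager -p 9093:9093 -v /alertmanager/alertmanager.yml:/etc/alertmanager/alertmanager.yml -v /alertmanager/template/:/etc/alertmanager/template prom/alertmanager

# The most important sections of the configuration file:

global: global settings, including the timeout after which an alert counts as resolved, SMTP settings, and the API endpoints of the notification channels

route: the alert routing policy. It is a tree that is matched depth-first, from left to right.

receivers: who receives the notifications, for example email, wechat, slack, or webhook

inhibit_rules: inhibition rules. While alerts matching one set of matchers (the source) are firing, alerts matching another set (the target) are muted.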

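Configure the Prometheus side

[root@Prometheus-Grafana prometheus]# mkdir /prometheus/rules

[root@Prometheus-Grafana prometheus]# cd /prometheus/rules

[root@Prometheus-Grafana rules]# pwd

/prometheus/rules

[root@Prometheus-Grafana rules]# vim blackbox-alert.rules

groups:
  - name: blackbox-alert
    rules:
      - alert: port-status
        expr: probe_success == 0
        for: 10s
        labels:
          severity: 'warning'
        annotations:
          description: 'API service: {{$labels.instance}} port check failed, the service is unavailable, please investigate'
          summary: 'API service: {{$labels.instance}} '

# Note: this is the first of three rule files; the two below must be created as well. This one is used later for container alerting and is created up front.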

[root@Prometheus-Grafana rules]# vim node-exporter-record.rules

groups:
  - name: node-exporter-record
    rules:
      - expr: up{job="node-exporter"}
        record: node_exporter:up
        labels:
          desc: "Whether the node is online: 1 = online, 0 = offline"
          unit: " "
          job: "node-exporter"
      - expr: time() - node_boot_time_seconds{}
        record: node_exporter:node_uptime
        labels:
          desc: "Node uptime"
          unit: "s"
          job: "node-exporter"

      ##############################################
      # cpu #
      - expr: (1 - avg by (environment,instance) (irate(node_cpu_seconds_total{job="node-exporter",mode="idle"}[5m]))) * 100
        record: node_exporter:cpu:total:percent
        labels:
          desc: "Total CPU usage of the node in percent"
          unit: "%"
          job: "node-exporter"
      - expr: (avg by (environment,instance) (irate(node_cpu_seconds_total{job="node-exporter",mode="idle"}[5m]))) * 100
        record: node_exporter:cpu:idle:percent
        labels:
          desc: "CPU idle percentage of the node"
          unit: "%"
          job: "node-exporter"
      - expr: (avg by (environment,instance) (irate(node_cpu_seconds_total{job="node-exporter",mode="iowait"}[5m]))) * 100
        record: node_exporter:cpu:iowait:percent
        labels:
          desc: "CPU iowait percentage of the node"
          unit: "%"
          job: "node-exporter"
      - expr: (avg by (environment,instance) (irate(node_cpu_seconds_total{job="node-exporter",mode="system"}[5m]))) * 100
        record: node_exporter:cpu:system:percent
        labels:
          desc: "CPU system percentage of the node"
          unit: "%"
          job: "node-exporter"
      - expr: (avg by (environment,instance) (irate(node_cpu_seconds_total{job="node-exporter",mode="user"}[5m]))) * 100
        record: node_exporter:cpu:user:percent
        labels:
          desc: "CPU user percentage of the node"
          unit: "%"
          job: "node-exporter"
      - expr: (avg by (environment,instance) (irate(node_cpu_seconds_total{job="node-exporter",mode=~"softirq|nice|irq|steal"}[5m]))) * 100
        record: node_exporter:cpu:other:percent
        labels:
          desc: "CPU percentage of the node spent in other modes"
          unit: "%"
          job: "node-exporter"

      ##############################################
      # memory #
      - expr: node_memory_MemTotal_bytes{job="node-exporter"}
        record: node_exporter:memory:total
        labels:
          desc: "Total memory of the node"
          unit: byte
          job: "node-exporter"
      - expr: node_memory_MemFree_bytes{job="node-exporter"}
        record: node_exporter:memory:free
        labels:
          desc: "Free memory of the node"
          unit: byte
          job: "node-exporter"
      - expr: node_memory_MemTotal_bytes{job="node-exporter"} - node_memory_MemFree_bytes{job="node-exporter"}
        record: node_exporter:memory:used
        labels:
          desc: "Used memory of the node"
          unit: byte
          job: "node-exporter"
      - expr: node_memory_MemTotal_bytes{job="node-exporter"} - node_memory_MemAvailable_bytes{job="node-exporter"}
        record: node_exporter:memory:actualused
        labels:
          desc: "Memory actually used by user processes on the node"
          unit: byte
          job: "node-exporter"
      - expr: (1-(node_memory_MemAvailable_bytes{job="node-exporter"} / (node_memory_MemTotal_bytes{job="node-exporter"})))* 100
        record: node_exporter:memory:used:percent
        labels:
          desc: "Memory usage of the node in percent"
          unit: "%"
          job: "node-exporter"
      - expr: ((node_memory_MemAvailable_bytes{job="node-exporter"} / (node_memory_MemTotal_bytes{job="node-exporter"})))* 100
        record: node_exporter:memory:free:percent
        labels:
          desc: "Remaining memory of the node in percent"
          unit: "%"
          job: "node-exporter"

      ##############################################
      # load #
      - expr: sum by (instance) (node_load1{job="node-exporter"})
        record: node_exporter:load:load1
        labels:
          desc: "1-minute system load"
          unit: " "
          job: "node-exporter"
      - expr: sum by (instance) (node_load5{job="node-exporter"})
        record: node_exporter:load:load5
        labels:
          desc: "5-minute system load"
          unit: " "
          job: "node-exporter"
      - expr: sum by (instance) (node_load15{job="node-exporter"})
        record: node_exporter:load:load15
        labels:
          desc: "15-minute system load"
          unit: " "
          job: "node-exporter"
      ##############################################

      # disk #
      - expr: node_filesystem_size_bytes{job="node-exporter" ,fstype=~"ext4|xfs"}
        record: node_exporter:disk:usage:total
        labels:
          desc: "Total disk capacity of the node"
          unit: byte
          job: "node-exporter"
      - expr: node_filesystem_avail_bytes{job="node-exporter",fstype=~"ext4|xfs"}
        record: node_exporter:disk:usage:free
        labels:
          desc: "Free disk space of the node"
          unit: byte
          job: "node-exporter"
      - expr: node_filesystem_size_bytes{job="node-exporter",fstype=~"ext4|xfs"} - node_filesystem_avail_bytes{job="node-exporter",fstype=~"ext4|xfs"}
        record: node_exporter:disk:usage:used
        labels:
          desc: "Used disk space of the node"
          unit: byte
          job: "node-exporter"
      - expr: (1 - node_filesystem_avail_bytes{job="node-exporter",fstype=~"ext4|xfs"} / node_filesystem_size_bytes{job="node-exporter",fstype=~"ext4|xfs"}) * 100
        record: node_exporter:disk:used:percent
        labels:
          desc: "Disk usage of the node in percent"
          unit: "%"
          job: "node-exporter"
      - expr: irate(node_disk_reads_completed_total{job="node-exporter"}[1m])
        record: node_exporter:disk:read:count:rate
        labels:
          desc: "Disk read operations per second on the node"
          unit: "ops/s"
          job: "node-exporter"
      - expr: irate(node_disk_writes_completed_total{job="node-exporter"}[1m])
        record: node_exporter:disk:write:count:rate
        labels:
          desc: "Disk write operations per second on the node"
          unit: "ops/s"
          job: "node-exporter"
      - expr: (irate(node_disk_read_bytes_total{job="node-exporter"}[1m]))/1024/1024
        record: node_exporter:disk:read:mb:rate
        labels:
          desc: "Device read throughput of the node in MB/s"
          unit: "MB/s"
          job: "node-exporter"
      - expr: (irate(node_disk_written_bytes_total{job="node-exporter"}[1m]))/1024/1024
        record: node_exporter:disk:write:mb:rate
        labels:
          desc: "Device write throughput of the node in MB/s"
          unit: "MB/s"
          job: "node-exporter"
      ##############################################

      # filesystem #
      - expr: (1 -node_filesystem_files_free{job="node-exporter",fstype=~"ext4|xfs"} / node_filesystem_files{job="node-exporter",fstype=~"ext4|xfs"}) * 100
        record: node_exporter:filesystem:used:percent
        labels:
          desc: "Inode usage of the node in percent"
          unit: "%"
          job: "node-exporter"
      ##############################################

      # filefd #
      - expr: node_filefd_allocated{job="node-exporter"}
        record: node_exporter:filefd_allocated:count
        labels:
          desc: "Number of open file descriptors on the node"
          unit: "count"
          job: "node-exporter"
      - expr: node_filefd_allocated{job="node-exporter"}/node_filefd_maximum{job="node-exporter"} * 100
        record: node_exporter:filefd_allocated:percent
        labels:
          desc: "Open file descriptors on the node in percent of the maximum"
          unit: "%"
          job: "node-exporter"
      ##############################################

      # network #
      # node_network_*_bytes_total counts bytes, so the two rate rules below multiply by 8 to report bits.
      - expr: avg by (environment,instance,device) (irate(node_network_receive_bytes_total{device=~"eth0|eth1|ens33|ens37"}[1m])) * 8
        record: node_exporter:network:netin:bit:rate
        labels:
          desc: "Bits received per second by the node's NIC"
          unit: "bit/s"
          job: "node-exporter"
      - expr: avg by (environment,instance,device) (irate(node_network_transmit_bytes_total{device=~"eth0|eth1|ens33|ens37"}[1m])) * 8
        record: node_exporter:network:netout:bit:rate
        labels:
          desc: "Bits sent per second by the node's NIC"
          unit: "bit/s"
          job: "node-exporter"
      - expr: avg by (environment,instance,device) (irate(node_network_receive_packets_total{device=~"eth0|eth1|ens33|ens37"}[1m]))
        record: node_exporter:network:netin:packet:rate
        labels:
          desc: "Packets received per second by the node's NIC"
          unit: "count/s"
          job: "node-exporter"
      - expr: avg by (environment,instance,device) (irate(node_network_transmit_packets_total{device=~"eth0|eth1|ens33|ens37"}[1m]))
        record: node_exporter:network:netout:packet:rate
        labels:
          desc: "Packets sent per second by the node's NIC"
          unit: "count/s"
          job: "node-exporter"
      - expr: avg by (environment,instance,device) (irate(node_network_receive_errs_total{device=~"eth0|eth1|ens33|ens37"}[1m]))
        record: node_exporter:network:netin:error:rate
        labels:
          desc: "Receive errors per second detected by the node's device driver"
          unit: "count/s"
          job: "node-exporter"
      - expr: avg by (environment,instance,device) (irate(node_network_transmit_errs_total{device=~"eth0|eth1|ens33|ens37"}[1m]))
        record: node_exporter:network:netout:error:rate
        labels:
          desc: "Transmit errors per second detected by the node's device driver"
          unit: "count/s"
          job: "node-exporter"
      - expr: node_tcp_connection_states{job="node-exporter", state="established"}
        record: node_exporter:network:tcp:established:count
        labels:
          desc: "Number of established TCP connections on the node"
          unit: "count"
          job: "node-exporter"
      - expr: node_tcp_connection_states{job="node-exporter", state="time_wait"}
        record: node_exporter:network:tcp:timewait:count
        labels:
          desc: "Number of TCP connections in time_wait on the node"
          unit: "count"
          job: "node-exporter"
      - expr: sum by (environment,instance) (node_tcp_connection_states{job="node-exporter"})
        record: node_exporter:network:tcp:total:count
        labels:
          desc: "Total number of TCP connections on the node"
          unit: "count"
          job: "node-exporter"
      ##############################################

      # process #
      - expr: node_processes_state{state="Z"}
        record: node_exporter:process:zoom:total:count
        labels:
          desc: "Number of zombie processes on the node"
          unit: "count"
          job: "node-exporter"
      ##############################################

      # other #
      - expr: abs(node_timex_offset_seconds{job="node-exporter"})
        record: node_exporter:time:offset
        labels:
          desc: "Clock offset of the node"
          unit: "s"
          job: "node-exporter"
      ##############################################
      - expr: count by (instance) ( count by (instance,cpu) (node_cpu_seconds_total{ mode='system'}) )
        record: node_exporter:cpu:count

[root@Prometheus-Grafana rules]# vim node-exporter-alert.rules

groups:
  - name: node-exporter-alert
    rules:
      - alert: node-exporter-down
        expr: node_exporter:up == 0
        for: 1m
        labels:
          severity: 'critical'
        annotations:
          summary: "instance: {{ $labels.instance }} is down"
          description: "instance: {{ $labels.instance }} \n- job: {{ $labels.job }} has been down for 1 minute."
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-cpu-high
        expr: node_exporter:cpu:total:percent > 80
        for: 3m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} CPU usage is above {{ $value }}"
          description: "instance: {{ $labels.instance }} \n- job: {{ $labels.job }} CPU usage has stayed above 80% for three minutes."
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-cpu-iowait-high
        expr: node_exporter:cpu:iowait:percent >= 12
        for: 3m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} CPU iowait is above {{ $value }}"
          description: "instance: {{ $labels.instance }} \n- job: {{ $labels.job }} CPU iowait has stayed above 12% for three minutes."
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      # load1 carries the extra desc/unit/job labels while cpu:count only has
      # instance, so the two sides are matched on the instance label alone.
      - alert: node-exporter-load-load1-high
        expr: (node_exporter:load:load1) > on (instance) (node_exporter:cpu:count) * 1.2
        for: 3m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} load1 is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-memory-high
        expr: node_exporter:memory:used:percent > 85
        for: 3m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} memory usage is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-disk-high
        expr: node_exporter:disk:used:percent > 88
        for: 10m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} disk usage is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-disk-read-count-high
        expr: node_exporter:disk:read:count:rate > 3000
        for: 2m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} read IOPS is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-disk-write-count-high
        expr: node_exporter:disk:write:count:rate > 3000
        for: 2m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} write IOPS is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-disk-read-mb-high
        expr: node_exporter:disk:read:mb:rate > 60
        for: 2m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} read throughput is above {{ $value }}"
          description: ""
          instance: "{{ $labels.instance }}"
          value: "{{ $value }}"
      - alert: node-exporter-disk-write-mb-high
        expr: node_exporter:disk:write:mb:rate > 60
        for: 2m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} write throughput is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-filefd-allocated-percent-high
        expr: node_exporter:filefd_allocated:percent > 80
        for: 10m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} open file descriptors are above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-network-netin-error-rate-high
        expr: node_exporter:network:netin:error:rate > 4
        for: 1m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} inbound packet error rate is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-network-netin-packet-rate-high
        expr: node_exporter:network:netin:packet:rate > 35000
        for: 1m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} inbound packet rate is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-network-netout-packet-rate-high
        expr: node_exporter:network:netout:packet:rate > 35000
        for: 1m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} outbound packet rate is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-network-tcp-total-count-high
        expr: node_exporter:network:tcp:total:count > 40000
        for: 1m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} TCP connection count is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-process-zoom-total-count-high
        expr: node_exporter:process:zoom:total:count > 10
        for: 10m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} zombie process count is above {{ $value }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
      - alert: node-exporter-time-offset-high
        expr: node_exporter:time:offset > 0.03
        for: 2m
        labels:
          severity: info
        annotations:
          summary: "instance: {{ $labels.instance }} {{ $labels.desc }} {{ $value }} {{ $labels.unit }}"
          description: ""
          value: "{{ $value }}"
          instance: "{{ $labels.instance }}"
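Two things are needed before Prometheus can use these files, and neither is shown in the steps so far: the container started earlier only mounts prometheus.yml, so /prometheus/rules has to be mounted as well, and prometheus.yml has to reference the rule files and Alertmanager. The sketch below prints the two relevant sections. The container path /etc/prometheus/rules and the helper name alerting_sections are assumptions of this example; port 9093 is the Alertmanager port published above.

```shell
# Print the prometheus.yml sections that load the rule files and point
# Prometheus at Alertmanager. $1 = Alertmanager host or IP (port 9093).
# The container path /etc/prometheus/rules is an assumption of this sketch.
alerting_sections() {
  cat <<EOF
rule_files:
  - /etc/prometheus/rules/*.rules

alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - '$1:9093'
EOF
}
```

The default prometheus.yml already contains empty `rule_files:` and `alerting:` keys, so the printed values should be merged into those keys rather than appended as duplicates. To make the directory visible inside the container, one option is to recreate it with an extra mount: `docker rm -f prometheus`, then `docker run -d --name prometheus --net host -v /prometheus/prometheus.yml:/etc/prometheus/prometheus.yml -v /prometheus/rules:/etc/prometheus/rules prom/prometheus`.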

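With these rules in place the alert triggers are ready, and the next step is the alert recipient. Sending to WeCom directly from Alertmanager is cumbersome because it runs into WeCom's trusted-IP restriction, which can hold you up for a long time. It is simpler to let a forwarder that WeCom accepts relay the alerts to WeCom.

Install PrometheusAlert (the forwarder)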

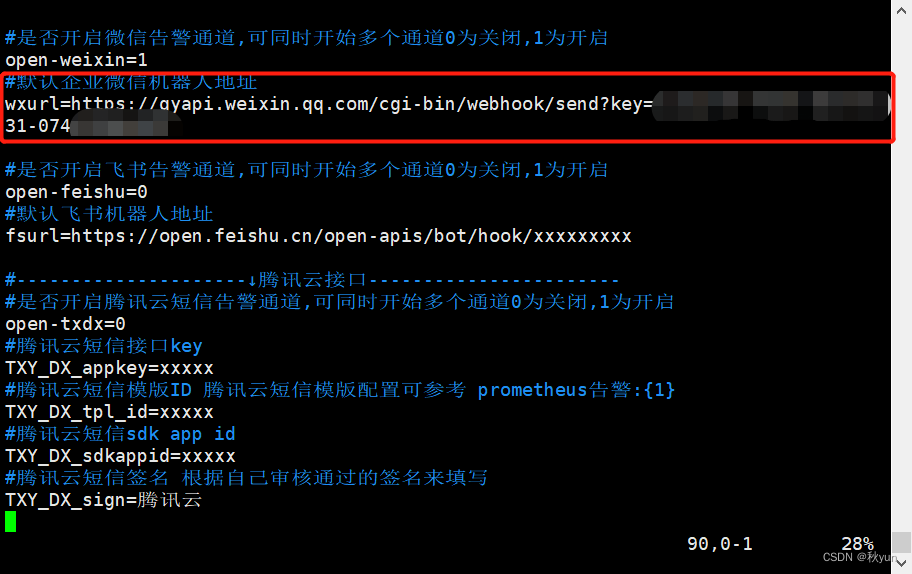

[root@JenkinsGitlab linux]# wget https://github.com/feiyu563/PrometheusAlert/releases/download/v4.8.1/linux.zip

[root@JenkinsGitlab linux]# unzip linux.zip

[root@JenkinsGitlab linux]# cd linux/

[root@JenkinsGitlab linux]# vim conf/app.conf

# PrometheusAlert is deployed directly on the host here rather than in a container, because running it in a container is considerably more work.

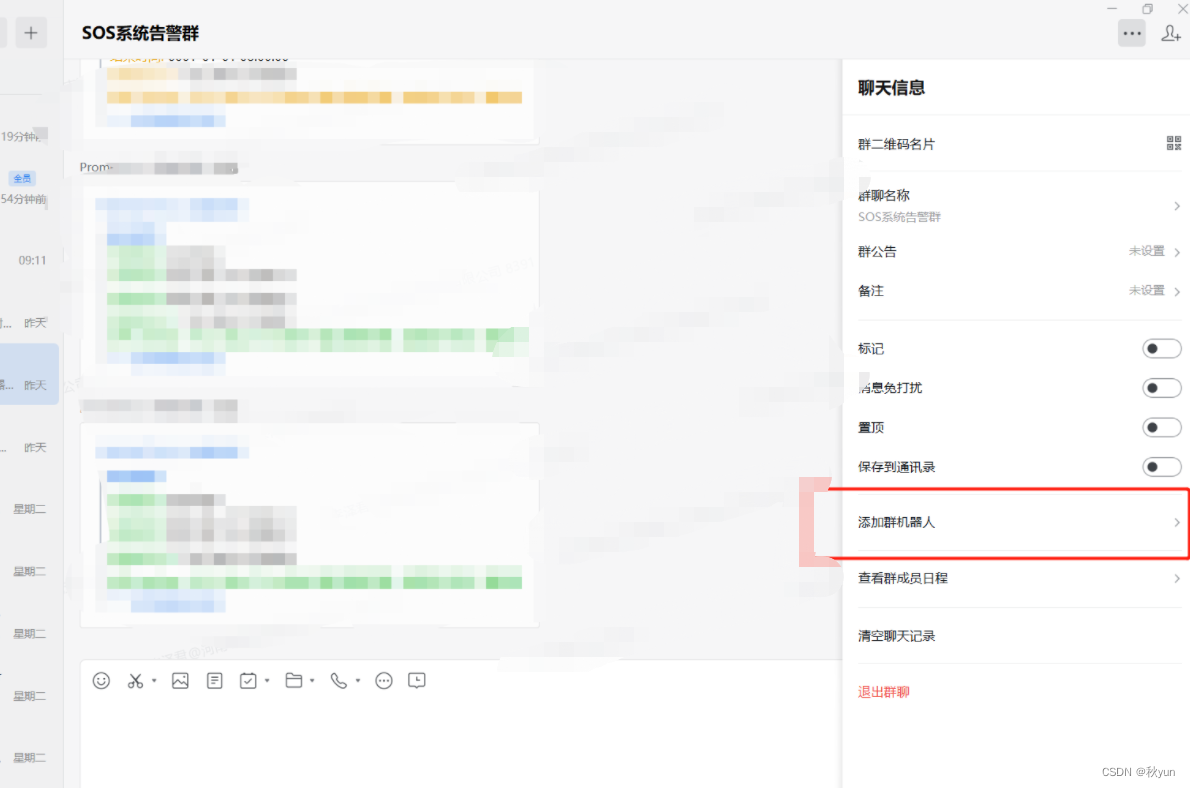

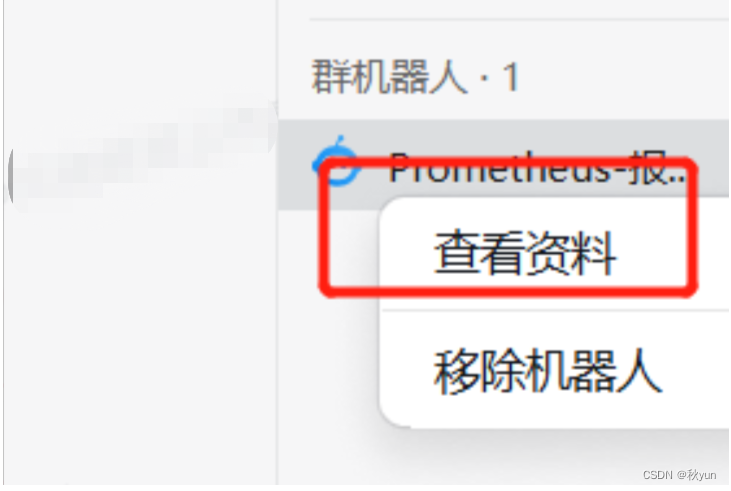

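The robot address is obtained by adding a group robot in a WeCom group chat.

Copy the address and paste it into the configuration file edited above.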

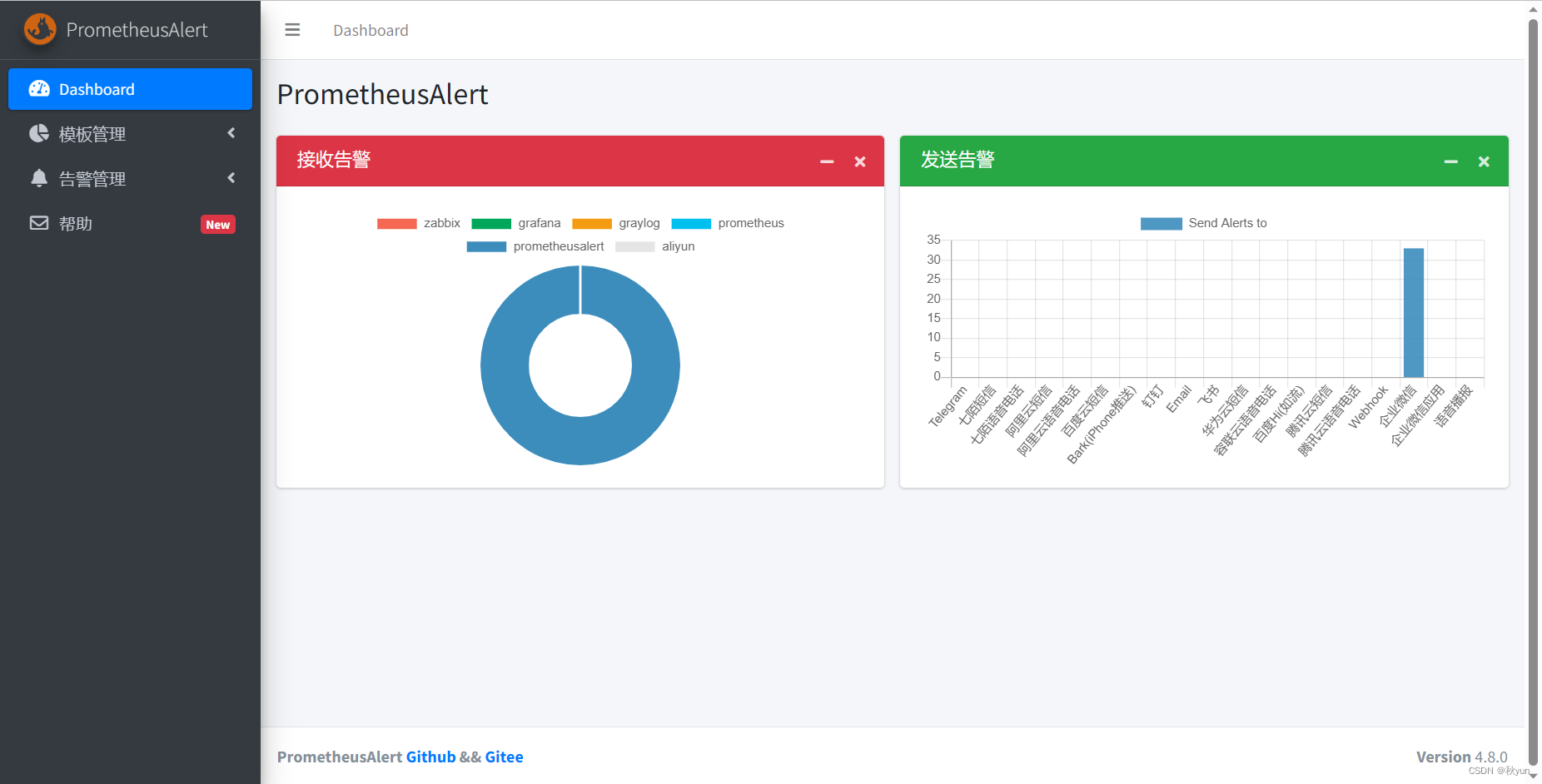

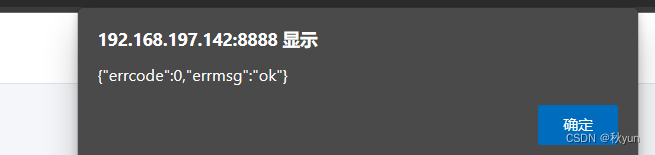

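[root@JenkinsGitlab linux]# chmod +x PrometheusAlert

# Start it in the background

[root@JenkinsGitlab linux]# nohup ./PrometheusAlert &

# To stop it, look up the process and kill it

[root@JenkinsGitlab linux]# ps -ef | grep PrometheusAlert

[root@JenkinsGitlab linux]# kill -9 <PID>

# Open http://<IP>:<port> with the port set in app.conf. For example, with port 9555, browse to http://<IP>:9555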

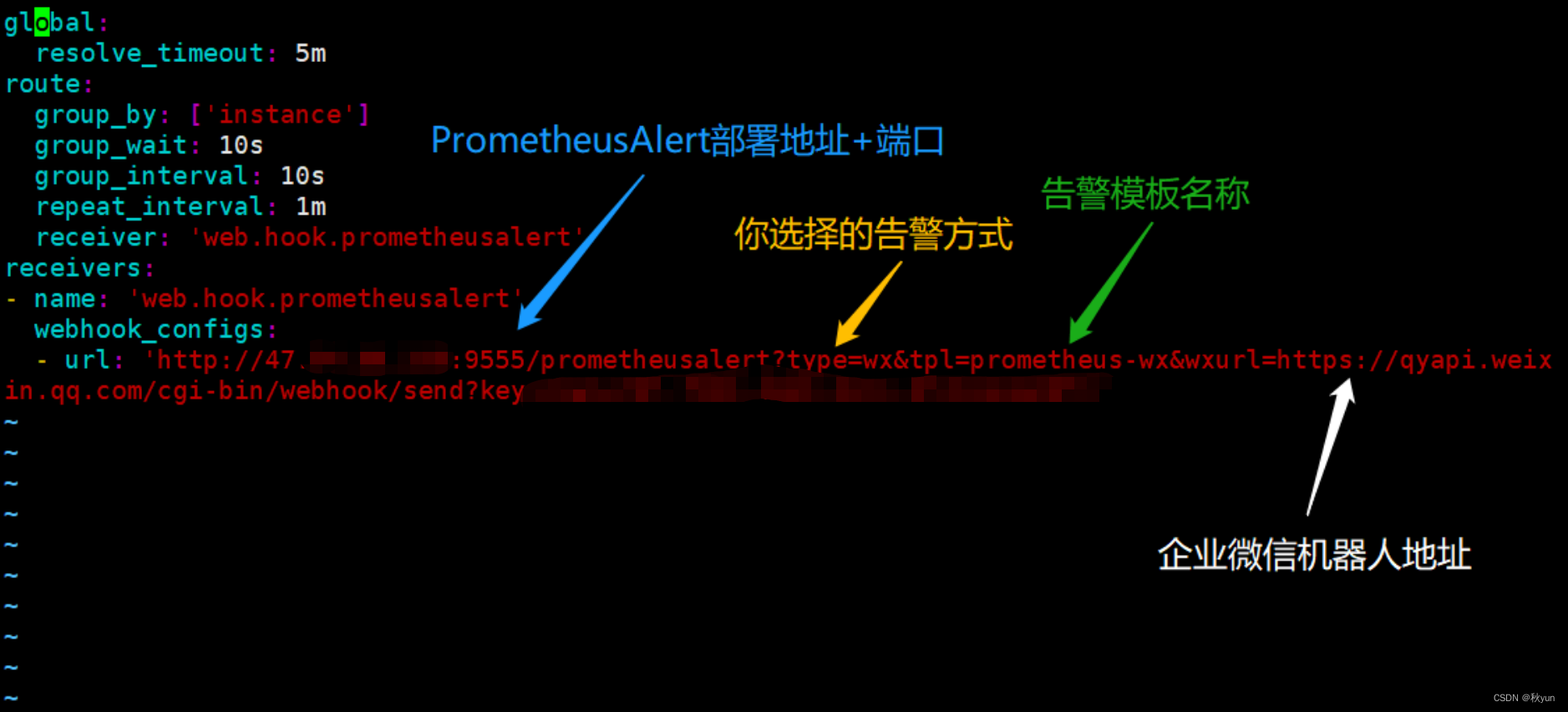

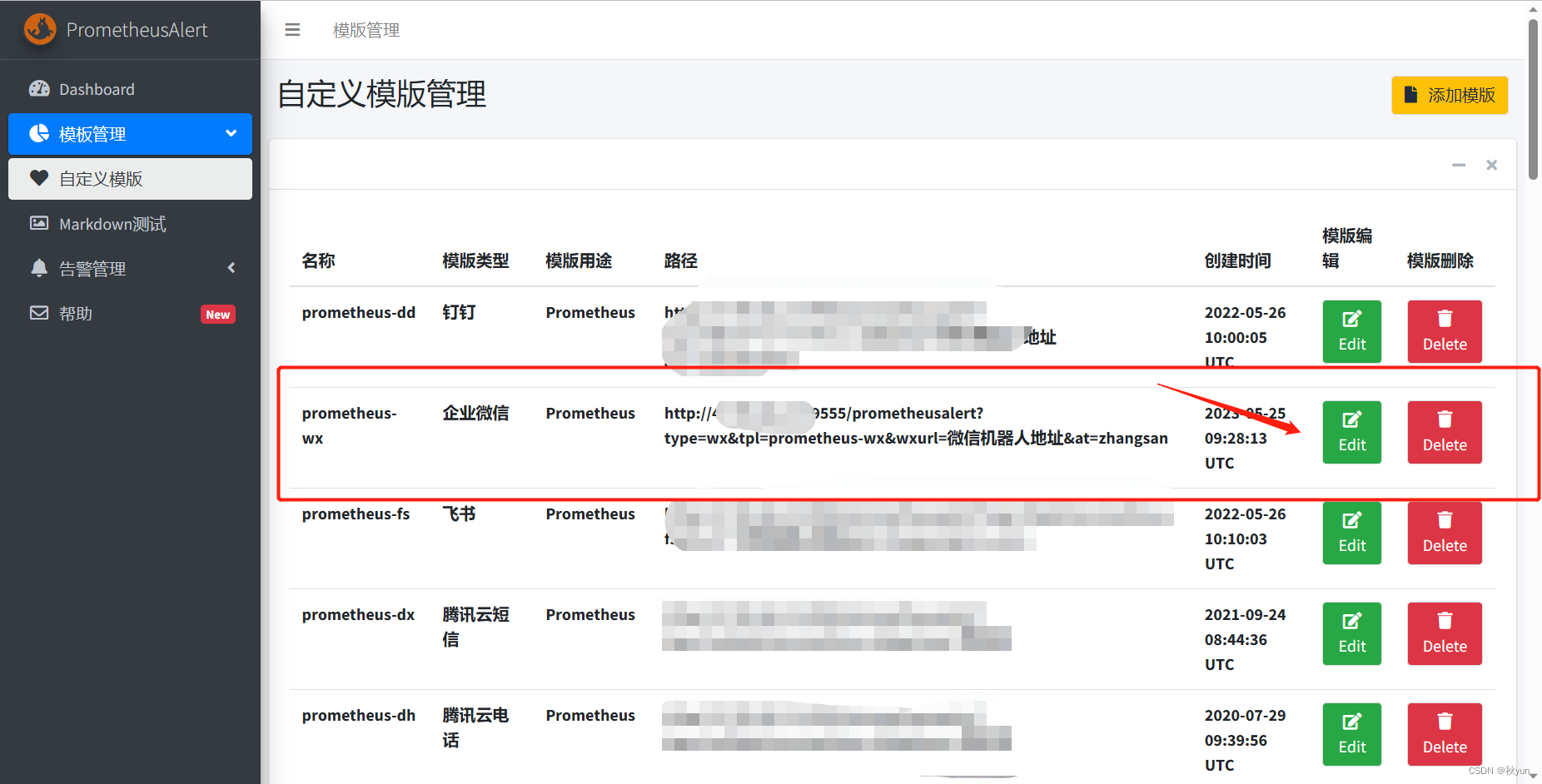

With PrometheusAlert deployed, change the Alertmanager configuration so that all alerts are forwarded to PrometheusAlert.

[root@JenkinsGitlab linux]# vim /alertmanager/alertmanager.yml

global:
  resolve_timeout: 5m

route:
  group_by: ['instance']
  group_wait: 10s
  group_interval: 10s
  repeat_interval: 1m
  receiver: 'web.hook.prometheusalert'

receivers:
  - name: 'web.hook.prometheusalert'
    webhook_configs:
      - url: 'fill this in from the screenshot below'

# Restart alertmanager

[root@JenkinsGitlab linux]# docker restart alertmanager

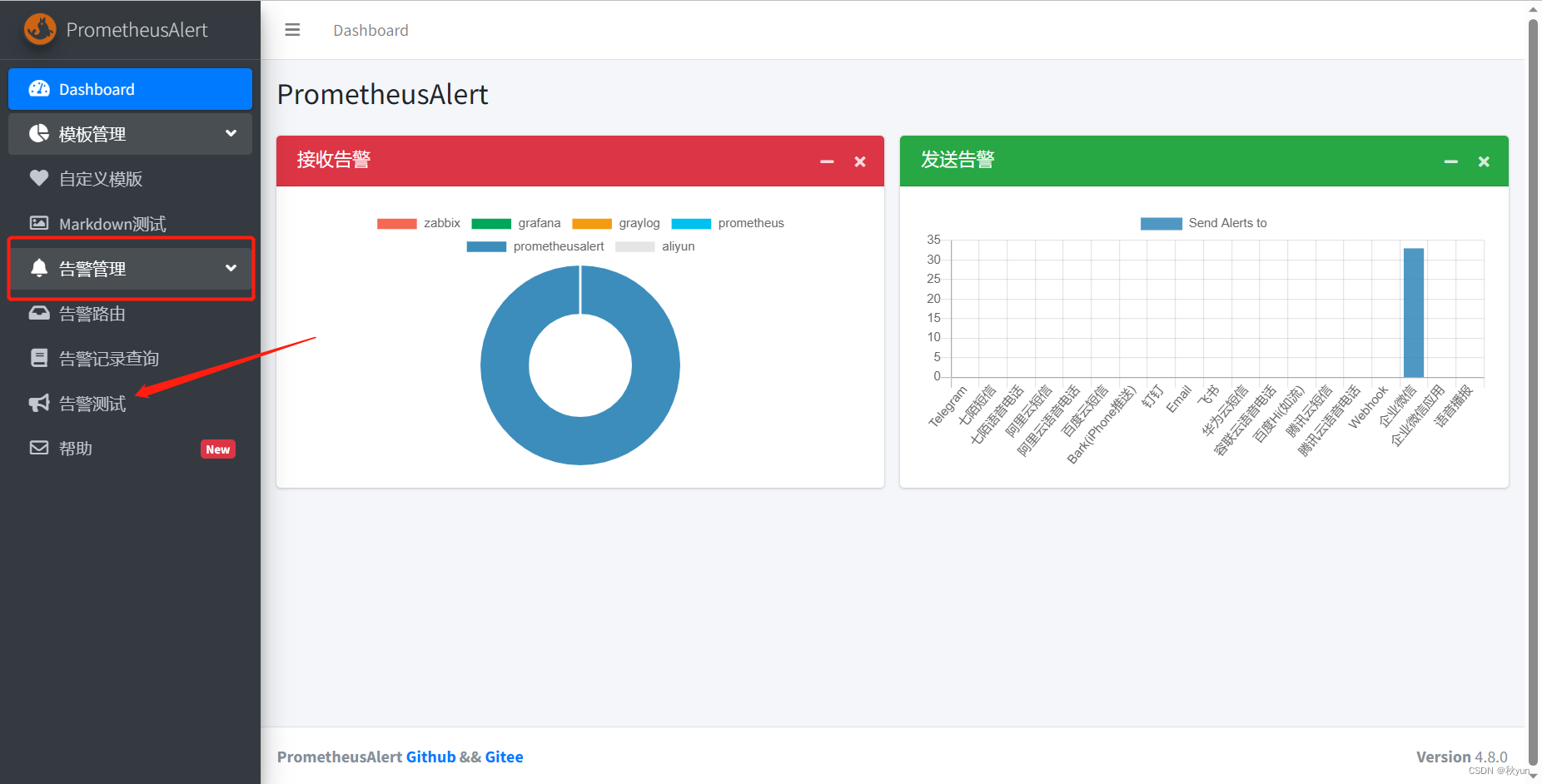

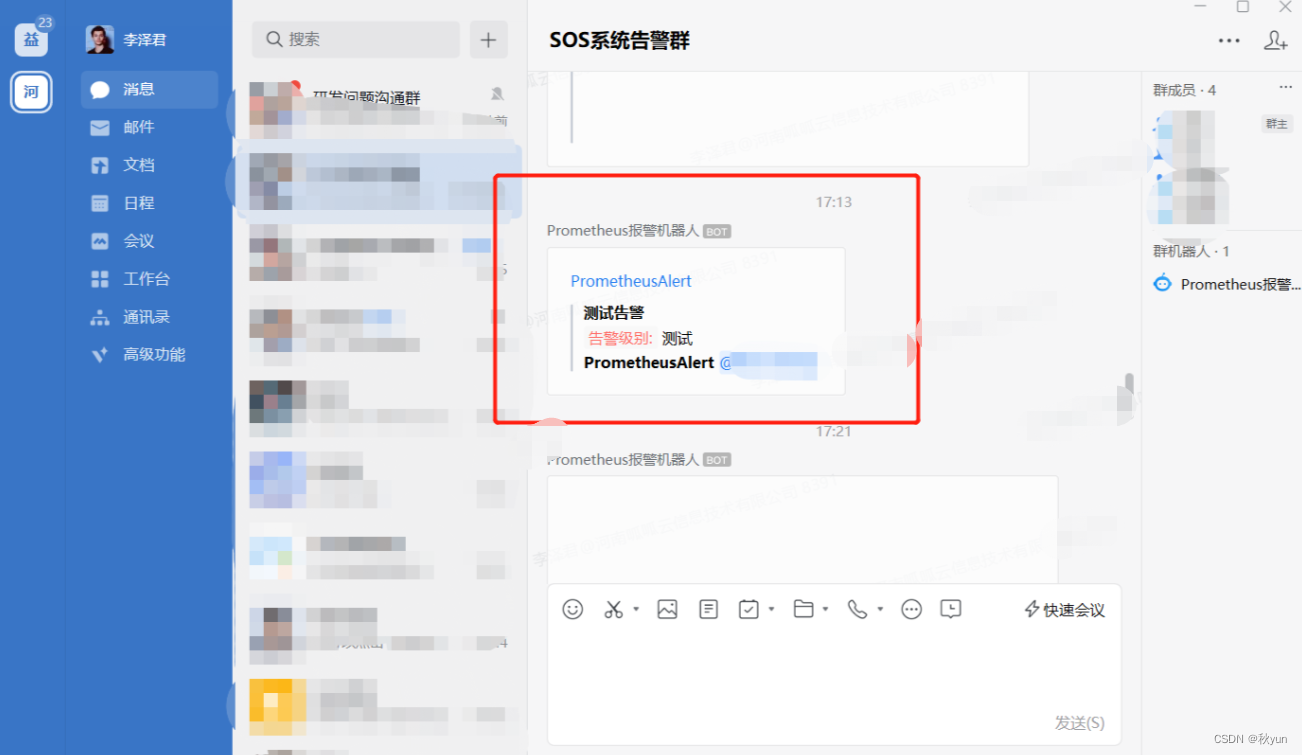

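Click Test, then check the WeCom group for the test message. (The test message arrives within seconds, whereas real alerts are delayed by roughly 2 minutes.)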
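The Test button only exercises the last hop from PrometheusAlert to WeCom. To exercise the hop from Alertmanager as well, a hand-made alert can be posted to Alertmanager's v2 API (POST /api/v2/alerts). The sketch below builds such a request body; the helper name test_alert_json and the label values are invented for this example.

```shell
# Print a one-element alert list in the format accepted by Alertmanager's
# v2 API. $1 = alert name, $2 = instance label, $3 = severity label.
test_alert_json() {
  printf '[{"labels":{"alertname":"%s","instance":"%s","severity":"%s"},"annotations":{"description":"manual test alert"}}]\n' "$1" "$2" "$3"
}
```

Usage: `test_alert_json manual-test 192.168.1.10:9100 info | curl -s -H 'Content-Type: application/json' -d @- http://<Alertmanager-IP>:9093/api/v2/alerts`. If the route and webhook are set up as above, the message should reach the WeCom group after the group_wait interval.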

On the PrometheusAlert page, open template management and select the WeCom template:

{{ $var := .externalURL}}{{ range $k,$v:=.alerts }}{{if eq $v.status "resolved"}}[PROMETHEUS-Resolved]({{$v.generatorURL}})

> **[{{$v.labels.alertname}}]({{$var}})**

> <font color="info">Severity:</font> {{$v.labels.severity}}

> <font color="info">Started at:</font> {{GetCSTtime $v.startsAt}}

> <font color="info">Ended at:</font> {{GetCSTtime $v.endsAt}}

> <font color="info">Affected host:</font> {{$v.labels.instance}}

> <font color="info">**{{$v.annotations.description}}**</font>{{else}}[PROMETHEUS-Alert]({{$v.generatorURL}})

> **[{{$v.labels.alertname}}]({{$var}})**

> <font color="warning">Severity:</font> {{$v.labels.severity}}

> <font color="warning">Started at:</font> {{GetCSTtime $v.startsAt}}

> <font color="warning">Ended at:</font> {{GetCSTtime $v.endsAt}}

> <font color="warning">Affected host:</font> {{$v.labels.instance}}

> <font color="warning">**{{$v.annotations.description}}**</font>{{end}}{{ end }}

{{ $urimsg:=""}}{{ range $key,$value:=.commonLabels }}{{$urimsg = print $urimsg $key "%3D%22" $value "%22%2C" }}{{end}}[*** Click to silence this alert]({{$var}}/#/silences/new?filter=%7B{{SplitString $urimsg 0 -3}}%7D)

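After the alert rules are configured, restart Prometheus:

[root@JenkinsGitlab prometheus]# docker restart prometheus

Then go to a node host and stop node-exporter:

[root@node1 ~]# docker stop node_exporter

Within 1-2 minutes of stopping the service, the alert message arrives.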

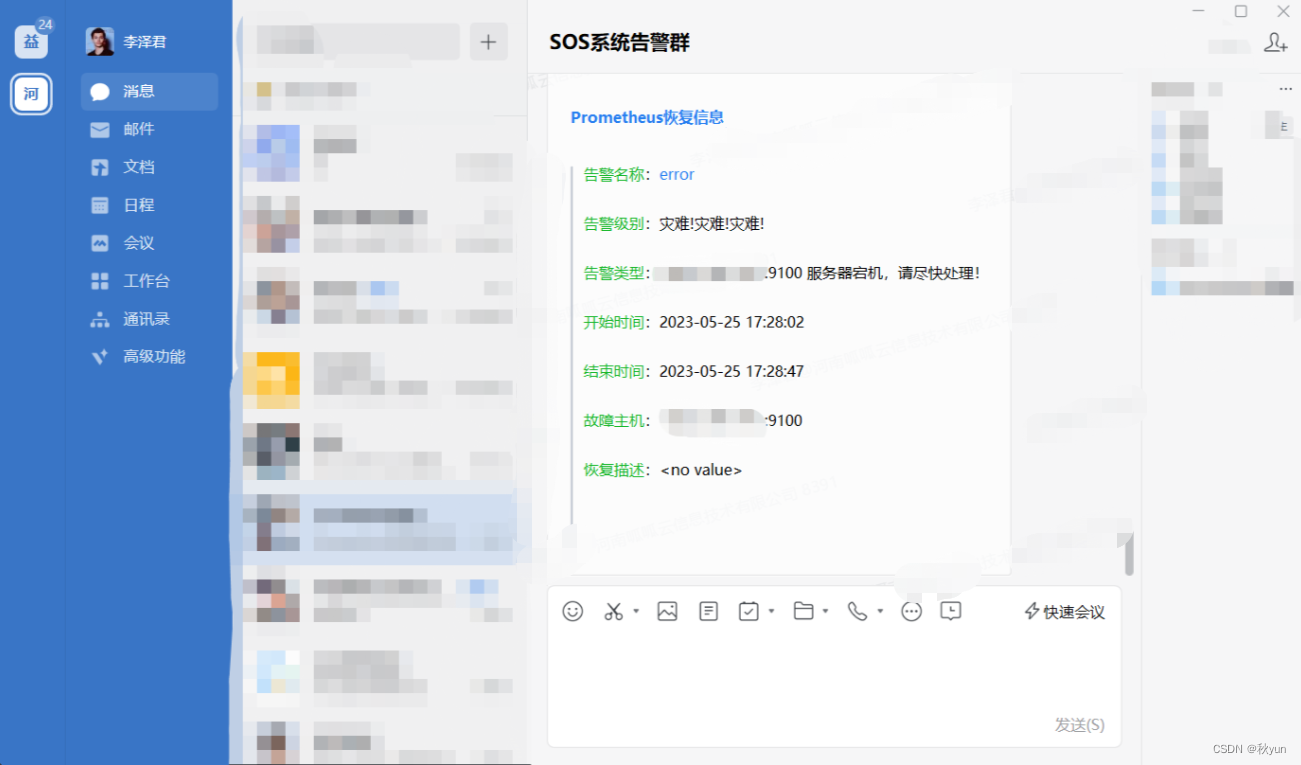

Now test recovery:

[root@node1 ~]# docker start node_exporter

Within 1-2 minutes of starting the service, the recovery message arrives.
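While waiting for the WeCom message, the alert state can also be read from Prometheus directly, since its HTTP API lists pending and firing alerts at /api/v1/alerts. The sketch below pulls the alert names out of such a response. It assumes the compact JSON that Prometheus emits (no spaces around the colon) and uses plain grep and sed so that no extra tool is required; the helper name alert_names is invented for this example.

```shell
# Read a /api/v1/alerts JSON response on stdin and print one alert name
# per line. Assumes compact JSON, i.e. "alertname":"..." without spaces.
alert_names() {
  grep -o '"alertname":"[^"]*"' | sed 's/^"alertname":"//; s/"$//'
}
```

Usage: `curl -s http://<Prometheus-IP>:9090/api/v1/alerts | alert_names`. While node-exporter is stopped this should list node-exporter-down, and after the recovery the name should disappear again.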

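So far, however, alerting only covers port 9100, that is, the node-exporter component. Container alerting still has to be configured.

Install Blackbox_exporter

blackbox_exporter is one of the official Prometheus exporters. It probes endpoints over HTTP, HTTPS, DNS, TCP, and ICMP.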

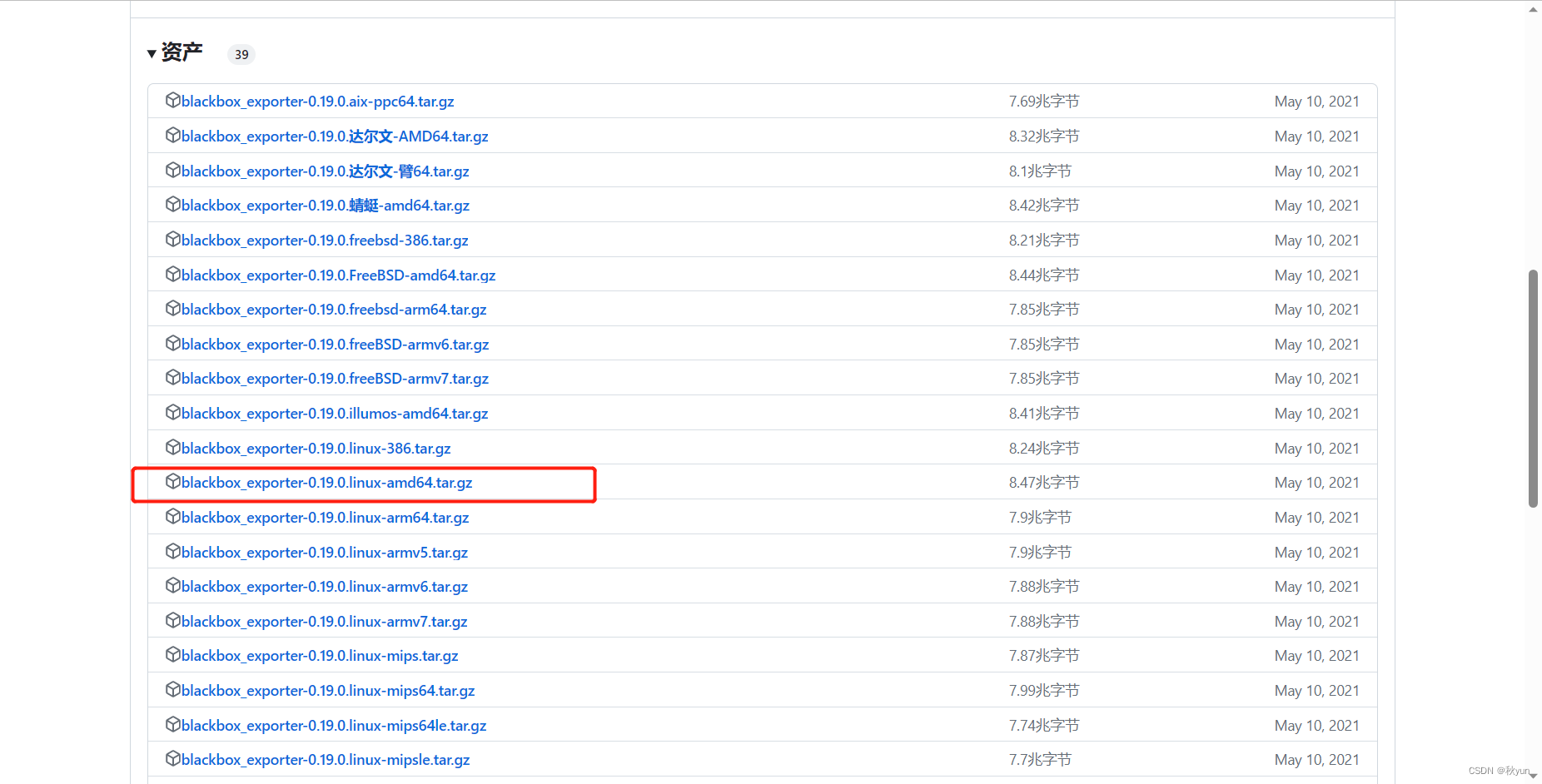

Download page: https://github.com/prometheus/blackbox_exporter/releases/tag/v0.19.0

Download the linux-amd64 archive. The commands below use the 0.22.0 archive, so adjust the file name to the release you downloaded.

[root@Prometheus-Grafana ~]# tar xf blackbox_exporter-0.22.0.linux-amd64.tar.gz

[root@Prometheus-Grafana ~]# mv blackbox_exporter-0.22.0.linux-amd64 /usr/local/blackbox_exporter

[root@Prometheus-Grafana linux]# vim /usr/lib/systemd/system/blackbox_exporter.service

[Unit]
Description=Blackbox Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=root
ExecStart=/usr/local/blackbox_exporter/blackbox_exporter --config.file=/usr/local/blackbox_exporter/blackbox.yml
Restart=on-failure

[Install]
WantedBy=default.target

[root@Prometheus-Grafana linux]# systemctl daemon-reload

[root@Prometheus-Grafana linux]# systemctl start blackbox_exporter

[root@Prometheus-Grafana linux]# systemctl enable blackbox_exporter

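# To test, start an Nginx container

[root@Prometheus-Grafana linux]# docker run -d --name nginx01 -p 3344:80 nginx

Once it is running, edit the Prometheus configuration file and add the following job under scrape_configs: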

  - job_name: 'port_status'
    metrics_path: /probe
    params:
      module: [tcp_connect]
    static_configs:
      - targets:
          - <container-host-IP>:3344
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: <blackbox-host-IP>:9115
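The relabeling above makes Prometheus request /probe on the blackbox host with the original target passed as a query parameter. The same request can be issued by hand to confirm that the probe works before Prometheus is restarted. This is a small sketch under that reading of the config; the helper names probe_url and probe_succeeded are invented for the example, and curl is assumed for the usage line.

```shell
# Build the probe URL that Prometheus will request after relabeling.
# $1 = blackbox host:port, $2 = target host:port, $3 = module name
# (defaults to tcp_connect, the module used in the job above).
probe_url() {
  printf 'http://%s/probe?target=%s&module=%s\n' "$1" "$2" "${3:-tcp_connect}"
}

# Read probe output on stdin; succeed only if it reports probe_success 1.
probe_succeeded() {
  grep -q '^probe_success 1$'
}
```

Usage: `curl -s "$(probe_url <blackbox-host-IP>:9115 <container-host-IP>:3344)" | probe_succeeded && echo reachable`. The alert rule in blackbox-alert.rules fires on the opposite case, probe_success == 0.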

After saving the configuration file, restart Prometheus:

[root@Prometheus-Grafana linux]# docker stop prometheus

[root@Prometheus-Grafana linux]# docker start prometheus

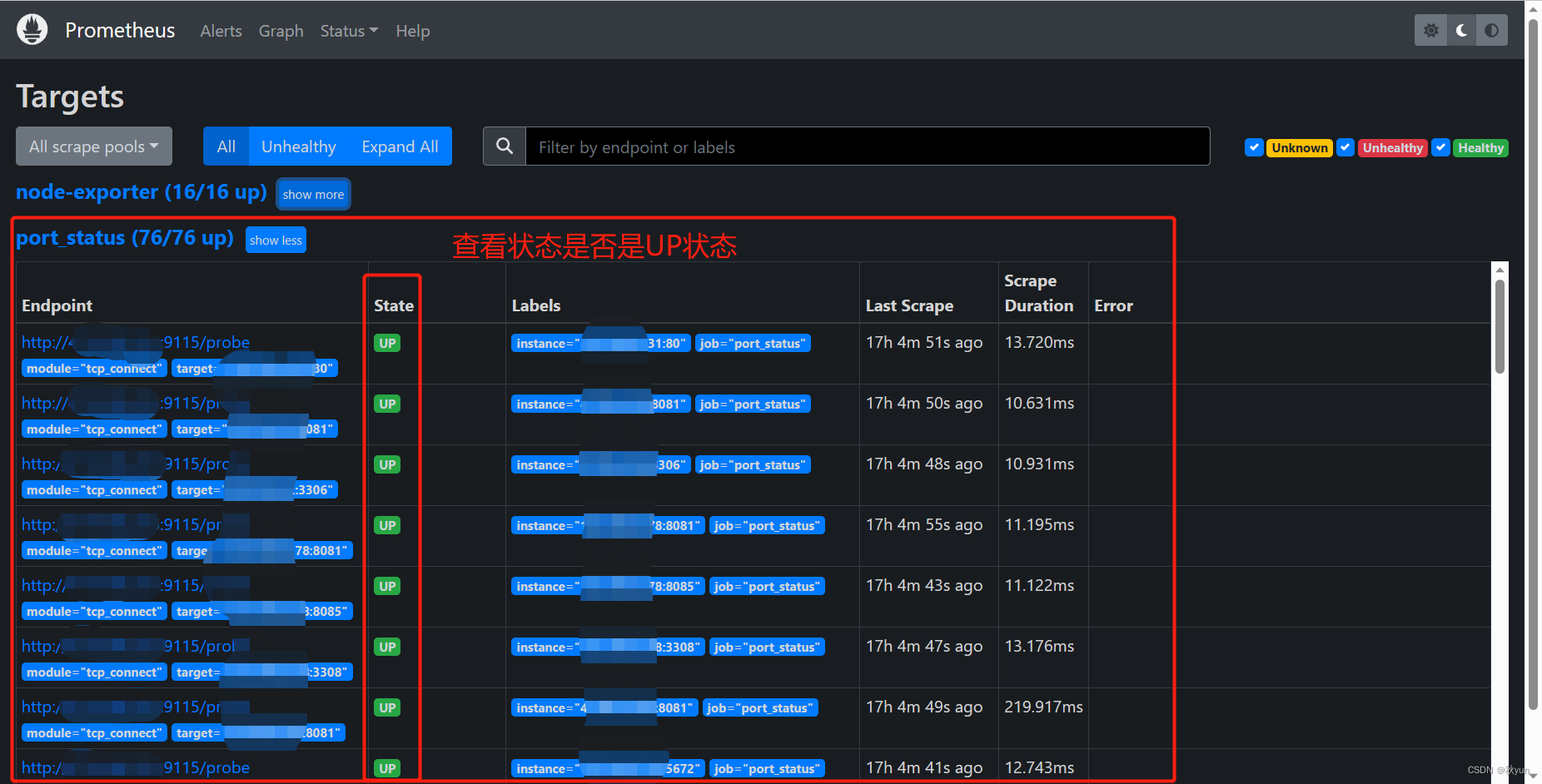

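After the restart, open the Prometheus web UI and check that the new target is being monitored.

Once it shows up, stop Nginx and check whether an alert arrives:

[root@Prometheus-Grafana linux]# docker stop nginx01

# After the alert has arrived, start nginx again to test the recovery message

[root@Prometheus-Grafana linux]# docker start nginx01

With that, Prometheus container monitoring with alerting is complete.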
