1. MySQL sync: OS errno 24 - Too many open files

2023-11-20 12:30:04.371 [job-0] ERROR JobContainer - Exception when job run

com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-07], Description:[读取数据库数据失败. 请检查您的配置的 column/table/where/querySql或者向 DBA 寻求帮助.]. - 执行的SQL为: select a.archive_code,a.archive_name,FROM_UNIXTIME(a.archive_file_time/1000,'%Y-%m-%d'),c.contract_code,c.contract_name,FROM_UNIXTIME(c.start_time/1000,'%Y-%m-%d'),FROM_UNIXTIME(c.finish_time/1000,'%Y-%m-%d'),b.brand_name,s.subject_name,co.contract_id,co.customer_code,o.opposites_name,f.field_value,cast(c.contract_type as char),cc.category_name,FROM_UNIXTIME(c.create_time/1000,'%Y-%m-%d'),cc.category_code,x.field_value from company_contract_archive a left join company_contract c on a.contract_id = c.contract_id left join company_brand b on c.brand_id = b.brand_id left join sign_subject s on c.sign_subject = s.subject_id left join company_contract_opposites co on co.contract_id = c.contract_id left join opposites o on co.opposites_id = o.opposites_id left join contract_basics_field_value f on f.contract_id = c.contract_id and f.field_name = '店铺编号' left join htquan_devops.contract_category cc on c.contract_type = cc.category_id left join contract_basics_field_value x on x.contract_id = c.contract_id and x.field_name = '销售地区' 具体错误信息为:java.sql.SQLException: Can't create/write to file '/tmp/MYJLaOfQ' (OS errno 24 - Too many open files)

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.util.RdbmsException.asQueryException(RdbmsException.java:81) ~[na:na]

at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task.startRead(CommonRdbmsReader.java:237) ~[na:na]

at com.alibaba.datax.plugin.reader.mysqlreader.MysqlReader$Task.startRead(MysqlReader.java:81) ~[na:na]

at com.alibaba.datax.core.taskgroup.runner.ReaderRunner.run(ReaderRunner.java:57) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at java.lang.Thread.run(Thread.java:748) ~[na:1.8.0_191]

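Cause: executing the querySql makes MySQL open a large number of files, including temporary files under /tmp.

Solution: the source MySQL instance has a limit on the number of open files; raise the open_files_limit setting on the database.

Alternatively, optimize the querySql.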

2. Code:[HiveReader-12], Description:[文件类型目前不支持] (the file type is not currently supported).

- 文件[hdfs://vm-lvmh-cdp-cdh02:8020/data/hive/warehouse/pcd_ods.db/ods_std_dmall_oms_sdb_ome_payments_delta/ds=20230202/.hive-staging_hive_2023-02-03_01-15-57_375_6186868347167071378-29/-ext-10001/tmpstats-1]的类型与用户配置的fileType类型不一致,请确认您配置的目录下面所有文件的类型均为[parquet]

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26)

at com.alibaba.datax.plugin.reader.hivereader.DFSUtil.addSourceFileByType(DFSUtil.java:320)

at com.alibaba.datax.plugin.reader.hivereader.DFSUtil.getHDFSAllFilesNORegex(DFSU

Solution: add the following configuration on the cluster:

hive.insert.into.multilevel.dirs=true

hive.exec.stagingdir=/tmp/hive/staging/.hive-staging
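The file rejected in the log above sits under a `.hive-staging_hive_...` directory inside the partition, which is why moving the staging directory out of the table path helps. As a rough illustration only, the sketch below separates staging or hidden entries from data files by path name. `StagingPathFilter` and its methods are hypothetical names, the rule (a path segment starting with `.` or `_`) is an assumption, and none of this is DataX's HiveReader code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of DataX: flags paths that pass through
// a hidden or staging directory (a segment starting with "." or "_").
class StagingPathFilter {

    static boolean isHiddenOrStaging(String path) {
        for (String segment : path.split("/")) {
            if (segment.startsWith(".") || segment.startsWith("_")) {
                return true;
            }
        }
        return false;
    }

    // Keeps only the paths that do not pass through a hidden/staging segment.
    static List<String> dataFilesOnly(List<String> paths) {
        List<String> result = new ArrayList<>();
        for (String path : paths) {
            if (!isHiddenOrStaging(path)) {
                result.add(path);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample paths are made up for the demo.
        List<String> listing = Arrays.asList(
                "hdfs://nn:8020/warehouse/db/tbl/ds=20230202/000000_0",
                "hdfs://nn:8020/warehouse/db/tbl/ds=20230202/.hive-staging_hive_x/-ext-10001/tmpstats-1");
        System.out.println(dataFilesOnly(listing));
    }
}
```

A check like this can help confirm, from a directory listing, that staging leftovers are the only non-parquet entries before changing the cluster configuration.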

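3. DataX writing to Hive (HDFS): the DataX job terminated abnormally without deleting its temporary directory, so the next run of the job fails when it reads files from that leftover directory.

Solution: 1. Raise the memory limit of the basic container so that the system does not kill the DataX job.

2. Before rerunning the job, delete the temporary directory first.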
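The second step can be sketched as a small pre-run cleanup. The version below uses `java.nio.file` on the local filesystem as a stand-in so that it runs without a cluster; `TempDirCleanup` and `deleteIfPresent` are hypothetical names. On HDFS the equivalent would be a recursive delete through the Hadoop `FileSystem` API, which is not shown here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.stream.Stream;

// Hypothetical pre-run cleanup: local-filesystem stand-in for removing
// a leftover temporary directory before the job is started again.
class TempDirCleanup {

    // Deletes dir and everything below it; returns false if it did not exist.
    static boolean deleteIfPresent(Path dir) {
        if (!Files.exists(dir)) {
            return false;
        }
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order visits children before their parent directories.
            walk.sorted(Comparator.reverseOrder()).forEach(p -> {
                try {
                    Files.delete(p);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return true;
    }

    public static void main(String[] args) {
        // The path is an example; pass the job's real temporary directory.
        Path leftover = Paths.get(args.length > 0 ? args[0] : "/tmp/datax_tmp_example");
        System.out.println("removed leftover dir: " + deleteIfPresent(leftover));
    }
}
```

Running the cleanup unconditionally before each start keeps a killed run from poisoning the next one, at the cost of assuming that no other job shares the same temporary directory.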

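4. DataX fails to write a file over FTP: writing to the root directory is not supported for now

This has been fixed in our code, but the fix has not been merged into the open-source code.

Add the following logic to SftpHelperImpl: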

    String parentDir;
    int lastIndex = StringUtils.lastIndexOf(filePath, IOUtils.DIR_SEPARATOR);
    if (lastIndex <= 0) {
        // The only separator is the leading one: the parent is the root directory
        parentDir = filePath.substring(0, 1);
    } else {
        parentDir = filePath.substring(0, lastIndex);
    }
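To see what this logic does for a file placed directly under the root, here is a self-contained sketch of the same branch. It assumes `/` as the separator and uses plain `String.lastIndexOf` instead of `StringUtils` and `IOUtils`; `ParentDirDemo` is a hypothetical name, and the sample paths are made up.

```java
// Stand-alone sketch of the parent-directory logic, assuming '/' as separator.
class ParentDirDemo {

    static final char DIR_SEPARATOR = '/';

    static String parentDir(String filePath) {
        int lastIndex = filePath.lastIndexOf(DIR_SEPARATOR);
        if (lastIndex <= 0) {
            // Only the leading separator was found: the parent is the root itself.
            return filePath.substring(0, 1);
        }
        return filePath.substring(0, lastIndex);
    }

    public static void main(String[] args) {
        System.out.println(parentDir("/data.csv"));            // prints "/"
        System.out.println(parentDir("/upload/out/data.csv")); // prints "/upload/out"
    }
}
```

Without the first branch, `substring(0, 0)` would yield an empty parent directory for `/data.csv`, which is presumably what made root-level writes fail.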

5. DataX reports an error when writing to DorisDB

Caused by: java.io.IOException: Failed to flush data to StarRocks.{"Status":"Fail","Comment":"","BeginTxnTimeMs":0,"Message":"[INTERNAL_ERROR]too many filtered rows\n0. /mnt/ssd01/selectdb-doris-package/enterprise-core/be/src/common/stack_trace.cpp:302: StackTrace::tryCapture() @ 0x000000000ba70197 in /data/doris/be/lib/doris_be\n1. /mnt/ssd01/selectdb-doris-package/enterprise-core/be/src/common/stack_trace.h:0: doris::get_stack_trace[abi:cxx11]() @ 0x000000000ba6e72d in /data/doris/be/lib/doris_be\n2. /usr/local/software/ldb_toolchain/bin/../lib/gcc/x86_64-linux-gnu/11/../../../../include/c++/11/bits/basic_string.h:187: doris::Status doris::Status::Error(int, std::basic_string_view >) @ 0x000000000af07e2b in /data/doris/be/lib/doris_be\n3. /mnt/ssd01/selectdb-doris-package/enterprise-core/be/src/common/status.h:348: std::_Function_handler)::$_0>::_M_invoke(std::_Any_data const&, doris::RuntimeState*&&, doris::Status*&&) @ 0x000000000b961a09 in /data/doris/be/lib/doris_be\n4. /usr/local/software/ldb_toolchain/bin/../lib/gcc/x86_64-linux-gnu/11/../../../../include/c++/11/bits/unique_ptr.h:360: doris::FragmentMgr::_exec_actual(std::shared_ptr, std::function const&) @ 0x000000000b86b36c in

Cause: the error occurs when the data contains a % character. The official code has already fixed this:

    // httpPut.setHeader("Content-Type", "application/x-www-form-urlencoded");
    httpPut.setHeader("two_phase_commit", "false");

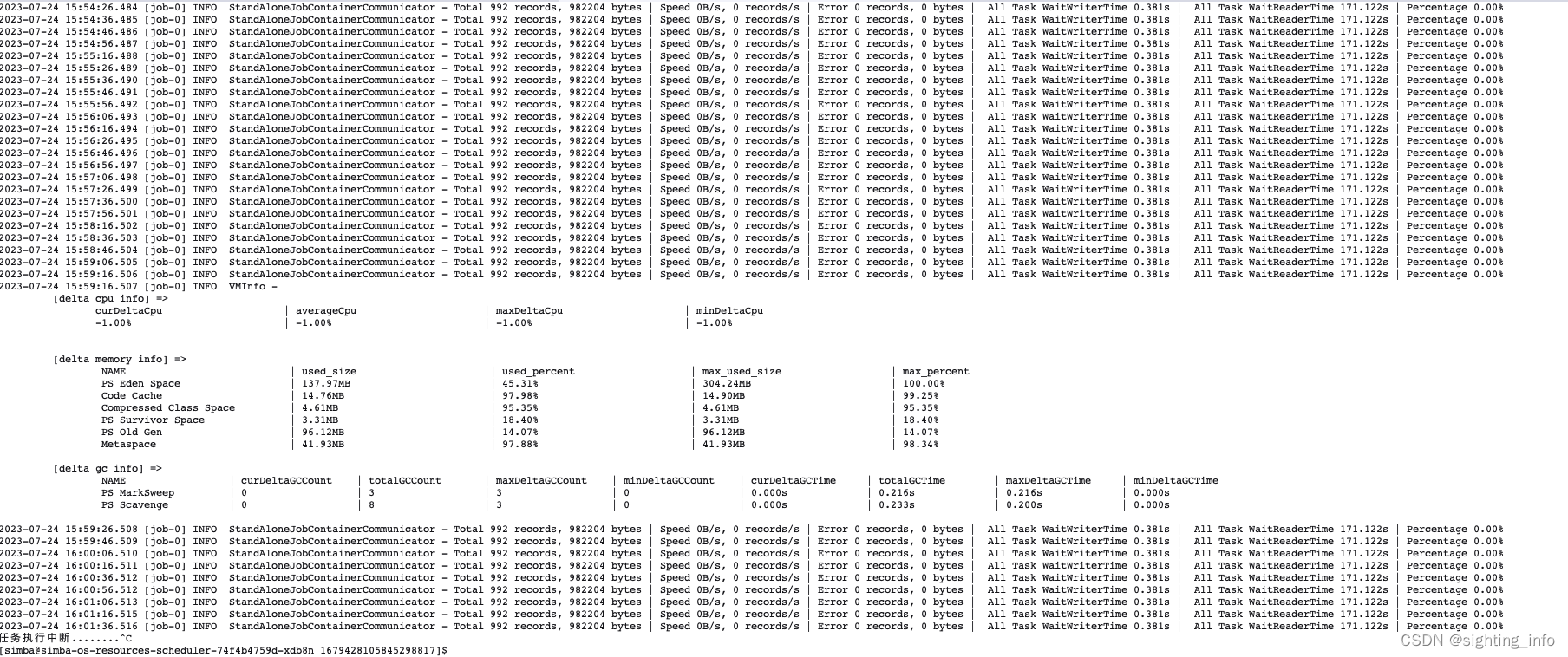

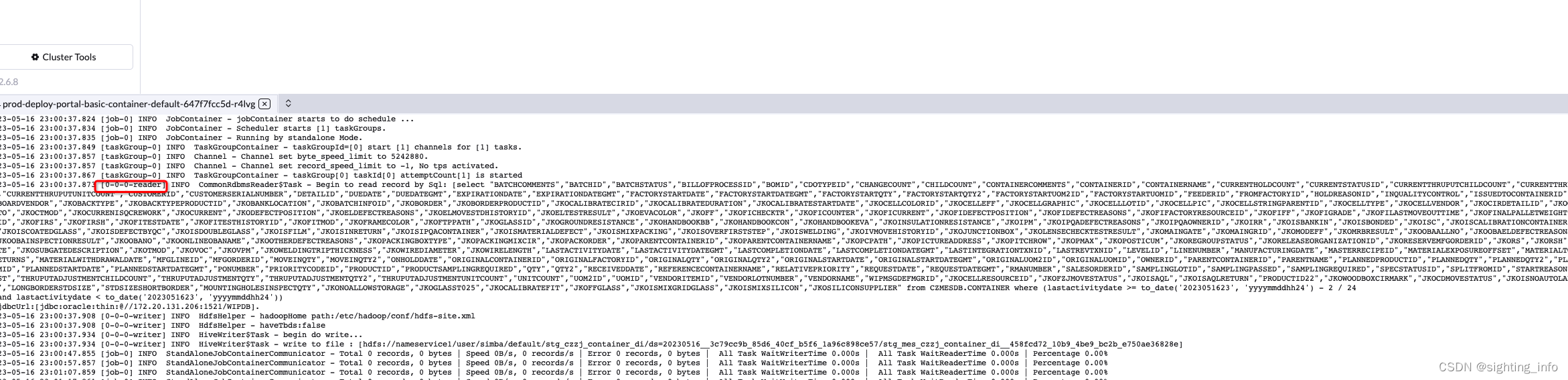

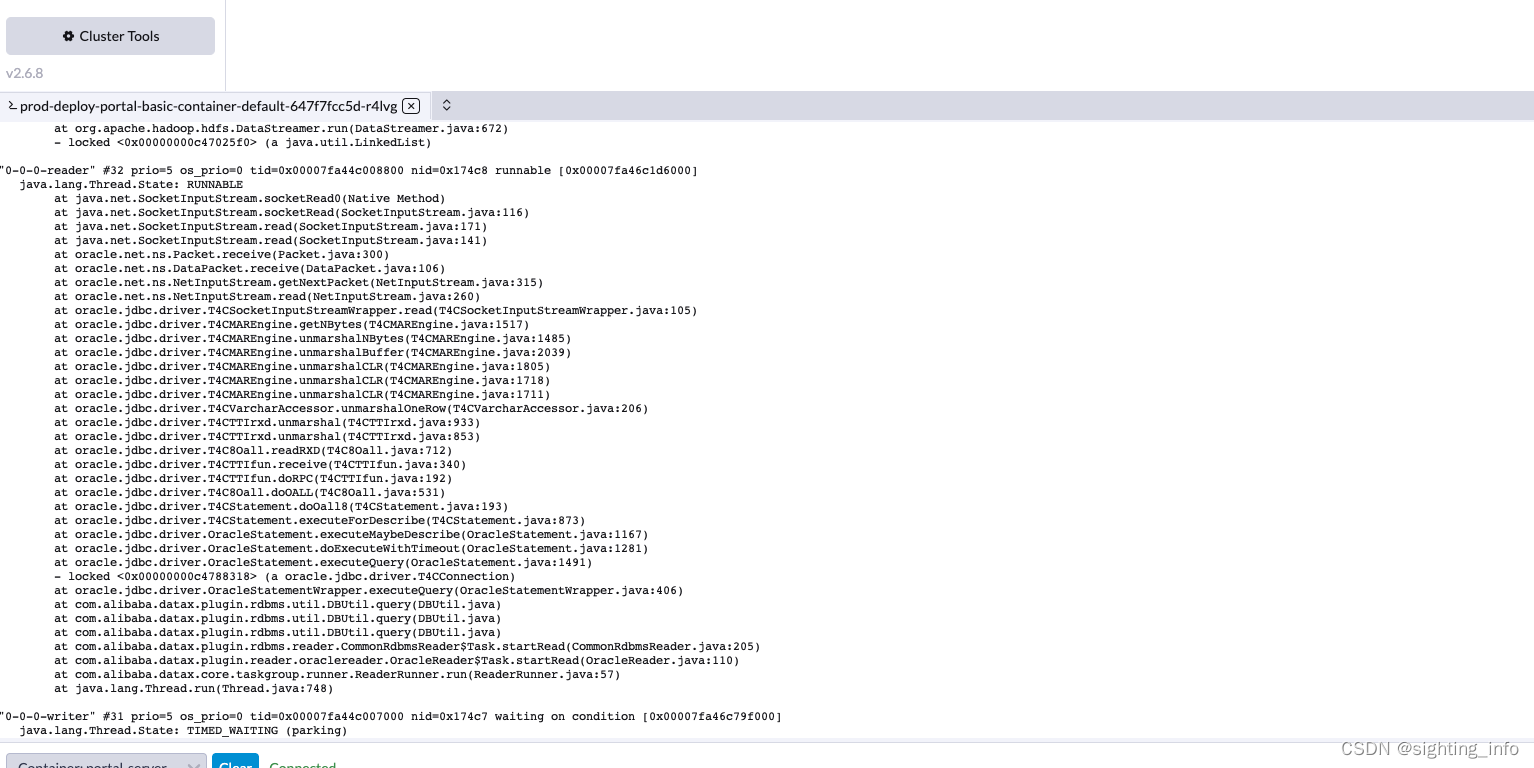
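A plausible explanation, stated here as an assumption rather than something confirmed in the Doris source, is that a body declared as `application/x-www-form-urlencoded` is URL-decoded, so a bare `%` in the data is read as the start of an escape sequence. The sketch below reproduces that failure mode with the JDK's own `URLDecoder`; `PercentDecodeDemo` is a hypothetical name and the sample rows are made up.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;

// Shows that a raw '%' is not valid form-urlencoded input for the JDK decoder.
class PercentDecodeDemo {

    // Returns true if the text can be URL-decoded, false if a '%' breaks decoding.
    static boolean decodesCleanly(String body) {
        try {
            URLDecoder.decode(body, "UTF-8");
            return true;
        } catch (IllegalArgumentException e) {
            // Thrown for an illegal or incomplete %xx escape.
            return false;
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always available", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(decodesCleanly("1,plain row"));            // no '%' in the data
        System.out.println(decodesCleanly("2,discount 50% off"));     // raw '%' followed by non-hex
        System.out.println(decodesCleanly("3,discount 50%25 off"));   // '%' escaped as %25
    }
}
```

If that is indeed the mechanism, dropping the form-urlencoded content type, as the commented-out line does, lets the rows pass through as raw bytes.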

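6. DataX job hangs while acquiring an Oracle connection

Symptom: in the sync job, speed stays at 0 and record stays at 0.

The log shows that after the pre-SQL finished executing, the thread wrote nothing further, so the key step is to check in the thread dump where that thread is stuck.

The thread dump shows that the thread is stuck inside the Oracle method that executes the SQL.

Solution: the exact cause of the hang still needs to be traced in the Oracle database itself and in the source code; only workarounds are proposed here.

1. Reduce the Oracle timeout; the default is 48h.

2. Configure timeout and retry for the job.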
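The two workarounds can be combined into a generic timeout-and-retry wrapper. This is a minimal sketch, not DataX's own retry mechanism: `TimeoutRetry` and `callWithTimeoutAndRetry` are hypothetical names, and the `Callable` stands in for the blocking Oracle call. For real JDBC code, the standard `Statement.setQueryTimeout` is the first thing to try.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical helper: runs a task with a per-attempt timeout and retries it.
class TimeoutRetry {

    static <T> T callWithTimeoutAndRetry(Callable<T> task, long timeoutMillis, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        // Daemon threads so a stuck attempt cannot keep the JVM alive.
        ExecutorService pool = Executors.newCachedThreadPool(r -> {
            Thread t = new Thread(r);
            t.setDaemon(true);
            return t;
        });
        try {
            Exception last = null;
            for (int attempt = 1; attempt <= maxAttempts; attempt++) {
                Future<T> future = pool.submit(task);
                try {
                    return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
                } catch (TimeoutException e) {
                    future.cancel(true); // interrupt the stuck attempt, then retry
                    last = e;
                } catch (ExecutionException e) {
                    last = e;            // the task itself failed, retry
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("interrupted while waiting", e);
                }
            }
            throw new IllegalStateException("all " + maxAttempts + " attempts failed", last);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        String result = callWithTimeoutAndRetry(() -> "connected", 1000, 3);
        System.out.println(result);
    }
}
```

Note that `future.cancel(true)` only interrupts the worker thread. A call blocked inside a driver may ignore the interrupt, so a driver-level or statement-level timeout is still needed to actually release the connection.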
