Launching the Mixtral 8x7B Large Language Model with AutoAWQ on Windows 11

- 0. Background
- 1. Install dependencies
- 2. Write main.py
- 3. Run main.py

0. Background

After reading a few articles on the topic, I tried launching the Mixtral 8x7B large language model on Windows 11 with AutoAWQ today.

1. Install dependencies

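The commands below install PyTorch built for CUDA 12.1, AutoAWQ together with the latest transformers from its GitHub main branch, and prebuilt Windows wheels of bitsandbytes and flash-attention. The flash-attention wheel is built for Python 3.10 (`cp310`), CUDA 12.1 (`cu121`) and PyTorch 2.1 (`torch2.1`), so the Python environment must match these versions.

```shell
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
pip install autoawq git+https://github.com/huggingface/transformers.git
pip install https://github.com/jllllll/bitsandbytes-windows-webui/releases/download/wheels/bitsandbytes-0.41.2.post2-py3-none-win_amd64.whl
pip install https://github.com/bdashore3/flash-attention/releases/download/v2.4.1/flash_attn-2.4.1+cu121torch2.1cxx11abiFALSE-cp310-cp310-win_amd64.whl
```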
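A prebuilt flash-attention wheel typically fails at import time when the local Python, PyTorch or CUDA version differs from the one it was compiled for. Running a quick check after installation can therefore help. The sketch below is a hypothetical helper: `parse_wheel_tags` and `check_env` are illustrative names, not part of any library. It assumes the `+cuXXXtorchX.Y...-cpXY-` naming used by this particular wheel, reads the version tags from the file name in the last install command, and compares them with the local versions. It does not check the C++ ABI flag (`cxx11abiFALSE`).

```python
import re
import sys

# Wheel file name copied from the flash-attention install command above
FLASH_ATTN_WHEEL = (
    "flash_attn-2.4.1+cu121torch2.1cxx11abiFALSE-cp310-cp310-win_amd64.whl"
)


def parse_wheel_tags(filename: str) -> dict:
    """Extract the CUDA, PyTorch and Python versions encoded in the wheel name."""
    m = re.search(r"\+cu(\d+)torch(\d+\.\d+).*-cp(\d)(\d+)-", filename)
    if m is None:
        raise ValueError(f"unrecognized wheel name: {filename}")
    cuda, torch_mm, py_major, py_minor = m.groups()
    return {
        "cuda": f"{cuda[:-1]}.{cuda[-1]}",  # "121" -> "12.1"
        "torch": torch_mm,  # "2.1"
        "python": f"{py_major}.{py_minor}",  # "cp310" -> "3.10"
    }


def check_env(filename: str, python_version: str,
              torch_version: str, cuda_version: str) -> list:
    """Return human-readable mismatches between wheel tags and local versions."""
    tags = parse_wheel_tags(filename)
    problems = []
    if python_version != tags["python"]:
        problems.append(f"Python {python_version} != {tags['python']}")
    # Reduce e.g. "2.1.2+cu121" to its major.minor part "2.1"
    torch_mm = ".".join(torch_version.split("+")[0].split(".")[:2])
    if torch_mm != tags["torch"]:
        problems.append(f"PyTorch {torch_mm} != {tags['torch']}")
    if cuda_version != tags["cuda"]:
        problems.append(f"CUDA {cuda_version} != {tags['cuda']}")
    return problems


if __name__ == "__main__":
    py = f"{sys.version_info.major}.{sys.version_info.minor}"
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed yet")
    else:
        # torch.version.cuda is None for CPU-only PyTorch builds
        cuda = torch.version.cuda or "none"
        issues = check_env(FLASH_ATTN_WHEEL, py, str(torch.__version__), cuda)
        print("OK" if not issues else "\n".join(issues))
```

Parsing the file name keeps the check in sync with whichever wheel is actually installed, instead of hard-coding the expected versions a second time.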

2. Write main.py

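```python
from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

# AWQ-quantized Mixtral 8x7B Instruct checkpoint on the Hugging Face Hub
model_id = "casperhansen/mixtral-instruct-awq"

tokenizer = AutoTokenizer.from_pretrained(model_id)

# Load the AWQ weights (the autoawq package must be installed), spread the
# layers over the available GPUs, and use FlashAttention-2 kernels
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    low_cpu_mem_usage=True,
    device_map="auto",
    attn_implementation="flash_attention_2",
)

# Print tokens to stdout as they are generated, without echoing the prompt
streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

# Instruct prompt format: the user message wrapped in [INST] ... [/INST]
text = "[INST] How to make the best cup of americano? [/INST]"

tokens = tokenizer(text, return_tensors="pt").input_ids.to("cuda:0")

generation_output = model.generate(tokens, streamer=streamer, max_new_tokens=512)
```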

Code source: https://mp.weixin.qq.com/s/IAWJIh61_enYoyME3oJqJQ
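The prompt in `main.py` covers a single question. For a follow-up question, earlier turns are usually concatenated in the same `[INST] ... [/INST]` format, with each model answer closed by the end-of-sequence marker `</s>`. The sketch below is a hypothetical helper (`build_prompt` is not part of transformers). It assumes the commonly documented Mistral/Mixtral instruct layout. It also assumes that the tokenizer prepends the `<s>` token itself, which is why the single-turn prompt in `main.py` omits it. If the checkpoint's tokenizer ships a chat template, transformers' `tokenizer.apply_chat_template` is the more reliable way to build this string.

```python
def build_prompt(user_message: str, history=None) -> str:
    """Build a Mixtral-Instruct style prompt string (hypothetical helper).

    history: optional list of (user, assistant) pairs from earlier turns.
    The leading <s> token is omitted on the assumption that the tokenizer adds it.
    """
    parts = []
    for user, assistant in history or []:
        # Each completed turn: instruction, model answer, then the EOS marker
        parts.append(f"[INST] {user} [/INST] {assistant}</s>")
    # The new question is left open for the model to answer
    parts.append(f"[INST] {user_message} [/INST]")
    return "".join(parts)


if __name__ == "__main__":
    # prints: [INST] How to make the best cup of americano? [/INST]
    print(build_prompt("How to make the best cup of americano?"))
```

With an empty history the helper reproduces the exact `text` string used in `main.py`, so it can replace that literal without changing the model's input.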

3. Run main.py

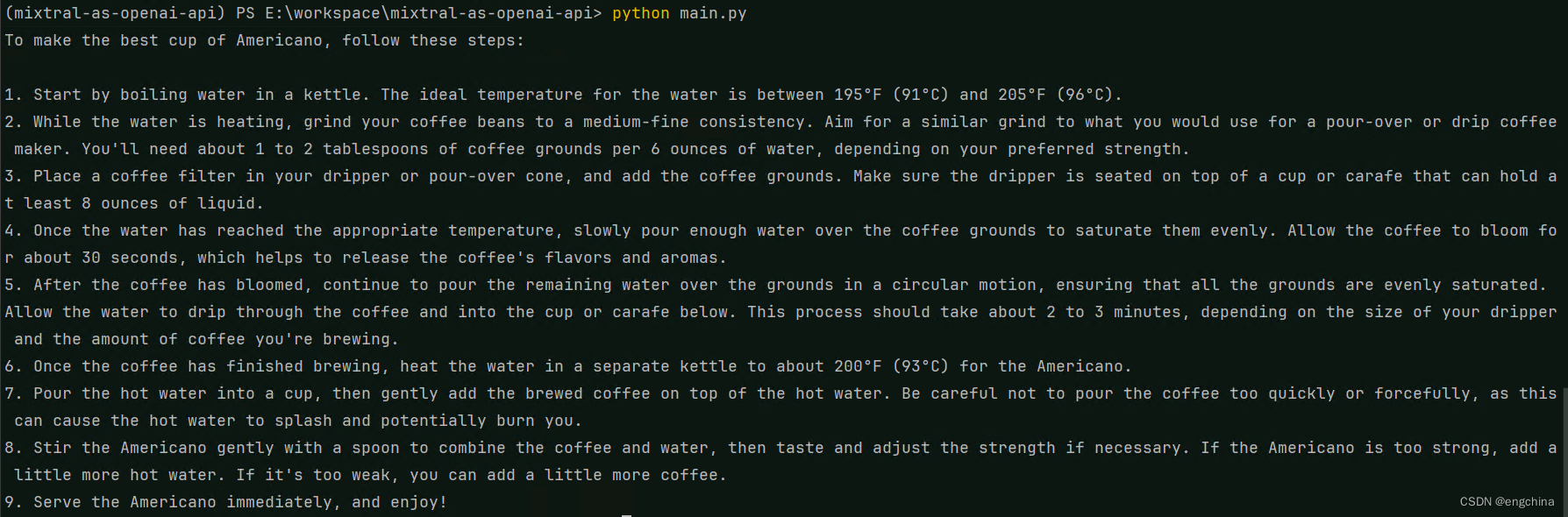

```shell
python main.py
```

Example output:

Done!
