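Preface: Hello everyone, I'm Dream. Today we'll learn how to do pose estimation with YOLOv5, using HRNet and SimDR to detect human keypoints in images, videos, and live camera feeds. You're welcome to join in, discuss, and learn along with me~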

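Contents:

- I. Project preparation
  - 1. Cloning a GitHub project in PyCharm
  - 2. Step-by-step procedure
  - 3. Environment setup
- II. Object detection
  - 1. Adding the weight file
  - 2. Getting bounding boxes from an image
  - 3. Adding the SPPF module
- III. Pose estimation
  - 1. Adding the weights
  - 2. Editing the yaml file
  - 3. Getting the keypoints
  - 4. Drawing the skeleton keypoints
- IV. Results
  - 1. Photo demo
  - 2. Video demo
  - 3. Real-time demo
- V. Error analysis
  - 1. Path problems
  - 2. Warning during YOLOv5 / YOLOvx training: functional.py
  - 3. matplotlib.use('Agg')  # for writing to files only
  - 4. AttributeError: 'Upsample' object has no attribute 'recompute_scale_factor'
  - 5. 'gbk' codec can't decode byte
- VI. Summary
  - Original project files
  - Free book giveaway at the end: Python OpenCV从入门到精通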

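I. Project preparation

First, we clone the original pose-estimation project from GitHub directly in PyCharm. If you don't know how to clone a repository locally with PyCharm, keep reading; if you already do, skip subsection 1 and go straight to subsection 2.

1. Cloning a GitHub project in PyCharm

PyCharm relies on Git to clone source code from GitHub. So how do you check whether Git is set up in your PyCharm?

Step one: open File > Settings.

Step two: go to Git under Version Control and click the Test button: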

![image](https://img-blog.csdnimg.cn/direct/a7aa10e8b595479584af410fb53b67a1.png)

If a Git version is displayed, as it is for me, Git is installed correctly:

![image](https://img-blog.csdnimg.cn/direct/0dbd59f05a5a4f37ad9356e13b03da60.png)

If you see this message instead, Git is not installed:

![image](https://img-blog.csdnimg.cn/direct/59642479e22a4c8c910b5b6555004a08.png)

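If PyCharm shows an install prompt, click it and Git will be installed automatically. If there is no prompt, you need to download Git yourself. The official site can be painfully slow, so here is a mirror in China where the download is much faster: https://registry.npmmirror.com/binary.html?path=git-for-windows/

After installing, open a new window, switch to the Version Control > Git tab, and select the path of the Git you just installed.

Note: be sure to select git.exe. With a default installation it sits in the cmd folder.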
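If you'd rather check from a script than from the Settings dialog, here is a minimal sketch using only the standard library. The helper names `find_git` and `git_version` are made up for this example; `shutil.which` simply reports whether a `git` executable is reachable on your PATH, which is not necessarily the same executable PyCharm is configured to use.

```python
import shutil
import subprocess
from typing import Optional


def find_git() -> Optional[str]:
    """Return the full path of the git executable, or None if it is not on PATH."""
    return shutil.which("git")


def git_version(git_path: str) -> str:
    """Run `git --version` and return its output as a string."""
    result = subprocess.run(
        [git_path, "--version"], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


if __name__ == "__main__":
    path = find_git()
    if path is None:
        print("Git not found on PATH; install it, then select git.exe in PyCharm.")
    else:
        print(path, "->", git_version(path))
```

If this prints a path, that is the file you can paste into the Git path field in PyCharm.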

2.具体步骤

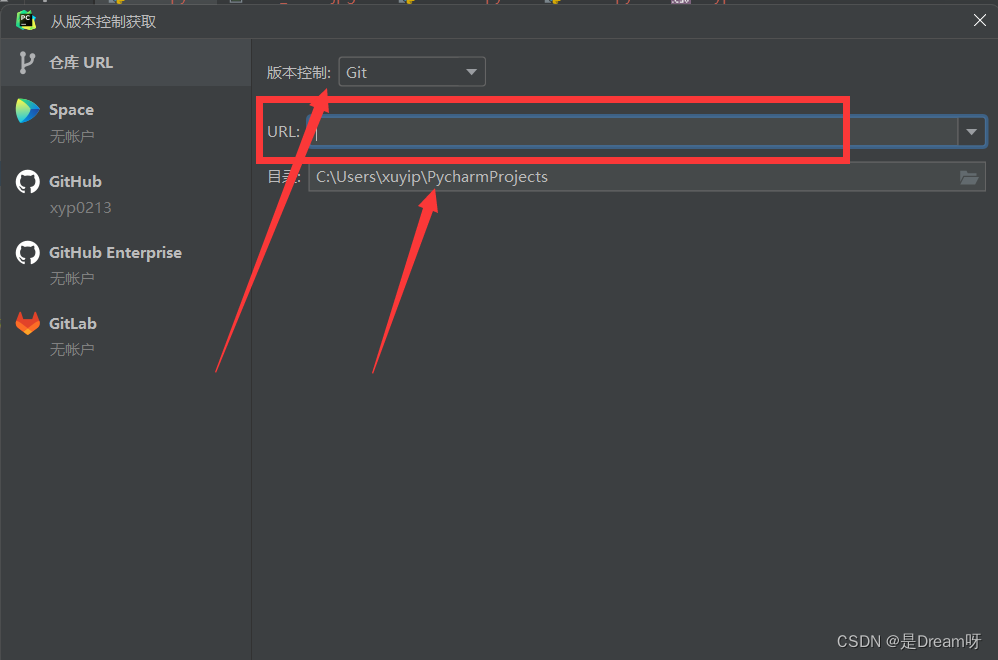

下载完Git之后,我们在最上面找到Git,然后点击下方的克隆:

![[图片]](https://img-blog.csdnimg.cn/direct/a34e977895fb4fd091d926cbbe9a3f00.png)

将下方要克隆的工程文件URL写入进去,同时一定要注意自己克隆到的文件目录,这个很重要!!!千万不要开始调模型了才发现自己文件目录搞错了,像我一样还得重新来一遍(苦涩)。

姿态估计原工程文件:

git clone https://github.com/leeyegy/SimDR.git

克隆完之后,需要再将yolov5算法添加到其中:

- 在终端处:

cd SimDR - 接着在终端输入:

git clone -b v5.https://github.com/ultralytics/yolov5.git

解释:git clone -b + 分支名 + 仓库地址,-b=-branch(分支)
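To make the shape of the two clone commands explicit, here is a small sketch that assembles them as argument lists. `clone_command` is a hypothetical helper written for this example, not part of Git or of either project; the yolov5 version is v5.0, the one this article works with.

```python
from typing import List, Optional

SIMDR_URL = "https://github.com/leeyegy/SimDR.git"
YOLOV5_URL = "https://github.com/ultralytics/yolov5.git"


def clone_command(url: str, branch: Optional[str] = None) -> List[str]:
    """Build `git clone [-b <branch>] <url>` as an argument list."""
    cmd = ["git", "clone"]
    if branch is not None:
        cmd += ["-b", branch]  # -b is the short form of --branch
    cmd.append(url)
    return cmd


simdr_cmd = clone_command(SIMDR_URL)
yolov5_cmd = clone_command(YOLOV5_URL, branch="v5.0")

print(" ".join(simdr_cmd))
print(" ".join(yolov5_cmd))
```

To actually run them from Python instead of the terminal, you could pass each list to `subprocess.run(cmd, check=True)`, using `cwd="SimDR"` for the second one so that yolov5 lands inside the SimDR folder.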

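3. Environment setup

For this experiment, just install the dependencies listed in the requirements.txt files of SimDR and yolov5. PyCharm usually offers a one-click install prompt. Fix errors as they come up, and remember to upgrade pip, which is a frequent source of failures. In short: install whatever is missing.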
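A quick way to see what is still missing before running anything is to ask Python which modules it can find. This is only a sketch: `missing_modules` is a helper invented here, and the `REQUIRED` list covers just the third-party modules imported by the scripts in this article, not everything in the two requirements.txt files. Note that import names and pip package names can differ; `cv2` comes from opencv-python and `ffmpeg` from ffmpeg-python.

```python
import importlib.util
from typing import Iterable, List

# Top-level modules imported by the scripts in this article (assumption:
# this list is illustrative, the requirements.txt files are authoritative).
REQUIRED = ["cv2", "numpy", "torch", "torchvision", "matplotlib", "ffmpeg"]

# Import name -> pip package name, for the cases where they differ.
PIP_NAMES = {"cv2": "opencv-python", "ffmpeg": "ffmpeg-python"}


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    for name in missing_modules(REQUIRED):
        print(f"missing: {name}  ->  pip install {PIP_NAMES.get(name, name)}")
```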

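II. Object detection

1. Adding the weight file

First, add the YOLOv5 weight file yolov5x.pt to the SimDR/yolov5/weights/ folder:

![image](https://img-blog.csdnimg.cn/direct/91788b668da24bf68237ecd4d31f2dc5.png)

The weight files can be downloaded for free from this link:

Link: 帅哥一枚到此一游  Extraction code: 0213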

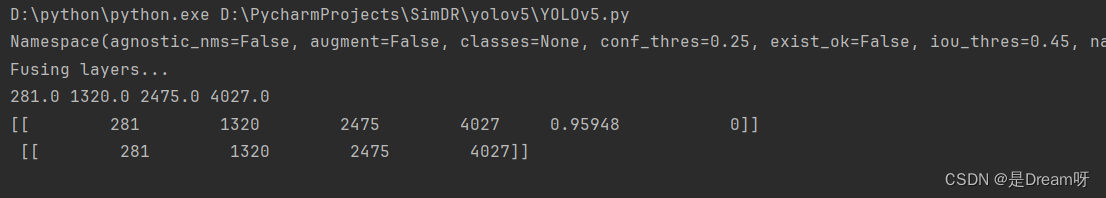

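2. Getting bounding boxes from an image

Create a new .py file in the yolov5 folder and put the code below in it. Point_detect.py later imports it with from yolov5.YOLOv5 import Yolov5, so name the file YOLOv5.py. If you get a pile of errors, don't panic; we'll work through them one at a time: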

    import argparse
    import time
    from pathlib import Path

    import numpy as np
    import cv2
    import torch
    import torch.backends.cudnn as cudnn
    from numpy import random
    import sys
    import os

    # Imports that resolve when the yolov5 folder itself is on sys.path
    # (for example when this file is run from inside yolov5).
    from models.experimental import attempt_load
    from utils.datasets import LoadStreams, LoadImages
    from utils.general import (check_img_size, check_requirements, check_imshow, non_max_suppression,
                               apply_classifier, scale_coords, xyxy2xywh, strip_optimizer, set_logging,
                               increment_path)
    from utils.plots import plot_one_box
    from utils.torch_utils import select_device, load_classifier, time_synchronized
    from utils.datasets import letterbox

    # The same imports, package-qualified, which resolve when the SimDR root is on sys.path
    # (for example when Point_detect.py imports this module). Both groups must succeed,
    # so both folders need to be importable.
    from yolov5.models.experimental import attempt_load
    from yolov5.utils.datasets import LoadStreams, LoadImages
    from yolov5.utils.general import (check_img_size, check_requirements, check_imshow, non_max_suppression,
                                      apply_classifier, scale_coords, xyxy2xywh, strip_optimizer, set_logging,
                                      increment_path)
    from yolov5.utils.plots import plot_one_box
    from yolov5.utils.torch_utils import select_device, load_classifier, time_synchronized
    from yolov5.utils.datasets import letterbox


    class Yolov5():
        def __init__(self, weights=None, opt=None, device=None):
            """
            @param weights:
            @param save_txt:
            @param opt:
            @param device:
            """
            self.weights = weights
            self.device = device
            # save_dir = Path(increment_path(Path(opt.project) / opt.name, exist_ok=opt.exist_ok))  # increment run
            # save_dir.mkdir(parents=True, exist_ok=True)  # make dir
            self.img_size = 640
            self.model = attempt_load(weights, map_location=self.device)
            self.stride = int(self.model.stride.max())
            self.names = self.model.module.names if hasattr(self.model, 'module') else self.model.names
            self.colors = [[random.randint(0, 255) for _ in range(3)] for _ in self.names]
            self.opt = opt

        def detect(self, img0):
            """
            @param img0: input image, shape=[h,w,3]
            @return: (person rows [x1, y1, x2, y2, conf, cls], person boxes [x1, y1, x2, y2])
            """
            # Dummy first row so that np.vstack has something to stack onto; it is dropped below.
            person_boxes = np.ones((6))
            img = letterbox(img0, self.img_size, stride=self.stride)[0]

            # Convert
            img = img[:, :, ::-1].transpose(2, 0, 1)  # BGR to RGB, to 3x416x416
            img = np.ascontiguousarray(img)
            img = torch.from_numpy(img).to(self.device)
            img = img.float()  # uint8 to fp16/32
            img /= 255.0  # 0 - 255 to 0.0 - 1.0
            if img.ndimension() == 3:
                img = img.unsqueeze(0)
            pred = self.model(img, augment=self.opt.augment)[0]

            # Apply NMS
            pred = non_max_suppression(pred, self.opt.conf_thres, self.opt.iou_thres, classes=self.opt.classes,
                                       agnostic=self.opt.agnostic_nms)
            for i, det in enumerate(pred):
                if len(det):
                    # Rescale boxes from img_size to im0 size
                    det[:, :4] = scale_coords(img.shape[2:], det[:, :4], img0.shape).round()
                    boxes = reversed(det)
                    # Changed 2022-04-06: when running on the GPU, boxes cannot be converted
                    # to NumPy directly, so move them to the CPU first.
                    boxes = boxes.cpu().numpy()
                    # for i, box in enumerate(np.array(boxes)):
                    for i, box in enumerate(boxes):
                        # Keep only class 0 (person) with confidence >= 0.7
                        if int(box[-1]) == 0 and box[-2] >= 0.7:
                            person_boxes = np.vstack((person_boxes, box))
                        # label = f'{self.names[int(box[-1])]} {box[-2]:.2f}'
                        # print(label)
                        # plot_one_box(box, img0, label=label, color=self.colors[int(box[-1])], line_thickness=3)
                # cv2.imwrite('result1.jpg', img0)
                # print(s)
                # print(person_boxes, np.ndim(person_boxes))
            if np.ndim(person_boxes) >= 2:
                person_boxes_result = person_boxes[1:]
                boxes_result = person_boxes[1:, :4]
            else:
                person_boxes_result = []
                boxes_result = []
            return person_boxes_result, boxes_result


    def yolov5test(opt, path=''):
        # The weights path is relative, so run this test from inside the yolov5 folder.
        detector = Yolov5(weights='weights/yolov5x.pt', opt=opt, device=torch.device('cpu'))
        img0 = cv2.imread(path)
        personboxes, boxes = detector.detect(img0)
        for i, (x1, y1, x2, y2) in enumerate(boxes):
            print(x1, y1, x2, y2)
        print(personboxes, '\n', boxes)


    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument('--conf-thres', type=float, default=0.25, help='object confidence threshold')
        parser.add_argument('--iou-thres', type=float, default=0.45, help='IOU threshold for NMS')
        parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --class 0, or --class 0 2 3')
        parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
        parser.add_argument('--augment', action='store_true', help='augmented inference')
        parser.add_argument('--update', action='store_true', help='update all model')
        parser.add_argument('--project', default='runs/detect', help='save results to project/name')
        parser.add_argument('--name', default='exp', help='save results to project/name')
        parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
        opt = parser.parse_args()
        print(opt)
        # check_requirements(exclude=('pycocotools', 'thop'))
        with torch.no_grad():
            yolov5test(opt, 'data/images/zidane.jpg')

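If nothing goes wrong, the script prints the coordinates of the detected person boxes. If you do hit errors, don't worry; the common ones are listed in the error-analysis section further down.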
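The key step in detect() is the filter that turns all detections into person boxes: a row is kept only if its class id is 0 (person) and its confidence is at least 0.7, a threshold hard-coded on top of the --conf-thres value used for NMS. Here is a dependency-free sketch of that filter on made-up rows in the [x1, y1, x2, y2, conf, cls] layout; `keep_person_boxes` and the sample numbers are illustrative only.

```python
from typing import List, Sequence

PERSON_CLASS = 0   # class id 0 is "person" in the COCO label set
MIN_CONF = 0.7     # same hard-coded threshold as in detect()


def keep_person_boxes(
    detections: Sequence[Sequence[float]], min_conf: float = MIN_CONF
) -> List[List[float]]:
    """Keep [x1, y1, x2, y2] of rows whose class is person and whose confidence is high enough."""
    return [
        list(row[:4])
        for row in detections
        if int(row[5]) == PERSON_CLASS and row[4] >= min_conf
    ]


# Made-up detections: [x1, y1, x2, y2, conf, cls]
dets = [
    [10.0, 20.0, 110.0, 220.0, 0.91, 0.0],  # confident person -> kept
    [30.0, 40.0, 90.0, 160.0, 0.55, 0.0],   # person below the threshold -> dropped
    [5.0, 5.0, 50.0, 50.0, 0.95, 27.0],     # confident, but not a person -> dropped
]

print(keep_person_boxes(dets))
```

If people are missing from your results, lowering the 0.7 threshold in detect() is the first thing to try, at the cost of more false positives being passed on to the pose network.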

3. Adding the SPPF module

The yolov5 v5.0 project does not contain an SPPF module, so append the following code to the end of SimDR/yolov5/models/common.py:

    import warnings


    # nn, torch and Conv are already available inside models/common.py.
    class SPPF(nn.Module):
        # Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocher
        def __init__(self, c1, c2, k=5):  # equivalent to SPP(k=(5, 9, 13))
            super().__init__()
            c_ = c1 // 2  # hidden channels
            self.cv1 = Conv(c1, c_, 1, 1)
            self.cv2 = Conv(c_ * 4, c2, 1, 1)
            self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

        def forward(self, x):
            x = self.cv1(x)
            with warnings.catch_warnings():
                warnings.simplefilter('ignore')  # suppress torch 1.9.0 max_pool2d() warning
                y1 = self.m(x)
                y2 = self.m(y1)
                return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))
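The comment in the SPPF code says it is equivalent to SPP(k=(5, 9, 13)). The reason is that chaining stride-1 max pools widens the window: two 5-wide pools cover 9 positions and three cover 13. The sketch below checks this in one dimension with plain Python lists. `max_pool_1d` is a toy stand-in written for this example, not the PyTorch layer; it clips the window at the borders, which gives the same result as the implicit negative-infinity padding of nn.MaxPool2d.

```python
from typing import List


def max_pool_1d(values: List[float], k: int) -> List[float]:
    """Stride-1 max pooling with an odd window k, window clipped at the borders."""
    r = k // 2
    n = len(values)
    return [max(values[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


signal = [3.0, -1.0, 4.0, 1.0, -5.0, 9.0, 2.0, -6.0, 5.0, 3.0, 5.0, -8.0, 9.0, 7.0]

y1 = max_pool_1d(signal, 5)  # plays the role of self.m(x)
y2 = max_pool_1d(y1, 5)      # self.m(y1)
y3 = max_pool_1d(y2, 5)      # self.m(y2)

# Chained 5-wide pools match single 9-wide and 13-wide pools.
assert y2 == max_pool_1d(signal, 9)
assert y3 == max_pool_1d(signal, 13)
print("chained k=5 pooling reproduces k=9 and k=13")
```

The same argument applies per axis in 2-D, which is why SPPF can reuse one small pooling layer three times instead of running three separate large ones.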

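III. Pose estimation

1. Adding the weights

In the SimDR folder, create a weight/hrnet folder and add pose_hrnet_w48_384x288.pth together with the two other similar files (three in total). These files are also in the weights link mentioned above, under the path weight\hrnet:

![image](https://img-blog.csdnimg.cn/direct/0d3aedb5265a4f02910f9cc71801632f.png)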
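Since a misplaced weight file is one of the easiest mistakes to make here, a small check of the expected layout can save a debugging session. This is a sketch under assumptions: `missing_files` is a helper invented for this example, and `EXPECTED` lists only the two files named explicitly in this article (the other two HRNet files are not named, so they are left out).

```python
from pathlib import Path
from typing import List, Sequence

# Paths relative to the SimDR project root, as described in this article.
EXPECTED = (
    "yolov5/weights/yolov5x.pt",
    "weight/hrnet/pose_hrnet_w48_384x288.pth",
)


def missing_files(root: Path, relative_paths: Sequence[str] = EXPECTED) -> List[str]:
    """Return the entries of relative_paths that are not regular files under root."""
    return [p for p in relative_paths if not (root / p).is_file()]


if __name__ == "__main__":
    # Run from the SimDR folder, or replace "." with the path to your clone.
    for path in missing_files(Path(".")):
        print("missing weight file:", path)
```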

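2. Editing the yaml file

The SimDR/experiments/ folder holds the configuration files for the coco and mpii datasets. This article works with coco and only demonstrates one of the configurations, heatmap; the other two are edited in exactly the same way:

![image](https://img-blog.csdnimg.cn/direct/ff662ea12e584aeea377ac736b5ae22f.png)

In ./SimDR/experiments/coco/hrnet/heatmap/w48_384x288_adam_lr1e-3.yaml, change the MODEL_FILE path in the TEST section, as shown:

![image](https://img-blog.csdnimg.cn/direct/727a2a19b39441aca3f0930622319cd9.png)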
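Editing the file by hand is all that is needed, but if you want to script the change for several config files, here is a rough text-based sketch. Everything in it is an assumption made for illustration: the helper `set_test_model_file`, the tiny `sample` config (not the real file contents), and the expectation that MODEL_FILE appears as an indented key under a top-level TEST: block. It avoids a YAML library on purpose so that the rest of the file is left untouched.

```python
import re


def set_test_model_file(yaml_text: str, new_path: str) -> str:
    """Replace the value of MODEL_FILE inside the top-level TEST: block only."""
    out = []
    in_test = False
    for line in yaml_text.splitlines():
        if line and not line[0].isspace():
            # A non-indented line starts a new top-level block.
            in_test = line.startswith("TEST:")
        elif in_test and re.match(r"\s+MODEL_FILE\s*:", line):
            indent = line[: len(line) - len(line.lstrip())]
            line = f"{indent}MODEL_FILE: '{new_path}'"
        out.append(line)
    return "\n".join(out)


# Made-up miniature config, only to exercise the function.
sample = """\
MODEL:
  NAME: pose_hrnet
TEST:
  BATCH_SIZE_PER_GPU: 32
  MODEL_FILE: ''
  FLIP_TEST: true
"""

updated = set_test_model_file(sample, "weight/hrnet/pose_hrnet_w48_384x288.pth")
print(updated)
```

Whether a relative path like the one above resolves depends on the folder you run the scripts from, so an absolute path is the safer choice if you hit path errors.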

3. Getting the keypoints

Create Point_detect.py in the SimDR folder and put the following code in it:

    import cv2
    import numpy as np
    import torch
    from torchvision.transforms import transforms
    import torch.nn.functional as F
    from lib.config import cfg
    from yolov5.YOLOv5 import Yolov5
    from lib.utils.transforms import flip_back_simdr, transform_preds, get_affine_transform
    from lib import models
    import argparse
    import sys

    # Hard-coded path from the author's machine: change it to your own SimDR/yolov5 folder.
    sys.path.insert(0, 'D:\\Study\\Pose Estimation\\SimDR\\yolov5')


    class Points():
        def __init__(self,
                     model_name='sa-simdr',
                     resolution=(384, 288),
                     opt=None,
                     yolo_weights_path="./yolov5/weights/yolov5x.pt",
                     ):
            """
            Initializes a new SimpleHRNet object.
            HRNet (and YOLOv3) are initialized on the torch.device("device") and
            its (their) pre-trained weights will be loaded from disk.

            Args:
                c (int): number of channels (when using HRNet model) or resnet size (when using PoseResNet model).
                nof_joints (int): number of joints.
                checkpoint_path (str): path to an official hrnet checkpoint or a checkpoint obtained with `train_coco.py`.
                model_name (str): model name (HRNet or PoseResNet).
                    Valid names for HRNet are: `HRNet`, `hrnet`
                    Valid names for PoseResNet are: `PoseResNet`, `poseresnet`, `ResNet`, `resnet`
                    Default: "HRNet"
                resolution (tuple): hrnet input resolution - format: (height, width).
                    Default: (384, 288)
                interpolation (int): opencv interpolation algorithm.
                    Default: cv2.INTER_CUBIC
                multiperson (bool): if True, multiperson detection will be enabled.
                    This requires the use of a people detector (like YOLOv3).
                    Default: True
                return_heatmaps (bool): if True, heatmaps will be returned along with poses by self.predict.
                    Default: False
                return_bounding_boxes (bool): if True, bounding boxes will be returned along with poses by self.predict.
                    Default: False
                max_batch_size (int): maximum batch size used in hrnet inference.
                    Useless without multiperson=True.
                    Default: 16
                yolo_model_def (str): path to yolo model definition file.
                    Default: "./model/detectors/yolo/config/yolov3.cfg"
                yolo_class_path (str): path to yolo class definition file.
                    Default: "./model/detectors/yolo/data/coco.names"
                yolo_weights_path (str): path to yolo pretrained weights file.
                    Default: "./model/detectors/yolo/weights/yolov3.weights.cfg"
                device (:class:`torch.device`): the hrnet (and yolo) inference will be run on this device.
                    Default: torch.device("cpu")
            """
            self.model_name = model_name
            self.resolution = resolution  # in the form (height, width) as in the original implementation
            self.aspect_ratio = resolution[1] / resolution[0]
            self.yolo_weights_path = yolo_weights_path
            self.flip_pairs = [[1, 2], [3, 4], [5, 6], [7, 8],
                               [9, 10], [11, 12], [13, 14], [15, 16]]
            # opt must provide a `device` attribute (e.g. 'cpu' or 'cuda:0').
            self.device = torch.device(opt.device)
            cfg.defrost()

            if model_name in ('sa-simdr', 'sasimdr', 'sa_simdr'):
                if resolution == (384, 288):
                    cfg.merge_from_file('./experiments/coco/hrnet/sa_simdr/w48_384x288_adam_lr1e-3_split1_5_sigma4.yaml')
                elif resolution == (256, 192):
                    cfg.merge_from_file('./experiments/coco/hrnet/sa_simdr/w48_256x192_adam_lr1e-3_split2_sigma4.yaml')
                else:
                    raise ValueError('Wrong cfg file')
            elif model_name in ('simdr',):  # one-element tuple; ('simdr') would be a substring test
                if resolution == (256, 192):
                    cfg.merge_from_file('./experiments/coco/hrnet/simdr/nmt_w48_256x192_adam_lr1e-3.yaml')
                else:
                    raise ValueError('Wrong cfg file')
            elif model_name in ('hrnet', 'HRnet', 'Hrnet'):
                if resolution == (384, 288):
                    cfg.merge_from_file('./experiments/coco/hrnet/heatmap/w48_384x288_adam_lr1e-3.yaml')
                elif resolution == (256, 192):
                    cfg.merge_from_file('./experiments/coco/hrnet/heatmap/w48_256x192_adam_lr1e-3.yaml')
                else:
                    raise ValueError('Wrong cfg file')
            else:
                raise ValueError('Wrong model name.')
            cfg.freeze()

            self.model = eval('models.' + cfg.MODEL.NAME + '.get_pose_net')(cfg, is_train=False)
            print('=> loading model from {}'.format(cfg.TEST.MODEL_FILE))
            checkpoint_path = cfg.TEST.MODEL_FILE
            checkpoint = torch.load(checkpoint_path, map_location=self.device)
            if 'model' in checkpoint:
                self.model.load_state_dict(checkpoint['model'])
            else:
                self.model.load_state_dict(checkpoint)

            if 'cuda' in str(self.device):
                print("device: 'cuda' - ", end="")
                if 'cuda' == str(self.device):
                    # if device is set to 'cuda', all available GPUs will be used
                    print("%d GPU(s) will be used" % torch.cuda.device_count())
                    device_ids = None
                else:
                    # if device is set to 'cuda:IDS', only that/those device(s) will be used
                    print("GPU(s) '%s' will be used" % str(self.device))
                    device_ids = [int(x) for x in str(self.device)[5:].split(',')]
            elif 'cpu' == str(self.device):
                print("device: 'cpu'")
            else:
                raise ValueError('Wrong device name.')

            self.model = self.model.to(self.device)
            self.model.eval()
            self.detector = Yolov5(weights=yolo_weights_path, opt=opt, device=self.device)
            self.transform = transforms.Compose([
                transforms.ToPILImage(),
                transforms.Resize((self.resolution[0], self.resolution[1])),  # (height, width)
                transforms.ToTensor(),
                transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
            ])

        def _box2cs(self, box):
            x, y, w, h = box[:4]
            return self._xywh2cs(x, y, w, h)

        def _xywh2cs(self, x, y, w, h):
            center = np.zeros((2), dtype=np.float32)
            center[0] = x + w * 0.5
            center[1] = y + h * 0.5
            # Grow the shorter side so the box matches the network's aspect ratio.
            if w > self.aspect_ratio * h:
                h = w * 1.0 / self.aspect_ratio
            elif w < self.aspect_ratio * h:
                w = h * self.aspect_ratio
            scale = np.array([w * 1.0 / 200, h * 1.0 / 200], dtype=np.float32)
            if center[0] != -1:
                scale = scale * 1.25
            return center, scale

        def predict(self, image):
            """
            Predicts the human pose on a single image or a stack of n images.

            Args:
                image (:class:`np.ndarray`):
                    the image(s) on which the human pose will be estimated.
                    image is expected to be in the opencv format.
                    image can be:
                        - a single image with shape=(height, width, BGR color channel)
                        - a stack of n images with shape=(n, height, width, BGR color channel)

            Returns:
                :class:`np.ndarray` or list:
                    a numpy array containing human joints for each (detected) person.
                    Format:
                        if image is a single image:
                            shape=(# of people, # of joints (nof_joints), 3); dtype=(np.float32).
                        if image is a stack of n images:
                            list of n np.ndarrays with
                            shape=(# of people, # of joints (nof_joints), 3); dtype=(np.float32).
                    Each joint has 3 values: (x position, y position, joint confidence).
                    If self.return_heatmaps, the class returns a list with (heatmaps, human joints)
                    If self.return_bounding_boxes, the class returns a list with (bounding boxes, human joints)
                    If self.return_heatmaps and self.return_bounding_boxes, the class returns a list with
                    (heatmaps, bounding boxes, human joints)
            """
            # Only single images are handled in this version.
            if len(image.shape) == 3:
                return self._predict_single(image)
            else:
                raise ValueError('Wrong image format.')

        def sa_simdr_pts(self, img, detection, images, boxes):
            c, s = [], []
            if detection is not None:
                for i, (x1, y1, x2, y2) in enumerate(detection):
                    x1 = int(round(x1.item()))
                    x2 = int(round(x2.item()))
                    y1 = int(round(y1.item()))
                    y2 = int(round(y2.item()))
                    boxes[i] = [x1, y1, x2, y2]
                    w, h = x2 - x1, y2 - y1
                    xx1 = np.max((0, x1))
                    yy1 = np.max((0, y1))
                    xx2 = np.min((img.shape[1] - 1, x1 + np.max((0, w - 1))))
                    yy2 = np.min((img.shape[0] - 1, y1 + np.max((0, h - 1))))
                    box = [xx1, yy1, xx2 - xx1, yy2 - yy1]
                    center, scale = self._box2cs(box)
                    c.append(center)
                    s.append(scale)

                    trans = get_affine_transform(center, scale, 0, np.array(cfg.MODEL.IMAGE_SIZE))
                    input = cv2.warpAffine(
                        img,
                        trans,
                        (int(self.resolution[1]), int(self.resolution[0])),
                        flags=cv2.INTER_LINEAR)
                    images[i] = self.transform(input)
            if images.shape[0] > 0:
                images = images.to(self.device)
                with torch.no_grad():
                    output_x, output_y = self.model(images)
                    if cfg.TEST.FLIP_TEST:
                        input_flipped = images.flip(3)
                        output_x_flipped_, output_y_flipped_ = self.model(input_flipped)
                        output_x_flipped = flip_back_simdr(output_x_flipped_.cpu().numpy(),
                                                           self.flip_pairs, type='x')
                        output_y_flipped = flip_back_simdr(output_y_flipped_.cpu().numpy(),
                                                           self.flip_pairs, type='y')
                        output_x_flipped = torch.from_numpy(output_x_flipped.copy()).to(self.device)
                        output_y_flipped = torch.from_numpy(output_y_flipped.copy()).to(self.device)

                        # feature is not aligned, shift flipped heatmap for higher accuracy
                        if cfg.TEST.SHIFT_HEATMAP:
                            output_x_flipped[:, :, 0:-1] = \
                                output_x_flipped.clone()[:, :, 1:]
                        output_x = F.softmax((output_x + output_x_flipped) * 0.5, dim=2)
                        output_y = F.softmax((output_y + output_y_flipped) * 0.5, dim=2)
                    else:
                        output_x = F.softmax(output_x, dim=2)
                        output_y = F.softmax(output_y, dim=2)
                max_val_x, preds_x = output_x.max(2, keepdim=True)
                max_val_y, preds_y = output_y.max(2, keepdim=True)

                # Confidence: the smaller of the two per-axis maxima.
                mask = max_val_x > max_val_y
                max_val_x[mask] = max_val_y[mask]
                maxvals = max_val_x * 10.0

                output = torch.ones([images.size(0), preds_x.size(1), 3])
                output[:, :, 0] = torch.squeeze(torch.true_divide(preds_x, cfg.MODEL.SIMDR_SPLIT_RATIO))
                output[:, :, 1] = torch.squeeze(torch.true_divide(preds_y, cfg.MODEL.SIMDR_SPLIT_RATIO))
                # output[:, :, 2] = maxvals.squeeze(2)
                output = output.cpu().numpy()
                preds = output.copy()
                for i in range(output.shape[0]):
                    preds[i] = transform_preds(
                        output[i], c[i], s[i], [cfg.MODEL.IMAGE_SIZE[0], cfg.MODEL.IMAGE_SIZE[1]]
                    )
                preds[:, :, 2] = maxvals.squeeze(2)
            else:
                preds = np.empty((0, 0, 3), dtype=np.float32)
            return preds

        def simdr_pts(self, img, detection, images, boxes):
            c, s = [], []
            if detection is not None:
                for i, (x1, y1, x2, y2) in enumerate(detection):
                    x1 = int(round(x1.item()))
                    x2 = int(round(x2.item()))
                    y1 = int(round(y1.item()))
                    y2 = int(round(y2.item()))
                    boxes[i] = [x1, y1, x2, y2]
                    w, h = x2 - x1, y2 - y1
                    xx1 = np.max((0, x1))
                    yy1 = np.max((0, y1))
                    xx2 = np.min((img.shape[1] - 1, x1 + np.max((0, w - 1))))
                    yy2 = np.min((img.shape[0] - 1, y1 + np.max((0, h - 1))))
                    box = [xx1, yy1, xx2 - xx1, yy2 - yy1]
                    center, scale = self._box2cs(box)
                    c.append(center)
                    s.append(scale)

                    trans = get_affine_transform(center, scale, 0, np.array(cfg.MODEL.IMAGE_SIZE))
                    input = cv2.warpAffine(
                        img,
                        trans,
                        (int(self.resolution[1]), int(self.resolution[0])),
                        flags=cv2.INTER_LINEAR)
                    images[i] = self.transform(input)
            if images.shape[0] > 0:
                images = images.to(self.device)
                with torch.no_grad():
                    output_x, output_y = self.model(images)
                    if cfg.TEST.FLIP_TEST:
                        input_flipped = images.flip(3)
                        output_x_flipped_, output_y_flipped_ = self.model(input_flipped)
                        output_x_flipped = flip_back_simdr(output_x_flipped_.cpu().numpy(),
                                                           self.flip_pairs, type='x')
                        output_y_flipped = flip_back_simdr(output_y_flipped_.cpu().numpy(),
                                                           self.flip_pairs, type='y')
                        output_x_flipped = torch.from_numpy(output_x_flipped.copy()).to(self.device)
                        output_y_flipped = torch.from_numpy(output_y_flipped.copy()).to(self.device)

                        # feature is not aligned, shift flipped heatmap for higher accuracy
                        if cfg.TEST.SHIFT_HEATMAP:
                            output_x_flipped[:, :, 0:-1] = \
                                output_x_flipped.clone()[:, :, 1:]
                        output_x = (F.softmax(output_x, dim=2) + F.softmax(output_x_flipped, dim=2)) * 0.5
                        output_y = (F.softmax(output_y, dim=2) + F.softmax(output_y_flipped, dim=2)) * 0.5
                    else:
                        output_x = F.softmax(output_x, dim=2)
                        output_y = F.softmax(output_y, dim=2)
                max_val_x, preds_x = output_x.max(2, keepdim=True)
                max_val_y, preds_y = output_y.max(2, keepdim=True)

                mask = max_val_x > max_val_y
                max_val_x[mask] = max_val_y[mask]
                maxvals = max_val_x * 10.0

                output = torch.ones([images.size(0), preds_x.size(1), 3])
                output[:, :, 0] = torch.squeeze(torch.true_divide(preds_x, cfg.MODEL.SIMDR_SPLIT_RATIO))
                output[:, :, 1] = torch.squeeze(torch.true_divide(preds_y, cfg.MODEL.SIMDR_SPLIT_RATIO))
                output = output.cpu().numpy()
                preds = output.copy()
                for i in range(output.shape[0]):
                    preds[i] = transform_preds(
                        output[i], c[i], s[i], [cfg.MODEL.IMAGE_SIZE[0], cfg.MODEL.IMAGE_SIZE[1]]
                    )
                preds[:, :, 2] = maxvals.squeeze(2)
            else:
                preds = np.empty((0, 0, 3), dtype=np.float32)
            return preds

        def hrnet_pts(self, img, detection, images, boxes):
            if detection is not None:
                for i, (x1, y1, x2, y2) in enumerate(detection):
                    x1 = int(round(x1.item()))
                    x2 = int(round(x2.item()))
                    y1 = int(round(y1.item()))
                    y2 = int(round(y2.item()))

                    # Adapt detections to match HRNet input aspect ratio (as suggested by xtyDoge in issue #14)
                    correction_factor = self.resolution[0] / self.resolution[1] * (x2 - x1) / (y2 - y1)
                    if correction_factor > 1:
                        # increase y side
                        center = y1 + (y2 - y1) // 2
                        length = int(round((y2 - y1) * correction_factor))
                        y1 = max(0, center - length // 2)
                        y2 = min(img.shape[0], center + length // 2)
                    elif correction_factor < 1:
                        # increase x side
                        center = x1 + (x2 - x1) // 2
                        length = int(round((x2 - x1) * 1 / correction_factor))
                        x1 = max(0, center - length // 2)
                        x2 = min(img.shape[1], center + length // 2)

                    boxes[i] = [x1, y1, x2, y2]
                    images[i] = self.transform(img[y1:y2, x1:x2, ::-1])
            if images.shape[0] > 0:
                images = images.to(self.device)
                with torch.no_grad():
                    out = self.model(images)
                out = out.detach().cpu().numpy()
                pts = np.empty((out.shape[0], out.shape[1], 3), dtype=np.float32)
                # For each human, for each joint: x, y, confidence
                for i, human in enumerate(out):
                    for j, joint in enumerate(human):
                        pt = np.unravel_index(np.argmax(joint), (self.resolution[0] // 4, self.resolution[1] // 4))
                        # 0: pt_col / (width // 4) * (bb_x2 - bb_x1) + bb_x1
                        # 1: pt_row / (height // 4) * (bb_y2 - bb_y1) + bb_y1
                        # 2: confidences
                        pts[i, j, 0] = pt[1] * 1. / (self.resolution[1] // 4) * (boxes[i][2] - boxes[i][0]) + boxes[i][0]
                        pts[i, j, 1] = pt[0] * 1. / (self.resolution[0] // 4) * (boxes[i][3] - boxes[i][1]) + boxes[i][1]
                        pts[i, j, 2] = joint[pt]
            else:
                pts = np.empty((0, 0, 3), dtype=np.float32)
            return pts

        def _predict_single(self, image):
            _, detections = self.detector.detect(image)
            nof_people = len(detections) if detections is not None else 0
            boxes = np.empty((nof_people, 4), dtype=np.int32)
            images = torch.empty((nof_people, 3, self.resolution[0], self.resolution[1]))  # (height, width)

            # The accepted names mirror the ones checked in __init__.
            if self.model_name in ('sa-simdr', 'sasimdr', 'sa_simdr'):
                pts = self.sa_simdr_pts(image, detections, images, boxes)
            elif self.model_name in ('hrnet', 'HRnet', 'Hrnet'):
                pts = self.hrnet_pts(image, detections, images, boxes)
            elif self.model_name in ('simdr',):
                pts = self.simdr_pts(image, detections, images, boxes)
            return pts

            # c, s = [], []
            # if detections is not None:
            #     for i, (x1, y1, x2, y2) in enumerate(detections):
            #         x1 = int(round(x1.item()))
            #         x2 = int(round(x2.item()))
            #         y1 = int(round(y1.item()))
            #         y2 = int(round(y2.item()))
            #         boxes[i] = [x1, y1, x2, y2]
            #         w, h = x2 - x1, y2 - y1
            #         xx1 = np.max((0, x1))
            #         yy1 = np.max((0, y1))
            #         xx2 = np.min((image.shape[1] - 1, x1 + np.max((0, w - 1))))
            #         yy2 = np.min((image.shape[0] - 1, y1 + np.max((0, h - 1))))
            #         box = [xx1, yy1, xx2 - xx1, yy2 - yy1]
            #         center, scale = self._box2cs(box)
            #         c.append(center)
            #         s.append(scale)
            #
            #         trans = get_affine_transform(center, scale, 0, np.array(cfg.MODEL.IMAGE_SIZE))
            #         input = cv2.warpAffine(
            #             image,
            #             trans,
            #             (int(self.resolution[1]), int(self.resolution[0])),
            #             flags=cv2.INTER_LINEAR)
            #         images[i] = self.transform(input)
            # if images.shape[0] > 0:
            #     images = images.to(self.device)
            #     with torch.no_grad():
            #         output_x, output_y = self.model(images)
            #         if cfg.TEST.FLIP_TEST:
            #             input_flipped = images.flip(3)
            #             output_x_flipped_, output_y_flipped_ = self.model(input_flipped)
            #             output_x_flipped = flip_back_simdr(output_x_flipped_.cpu().numpy(),
            #                                                self.flip_pairs, type='x')
            #             output_y_flipped = flip_back_simdr(output_y_flipped_.cpu().numpy(),
            #                                                self.flip_pairs, type='y')
            #             output_x_flipped = torch.from_numpy(output_x_flipped.copy()).to(self.device)
            #             output_y_flipped = torch.from_numpy(output_y_flipped.copy()).to(self.device)
            #
            #             # feature is not aligned, shift flipped heatmap for higher accuracy
            #             if cfg.TEST.SHIFT_HEATMAP:
            #                 output_x_flipped[:, :, 0:-1] = \
            #                     output_x_flipped.clone()[:, :, 1:]
            #             output_x = F.softmax((output_x + output_x_flipped) * 0.5, dim=2)
            #             output_y = F.softmax((output_y + output_y_flipped) * 0.5, dim=2)
            #         else:
            #             output_x = F.softmax(output_x, dim=2)
            #             output_y = F.softmax(output_y, dim=2)
            #     max_val_x, preds_x = output_x.max(2, keepdim=True)
            #     max_val_y, preds_y = output_y.max(2, keepdim=True)
            #
            #     mask = max_val_x > max_val_y
            #     max_val_x[mask] = max_val_y[mask]
            #     maxvals = max_val_x.cpu().numpy()
            #
            #     output = torch.ones([images.size(0), preds_x.size(1), 2])
            #     output[:, :, 0] = torch.squeeze(torch.true_divide(preds_x, cfg.MODEL.SIMDR_SPLIT_RATIO))
            #     output[:, :, 1] = torch.squeeze(torch.true_divide(preds_y, cfg.MODEL.SIMDR_SPLIT_RATIO))
            #
            #     output = output.cpu().numpy()
            #     preds = output.copy()
            #     for i in range(output.shape[0]):
            #         preds[i] = transform_preds(
            #             output[i], c[i], s[i], [cfg.MODEL.IMAGE_SIZE[0], cfg.MODEL.IMAGE_SIZE[1]]
            #         )
            # else:
            #     preds = np.empty((0, 0, 2), dtype=np.float32)
            # return preds


    # Commented-out usage example. To run it, opt also needs a `device` attribute,
    # because Points.__init__ reads opt.device.
    # parser = argparse.ArgumentParser()
    # parser.add_argument('--conf-thres', type=float, default=0.25, help='object confidence threshold')
    # parser.add_argument('--iou-thres', type=float, default=0.45, help='IOU threshold for NMS')
    # parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --class 0, or --class 0 2 3')
    # parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
    # parser.add_argument('--augment', action='store_true', help='augmented inference')
    # parser.add_argument('--update', action='store_true', help='update all model')
    # parser.add_argument('--project', default='runs/detect', help='save results to project/name')
    # parser.add_argument('--name', default='exp', help='save results to project/name')
    # parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
    # opt = parser.parse_args()
    # model = Points(model_name='hrnet', opt=opt)
    # img0 = cv2.imread('./data/test1.jpg')
    # pts = model.predict(img0)
    # print(pts.shape)
    # for point in pts[0]:
    #     image = cv2.circle(img0, (int(point[0]), int(point[1])), 3, [255, 0, 255], -1, lineType=cv2.LINE_AA)
    # cv2.imwrite('./data/test11_result.jpg', image)
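The heart of sa_simdr_pts and simdr_pts is the decoding step: for every joint the network outputs one 1-D score vector along x and one along y, each with SIMDR_SPLIT_RATIO bins per pixel, and the coordinate is simply the arg-max bin divided by the split ratio. The sketch below reproduces that idea for a single joint with plain Python lists. It is a simplified illustration, not the project code: `decode_simdr` and the sample logits are made up, and it leaves out the flip test, the factor of 10 applied to the confidence, and the transform_preds mapping back to the original image.

```python
import math
from typing import List, Tuple


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def decode_simdr(
    logits_x: List[float], logits_y: List[float], split_ratio: float
) -> Tuple[float, float, float]:
    """Return (x, y, confidence) in network-input pixels for one joint."""
    px, py = softmax(logits_x), softmax(logits_y)
    ix = max(range(len(px)), key=px.__getitem__)  # arg-max bin along x
    iy = max(range(len(py)), key=py.__getitem__)  # arg-max bin along y
    # Confidence is the smaller of the two peak probabilities, as in the mask step.
    conf = min(px[ix], py[iy])
    return ix / split_ratio, iy / split_ratio, conf


# Toy joint: a 4x3 pixel input with split ratio 2 gives 8 x-bins and 6 y-bins.
logits_x = [0.0, 0.1, 0.0, 0.2, 0.1, 5.0, 0.3, 0.0]  # peak at bin 5 -> x = 2.5
logits_y = [0.0, 0.2, 4.0, 0.1, 0.0, 0.0]            # peak at bin 2 -> y = 1.0

x, y, conf = decode_simdr(logits_x, logits_y, split_ratio=2)
print(x, y, round(conf, 3))
```

The sub-pixel value x = 2.5 is the point of the split ratio: more bins than pixels lets the model express positions between pixel centers.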

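4. Drawing the skeleton keypoints

With the steps above we now have the keypoint coordinates. Next we draw them on the image so the detection result can be displayed. Create visualization.py in the SimDR/lib/utils/ folder and put the code below in it. The skeleton-drawing code combines the simple-hrnet and Openpose projects: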

import cv2

import matplotlib.pyplot as plt

import numpy as np

import torch

import torchvision

import ffmpeg

import random

import math

import copy

def plot_one_box(x, img, color=None, label=None, line_thickness=3):# Plots one bounding box on image imgtl = line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1 # line/font thicknesscolor = color or [random.randint(0, 255) for _ in range(3)]c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)if label:tf = max(tl - 1, 1) # font thicknesst_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA) # filledcv2.putText(img, label, (c1[0], c1[1] - 2), 0, tl / 3, [225, 255, 255], thickness=tf, lineType=cv2.LINE_AA)return imgdef joints_dict():joints = {"coco": {"keypoints": {0: "nose",1: "left_eye",2: "right_eye",3: "left_ear",4: "right_ear",5: "left_shoulder",6: "right_shoulder",7: "left_elbow",8: "right_elbow",9: "left_wrist",10: "right_wrist",11: "left_hip",12: "right_hip",13: "left_knee",14: "right_knee",15: "left_ankle",16: "right_ankle"},"skeleton": [# # [16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13], [6, 7], [6, 8],# # [7, 9], [8, 10], [9, 11], [2, 3], [1, 2], [1, 3], [2, 4], [3, 5], [4, 6], [5, 7]# [15, 13], [13, 11], [16, 14], [14, 12], [11, 12], [5, 11], [6, 12], [5, 6], [5, 7],# [6, 8], [7, 9], [8, 10], [1, 2], [0, 1], [0, 2], [1, 3], [2, 4], [3, 5], [4, 6][15, 13], [13, 11], [16, 14], [14, 12], [11, 12], [5, 11], [6, 12], [5, 6], [5, 7],[6, 8], [7, 9], [8, 10], [1, 2], [0, 1], [0, 2], [1, 3], [2, 4], # [3, 5], [4, 6][0, 5], [0, 6]# [15, 13], [13, 11], [16, 14], [14, 12], [11, 12], [5, 11], [6, 12], [5, 6], [5, 7],# [6, 8], [7, 9], [8, 10], [0, 3], [0, 4], [1, 3], [2, 4], # [3, 5], [4, 6]# [0, 5], [0, 6]]},"mpii": {"keypoints": {0: "right_ankle",1: "right_knee",2: "right_hip",3: "left_hip",4: "left_knee",5: "left_ankle",6: "pelvis",7: "thorax",8: "upper_neck",9: "head top",10: "right_wrist",11: "right_elbow",12: "right_shoulder",13: 
"left_shoulder",14: "left_elbow",15: "left_wrist"},"skeleton": [# [5, 4], [4, 3], [0, 1], [1, 2], [3, 2], [13, 3], [12, 2], [13, 12], [13, 14],# [12, 11], [14, 15], [11, 10], # [2, 3], [1, 2], [1, 3], [2, 4], [3, 5], [4, 6], [5, 7][5, 4], [4, 3], [0, 1], [1, 2], [3, 2], [3, 6], [2, 6], [6, 7], [7, 8], [8, 9],[13, 7], [12, 7], [13, 14], [12, 11], [14, 15], [11, 10],]},}return jointsdef draw_points(image, points, color_palette='tab20', palette_samples=16, confidence_threshold=0.1,color=None):"""Draws `points` on `image`.Args:image: image in opencv formatpoints: list of points to be drawn.Shape: (nof_points, 3)Format: each point should contain (y, x, confidence)color_palette: name of a matplotlib color paletteDefault: 'tab20'palette_samples: number of different colors sampled from the `color_palette`Default: 16confidence_threshold: only points with a confidence higher than this threshold will be drawn. Range: [0, 1]Default: 0.1Returns:A new image with overlaid points"""circle_size = max(2, int(np.sqrt(np.max(np.max(points, axis=0) - np.min(points, axis=0)) // 16)))for i, pt in enumerate(points):if pt[2] >= confidence_threshold:image = cv2.circle(image, (int(pt[0]), int(pt[1])), circle_size, color[i] ,-1, lineType= cv2.LINE_AA)return imagedef draw_skeleton(image, points, skeleton, color_palette='Set2', palette_samples=8, person_index=0,confidence_threshold=0.1,sk_color=None):"""Draws a `skeleton` on `image`.Args:image: image in opencv formatpoints: list of points to be drawn.Shape: (nof_points, 3)Format: each point should contain (y, x, confidence)skeleton: list of joints to be drawnShape: (nof_joints, 2)Format: each joint should contain (point_a, point_b) where `point_a` and `point_b` are an index in `points`color_palette: name of a matplotlib color paletteDefault: 'Set2'palette_samples: number of different colors sampled from the `color_palette`Default: 8person_index: index of the person in `image`Default: 0confidence_threshold: only points with a confidence higher 
            than this threshold will be drawn. Range: [0, 1]
            Default: 0.1

    Returns:
        A new image with overlaid joints

    """
    canvas = copy.deepcopy(image)
    cur_canvas = canvas.copy()
    for i, joint in enumerate(skeleton):
        pt1, pt2 = points[joint]
        if pt1[2] >= confidence_threshold and pt2[2] >= confidence_threshold:
            length = ((pt1[0] - pt2[0]) ** 2 + (pt1[1] - pt2[1]) ** 2) ** 0.5
            angle = math.degrees(math.atan2(pt1[1] - pt2[1], pt1[0] - pt2[0]))
            polygon = cv2.ellipse2Poly((int(np.mean((pt1[0], pt2[0]))), int(np.mean((pt1[1], pt2[1])))),
                                       (int(length / 2), 2), int(angle), 0, 360, 1)
            cv2.fillConvexPoly(cur_canvas, polygon, sk_color[i], lineType=cv2.LINE_AA)
            # cv2.fillConvexPoly(cur_canvas, polygon, sk_color,lineType=cv2.LINE_AA)
    canvas = cv2.addWeighted(canvas, 0.4, cur_canvas, 0.6, 0)
    return canvas


    def draw_points_and_skeleton(image, points, skeleton, points_color_palette='tab20', points_palette_samples=16,
                                 skeleton_color_palette='Set2', skeleton_palette_samples=8, person_index=0,
                                 confidence_threshold=0.1, color=None, sk_color=None):
        """
        Draws `points` and `skeleton` on `image`.

        Args:
            image: image in opencv format
            points: list of points to be drawn.
                Shape: (nof_points, 3)
                Format: each point should contain (y, x, confidence)
            skeleton: list of joints to be drawn
                Shape: (nof_joints, 2)
                Format: each joint should contain (point_a, point_b) where `point_a` and `point_b` are an index in `points`
            points_color_palette: name of a matplotlib color palette
                Default: 'tab20'
            points_palette_samples: number of different colors sampled from the `color_palette`
                Default: 16
            skeleton_color_palette: name of a matplotlib color palette
                Default: 'Set2'
            skeleton_palette_samples: number of different colors sampled from the `color_palette`
                Default: 8
            person_index: index of the person in `image`
                Default: 0
            confidence_threshold: only points with a confidence higher than this threshold will be drawn. Range: [0, 1]
                Default: 0.1

        Returns:
            A new image with overlaid joints

        """
        colors1 = [[255, 0, 0], [255, 85, 0], [255, 170, 0], [255, 255, 0], [170, 255, 0], [85, 255, 0], [0, 255, 0],
                   [0, 255, 85], [0, 255, 170], [0, 255, 255], [0, 170, 255], [0, 85, 255], [0, 0, 255], [85, 0, 255],
                   [170, 0, 255], [255, 0, 255], [255, 0, 170], [255, 0, 85], [255, 0, 85]]
        image = draw_skeleton(image, points, skeleton, color_palette=skeleton_color_palette,
                              palette_samples=skeleton_palette_samples, person_index=person_index,
                              confidence_threshold=confidence_threshold, sk_color=colors1)
        image = draw_points(image, points, color_palette=points_color_palette, palette_samples=points_palette_samples,
                            confidence_threshold=confidence_threshold, color=colors1)
        return image


    def save_images(images, target, joint_target, output, joint_output, joint_visibility, summary_writer=None, step=0,
                    prefix=''):
        """
        Creates a grid of images with gt joints and a grid with predicted joints.
        This is a basic function for debugging purposes only.

        If summary_writer is not None, the grid will be written in that SummaryWriter with name "{prefix}_images" and
        "{prefix}_predictions".

        Args:
            images (torch.Tensor): a tensor of images with shape (batch x channels x height x width).
            target (torch.Tensor): a tensor of gt heatmaps with shape (batch x channels x height x width).
            joint_target (torch.Tensor): a tensor of gt joints with shape (batch x joints x 2).
            output (torch.Tensor): a tensor of predicted heatmaps with shape (batch x channels x height x width).
            joint_output (torch.Tensor): a tensor of predicted joints with shape (batch x joints x 2).
            joint_visibility (torch.Tensor): a tensor of joint visibility with shape (batch x joints).
            summary_writer (tb.SummaryWriter): a SummaryWriter where write the grids.
                Default: None
            step (int): summary_writer step.
                Default: 0
            prefix (str): summary_writer name prefix.
                Default: ""

        Returns:
            A pair of images which are built from torchvision.utils.make_grid
        """
        # Input images with gt
        images_ok = images.detach().clone()
        images_ok[:, 0].mul_(0.229).add_(0.485)
        images_ok[:, 1].mul_(0.224).add_(0.456)
        images_ok[:, 2].mul_(0.225).add_(0.406)
        for i in range(images.shape[0]):
            joints = joint_target[i] * 4.
            joints_vis = joint_visibility[i]
            for joint, joint_vis in zip(joints, joints_vis):
                if joint_vis[0]:
                    a = int(joint[1].item())
                    b = int(joint[0].item())
                    # images_ok[i][:, a-1:a+1, b-1:b+1] = torch.tensor([1, 0, 0])
                    images_ok[i][0, a - 1:a + 1, b - 1:b + 1] = 1
                    images_ok[i][1:, a - 1:a + 1, b - 1:b + 1] = 0
        grid_gt = torchvision.utils.make_grid(images_ok, nrow=int(images_ok.shape[0] ** 0.5), padding=2, normalize=False)
        if summary_writer is not None:
            summary_writer.add_image(prefix + 'images', grid_gt, global_step=step)

        # Input images with prediction
        images_ok = images.detach().clone()
        images_ok[:, 0].mul_(0.229).add_(0.485)
        images_ok[:, 1].mul_(0.224).add_(0.456)
        images_ok[:, 2].mul_(0.225).add_(0.406)
        for i in range(images.shape[0]):
            joints = joint_output[i] * 4.
            joints_vis = joint_visibility[i]
            for joint, joint_vis in zip(joints, joints_vis):
                if joint_vis[0]:
                    a = int(joint[1].item())
                    b = int(joint[0].item())
                    # images_ok[i][:, a-1:a+1, b-1:b+1] = torch.tensor([1, 0, 0])
                    images_ok[i][0, a - 1:a + 1, b - 1:b + 1] = 1
                    images_ok[i][1:, a - 1:a + 1, b - 1:b + 1] = 0
        grid_pred = torchvision.utils.make_grid(images_ok, nrow=int(images_ok.shape[0] ** 0.5), padding=2, normalize=False)
        if summary_writer is not None:
            summary_writer.add_image(prefix + 'predictions', grid_pred, global_step=step)

        # Heatmaps
        # ToDo
        # for h in range(0,17):
        #     heatmap = torchvision.utils.make_grid(output[h].detach(), nrow=int(np.sqrt(output.shape[0])),
        #                                           padding=2, normalize=True, range=(0, 1))
        #     summary_writer.add_image('train_heatmap_%d' % h, heatmap, global_step=step + epoch*len_dl_train)

        return grid_gt, grid_pred


    def check_video_rotation(filename):
        # thanks to
        # https://stackoverflow.com/questions/53097092/frame-from-video-is-upside-down-after-extracting/55747773#55747773

        # this returns meta-data of the video file in form of a dictionary
        meta_dict = ffmpeg.probe(filename)

        # from the dictionary, meta_dict['streams'][0]['tags']['rotate'] is the key
        # we are looking for
        rotation_code = None
        try:
            if int(meta_dict['streams'][0]['tags']['rotate']) == 90:
                rotation_code = cv2.ROTATE_90_CLOCKWISE
            elif int(meta_dict['streams'][0]['tags']['rotate']) == 180:
                rotation_code = cv2.ROTATE_180
            elif int(meta_dict['streams'][0]['tags']['rotate']) == 270:
                rotation_code = cv2.ROTATE_90_COUNTERCLOCKWISE
            else:
                raise ValueError
        except KeyError:
            pass

        return rotation_code
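To make the geometry in draw_skeleton easier to follow, here is a minimal sketch of the per-limb calculation on its own, using only the standard library. The helper name limb_ellipse and its half_width parameter are made up for illustration; the formulas for length, angle, and center are the ones used in the code above, and the values it returns are what that code passes to cv2.ellipse2Poly.

```python
import math


def limb_ellipse(pt1, pt2, confidence_threshold=0.1, half_width=2):
    """Return (center, axes, angle) for the thin ellipse joining two keypoints.

    Each point is (coord0, coord1, confidence), indexed the same way as in
    draw_skeleton. Returns None when either keypoint is below the threshold,
    which is the case where draw_skeleton draws nothing for that limb.
    """
    if pt1[2] < confidence_threshold or pt2[2] < confidence_threshold:
        return None
    # Euclidean distance between the two keypoints
    length = math.hypot(pt1[0] - pt2[0], pt1[1] - pt2[1])
    # Orientation of the limb in degrees
    angle = math.degrees(math.atan2(pt1[1] - pt2[1], pt1[0] - pt2[0]))
    # Midpoint of the limb, truncated to integer pixel coordinates
    center = (int((pt1[0] + pt2[0]) / 2), int((pt1[1] + pt2[1]) / 2))
    # Half the limb length along the major axis, fixed thickness on the minor axis
    axes = (int(length / 2), half_width)
    return center, axes, int(angle)


print(limb_ellipse((0, 0, 0.9), (0, 10, 0.8)))   # both keypoints confident
print(limb_ellipse((0, 0, 0.05), (0, 10, 0.8)))  # first keypoint too uncertain
```

Raising confidence_threshold makes the skeleton sparser but cleaner, because any limb with one shaky endpoint is skipped entirely.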

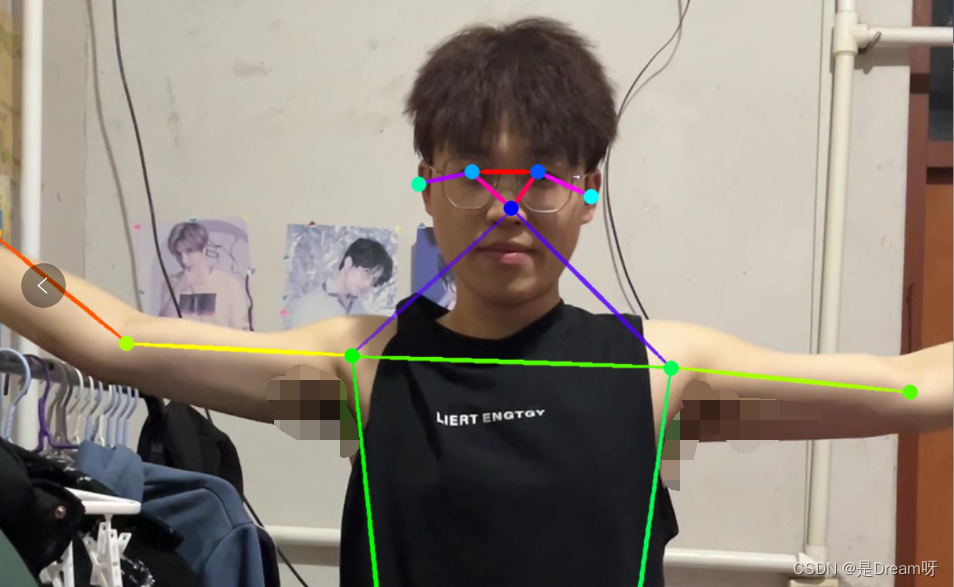

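IV. Results demo

1. Photo demo

Create main.py in the SimDR folder and put the code below into it. You need to change the default value of the parser argument source to the path of your own image: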

    import argparse
    import time
    import os
    import cv2 as cv
    import numpy as np
    from pathlib import Path
    from Point_detect import Points
    from lib.utils.visualization import draw_points_and_skeleton, joints_dict


    def image_detect(opt):
        skeleton = joints_dict()['coco']['skeleton']
        hrnet_model = Points(model_name='hrnet', opt=opt, resolution=(384, 288))  # resolution = (384,288) or (256,192)
        # simdr_model = Points(model_name='simdr', opt=opt,resolution=(256,192)) #resolution = (256,192)
        # sa_simdr_model = Points(model_name='sa-simdr', opt=opt,resolution=(384,288)) #resolution = (384,288) or (256,192)

        img0 = cv.imread(opt.source)
        if img0 is None:  # cv.imread returns None instead of raising when the file cannot be read
            raise FileNotFoundError('cannot read image: {}'.format(opt.source))
        frame = img0.copy()

        # predict
        pred = hrnet_model.predict(img0)
        # pred = simdr_model.predict(frame)
        # pred = sa_simdr_model.predict(frame)

        # vis: draw keypoints and skeleton for every detected person
        for i, pt in enumerate(pred):
            frame = draw_points_and_skeleton(frame, pt, skeleton)

        # save
        cv.imwrite('test_result.jpg', frame)


    def video_detect(opt):
        hrnet_model = Points(model_name='hrnet', opt=opt, resolution=(384, 288))  # resolution = (384,288) or (256,192)
        # simdr_model = Points(model_name='simdr', opt=opt,resolution=(256,192)) #resolution = (256,192)
        # sa_simdr_model = Points(model_name='sa-simdr', opt=opt,resolution=(384,288)) #resolution = (384,288) or (256,192)
        skeleton = joints_dict()['coco']['skeleton']

        cap = cv.VideoCapture(opt.source)
        # cap = cv.VideoCapture(0)
        if opt.save_video:
            fourcc = cv.VideoWriter_fourcc(*'MJPG')
            out = cv.VideoWriter('data/runs/{}_out.avi'.format(os.path.basename(opt.source).split('.')[0]), fourcc, 24,
                                 (int(cap.get(3)), int(cap.get(4))))

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:
                break
            pred = hrnet_model.predict(frame)
            # pred = simdr_model.predict(frame)
            # pred = sa_simdr_model.predict(frame)
            for pt in pred:
                frame = draw_points_and_skeleton(frame, pt, skeleton)
            if opt.show:
                cv.imshow('result', frame)
            if opt.save_video:
                out.write(frame)
            if cv.waitKey(1) == 27:  # Esc quits
                break

        if opt.save_video:  # `out` only exists when --save_video was given
            out.release()
        cap.release()
        cv.destroyAllWindows()


    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        # raw string, so the backslashes in the Windows path are not read as escape sequences
        parser.add_argument('--source', type=str, default=r'D:\PycharmProjects\SimDR\yolov5\data\images\1234.jpg',
                            help='source')  # file/folder, 0 for webcam
        parser.add_argument('--detect_weight', type=str, default="./yolov5/weights/yolov5x.pt",
                            help='e.g "./yolov5/weights/yolov5x.pt"')
        parser.add_argument('--save_video', action='store_true', default=False, help='save results to *.avi')
        parser.add_argument('--show', action='store_true', default=True, help='show results in a window')
        parser.add_argument('--device', default='cpu', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
        parser.add_argument('--conf-thres', type=float, default=0.25, help='object confidence threshold')
        parser.add_argument('--iou-thres', type=float, default=0.45, help='IOU threshold for NMS')
        parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --class 0, or --class 0 2 3')
        parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
        parser.add_argument('--augment', action='store_true', help='augmented inference')
        opt = parser.parse_args()
        image_detect(opt)
        # video_detect(opt)
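Editing the default of source works, but the same value can also be passed on the command line. The sketch below is a trimmed-down parser that shows the idea; it is not the full option list from main.py. The --no-show flag and the relative path data/images/1234.jpg are my own additions for illustration. The separate flag is there because an option declared with store_true and default=True can never be switched off.

```python
import argparse


def build_parser():
    """A reduced version of the main.py parser, for illustration only."""
    parser = argparse.ArgumentParser(description='pose demo options (sketch)')
    parser.add_argument('--source', type=str, default='0',
                        help='image or video path, or 0 for webcam')
    parser.add_argument('--save_video', action='store_true',
                        help='save results to *.avi')
    # store_false makes `show` default to True and lets --no-show turn it off
    parser.add_argument('--no-show', dest='show', action='store_false',
                        help='do not open a preview window')
    return parser


# Equivalent to: python main.py --source data/images/1234.jpg --no-show
opt = build_parser().parse_args(['--source', 'data/images/1234.jpg', '--no-show'])
print(opt.source, opt.save_video, opt.show)
```

With this layout the script itself stays untouched when you switch between pictures and videos.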

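Result:

PS: I just grabbed a random picture, but on second glance this guy is rather handsome. Who is he? Oh, it is the CSDN blogger 是Dream呀. I hear he gives away free books every week. Having said that much, a follow and a like is not too much to ask, is it? I have already done both (^^)

![[image]](https://img-blog.csdnimg.cn/direct/555b92b5f7d74ff18ec9b35c02c78013.png)

2. Video demo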

The imports and the image_detect and video_detect functions are identical to main.py in the photo demo above. Only the entry block changes: the default of source now points to a video file, and video_detect(opt) is called instead of image_detect(opt):

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        # raw string, so the backslashes in the Windows path are not read as escape sequences
        parser.add_argument('--source', type=str, default=r'D:\PycharmProjects\SimDR\yolov5\data\images\12345.mp4',
                            help='source')  # file/folder, 0 for webcam
        parser.add_argument('--detect_weight', type=str, default="./yolov5/weights/yolov5x.pt",
                            help='e.g "./yolov5/weights/yolov5x.pt"')
        parser.add_argument('--save_video', action='store_true', default=False, help='save results to *.avi')
        parser.add_argument('--show', action='store_true', default=True, help='show results in a window')
        parser.add_argument('--device', default='cpu', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
        parser.add_argument('--conf-thres', type=float, default=0.25, help='object confidence threshold')
        parser.add_argument('--iou-thres', type=float, default=0.45, help='IOU threshold for NMS')
        parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --class 0, or --class 0 2 3')
        parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
        parser.add_argument('--augment', action='store_true', help='augmented inference')
        opt = parser.parse_args()
        # image_detect(opt)
        video_detect(opt)
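One thing to watch with --save_video: video_detect writes to data/runs/, and as far as I know cv.VideoWriter does not create a missing folder for you, so the file can end up silently missing. Below is a small sketch of building the output path and creating the folder first. The helper name make_output_path is made up. Note also that it keeps everything before the last dot of the file name, whereas the split('.')[0] in video_detect keeps only the part before the first dot.

```python
from pathlib import Path, PureWindowsPath


def make_output_path(source, out_dir='data/runs'):
    """Return '<out_dir>/<source stem>_out.avi', creating out_dir if needed."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    # PureWindowsPath accepts both '\\' and '/' as separators, so the Windows
    # style default path from main.py is split correctly on any platform
    stem = PureWindowsPath(str(source)).stem
    return out_dir / '{}_out.avi'.format(stem)


print(make_output_path(r'D:\PycharmProjects\SimDR\yolov5\data\images\12345.mp4'))
```

The returned path can then be handed to cv.VideoWriter as str(path) in place of the formatted string.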

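Result:

This time the blogger 是Dream呀 really has sacrificed himself for art, oh wait, for science. Surely a follow and a like is not too much to ask now~

![[image]](https://img-blog.csdnimg.cn/direct/6d2b5ac9f637451b84a2e4ea7ce8397a.png)

3. Real-time demo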

The imports and the image_detect function are again identical to main.py in the photo demo above. There are two changes. First, in video_detect the capture is opened on the webcam (device 0) instead of on opt.source:

    # cap = cv.VideoCapture(opt.source)
    cap = cv.VideoCapture(0)

Second, in the entry block the source argument is commented out and video_detect(opt) is called:

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        # --source is not needed for the webcam. Note that video_detect still reads
        # opt.source for the output file name when --save_video is given, so keep
        # the argument defined if you want to save the result.
        # parser.add_argument('--source', type=str, default=r'D:\PycharmProjects\SimDR\yolov5\data\images\1234.jpg',
        #                     help='source')  # file/folder, 0 for webcam
        parser.add_argument('--detect_weight', type=str, default="./yolov5/weights/yolov5x.pt",
                            help='e.g "./yolov5/weights/yolov5x.pt"')
        parser.add_argument('--save_video', action='store_true', default=False, help='save results to *.avi')
        parser.add_argument('--show', action='store_true', default=True, help='show results in a window')
        parser.add_argument('--device', default='cpu', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
        parser.add_argument('--conf-thres', type=float, default=0.25, help='object confidence threshold')
        parser.add_argument('--iou-thres', type=float, default=0.45, help='IOU threshold for NMS')
        parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --class 0, or --class 0 2 3')
        parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')
        parser.add_argument('--augment', action='store_true', help='augmented inference')
        opt = parser.parse_args()
        # image_detect(opt)
        video_detect(opt)
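The three demos differ only in what source is and which function gets called, so the choice could also be made from the value of source itself. This is only a sketch under my own assumptions: the function name classify_source and the two extension sets are hypothetical and not part of the original project.

```python
from pathlib import Path

# Assumed extension lists for illustration; extend them to match your own files
VIDEO_EXTS = {'.mp4', '.avi', '.mov', '.mkv'}
IMAGE_EXTS = {'.jpg', '.jpeg', '.png', '.bmp'}


def classify_source(source):
    """Map a --source value to 'webcam', 'video' or 'image'."""
    s = str(source).strip()
    if s.isdigit():  # '0', '1', ... are camera indices
        return 'webcam'
    ext = Path(s).suffix.lower()
    if ext in VIDEO_EXTS:
        return 'video'
    if ext in IMAGE_EXTS:
        return 'image'
    raise ValueError('unsupported source: {!r}'.format(source))


for example in ('0', 'clip.MP4', r'D:\PycharmProjects\SimDR\yolov5\data\images\1234.jpg'):
    print(example, '->', classify_source(example))
```

In main.py the result could decide between image_detect(opt) and video_detect(opt), with cv.VideoCapture(int(opt.source)) used for the webcam case.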

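V. Error analysis

This section records every error I ran into myself, plus some that you are likely to meet:

1. Path problems

The code in this article is run in PyCharm. Adding the yolov5 project means that some folders share the same name, which confuses PyCharm, so some packages may not be found and you get an error like this:

NameError: name 'Yolov5' is not defined

Solution: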

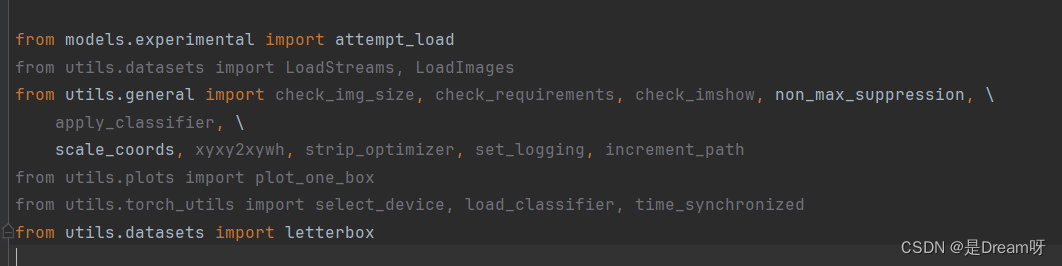

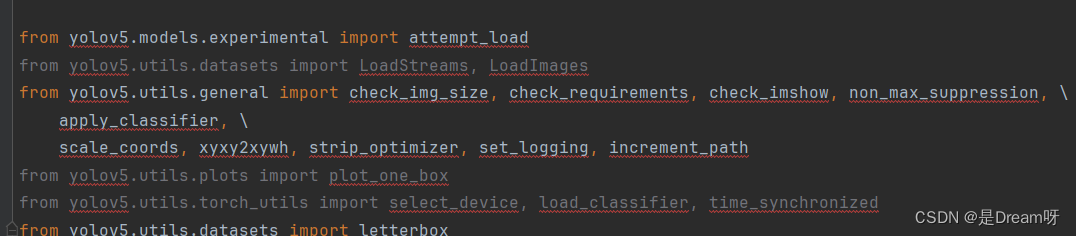

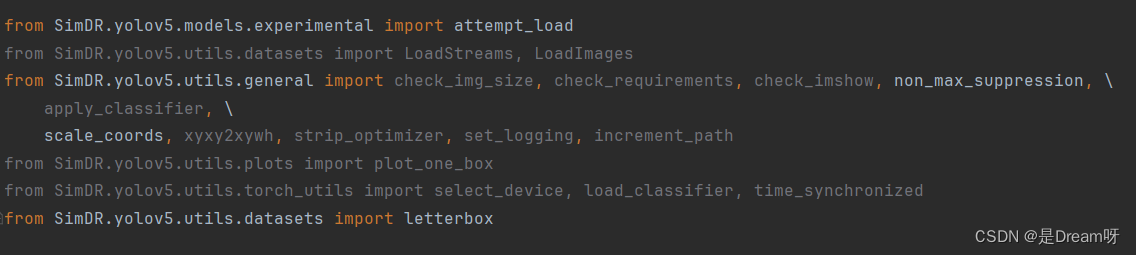

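Here we can first run the YOLOv5.py script and fix the imports according to the error messages. For example, for the imports at the top of YOLOv5.py, some people get no error with this:

while most people need to add one more level in front:

and some people need to write out the full path for everything. I am one of them, and it drove me up the wall!

Stupid computer, once I have earned enough money I am replacing you. This really wound me up: I spent nearly two hours on this step and the imports simply would not resolve. If your error message says that XXX does not exist, the import has most likely failed; go through each failing line and fix its path.

Errors here are completely normal, because with so many files it is easy to slip up. If you want to be safe, do what I did and write every path out in full, so that the lookup cannot go wrong. And if it still fails? Then start over from scratch…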

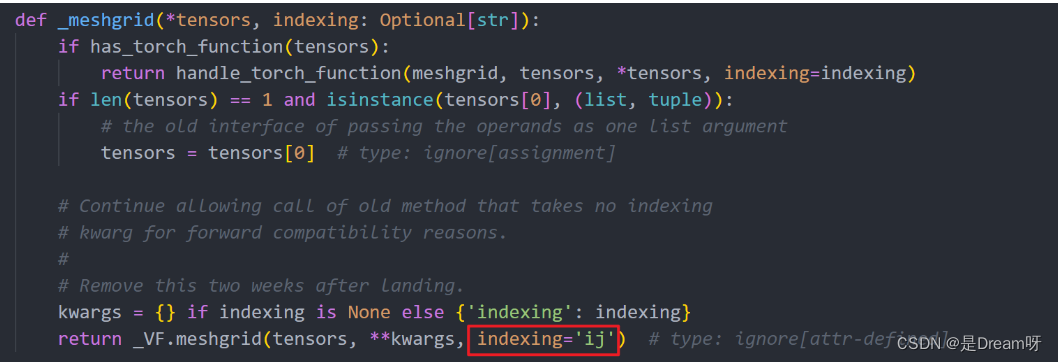
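If you would rather not rewrite every import, another option is to put the project folders on sys.path before the imports run. The sketch below is a generic helper and not what the original project does; the name add_import_roots is made up, and the yolov5 subfolder is assumed to sit directly inside SimDR, as in the clone step at the start of this article. It does not help when two packages really have the same name, in which case spelling out the full path as described above is still the way to go.

```python
import sys
from pathlib import Path


def add_import_roots(project_root, subdirs=('yolov5',)):
    """Prepend the project root and the given subfolders to sys.path.

    Returns the list of paths that were actually added, so calling it
    twice does not create duplicate entries.
    """
    root = Path(project_root).resolve()
    added = []
    for path in [root] + [root / sub for sub in subdirs]:
        entry = str(path)
        if path.is_dir() and entry not in sys.path:
            sys.path.insert(0, entry)
            added.append(entry)
    return added


# Typical use at the very top of main.py, before the project imports:
# add_import_roots(Path(__file__).parent)
```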

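2. YOLOv5 / YOLOvx warning during training: functional.py

When running the program, the following warning appears:

…pytorch\lib\site-packages\torch\functional.py:478: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at C:\actions-runner_work\pytorch\pytorch\builder\windows\pytorch\aten\src\ATen\native\TensorShape.cpp:2895.)

and so on, a long string of text that boils down to a problem in functional.py. The warning does not affect the program, but it is annoying to look at!

Solution:

Click the location shown in the warning to jump to functional.py, find the line that triggers it, and add indexing='ij', as shown in the figure below: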

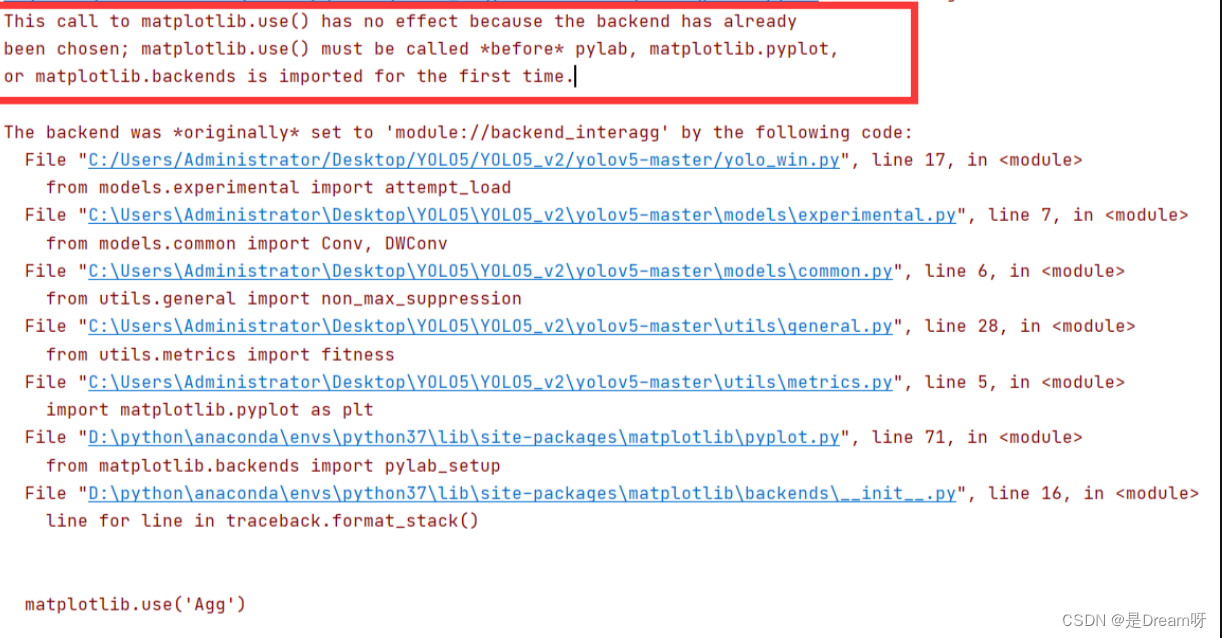
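Editing a file inside site-packages gets overwritten the next time PyTorch is reinstalled. Since the warning is harmless, a gentler alternative is to hide just this one message with Python's standard warnings module. This is a sketch on the assumption that the message starts with "torch.meshgrid", as in the output quoted above; the function name silence_meshgrid_warning is my own.

```python
import warnings


def silence_meshgrid_warning():
    """Hide only the torch.meshgrid 'indexing argument' UserWarning."""
    warnings.filterwarnings(
        'ignore',
        message=r'torch\.meshgrid',  # regex matched against the start of the message
        category=UserWarning,
    )


# Call this once near the top of main.py, before the model is built
silence_meshgrid_warning()
```

Other warnings are left alone, so real problems still show up in the console.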

3. matplotlib.use('Agg')  # for writing to files only

This error appears:

Solution:

Change: matplotlib.use('Agg')

to: plt.switch_backend('agg')

4. AttributeError: 'Upsample' object has no attribute 'recompute_scale_factor'

When training with yolov5, you may see this error:

AttributeError: 'Upsample' object has no attribute 'recompute_scale_factor'

In other words, the Upsample object does not have an attribute called recompute_scale_factor.

Following the hint in the traceback, open the .py file it points to and find the forward() function:

    def forward(self, input: Tensor) -> Tensor:
        return F.interpolate(input, self.size, self.scale_factor, self.mode, self.align_corners,
                             recompute_scale_factor=self.recompute_scale_factor)

Comment it out and use the code below instead. Comment it out rather than delete it, because other projects may need the original and you will want to change it back later:

    def forward(self, input: Tensor) -> Tensor:
        return F.interpolate(input, self.size, self.scale_factor, self.mode, self.align_corners)
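A way to get the same effect without touching the PyTorch source is to add the missing attribute to the Upsample layers of the model after loading it. The sketch below assumes the model is a torch.nn.Module, whose modules() method yields every sub-layer; to keep the snippet runnable without PyTorch it matches layers by class name, and the function name patch_upsample is made up.

```python
def patch_upsample(model):
    """Add recompute_scale_factor=None to Upsample layers that lack it.

    `model` is assumed to behave like torch.nn.Module, i.e. model.modules()
    iterates over all sub-layers. Returns the number of layers patched.
    """
    patched = 0
    for module in model.modules():
        is_upsample = type(module).__name__ == 'Upsample'
        if is_upsample and not hasattr(module, 'recompute_scale_factor'):
            # None is the value F.interpolate treats as "not set"
            module.recompute_scale_factor = None
            patched += 1
    return patched
```

Called once on the detector right after the weights are loaded, this leaves forward() in the installed library untouched, so nothing has to be changed back for other projects.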

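5. 'gbk' codec can't decode byte

Running the program raises this error:

UnicodeDecodeError: 'gbk' codec can't decode byte

The references I found say it is a utf-8 encoding problem, but utf-8 was already set and the error still appeared.

Solution:

It is almost always a problem with how a file is being read.

Follow the traceback to the failing section. Sometimes it is hard to find; if you really cannot locate it, search the failing program for code like this:

with open(file) as f:

That is the culprit. Change it to:

with open(file, 'r', encoding='utf-8') as f:

and the problem is solved.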
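The reason this works: when no encoding is given, open() falls back to the system's locale encoding, which on a Chinese Windows machine is typically GBK, so a UTF-8 file with non-ASCII characters cannot be decoded. Here is a small self-contained sketch of the fix; the helper name read_text_utf8 and the file name demo.yaml are only for illustration.

```python
import os
import tempfile


def read_text_utf8(path):
    """Read a text file as UTF-8 regardless of the system locale."""
    with open(path, 'r', encoding='utf-8') as f:
        return f.read()


# Write a UTF-8 file containing non-ASCII text, then read it back
demo_path = os.path.join(tempfile.mkdtemp(), 'demo.yaml')
with open(demo_path, 'w', encoding='utf-8') as f:
    f.write('name: 姿态估计\n')

print(read_text_utf8(demo_path))
```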

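VI. Summary

This article is aimed at engineering use and does not cover training. Both HRNet and SimDR work in two stages, object detection first and pose estimation second, with yolov5 supplying the human bounding boxes. The article is long and consists mostly of code. At the end I also showed the demo code for photos, video, and the webcam, and shared the errors I ran into. All in all it was a very worthwhile exercise!

This article runs everything on the CPU; you can also run it on a GPU for faster inference. Later I plan to train new models for action recognition on top of this code, and I will share any progress as it comes. Give it a try yourselves, since practice is the best teacher! Special thanks to 围白的尾巴 for the code and ideas; this article builds on and extends that work.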
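As a reminder of how the two stages connect, here is a minimal sketch of the hand-over between them: keeping only confident person boxes from the detector before they go to the pose model. It assumes each detection is a row of (x1, y1, x2, y2, confidence, class), and that class 0 is person as in COCO. The function name select_person_boxes is made up, and this is not the code used inside the project.

```python
def select_person_boxes(detections, conf_thres=0.25, person_class=0):
    """Keep boxes of the person class whose confidence reaches conf_thres.

    `detections` is an iterable of (x1, y1, x2, y2, conf, cls) rows.
    Returns integer pixel boxes ready for cropping.
    """
    boxes = []
    for x1, y1, x2, y2, conf, cls in detections:
        if int(cls) == person_class and conf >= conf_thres:
            boxes.append((int(x1), int(y1), int(x2), int(y2)))
    return boxes


sample = [
    (10.2, 20.7, 110.0, 220.9, 0.91, 0),   # confident person
    (5.0, 5.0, 50.0, 50.0, 0.10, 0),       # person, but below the threshold
    (30.0, 40.0, 90.0, 120.0, 0.88, 16),   # some other class
]
print(select_person_boxes(sample))
```

The default conf_thres of 0.25 mirrors the --conf-thres default in main.py; raising it drops more uncertain detections before any keypoints are estimated.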

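Original project files:

Since many readers simply do not have the time to set up the parameters and weights themselves, I am sharing my own project files here. You only need to change the paths to your own:

YOLOv5 pose estimation: complete project files for real-time human keypoint detection with HRNet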

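Free book giveaway: 《Python OpenCV从入门到精通》 (Python OpenCV: From Beginner to Mastery)

《Python OpenCV从入门到精通》 follows one main thread, processing images with OpenCV in a Python development environment, and gives a full tour of the image-processing methods that OpenCV provides. The book has 16 chapters: Python and OpenCV, setting up the development environment, basic image operations, pixel operations, color spaces and channels, drawing shapes and text, geometric transformations, thresholding, image arithmetic, template matching, filters, erosion and dilation, shape detection, video processing, face detection and face recognition, and the MR intelligent video check-in system. The book is richly illustrated and shows the difference between processed and original images directly. When explaining an OpenCV method it lists the required and optional parameters, so readers can pick up the syntax quickly. The final chapter uses the MR intelligent video check-in system as a case study that guides readers through applying OpenCV to real problems at work. The book concentrates on image processing itself and leaves out the implementation details of the algorithms as far as possible, which makes it easier to read and helps readers get started faster.

How the draw works: 3 readers will be picked at random from the comment section and receive the book for free!

How to enter: follow the blogger, like, bookmark, and comment "人生苦短,我用Python!" ("Life is short, I use Python!"). Remember to like and bookmark, otherwise the entry is invalid. Each person may comment at most three times.

Deadline: 2024-1-22 20:00:00

Dangdang: purchase link

JD.com: purchase link

😄😄😄 Winners: the list will be announced in the comment section and by private message when the next giveaway starts. Winners, please send your details within three days 😄😄😄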
