I. node-exporter

node_exporter: a metrics collector for monitoring Linux systems. Here it is used to monitor Linux machines that are not part of the k8s cluster.

GitHub - prometheus/node_exporter: Exporter for machine metrics

Common metrics:

- CPU
- Memory
- Disk
- Network traffic
- File descriptors
- System load
- System services

Metrics endpoint: http://IP:9100/metrics

Method 1: install on a Linux host

```bash
# Download the package (x86_64 hosts)
wget --no-check-certificate http://foreman.chinamcloud.com:8080/source/node_exporter-1.6.1.linux-amd64.tar.gz

# Create a dedicated user without a login shell
useradd prometheus -s /sbin/nologin

# Extract (x86_64 hosts)
tar zxvf node_exporter-1.6.1.linux-amd64.tar.gz -C /tmp/

# Move into place (x86_64 hosts)
mv /tmp/node_exporter-1.6.1.linux-amd64 /usr/local/node_exporter

# Give the prometheus user ownership of the directory
chown prometheus:prometheus -R /usr/local/node_exporter

# Wrap it as a systemd service
tee /etc/systemd/system/node-exporter.service <<-'EOF'
[Unit]
Description=Prometheus Node Exporter
After=network.target

[Service]
ExecStart=/usr/local/node_exporter/node_exporter
User=prometheus

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable node-exporter
systemctl start node-exporter
```

To verify, open http://ip:9100/metrics.

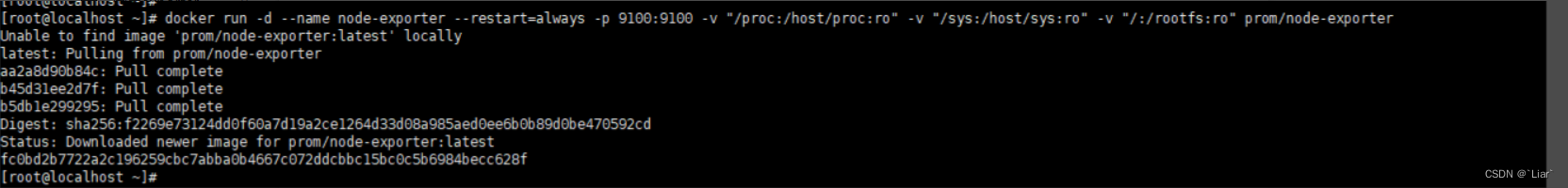
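Besides the browser check, the endpoint can be scripted. The following is a minimal Python sketch that uses only the standard library. It assumes the plain-text exposition format that node_exporter serves at http://IP:9100/metrics. The sketch parses the sample lines and computes memory usage the same way as the memory alert below, (1 - MemAvailable / MemTotal) * 100. The script name and the 5-second timeout are illustrative choices, not part of the original setup.

```python
from __future__ import annotations

import sys
import urllib.request


def parse_metrics(text: str) -> dict[str, float]:
    """Map 'name{labels}' -> value for every sample line in exposition text."""
    samples: dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        # The value is the last space-separated field; label values may contain
        # spaces. node_exporter does not append timestamps, so none are handled.
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)  # float() also accepts NaN, +Inf, -Inf
    return samples


def memory_usage_percent(samples: dict[str, float]) -> float:
    """Same formula as the memory alert: (1 - MemAvailable / MemTotal) * 100."""
    available = samples["node_memory_MemAvailable_bytes"]
    total = samples["node_memory_MemTotal_bytes"]
    return (1 - available / total) * 100


def fetch(url: str, timeout: float = 5.0) -> str:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python check_node.py http://IP:9100/metrics
    usage = memory_usage_percent(parse_metrics(fetch(sys.argv[1])))
    print(f"memory usage: {usage:.1f}%")
```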

Method 2: run as a Docker container

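```bash
# The --path.* flags make node_exporter read the host directories mounted under
# /host and /rootfs; without them it reads the container's own /proc and /sys
docker run -d --name node-exporter --restart=always -p 9100:9100 \
  -v "/proc:/host/proc:ro" -v "/sys:/host/sys:ro" -v "/:/rootfs:ro" \
  prom/node-exporter \
  --path.procfs=/host/proc --path.sysfs=/host/sys --path.rootfs=/rootfs
```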

Configure Prometheus to scrape the Linux metrics

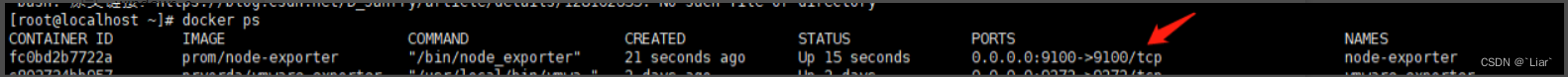

In the ConfigMap, add a new job to Prometheus.yaml:

```yaml
- job_name: linux-exporter
  static_configs:
    - targets:
        - 192.168.1.1:9100
        - 192.168.1.2:9100
        - 192.168.1.3:9100
        - x.x.x.x:9100
```

Add the IP of each Linux host to the configuration file.
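When there are many hosts, the job can be generated instead of edited by hand. The following is a small Python sketch that uses only the standard library; the function name is illustrative. It validates each IP and emits the same structure. Passing `port=9182` gives the windows-exporter variant used later.

```python
from __future__ import annotations

import ipaddress


def static_job(job_name: str, hosts: list[str], port: int = 9100) -> str:
    """Render a static_configs scrape job like the one above as YAML text."""
    lines = [f"- job_name: {job_name}", "  static_configs:", "    - targets:"]
    for host in hosts:
        addr = ipaddress.ip_address(host)  # raises ValueError on a malformed IP
        # IPv6 addresses must be bracketed in host:port form
        target = f"[{host}]:{port}" if addr.version == 6 else f"{host}:{port}"
        lines.append(f"        - {target}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(static_job("linux-exporter", ["192.168.1.1", "192.168.1.2", "192.168.1.3"]), end="")
```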

Common alert expressions (replace `<project-tag>` with the project's value of the `environment` label)

Host memory usage > 90%

```promql
(1 - (node_memory_MemAvailable_bytes{environment=~"<project-tag>"} / node_memory_MemTotal_bytes{environment=~"<project-tag>"})) * 100 > 90
```

Host CPU usage > 90%

```promql
(1 - avg by (instance) (rate(node_cpu_seconds_total{environment=~"<project-tag>",mode="idle"}[5m]))) * 100 > 90
```

Host NTP time offset [5m] > 5s

```promql
abs(node_timex_offset_seconds{environment=~"<project-tag>"}) > 5
```

Host disk usage > 80%

```promql
(node_filesystem_size_bytes{environment=~"<project-tag>",fstype=~"ext.*|xfs",mountpoint!~".*pod.*"} - node_filesystem_free_bytes{environment=~"<project-tag>",fstype=~"ext.*|xfs",mountpoint!~".*pod.*"}) * 100 / (node_filesystem_avail_bytes{environment=~"<project-tag>",fstype=~"ext.*|xfs",mountpoint!~".*pod.*"} + (node_filesystem_size_bytes{environment=~"<project-tag>",fstype=~"ext.*|xfs",mountpoint!~".*pod.*"} - node_filesystem_free_bytes{environment=~"<project-tag>",fstype=~"ext.*|xfs",mountpoint!~".*pod.*"})) > 80
```

Host inode usage [5m] > 80%

```promql
(1 - node_filesystem_files_free{environment=~"<project-tag>",fstype=~"ext.?|xfs"} / node_filesystem_files{environment=~"<project-tag>",fstype=~"ext.?|xfs"}) * 100 > 80
```

II. windows-exporter

GitHub - prometheus-community/windows_exporter: Prometheus exporter for Windows machines

Installing windows-exporter

1. Download the installer

windows_exporter-0.22.0-amd64.msi

2. Double-click the .msi file to install it.

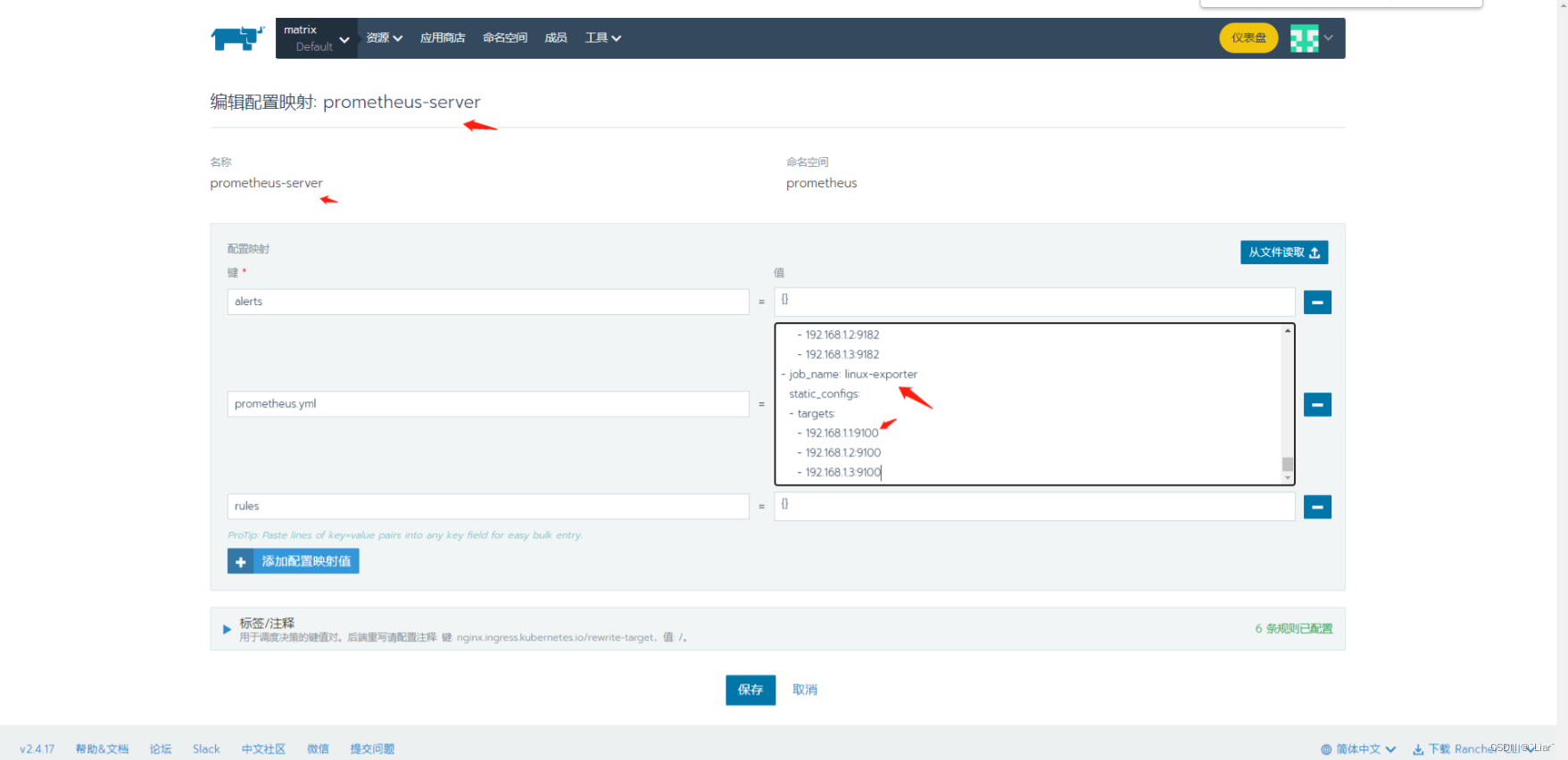

After installation, open Task Manager: a windows_exporter service is listed under Services.

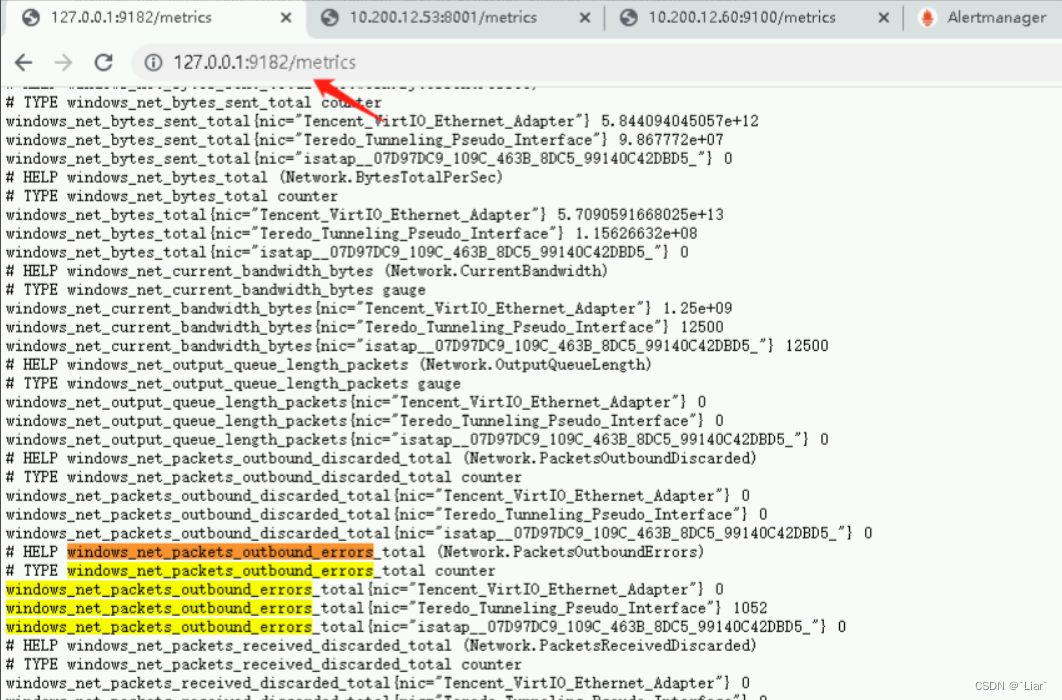

3. Open 127.0.0.1:9182/metrics to see the exported metrics.
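The metrics page can also be read by a script. The following is a minimal Python sketch. It assumes the default `/metrics` path on port 9182 and the `windows_logical_disk_*` metrics from the logical_disk collector. It computes per-volume disk usage with the same formula as the disk expression under Common metrics later in this section. The regex-based parser is a simplification, not a full exposition-format parser; for example, it does not handle `}` inside label values.

```python
from __future__ import annotations

import re
import sys
import urllib.request

# name{labels} value  (simplified: assumes no '}' inside label values)
SAMPLE_RE = re.compile(r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)")
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def disk_usage_by_volume(text: str) -> dict[str, float]:
    """Per-volume usage %, as in 100 - (free_bytes / size_bytes) * 100."""
    free: dict[str, float] = {}
    size: dict[str, float] = {}
    for line in text.splitlines():
        m = SAMPLE_RE.match(line.strip())
        if not m:
            continue  # comments and blank lines do not match
        labels = dict(LABEL_RE.findall(m.group("labels") or ""))
        volume = labels.get("volume")
        if volume is None:
            continue
        if m.group("name") == "windows_logical_disk_free_bytes":
            free[volume] = float(m.group("value"))
        elif m.group("name") == "windows_logical_disk_size_bytes":
            size[volume] = float(m.group("value"))
    # Skip volumes that report size 0 to avoid dividing by zero
    return {v: 100 - free[v] / size[v] * 100 for v in size if v in free and size[v] > 0}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python check_disk.py http://127.0.0.1:9182/metrics
    with urllib.request.urlopen(sys.argv[1], timeout=5) as resp:
        for vol, pct in sorted(disk_usage_by_volume(resp.read().decode("utf-8")).items()):
            print(f"{vol}: {pct:.1f}%")
```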

Configure Prometheus to scrape the Windows metrics

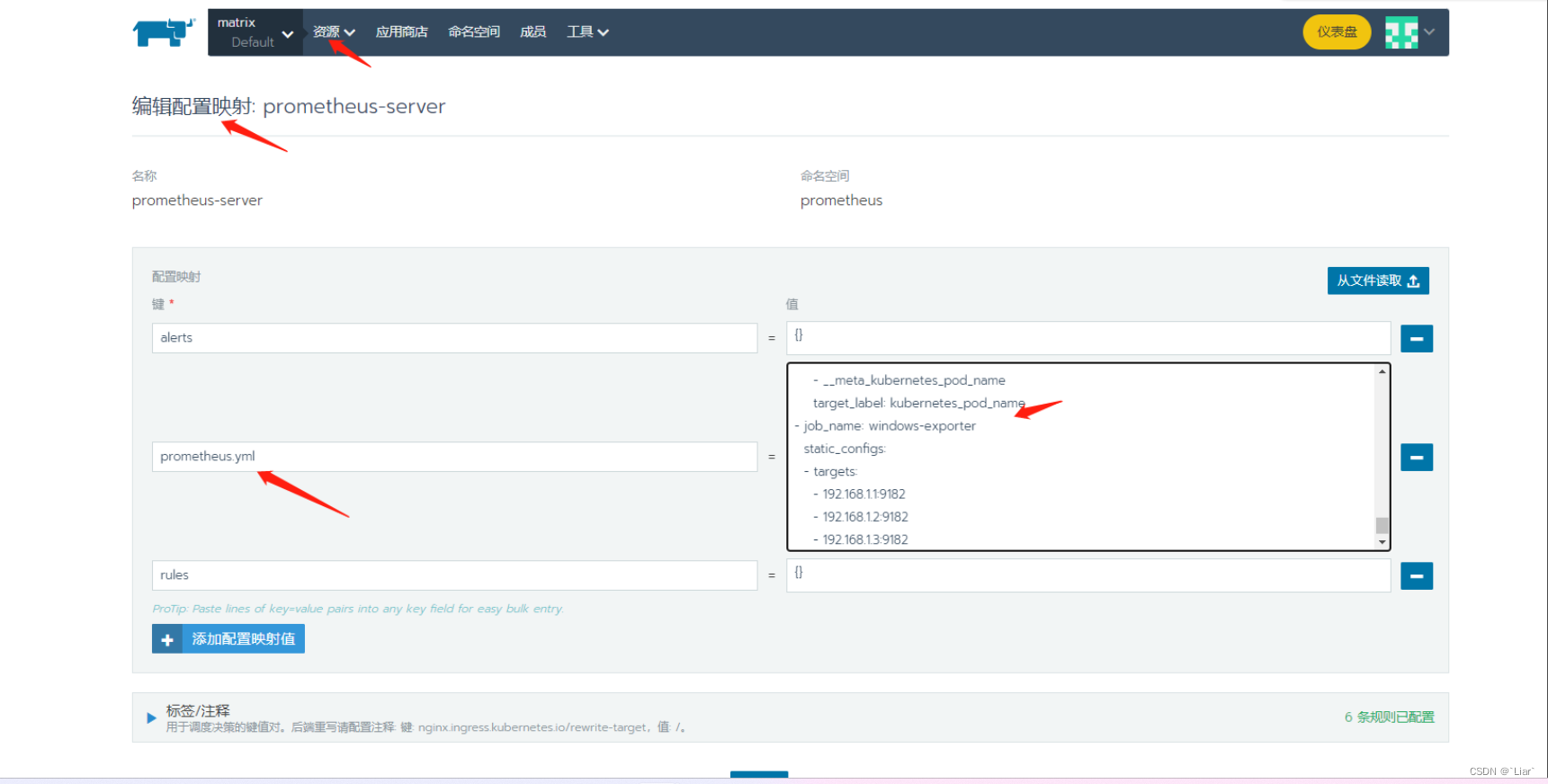

In the ConfigMap, add a new job to Prometheus.yaml:

```yaml
- job_name: windows-exporter
  static_configs:
    - targets:
        - 192.168.1.1:9182
        - 192.168.1.2:9182
        - 192.168.1.3:9182
        - x.x.x.x:9182
```

Add the IP of each Windows host to the configuration file.


Note

By default, only the cpu, cs, logical_disk, net, os, service, system and textfile collectors are enabled. To enable others, configure `--collectors.enabled`:

1. Create the file C:\Program Files\windows_exporter\config.yml:

```yaml
collectors:
  enabled: "[defaults],process,cpu_info,memory,remote_fx,tcp"
collector:
  service:
    services-where: "Name='windows_exporter'"
log:
  level: warn
```

2. Change the startup parameters of the windows_exporter service so that it loads the config file:

```cmd
sc config windows_exporter binPath= "\"C:\Program Files\windows_exporter\windows_exporter.exe\" --config.file=\"C:\Program Files\windows_exporter\config.yml\" "
```

3. Restart the windows_exporter service:

```cmd
sc stop windows_exporter
sc start windows_exporter
```

One-click installation of windows_exporter with a PowerShell script (windows_exporter.ps1)

```powershell
# Download the installer to the temp directory
Set-Location -Path $Env:TEMP
(New-Object Net.WebClient).DownloadFile('http://foreman.chinamcloud.com:8080/source/windows_exporter-0.23.1-amd64.msi', $ExecutionContext.SessionState.Path.GetUnresolvedProviderPathFromPSPath('./windows_exporter-0.23.1-amd64.msi'))

# Run the installer and wait for it to finish, so the install directory exists before config.yml is written
Start-Process ./windows_exporter-0.23.1-amd64.msi -Wait

# Write config.yml (Set-Content overwrites, so re-running the script does not duplicate entries)
$config = @"
collectors:
  enabled: "[defaults],process,cpu_info,memory,remote_fx,tcp"
collector:
  service:
    services-where: "Name='windows_exporter'"
log:
  level: warn
"@
Set-Content -Path "C:\Program Files\windows_exporter\config.yml" -Value $config

# Point the service at the config file, then restart it (Restart-Service waits for the stop to complete)
sc.exe config "windows_exporter" binpath= """""""C:\Program Files\windows_exporter\windows_exporter.exe"""""" --config.file=""""""C:\Program Files\windows_exporter\config.yml"""""""
Restart-Service -Name windows_exporter
sleep 10
```

Common metrics

(`$job`, `$instance` and `$environment` are Grafana dashboard variables.)

Host CPU usage

```promql
100 - avg(irate(windows_cpu_time_total{job=~"$job",instance=~"$instance",mode="idle"}[5m])) * 100
```

Host memory usage

```promql
100 - 100 * (windows_os_physical_memory_free_bytes{job=~"$job"} / windows_cs_physical_memory_bytes{job=~"$job"})
```

Host disk usage

```promql
100 - (windows_logical_disk_free_bytes / windows_logical_disk_size_bytes) * 100
```

Handle count of MPC-related processes

```promql
windows_process_handles{environment=~"$environment",job="windows-exporter",process=~'MPC.*|cloudia.*|APPBaseInTT.*|AppBaseInTT.*'}
```

III. blackbox-exporter

GitHub - prometheus/blackbox_exporter: Blackbox prober exporter

Blackbox Exporter is the official black-box monitoring solution from the Prometheus community. It probes network endpoints over HTTP, HTTPS, DNS, TCP and ICMP.

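Use cases

- HTTP probes
  - Define request headers; check the HTTP status, response headers and body content
- TCP probes
  - Check that business component ports are listening; define and check application-layer protocols
- ICMP probes
  - Host liveness checks
- POST probes
  - API connectivity
- SSL certificate expiry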
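To make the TCP use case concrete: a tcp probe with no extra settings essentially checks whether a TCP connection can be opened within the timeout. The Python sketch below is an illustrative analogue written for this note, not blackbox_exporter code. It returns a success flag and the elapsed time, the two values that `probe_success` and `probe_duration_seconds` report for every probe.

```python
from __future__ import annotations

import socket
import sys
import time


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> tuple[bool, float]:
    """Return (success, seconds taken), loosely like probe_success / probe_duration_seconds."""
    start = time.monotonic()
    try:
        # Success means the TCP handshake completed within the timeout; nothing is sent.
        with socket.create_connection((host, port), timeout=timeout):
            ok = True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        ok = False
    return ok, time.monotonic() - start


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python tcp_probe.py mysql.server:3306 redis.server:6379
    for target in sys.argv[1:]:
        host, _, port = target.rpartition(":")
        print(target, tcp_probe(host, int(port)))
```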

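When running Blackbox Exporter, you supply the probe configuration. This can include custom HTTP headers, TLS settings the probe needs, or the probe's own validation behavior. In Blackbox Exporter each probe configuration is called a module, and modules are supplied to the exporter as a YAML file. Each module mainly contains the probe type (prober), the timeout, and the probe-specific settings: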

```yaml
# Probe type: http, tcp, dns, icmp
prober: <prober_string>

# Timeout
[ timeout: <duration> ]

# Probe-specific settings; configure at most one of these
[ http: <http_probe> ]
[ tcp: <tcp_probe> ]
[ dns: <dns_probe> ]
[ icmp: <icmp_probe> ]
```
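The rules above (a known prober type, at most one probe-specific section) can be checked before a module file is deployed. The following is a minimal Python sketch that works on an already-parsed module dict. It enforces only the rules stated in this note, limited to the four probers listed; blackbox_exporter's own validation may differ.

```python
from __future__ import annotations

# Probe types listed in the module description above
PROBERS = ("http", "tcp", "dns", "icmp")


def validate_module(name: str, module: dict) -> list[str]:
    """Check one parsed module against the rules above; return error strings."""
    errors: list[str] = []
    prober = module.get("prober")
    if prober not in PROBERS:
        errors.append(f"{name}: unknown prober {prober!r}")
    # Which probe-specific sections (http/tcp/dns/icmp) are present
    configured = sorted(k for k in PROBERS if k in module)
    if len(configured) > 1:
        errors.append(f"{name}: more than one probe section: {configured}")
    elif configured and prober in PROBERS and configured[0] != prober:
        errors.append(f"{name}: {configured[0]!r} section does not match prober {prober!r}")
    return errors
```

To check a whole file, load blackbox.yml with a YAML parser (for example the third-party PyYAML package's `yaml.safe_load`). Then call `validate_module` for each entry under `modules`.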

Installing blackbox-exporter

1. In Rancher, import the following blackbox-exporter.yml into Prometheus (the prometheus namespace):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  generation: 1
  labels:
    cattle.io/creator: norman
    workload.user.cattle.io/workloadselector: deployment-prometheus-blackbox-exporter
  name: blackbox-exporter
  namespace: prometheus
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      workload.user.cattle.io/workloadselector: deployment-prometheus-blackbox-exporter
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        field.cattle.io/ports: '[[{"containerPort":9115,"dnsName":"blackbox-exporter","hostPort":0,"kind":"ClusterIP","name":"blackbox-port","protocol":"TCP","sourcePort":0}]]'
      creationTimestamp: null
      labels:
        app.kubernetes.io/name: blackbox
        workload.user.cattle.io/workloadselector: deployment-prometheus-blackbox-exporter
    spec:
      containers:
        - args:
            - --config.file=/etc/blackbox_exporter/blackbox.yml
            - --log.level=info
            - --web.listen-address=:9115
          image: prom/blackbox-exporter:v0.16.0
          imagePullPolicy: Always
          livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 2
            successThreshold: 1
            tcpSocket:
              port: 9115
            timeoutSeconds: 2
          name: blackbox-exporter
          ports:
            - containerPort: 9115
              name: blackbox-port
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 2
            successThreshold: 2
            tcpSocket:
              port: 9115
            timeoutSeconds: 2
          resources: {}
          securityContext:
            allowPrivilegeEscalation: false
            capabilities: {}
            privileged: false
            readOnlyRootFilesystem: false
            runAsNonRoot: false
          stdin: true
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          tty: true
          volumeMounts:
            - mountPath: /etc/blackbox_exporter
              name: vol1
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 420
            name: blackbox-exporter
            optional: false
          name: vol1
```

2. In Rancher, import the following YAML to create the blackbox-exporter Service (for service discovery):

apiVersion: v1

kind: Service

metadata:annotations:labels:app.kubernetes.io/name: blackboxname: blackbox-exporternamespace: prometheusselfLink: /api/v1/namespaces/prometheus/services/blackbox-exporter

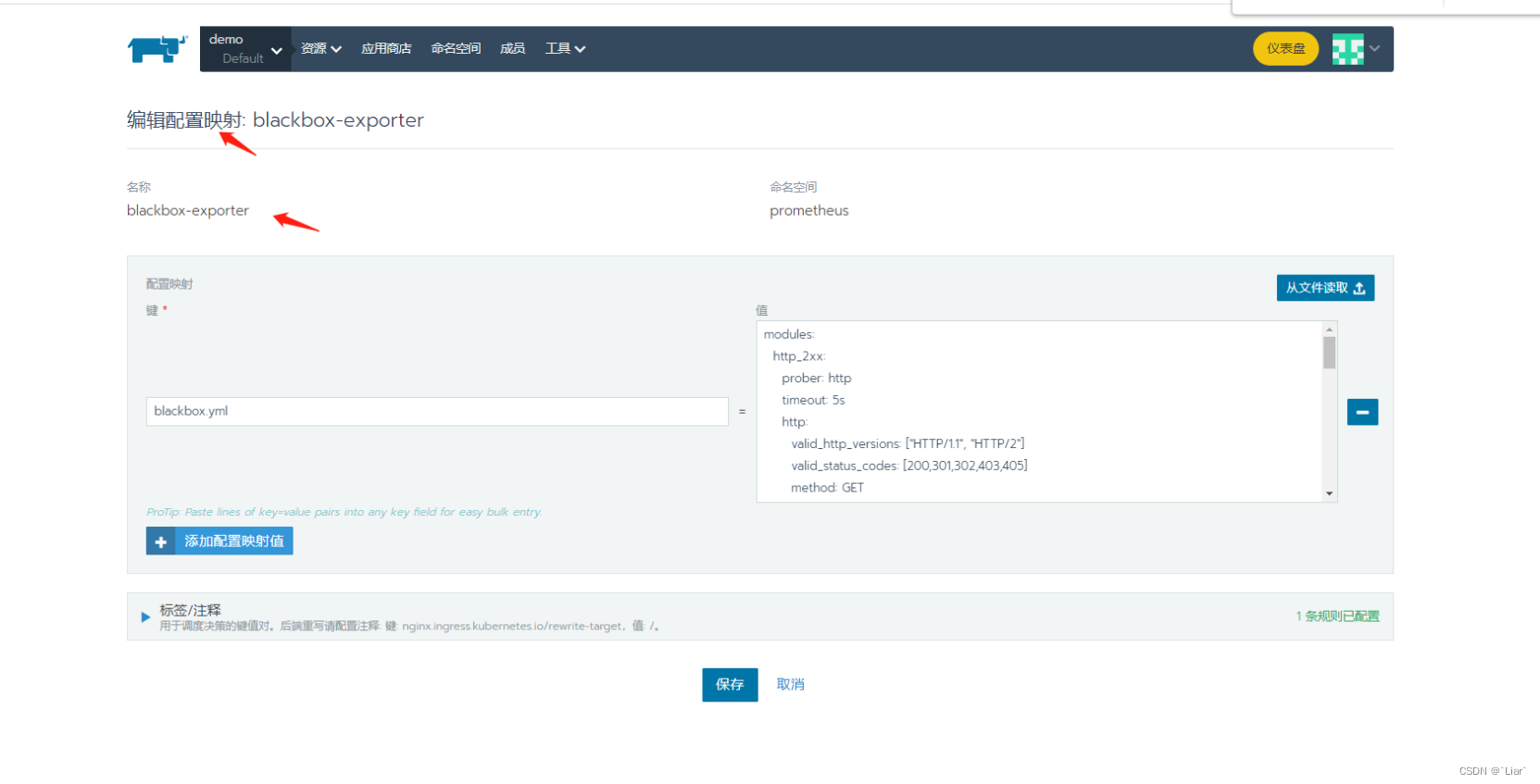

spec:ports:- name: balckboxport: 9115protocol: TCPtargetPort: 9115selector:app.kubernetes.io/name: blackboxsessionAffinity: Nonetype: ClusterIPPrometheus下配置映射新增blackbox-exporter

blackbox.yml


```yaml
modules:
  http_2xx:
    prober: http
    timeout: 5s
    http:
      valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
      valid_status_codes: [200,301,302,403,405]
      method: GET
      preferred_ip_protocol: "ipv4"
  https_2xx:
    # HTTPS targets use the http prober; there is no separate "https" prober
    prober: http
    timeout: 5s
    http:
      valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
      valid_status_codes: [200,301,302,403,405]
      method: POST
      preferred_ip_protocol: "ipv4"
  tcp_connect:
    prober: tcp
    timeout: 2s
  http_403:
    prober: http
    timeout: 2s
    http:
      valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
      valid_status_codes: [403,405]
      method: GET
      preferred_ip_protocol: "ipv4"
  https_403:
    prober: http
    timeout: 2s
    http:
      valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
      valid_status_codes: [403,405]
      method: GET
      preferred_ip_protocol: "ipv4"
  icmp:
    prober: icmp
```

The ConfigMap is mounted in the container at /etc/blackbox_exporter.

Add a job to the Prometheus ConfigMap:

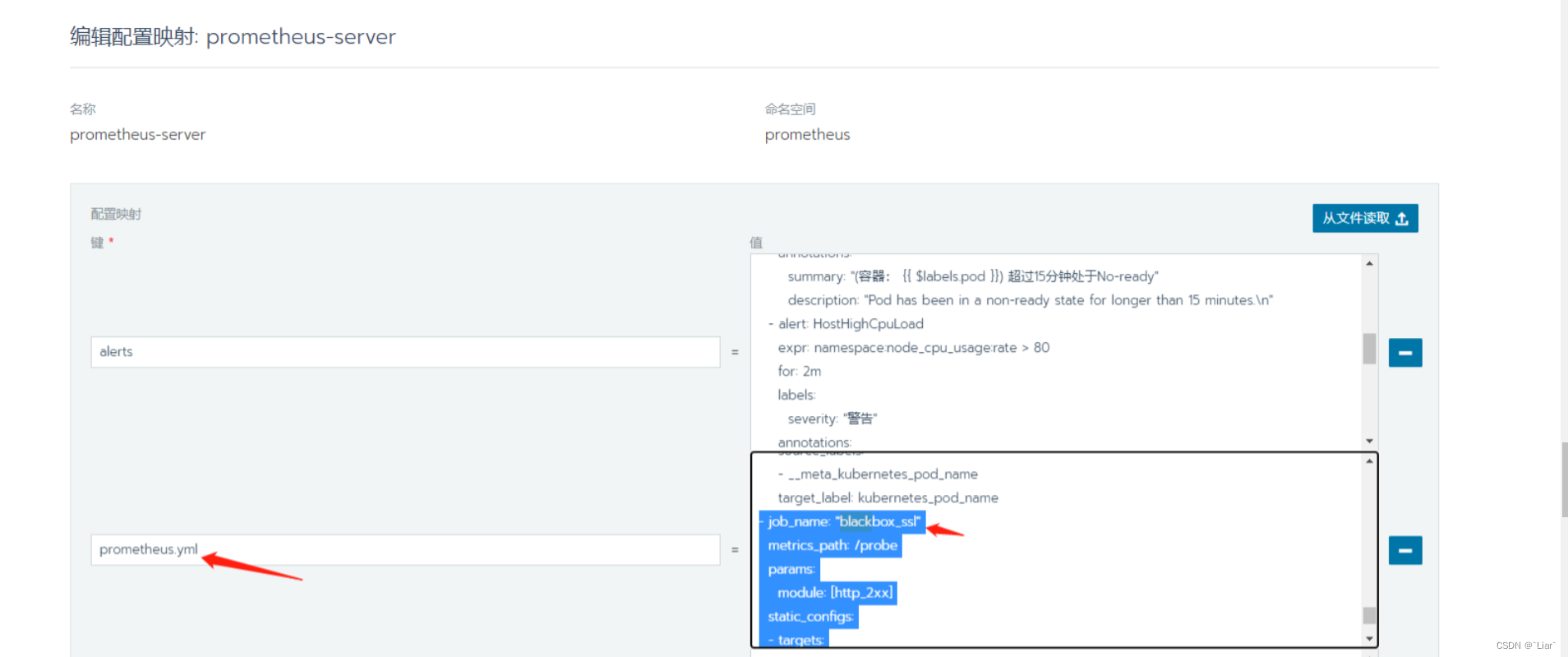

To monitor certificates, edit prometheus.yml in the Prometheus ConfigMap:

```yaml
- job_name: "blackbox_ssl"
  metrics_path: /probe
  params:
    module: [http_2xx]
  static_configs:
    - targets:
        - https://login.demo.chinamcloud.cn
  relabel_configs:
    - source_labels: [__address__]
      target_label: instance
    - source_labels: [__address__]
      target_label: __param_target
    - target_label: __address__
      replacement: blackbox-exporter:9115
```
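The three `relabel_configs` rules turn a listed URL into a request against blackbox-exporter. The following simplified Python sketch shows their effect. It hard-codes the rules instead of implementing Prometheus's general regex and replacement machinery, and the function names are illustrative.

```python
from __future__ import annotations

from urllib.parse import urlencode

# replacement value from the job's last relabel rule
BLACKBOX_ADDRESS = "blackbox-exporter:9115"


def relabel(target: str) -> dict[str, str]:
    """Apply the job's three relabel rules, in order, to one static target."""
    labels = {"__address__": target}
    # source_labels [__address__] -> instance (keep the probed URL as the instance label)
    labels["instance"] = labels["__address__"]
    # source_labels [__address__] -> __param_target (becomes the ?target= query parameter)
    labels["__param_target"] = labels["__address__"]
    # no source_labels: the fixed replacement becomes the address actually scraped
    labels["__address__"] = BLACKBOX_ADDRESS
    return labels


def scrape_url(labels: dict[str, str], module: str, metrics_path: str = "/probe") -> str:
    """URL Prometheus ends up requesting: params.module plus __param_target."""
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return f"http://{labels['__address__']}{metrics_path}?{query}"
```

Requesting that URL directly from inside the cluster (for example with curl) is a quick way to debug a module.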

To check TCP ports, edit prometheus.yml in the Prometheus ConfigMap:

```yaml
- job_name: "tcp-port-check"
  scrape_interval: 30s
  metrics_path: /probe
  params:
    module: [tcp_connect]
  static_configs:
    - targets:
        - mpc.server:8088
        - mysql.server:3306
        - redis.server:6379
        - kafka.kafka:9092
      labels:
        server: 'tcp-port-check'
  relabel_configs:
    - source_labels: [__address__]
      target_label: instance
    - source_labels: [__address__]
      target_label: __param_target
    - target_label: __address__
      replacement: blackbox-exporter:9115
```

POST test: probe a business API endpoint to check whether the API is online:

```yaml
- job_name: 'blackbox_http_2xx_post'
  scrape_interval: 10s
  metrics_path: /probe
  params:
    module: [http_post_2xx_query]
  static_configs:
    - targets:
        - https://xx.xxx.com/api/xx/xx/xx/query.action
      labels:
        group: 'Interface monitoring'
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox-exporter:9115
```

The `http_post_2xx_query` module referenced here must also be defined in blackbox.yml; it is not among the modules listed above.

Common metrics

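Connectivity

```promql
probe_success == 0  # probe failed (connectivity problem)
probe_success == 1  # probe succeeded
```

Certificate expires in fewer than 30 days

```promql
(probe_ssl_earliest_cert_expiry - time()) / 86400 < 30
```

HTTP status code

```promql
probe_http_status_code
```

Total probe duration

```promql
probe_duration_seconds{job=~"blackbox_ssl"}
```

HTTP request duration (broken down by the `phase` label)

```promql
probe_http_duration_seconds
```

IV. elasticsearch_exporter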

GitHub - prometheus-community/elasticsearch_exporter: Elasticsearch stats exporter for Prometheus

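Installing elasticsearch_exporter

1. In k8s, import the following YAML file to create elasticsearch-exporter.

Replace http://elasticsearch.server:9200 with the project's ES address.

If ES requires a username and password, use http://username:password@elasticsearch.server:9200.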
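If the password contains characters such as `@`, `:` or `/`, it must be percent-encoded before being embedded in the URI; otherwise the URL is split at the wrong place. The following is a minimal Python sketch. The user name and password in it are made-up examples. It assumes the exporter parses `--es.uri` as a standard URL and decodes the user-info part, which Go's net/url does.

```python
from __future__ import annotations

from urllib.parse import quote


def es_uri(user: str, password: str, host: str = "elasticsearch.server:9200") -> str:
    """Build the --es.uri value with percent-encoded credentials."""
    # safe="" makes quote() also encode '/', ':' and '@', which would otherwise
    # be read as URL delimiters
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}"


if __name__ == "__main__":
    print(es_uri("elastic", "p@ss:w/rd"))  # illustrative credentials only
```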

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch-exporter
  namespace: prometheus
spec:
  selector:
    matchLabels:
      workload.user.cattle.io/workloadselector: deployment-prometheus-elasticsearch-exporter
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        app.kubernetes.io/name: elasticsearch-exporter
        workload.user.cattle.io/workloadselector: deployment-prometheus-elasticsearch-exporter
    spec:
      containers:
        - args:
            - --es.uri=http://elasticsearch.server:9200
            - --es.all
            - --es.indices
            - --es.indices_settings
            - --es.shards
            - --es.snapshots
            - --collector.clustersettings
          image: quay.io/prometheuscommunity/elasticsearch-exporter:v1.6.0
          imagePullPolicy: Always
          name: elasticsearch-exporter
          ports:
            - containerPort: 9114
              name: tcp
              protocol: TCP
```

2. Import the following YAML file to create the elasticsearch-exporter Service (for service discovery):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-exporter
  namespace: prometheus
spec:
  clusterIP: None
  ports:
    - name: tcp
      port: 9114
      protocol: TCP
      targetPort: 9114
  selector:
    app.kubernetes.io/name: elasticsearch-exporter
```

3. Add an elasticsearch-exporter job to the Prometheus configuration file prometheus.yml:

```yaml
- job_name: elasticsearch-exporter
  metrics_path: '/metrics'
  static_configs:
    - targets:
        - elasticsearch-exporter.prometheus:9114
```

4. Open Prometheus and check that the job's status is normal (UP).

5. Import the dashboard template into Grafana.
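The check in step 4 can also be scripted against Prometheus's HTTP API (`/api/v1/query`): a target is healthy when its `up` series equals 1. The following is a minimal Python sketch. The Prometheus address is an assumption to fill in for your deployment; 9090 is only Prometheus's default port.

```python
from __future__ import annotations

import json
import sys
import urllib.request
from urllib.parse import urlencode


def up_query_url(prometheus: str, job: str) -> str:
    """Instant-query URL for the job's `up` series."""
    return f"{prometheus}/api/v1/query?" + urlencode({"query": f'up{{job="{job}"}}'})


def down_instances(body: str) -> list[str]:
    """Return instances whose `up` value is not 1; raise if the job has no series."""
    data = json.loads(body)
    if data.get("status") != "success":
        raise RuntimeError(f"query failed: {data.get('error')}")
    results = data["data"]["result"]
    if not results:
        raise LookupError("no `up` series for this job; check job_name and the target address")
    # Each vector sample is {"metric": {...}, "value": [timestamp, "value-as-string"]}
    return [r["metric"].get("instance", "?") for r in results if r["value"][1] != "1"]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python check_job.py http://<prometheus-host>:9090
    url = up_query_url(sys.argv[1], "elasticsearch-exporter")
    with urllib.request.urlopen(url, timeout=5) as resp:
        print("down:", down_instances(resp.read().decode("utf-8")) or "none")
```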

Common alert expressions

```promql
# ES cluster status YELLOW
elasticsearch_cluster_health_status{environment=~"csp.*",color="yellow"} == 1

# ES cluster status RED
elasticsearch_cluster_health_status{environment=~"csp.*",color="red"} == 1

# ES cluster status normal (GREEN)
elasticsearch_cluster_health_status{environment=~"csp.*",color="green"} == 1

# Total number of ES nodes
sum(elasticsearch_cluster_health_number_of_nodes{cluster=~"haihe3"})

# Number of ES data nodes
sum(elasticsearch_cluster_health_number_of_data_nodes{cluster=~"haihe3"})
```

V. Integrating Kong with Prometheus

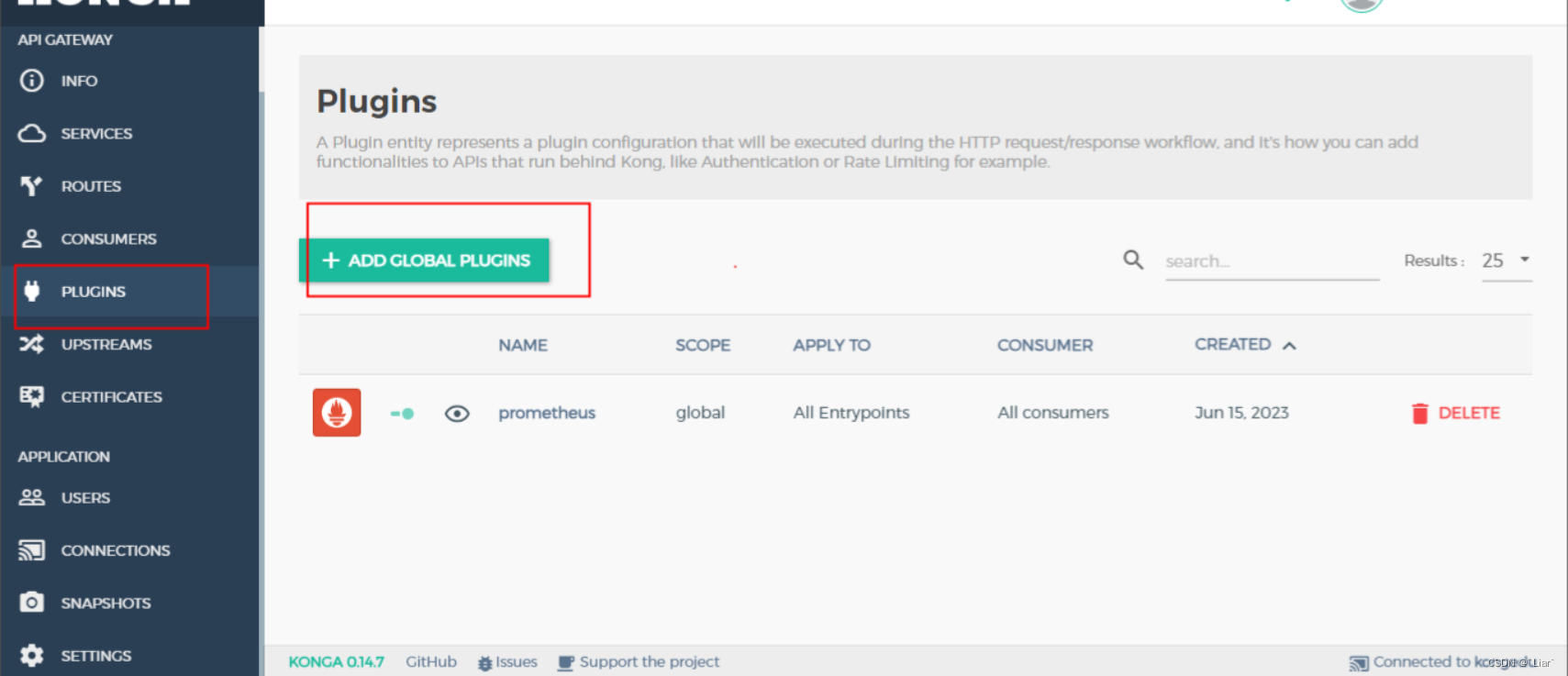

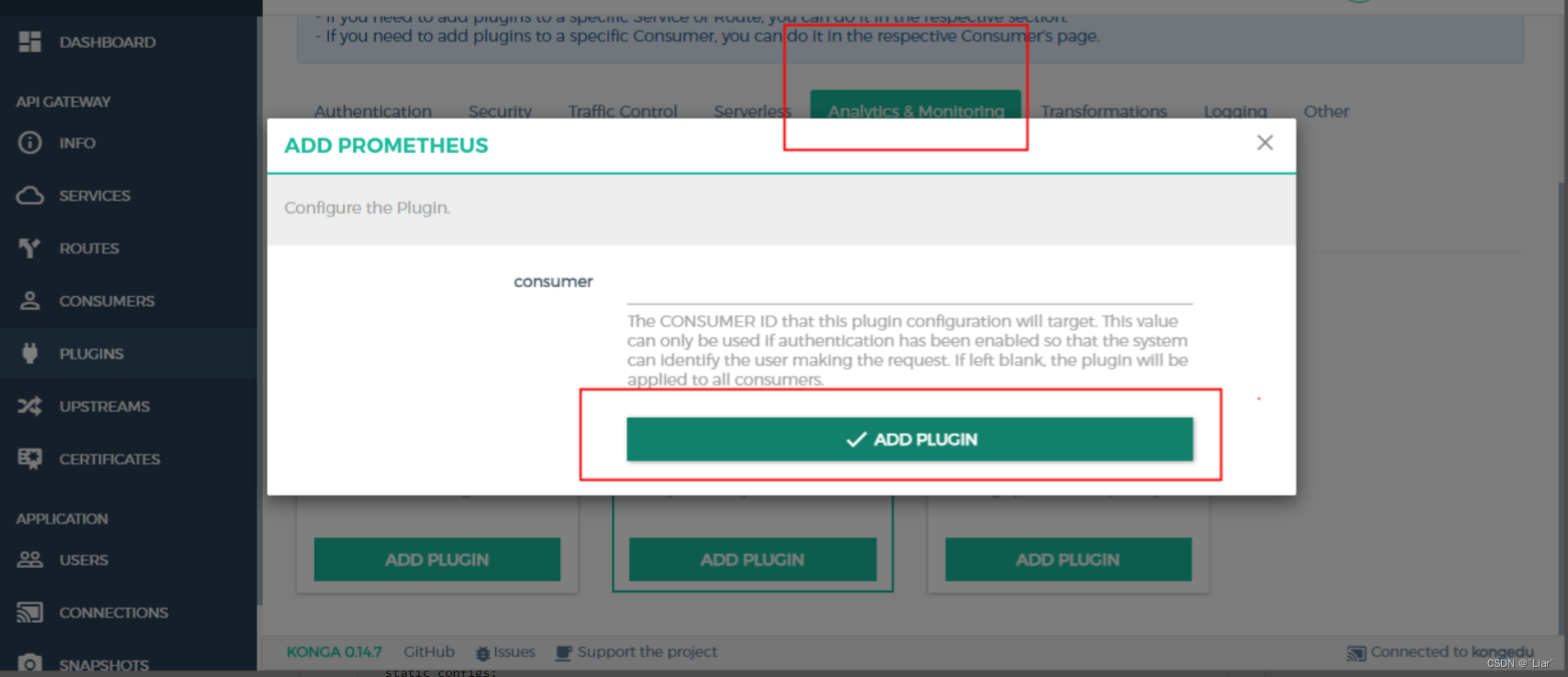

1. In the Kong dashboard, add the Prometheus plugin.

2. Edit the Prometheus.yaml configuration and add a job to monitor Kong.

Static registration:

```yaml
- job_name: cmc-kong-monitoring
  metrics_path: "/metrics"
  static_configs:
    - targets:
        - kong.cmc:8001
```

Dynamic registration: use kubernetes_sd_configs to discover Kong's endpoints automatically (e.g. in the CSP environment):

```yaml
# Framework Kong: kong service in the cmc namespace
- job_name: csp-cmc-kong
  kubernetes_sd_configs:
    - role: endpoints
  scheme: http
  relabel_configs:
    - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
      regex: cmc;kong;http8001
      action: keep

# kong service in the kong namespace
- job_name: csp-kong-kong
  kubernetes_sd_configs:
    - role: endpoints
  scheme: http
  relabel_configs:
    - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
      regex: kong;kong;8001to8001-kong
      action: keep

# Cloud Education (云教) Kong: kongedu service in the kong namespace
- job_name: csp-kong-kongedu
  kubernetes_sd_configs:
    - role: endpoints
  scheme: http
  relabel_configs:
    - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
      regex: kong;kongedu;8001tcp80012
      action: keep
```
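Each dynamic job discovers every endpoint in the cluster and relies on the `keep` rule to drop all but the intended one. The following Python sketch illustrates that rule's semantics; it is not Prometheus code. Prometheus uses RE2 syntax, which behaves like Python's `re` for simple literal patterns such as these. The three source label values are joined with `;`, and the regex must match the whole joined string.

```python
from __future__ import annotations

import re

SOURCE_LABELS = [
    "__meta_kubernetes_namespace",
    "__meta_kubernetes_service_name",
    "__meta_kubernetes_endpoint_port_name",
]


def keep(labels: dict[str, str], regex: str, source_labels=SOURCE_LABELS, separator: str = ";") -> bool:
    """Mimic `action: keep`: join source label values and require a full regex match."""
    # A missing label contributes an empty string, as in Prometheus
    value = separator.join(labels.get(name, "") for name in source_labels)
    # Prometheus anchors relabel regexes on both ends, hence fullmatch rather than search
    return re.fullmatch(regex, value) is not None


def filter_targets(discovered: list[dict[str, str]], regex: str) -> list[dict[str, str]]:
    """Keep only the discovered endpoints that the rule lets through."""
    return [t for t in discovered if keep(t, regex)]
```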

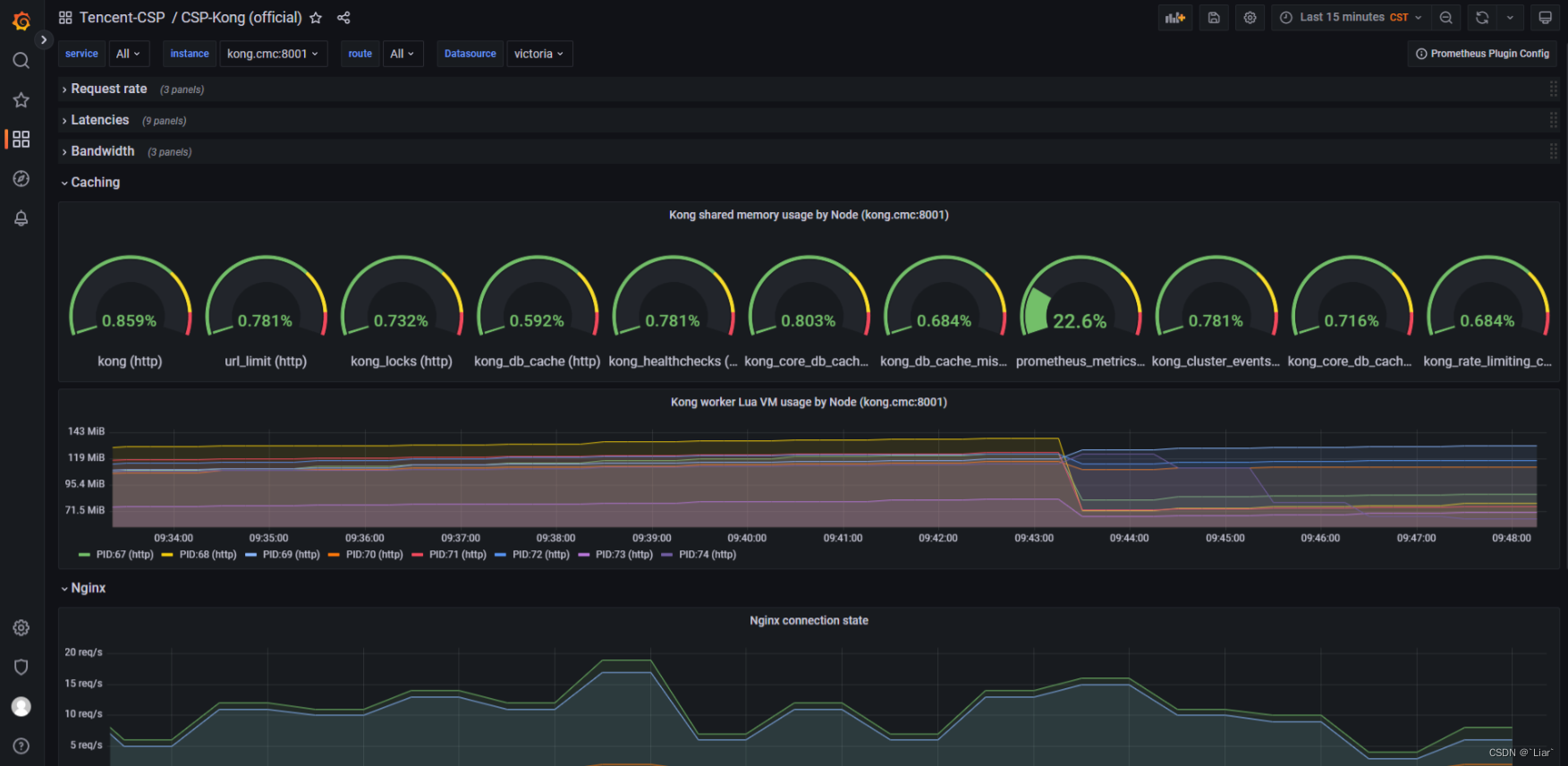

Import the Kong dashboard into Grafana.

Common metrics

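| Name | Type | Unit | Description |
| --- | --- | --- | --- |
| kong_bandwidth | counter | bytes | Total bandwidth consumed by each Kong service |
| kong_datastore_reachable | gauge | boolean | Whether Kong can reach its datastore (1: reachable, 0: unreachable) |
| kong_http_status | counter | requests | HTTP status codes returned by each Kong service |
| kong_latency_count | counter | calls | Number of calls to each Kong service |
| kong_latency_sum | counter | ms | Total latency of calls to each Kong service |
| kong_latency_bucket | histogram | requests | Distribution of each service's requests across latency buckets; the `type` label is kong (Kong's internal processing time), request (total request time) or upstream (time spent proxying to the upstream server) |
| kong_nginx_http_current_connections | gauge | connections | Current Kong connections, by state (see below) |
| kong_nginx_metric_errors_total | counter | count | Number of metric errors in the Kong Prometheus plugin |

States of kong_nginx_http_current_connections:

- total: total number of client requests
- accepted: total number of accepted client connections
- handled: total number of handled connections; normally equal to accepted unless some resource limit has been reached
- active: current number of active client connections, including waiting connections
- reading: current number of connections where Kong is reading the request header
- writing: current number of connections where Kong is writing the response back to the client
- waiting: current number of idle client connections waiting for a request

To add a `kong` label with the value `csp灰度框架kong` (the Kong of the CSP canary framework) to targets discovered via kubernetes_sd_configs: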

```yaml
- job_name: kong-cmc-endpoints
  kubernetes_sd_configs:
    - role: endpoints
  scheme: http
  relabel_configs:
    - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
      regex: cmc;kong;http8001
      action: keep
    - action: replace
      replacement: csp灰度框架kong
      target_label: kong
```
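The `kong_latency_bucket` histogram in the metrics table is usually turned into percentiles with PromQL, for example `histogram_quantile(0.95, sum by (le) (rate(kong_latency_bucket{type="request"}[5m])))`. To show how that estimate is produced, the Python sketch below reimplements the linear interpolation on one set of cumulative bucket counts. Any bucket bounds and counts you feed it are up to you. Reading the bounds as milliseconds follows the table's unit column and is an assumption.

```python
from __future__ import annotations

import math


def histogram_quantile(q: float, buckets: list[tuple[float, float]]) -> float:
    """Estimate quantile q from cumulative (le, count) buckets, one of which must be le=+Inf.

    Follows the linear interpolation PromQL's histogram_quantile uses: the
    quantile is assumed to be spread evenly within the bucket it falls in.
    """
    if not 0 < q < 1:
        raise ValueError("q must be strictly between 0 and 1 in this sketch")
    buckets = sorted(buckets)
    if not math.isinf(buckets[-1][0]):
        raise ValueError("the +Inf bucket is required")
    if len(buckets) < 2:
        return math.nan  # no finite bucket to interpolate in
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower, below = 0.0, 0.0  # previous bucket's upper bound and cumulative count
    for le, count in buckets:
        if count >= rank:
            if math.isinf(le):
                # Landed in the +Inf bucket: report the highest finite bound
                return lower
            return lower + (le - lower) * (rank - below) / (count - below)
        lower, below = le, count
    return math.nan
```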
