1. Code repository:

InternLM: https://github.com/InternLM/InternLM/

Course instructor: Song Zhixue, lead of the d2l-ai-solutions-manual open-source project

2. Lagent framework

3. Intelligent dialogue based on InternLM

3.1 Environment setup:

CUDA 11.7

PyTorch 2.0.1

Other dependencies:

    # Upgrade pip
    python -m pip install --upgrade pip

    pip install modelscope==1.9.5
    pip install transformers==4.35.2
    pip install streamlit==1.24.0
    pip install sentencepiece==0.1.99
    pip install accelerate==0.24.1

3.2 Model download
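Before downloading any weights, it can help to confirm that the versions pinned in section 3.1 are the ones actually installed. The sketch below relies only on the standard library's `importlib.metadata`; the names `PINS`, `check_pins`, and `format_report` are illustrative helpers, not part of any library mentioned here.

```python
from importlib import metadata

# Versions pinned in section 3.1 (copied from the pip commands there).
PINS = {
    "modelscope": "1.9.5",
    "transformers": "4.35.2",
    "streamlit": "1.24.0",
    "sentencepiece": "0.1.99",
    "accelerate": "0.24.1",
}


def check_pins(pins):
    """Return {package: (expected, installed_or_None, matches)} for each pin."""
    report = {}
    for name, expected in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        report[name] = (expected, installed, installed == expected)
    return report


def format_report(report):
    """Render the report as one human-readable line per package."""
    lines = []
    for name, (expected, installed, ok) in report.items():
        status = "ok" if ok else "MISMATCH"
        lines.append(f"{name}: expected {expected}, found {installed} [{status}]")
    return "\n".join(lines)
```

Calling `print(format_report(check_pins(PINS)))` lists each package with its expected and installed version, so a mismatch shows up before a large download starts.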

3.2.1 Downloading via ModelScope

    import torch
    from modelscope import snapshot_download, AutoModel, AutoTokenizer
    import os

    # Download revision v1.0.3 of internlm-chat-7b into /root/model
    model_dir = snapshot_download('Shanghai_AI_Laboratory/internlm-chat-7b', cache_dir='/root/model', revision='v1.0.3')

3.2.2 Downloading via Hugging Face

Use huggingface-cli, the official command-line tool provided by Hugging Face. Install the dependency:

    pip install -U huggingface_hub

Then create a new Python file, paste in the following code, and run it.

    import os

    # Download the model
    os.system('huggingface-cli download --resume-download internlm/internlm-chat-7b --local-dir your_path')

- resume-download: resume an interrupted download

- local-dir: local storage path (on Linux, this must be an absolute path)
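Because the download is just a shell command, the same call can also be assembled as an argument list and run through `subprocess`, which sidesteps shell quoting and makes it easy to pass an absolute `--local-dir` as the note above asks. This is a minimal sketch: `build_download_command` and `download_model` are hypothetical helper names, and only the flags already shown in this section are used.

```python
import os
import subprocess


def build_download_command(repo_id, local_dir):
    """Build the huggingface-cli argument list with an absolute --local-dir."""
    return [
        "huggingface-cli", "download",
        "--resume-download",                        # resume an interrupted download
        repo_id,
        "--local-dir", os.path.abspath(local_dir),  # always pass an absolute path
    ]


def download_model(repo_id, local_dir):
    """Run the download; raises CalledProcessError if the CLI exits non-zero."""
    subprocess.run(build_download_command(repo_id, local_dir), check=True)
```

For example, `download_model("internlm/internlm-chat-7b", "your_path")` would run the same command as the `os.system` call, with `your_path` resolved against the current working directory.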

Downloading only some of a model's files with huggingface_hub:

    import os
    from huggingface_hub import hf_hub_download  # Load model directly

    hf_hub_download(repo_id="internlm/internlm-7b", filename="config.json")

3.2.3 Downloading via OpenXLab

First install the dependency:

    pip install -U openxlab

Download code:

    from openxlab.model import download

    download(model_repo='OpenLMLab/InternLM-7b', model_name='InternLM-7b', output='your local path')

3.3 Running the demo

import torch

from transformers import AutoTokenizer, AutoModelForCausalLMmodel_name_or_path = "/root/model/Shanghai_AI_Laboratory/internlm-chat-7b"tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained(model_name_or_path, trust_remote_code=True, torch_dtype=torch.bfloat16, device_map='auto')

model = model.eval()system_prompt = """You are an AI assistant whose name is InternLM (书生·浦语).

- InternLM (书生·浦语) is a conversational language model that is developed by Shanghai AI Laboratory (上海人工智能实验室). It is designed to be helpful, honest, and harmless.

- InternLM (书生·浦语) can understand and communicate fluently in the language chosen by the user such as English and 中文.

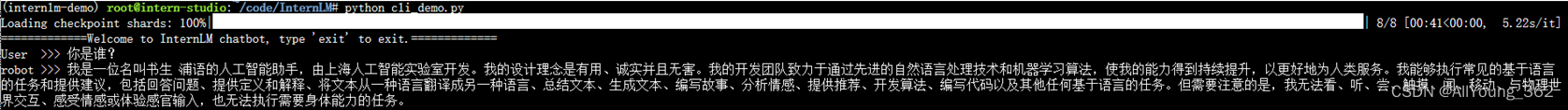

"""messages = [(system_prompt, '')]print("=============Welcome to InternLM chatbot, type 'exit' to exit.=============")while True:input_text = input("User >>> ")input_text = input_text.replace(' ', '')if input_text == "exit":breakresponse, history = model.chat(tokenizer, input_text, history=messages)messages.append((input_text, response))print(f"robot >>> {response}")3.4 效果

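The history handling in the section 3.3 loop can also be tried without loading the 7B weights. The sketch below swaps in a stand-in `EchoModel` whose `chat` method only imitates the call shape used in the demo, `chat(tokenizer, query, history)` returning `(response, history)`. Both `EchoModel` and `run_turns` are hypothetical and do not reproduce InternLM's behavior; the point is only to show that `messages` grows by one `(user_input, response)` tuple per turn.

```python
class EchoModel:
    """Hypothetical stand-in for the real model; it just echoes the query."""

    def chat(self, tokenizer, query, history=None):
        history = list(history or [])
        response = f"echo: {query}"
        # Return a new history list; the caller's list is left untouched.
        return response, history + [(query, response)]


def run_turns(model, system_prompt, user_inputs):
    """Replay the demo loop over a fixed list of inputs instead of input()."""
    messages = [(system_prompt, "")]
    for input_text in user_inputs:
        input_text = input_text.replace(" ", "")  # same space stripping as the demo
        if input_text == "exit":
            break
        response, _history = model.chat(None, input_text, history=messages)
        messages.append((input_text, response))
    return messages
```

Running `run_turns(EchoModel(), "sys", ["hi there", "exit"])` returns the system entry followed by one tuple for the first turn, and the `exit` input ends the loop exactly as in the demo.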