前言

之前又看到说VioManager.cpp/h是OpenVINS中的核心程序,这次就看看这里面都写了啥,整体架构什么样,有哪些函数功能。具体介绍:

VioManager类

整体分析

VioManager类包含 MSCKF 工作所需的状态和其他算法。我们将测量结果输入到此类中,并将它们发送到各自的算法。如果我们有要传播或更新的测量值,此类将调用我们的状态来执行此操作。

主要包含下面6个函数/类:

VioManager类的初始化

VioManager::VioManager(VioManagerOptions ¶ms_) : thread_init_running(false), thread_init_success(false)

构造函数,导入所有参数配置。

IMU数据传递

void VioManager::feed_measurement_imu(const ov_core::ImuData &message)

相机数据传递

void ov_msckf::VioManager::feed_measurement_camera(const ov_core::CameraData& message)

模拟数据传递

void ov_msckf::VioManager::feed_measurement_simulation(double timestamp,const std::vector& camids,const std::vector<std::vector<std::pair<size_t, Eigen::VectorXf>>>& feats)

同步模拟相机的数据传递。

图像数据传递跟踪

void VioManager::track_image_and_update(const ov_core::CameraData &message_const)

给定一组新的相机图像,这将跟踪它们。

如果我们有立体跟踪,我们应该调用立体跟踪函数。否则我们将尝试跟踪每个传递的图像。

特征传播与更新

void VioManager::do_feature_propagate_update(const ov_core::CameraData &message)

这将对状态进行传播和功能更新。

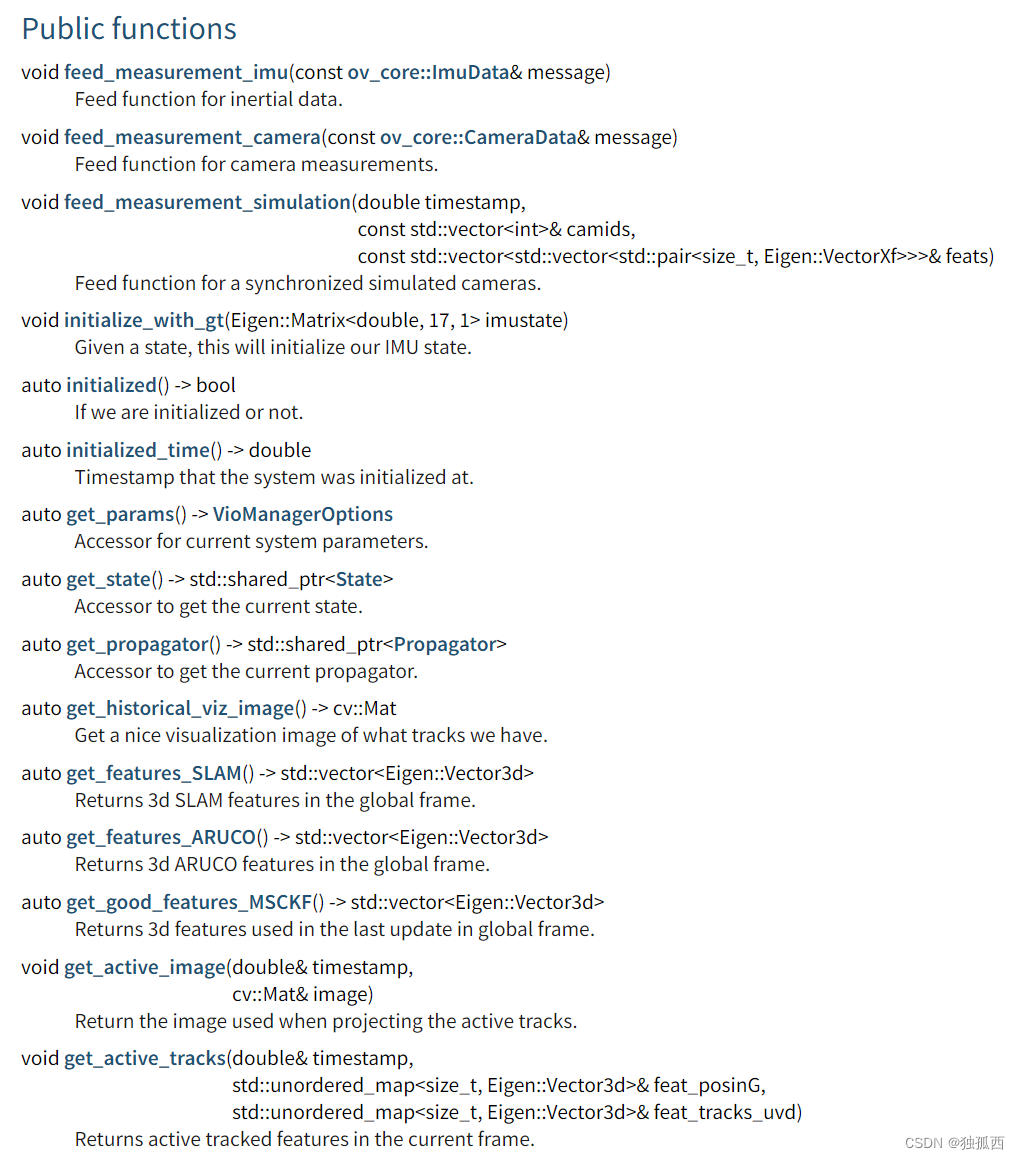

其他公共函数还有:

代码注释

/** OpenVINS: An Open Platform for Visual-Inertial Research* Copyright (C) 2018-2023 Patrick Geneva* Copyright (C) 2018-2023 Guoquan Huang* Copyright (C) 2018-2023 OpenVINS Contributors* Copyright (C) 2018-2019 Kevin Eckenhoff** This program is free software: you can redistribute it and/or modify* it under the terms of the GNU General Public License as published by* the Free Software Foundation, either version 3 of the License, or* (at your option) any later version.** This program is distributed in the hope that it will be useful,* but WITHOUT ANY WARRANTY; without even the implied warranty of* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the* GNU General Public License for more details.** You should have received a copy of the GNU General Public License* along with this program. If not, see <https://www.gnu.org/licenses/>.*/#include "VioManager.h"#include "feat/Feature.h"

#include "feat/FeatureDatabase.h"

#include "feat/FeatureInitializer.h"

#include "track/TrackAruco.h"

#include "track/TrackDescriptor.h"

#include "track/TrackKLT.h"

#include "track/TrackSIM.h"

#include "types/Landmark.h"

#include "types/LandmarkRepresentation.h"

#include "utils/opencv_lambda_body.h"

#include "utils/print.h"

#include "utils/sensor_data.h"#include "init/InertialInitializer.h"#include "state/Propagator.h"

#include "state/State.h"

#include "state/StateHelper.h"

#include "update/UpdaterMSCKF.h"

#include "update/UpdaterSLAM.h"

#include "update/UpdaterZeroVelocity.h"using namespace ov_core;

using namespace ov_type;

using namespace ov_msckf;VioManager::VioManager(VioManagerOptions ¶ms_) : thread_init_running(false), thread_init_success(false) {// Nice startup messagePRINT_DEBUG("=======================================\n");PRINT_DEBUG("OPENVINS ON-MANIFOLD EKF IS STARTING\n");PRINT_DEBUG("=======================================\n");// Nice debugthis->params = params_;params.print_and_load_estimator();params.print_and_load_noise();params.print_and_load_state();params.print_and_load_trackers();// This will globally set the thread count we will use// -1 will reset to the system default threading (usually the num of cores)cv::setNumThreads(params.num_opencv_threads);//设置将要使用的线程数量cv::setRNGSeed(0);// Create the state!!state = std::make_shared<State>(params.state_options);//将参数表的状态设置导入// Set the IMU intrinsics,设置IMU内参state->_calib_imu_dw->set_value(params.vec_dw);state->_calib_imu_dw->set_fej(params.vec_dw);state->_calib_imu_da->set_value(params.vec_da);state->_calib_imu_da->set_fej(params.vec_da);state->_calib_imu_tg->set_value(params.vec_tg);state->_calib_imu_tg->set_fej(params.vec_tg);state->_calib_imu_GYROtoIMU->set_value(params.q_GYROtoIMU);state->_calib_imu_GYROtoIMU->set_fej(params.q_GYROtoIMU);state->_calib_imu_ACCtoIMU->set_value(params.q_ACCtoIMU);state->_calib_imu_ACCtoIMU->set_fej(params.q_ACCtoIMU);// Timeoffset from camera to IMU,设置相机和IMU之间的外参Eigen::VectorXd temp_camimu_dt;temp_camimu_dt.resize(1);temp_camimu_dt(0) = params.calib_camimu_dt;state->_calib_dt_CAMtoIMU->set_value(temp_camimu_dt);state->_calib_dt_CAMtoIMU->set_fej(temp_camimu_dt);// Loop through and load each of the cameras,多个相机的时候循环导入参数state->_cam_intrinsics_cameras = params.camera_intrinsics;for (int i = 0; i < state->_options.num_cameras; i++) {state->_cam_intrinsics.at(i)->set_value(params.camera_intrinsics.at(i)->get_value());state->_cam_intrinsics.at(i)->set_fej(params.camera_intrinsics.at(i)->get_value());state->_calib_IMUtoCAM.at(i)->set_value(params.camera_extrinsics.at(i));state->_calib_IMUtoCAM.at(i)->set_fej(params.camera_extrinsics.at(i));}//===================================================================================//===================================================================================//===================================================================================// If we are recording statistics, then open our file,如果记录统计数据则打开文件if (params.record_timing_information) {// If the file exists, then delete it,文件存在则删除if (boost::filesystem::exists(params.record_timing_filepath)) {boost::filesystem::remove(params.record_timing_filepath);PRINT_INFO(YELLOW "[STATS]: found old file found, deleted...\n" RESET);}// Create the directory that we will open the file in,创建我们将要打开的文件在目标目录下boost::filesystem::path p(params.record_timing_filepath);boost::filesystem::create_directories(p.parent_path());// Open our statistics file!of_statistics.open(params.record_timing_filepath, std::ofstream::out | std::ofstream::app);//打开统计数据文件// Write the header information into itof_statistics << "# timestamp (sec),tracking,propagation,msckf update,";//写入头部信息if (state->_options.max_slam_features > 0) {of_statistics << "slam update,slam delayed,";}of_statistics << "re-tri & marg,total" << std::endl;}//===================================================================================//===================================================================================//===================================================================================// Let's make a feature extractor// NOTE: after we initialize we will increase the total number of feature tracks// NOTE: we will split the total number of features over all cameras uniformly//特征提取部分// 注意:初始化后我们将增加特征跟踪的总数// 注意:我们将统一划分所有相机的特征总数int init_max_features = std::floor((double)params.init_options.init_max_features / (double)params.state_options.num_cameras);if (params.use_klt) {trackFEATS = std::shared_ptr<TrackBase>(new TrackKLT(state->_cam_intrinsics_cameras, init_max_features,state->_options.max_aruco_features, params.use_stereo, params.histogram_method,params.fast_threshold, params.grid_x, params.grid_y, params.min_px_dist));} else {trackFEATS = std::shared_ptr<TrackBase>(new TrackDescriptor(state->_cam_intrinsics_cameras, init_max_features, state->_options.max_aruco_features, params.use_stereo, params.histogram_method,params.fast_threshold, params.grid_x, params.grid_y, params.min_px_dist, params.knn_ratio));}// Initialize our aruco tag extractor//创建aruco标签提取器if (params.use_aruco) {trackARUCO = std::shared_ptr<TrackBase>(new TrackAruco(state->_cam_intrinsics_cameras, state->_options.max_aruco_features,params.use_stereo, params.histogram_method, params.downsize_aruco));}// Initialize our state propagator//创建状态传播量propagator = std::make_shared<Propagator>(params.imu_noises, params.gravity_mag);// Our state initialize//创建初始化量initializer = std::make_shared<ov_init::InertialInitializer>(params.init_options, trackFEATS->get_feature_database());// Make the updater!//创建更新量updaterMSCKF = std::make_shared<UpdaterMSCKF>(params.msckf_options, params.featinit_options);updaterSLAM = std::make_shared<UpdaterSLAM>(params.slam_options, params.aruco_options, params.featinit_options);// If we are using zero velocity updates, then create the updater//如果使用零速矫正创建更新器if (params.try_zupt) {updaterZUPT = std::make_shared<UpdaterZeroVelocity>(params.zupt_options, params.imu_noises, trackFEATS->get_feature_database(),propagator, params.gravity_mag, params.zupt_max_velocity,params.zupt_noise_multiplier, params.zupt_max_disparity);}

}void VioManager::feed_measurement_imu(const ov_core::ImuData &message) {//IMU测量值的传递// The oldest time we need IMU with is the last clone// We shouldn't really need the whole window, but if we go backwards in time we will// 我们需要 IMU 的最早时间是最后一个克隆// 我们并不真的需要整个窗口,但如果我们及时倒退,我们就会需要double oldest_time = state->margtimestep();if (oldest_time > state->_timestamp) {oldest_time = -1;}if (!is_initialized_vio) {oldest_time = message.timestamp - params.init_options.init_window_time + state->_calib_dt_CAMtoIMU->value()(0) - 0.10;}propagator->feed_imu(message, oldest_time);// Push back to our initializer// 推回到我们的初始化器if (!is_initialized_vio) {initializer->feed_imu(message, oldest_time);}// Push back to the zero velocity updater if it is enabled// No need to push back if we are just doing the zv-update at the begining and we have moved// 如果启用则推回到零速度更新器// 如果我们只是在开始时进行 zv-update 并且我们已经移动了,则无需推迟if (is_initialized_vio && updaterZUPT != nullptr && (!params.zupt_only_at_beginning || !has_moved_since_zupt)) {updaterZUPT->feed_imu(message, oldest_time);}

}void VioManager::feed_measurement_simulation(double timestamp, const std::vector<int> &camids,const std::vector<std::vector<std::pair<size_t, Eigen::VectorXf>>> &feats) {

//模拟测量值的传递// Start timingrT1 = boost::posix_time::microsec_clock::local_time();//开始时间// Check if we actually have a simulated tracker// If not, recreate and re-cast the tracker to our simulation tracker// 检查我们是否真的有一个模拟跟踪器// 如果没有,则重新创建跟踪器并将其重新投射到我们的模拟跟踪器std::shared_ptr<TrackSIM> trackSIM = std::dynamic_pointer_cast<TrackSIM>(trackFEATS);if (trackSIM == nullptr) {// Replace with the simulated tracker// 替换为模拟跟踪器trackSIM = std::make_shared<TrackSIM>(state->_cam_intrinsics_cameras, state->_options.max_aruco_features);trackFEATS = trackSIM;// Need to also replace it in init and zv-upt since it points to the trackFEATS db pointer// 还需要在 init 和 zv-upt 中替换它,因为它指向 trackFEATS db 指针initializer = std::make_shared<ov_init::InertialInitializer>(params.init_options, trackFEATS->get_feature_database());if (params.try_zupt) {updaterZUPT = std::make_shared<UpdaterZeroVelocity>(params.zupt_options, params.imu_noises, trackFEATS->get_feature_database(),propagator, params.gravity_mag, params.zupt_max_velocity,params.zupt_noise_multiplier, params.zupt_max_disparity);}PRINT_WARNING(RED "[SIM]: casting our tracker to a TrackSIM object!\n" RESET);}// Feed our simulation tracker//给模拟跟踪器传递信息trackSIM->feed_measurement_simulation(timestamp, camids, feats);rT2 = boost::posix_time::microsec_clock::local_time();// Check if we should do zero-velocity, if so update the state with it// Note that in the case that we only use in the beginning initialization phase// If we have since moved, then we should never try to do a zero velocity update!// 检查我们是否应该进行零速度,如果是,则用它更新状态// 注意,我们只在开始初始化阶段使用的情况// 如果我们已经移动,那么我们永远不应该尝试进行零速度更新!if (is_initialized_vio && updaterZUPT != nullptr && (!params.zupt_only_at_beginning || !has_moved_since_zupt)) {// If the same state time, use the previous timestep decision// 如果状态时间相同,则使用前一个时间步决策if (state->_timestamp != timestamp) {did_zupt_update = updaterZUPT->try_update(state, timestamp);}if (did_zupt_update) {assert(state->_timestamp == timestamp);propagator->clean_old_imu_measurements(timestamp + state->_calib_dt_CAMtoIMU->value()(0) - 0.10);updaterZUPT->clean_old_imu_measurements(timestamp + state->_calib_dt_CAMtoIMU->value()(0) - 0.10);propagator->invalidate_cache();return;}}// If we do not have VIO initialization, then return an error// 如果我们没有VIO初始化,则返回错误if (!is_initialized_vio) {PRINT_ERROR(RED "[SIM]: your vio system should already be initialized before simulating features!!!\n" RESET);PRINT_ERROR(RED "[SIM]: initialize your system first before calling feed_measurement_simulation()!!!!\n" RESET);std::exit(EXIT_FAILURE);}// Call on our propagate and update function// Simulation is either all sync, or single camera...// 调用我们的传播和更新函数// 模拟要么是全同步的,要么是单相机的...ov_core::CameraData message;message.timestamp = timestamp;for (auto const &camid : camids) {int width = state->_cam_intrinsics_cameras.at(camid)->w();int height = state->_cam_intrinsics_cameras.at(camid)->h();message.sensor_ids.push_back(camid);message.images.push_back(cv::Mat::zeros(cv::Size(width, height), CV_8UC1));message.masks.push_back(cv::Mat::zeros(cv::Size(width, height), CV_8UC1));}do_feature_propagate_update(message);

}void VioManager::track_image_and_update(const ov_core::CameraData &message_const) {//图像跟踪并更新// Start timingrT1 = boost::posix_time::microsec_clock::local_time();//开始计时// Assert we have valid measurement data and ids//保障是有效的测量数据和标号assert(!message_const.sensor_ids.empty());assert(message_const.sensor_ids.size() == message_const.images.size());for (size_t i = 0; i < message_const.sensor_ids.size() - 1; i++) {assert(message_const.sensor_ids.at(i) != message_const.sensor_ids.at(i + 1));}// Downsample if we are downsampling//如果我们正在下采样,则下采样ov_core::CameraData message = message_const;for (size_t i = 0; i < message.sensor_ids.size() && params.downsample_cameras; i++) {cv::Mat img = message.images.at(i);cv::Mat mask = message.masks.at(i);cv::Mat img_temp, mask_temp;cv::pyrDown(img, img_temp, cv::Size(img.cols / 2.0, img.rows / 2.0));message.images.at(i) = img_temp;cv::pyrDown(mask, mask_temp, cv::Size(mask.cols / 2.0, mask.rows / 2.0));message.masks.at(i) = mask_temp;}// Perform our feature tracking!// 执行我们的特征跟踪!trackFEATS->feed_new_camera(message);// If the aruco tracker is available, the also pass to it// NOTE: binocular tracking for aruco doesn't make sense as we by default have the ids// NOTE: thus we just call the stereo tracking if we are doing binocular!// 如果 aruco 跟踪器可用,也会传递给它// 注意:aruco 的双目跟踪没有意义,因为我们默认有 ids// 注意:因此,如果我们进行双目观察,我们只需调用立体跟踪!if (is_initialized_vio && trackARUCO != nullptr) {trackARUCO->feed_new_camera(message);}rT2 = boost::posix_time::microsec_clock::local_time();// Check if we should do zero-velocity, if so update the state with it// Note that in the case that we only use in the beginning initialization phase// If we have since moved, then we should never try to do a zero velocity update!// 检查我们是否应该进行零速度,如果是,则用它更新状态// 注意,我们只在开始初始化阶段使用的情况// 如果我们已经移动,那么我们永远不应该尝试进行零速度更新!if (is_initialized_vio && updaterZUPT != nullptr && (!params.zupt_only_at_beginning || !has_moved_since_zupt)) {// If the same state time, use the previous timestep decision// 如果状态时间相同,则使用前一个时间步决策if (state->_timestamp != message.timestamp) {did_zupt_update = updaterZUPT->try_update(state, message.timestamp);}if (did_zupt_update) {assert(state->_timestamp == message.timestamp);propagator->clean_old_imu_measurements(message.timestamp + state->_calib_dt_CAMtoIMU->value()(0) - 0.10);updaterZUPT->clean_old_imu_measurements(message.timestamp + state->_calib_dt_CAMtoIMU->value()(0) - 0.10);propagator->invalidate_cache();return;}}// If we do not have VIO initialization, then try to initialize// TODO: Or if we are trying to reset the system, then do that here!// 如果我们没有VIO初始化,那么尝试初始化// TODO:或者如果我们尝试重置系统,请在此处执行此操作!if (!is_initialized_vio) {is_initialized_vio = try_to_initialize(message);if (!is_initialized_vio) {double time_track = (rT2 - rT1).total_microseconds() * 1e-6;PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for tracking\n" RESET, time_track);return;}}// Call on our propagate and update function// 调用我们的传播和更新函数do_feature_propagate_update(message);

}void VioManager::do_feature_propagate_update(const ov_core::CameraData &message) {//特征传播与更新//===================================================================================// State propagation, and clone augmentation//===================================================================================

// 状态传播和克隆扩维// Return if the camera measurement is out of order//如果相机测量超过数量则返回if (state->_timestamp > message.timestamp) {PRINT_WARNING(YELLOW "image received out of order, unable to do anything (prop dt = %3f)\n" RESET,(message.timestamp - state->_timestamp));return;}// Propagate the state forward to the current update time// Also augment it with a new clone!// NOTE: if the state is already at the given time (can happen in sim)// NOTE: then no need to prop since we already are at the desired timestep// 将状态向前传播到当前更新时间// 还用一个新的克隆来增强它!// 注意:如果状态已经在给定时间(可能发生在 sim 中)// 注意:那么不需要支撑,因为我们已经处于所需的时间步长if (state->_timestamp != message.timestamp) {propagator->propagate_and_clone(state, message.timestamp);}rT3 = boost::posix_time::microsec_clock::local_time();// If we have not reached max clones, we should just return...// This isn't super ideal, but it keeps the logic after this easier...// We can start processing things when we have at least 5 clones since we can start triangulating things...

// 如果我们还没有达到最大克隆数,我们应该返回...// 这不是非常理想,但它使之后的逻辑更容易......// 当我们有至少 5 个克隆时,我们可以开始处理事物,因为我们可以开始对事物进行三角测量...if ((int)state->_clones_IMU.size() < std::min(state->_options.max_clone_size, 5)) {PRINT_DEBUG("waiting for enough clone states (%d of %d)....\n", (int)state->_clones_IMU.size(),std::min(state->_options.max_clone_size, 5));return;}// Return if we where unable to propagate// 如果我们无法传播则返回if (state->_timestamp != message.timestamp) {PRINT_WARNING(RED "[PROP]: Propagator unable to propagate the state forward in time!\n" RESET);PRINT_WARNING(RED "[PROP]: It has been %.3f since last time we propagated\n" RESET, message.timestamp - state->_timestamp);return;}has_moved_since_zupt = true;//===================================================================================// MSCKF features and KLT tracks that are SLAM features//===================================================================================

//MSCKF特征和KLT跟踪SLAM特征// Now, lets get all features that should be used for an update that are lost in the newest frame// We explicitly request features that have not been deleted (used) in another update step// 现在,让我们获取应该用于更新但在最新帧中丢失的所有特征// 我们明确请求在另一个更新步骤中尚未删除(使用)的功能std::vector<std::shared_ptr<Feature>> feats_lost, feats_marg, feats_slam;feats_lost = trackFEATS->get_feature_database()->features_not_containing_newer(state->_timestamp, false, true);// Don't need to get the oldest features until we reach our max number of clones

//不需要获得最旧的特征,知道我们到达克隆的最大数量if ((int)state->_clones_IMU.size() > state->_options.max_clone_size || (int)state->_clones_IMU.size() > 5) {feats_marg = trackFEATS->get_feature_database()->features_containing(state->margtimestep(), false, true);if (trackARUCO != nullptr && message.timestamp - startup_time >= params.dt_slam_delay) {feats_slam = trackARUCO->get_feature_database()->features_containing(state->margtimestep(), false, true);}}// Remove any lost features that were from other image streams// E.g: if we are cam1 and cam0 has not processed yet, we don't want to try to use those in the update yet// E.g: thus we wait until cam0 process its newest image to remove features which were seen from that camera//删除其他图像流中丢失的所有特征// 例如:如果我们的 cam1 和 cam0 尚未处理,我们还不想在更新中尝试使用它们// 例如:因此我们等到 cam0 处理其最新图像以删除从该相机看到的特征auto it1 = feats_lost.begin();while (it1 != feats_lost.end()) {bool found_current_message_camid = false;for (const auto &camuvpair : (*it1)->uvs) {if (std::find(message.sensor_ids.begin(), message.sensor_ids.end(), camuvpair.first) != message.sensor_ids.end()) {found_current_message_camid = true;break;}}if (found_current_message_camid) {it1++;} else {it1 = feats_lost.erase(it1);}}// We also need to make sure that the max tracks does not contain any lost features// This could happen if the feature was lost in the last frame, but has a measurement at the marg timestep// 我们还需要确保最大轨道不包含任何丢失的特征// 如果该特征在最后一帧中丢失,但在 Marg 时间步长处有测量,则可能会发生这种情况it1 = feats_lost.begin();while (it1 != feats_lost.end()) {if (std::find(feats_marg.begin(), feats_marg.end(), (*it1)) != feats_marg.end()) {// PRINT_WARNING(YELLOW "FOUND FEATURE THAT WAS IN BOTH feats_lost and feats_marg!!!!!!\n" RESET);it1 = feats_lost.erase(it1);} else {it1++;}}// Find tracks that have reached max length, these can be made into SLAM features// 找到已经达到最大长度的轨迹,这些可以制作成SLAM特征std::vector<std::shared_ptr<Feature>> feats_maxtracks;auto it2 = feats_marg.begin();while (it2 != feats_marg.end()) {// See if any of our camera's reached max track//查看是否有我们的相机达到最大跟踪bool reached_max = false;for (const auto &cams : (*it2)->timestamps) {if ((int)cams.second.size() > state->_options.max_clone_size) {reached_max = true;break;}}// If max track, then add it to our possible slam feature list//如果是最大跟踪则将他加入到我们可能的slam特征列表if (reached_max) {feats_maxtracks.push_back(*it2);it2 = feats_marg.erase(it2);} else {it2++;}}// Count how many aruco tags we have in our state//计数在我们的状态中有多少aruco标签int curr_aruco_tags = 0;auto it0 = state->_features_SLAM.begin();while (it0 != state->_features_SLAM.end()) {if ((int)(*it0).second->_featid <= 4 * state->_options.max_aruco_features)curr_aruco_tags++;it0++;}// Append a new SLAM feature if we have the room to do so// Also check that we have waited our delay amount (normally prevents bad first set of slam points)// 如果我们有空间的话,添加一个新的 SLAM 功能// 还要检查我们是否已经等待了延迟量(通常可以防止第一组猛击点出现问题)if (state->_options.max_slam_features > 0 && message.timestamp - startup_time >= params.dt_slam_delay &&(int)state->_features_SLAM.size() < state->_options.max_slam_features + curr_aruco_tags) {// Get the total amount to add, then the max amount that we can add given our marginalize feature array// 获取要添加的总量,然后是给定边缘化特征数组时我们可以添加的最大数量int amount_to_add = (state->_options.max_slam_features + curr_aruco_tags) - (int)state->_features_SLAM.size();int valid_amount = (amount_to_add > (int)feats_maxtracks.size()) ? (int)feats_maxtracks.size() : amount_to_add;// If we have at least 1 that we can add, lets add it!// Note: we remove them from the feat_marg array since we don't want to reuse information...// 如果我们至少有 1 个可以添加,就添加它!// 注意:我们从 feat_marg 数组中删除它们,因为我们不想重用信息...if (valid_amount > 0) {feats_slam.insert(feats_slam.end(), feats_maxtracks.end() - valid_amount, feats_maxtracks.end());feats_maxtracks.erase(feats_maxtracks.end() - valid_amount, feats_maxtracks.end());}}// Loop through current SLAM features, we have tracks of them, grab them for this update!// NOTE: if we have a slam feature that has lost tracking, then we should marginalize it out// NOTE: we only enforce this if the current camera message is where the feature was seen from// NOTE: if you do not use FEJ, these types of slam features *degrade* the estimator performance....// NOTE: we will also marginalize SLAM features if they have failed their update a couple times in a row// 循环当前的 SLAM 功能,我们有它们的踪迹,抓住它们进行这次更新!// 注意:如果我们有一个失去跟踪的 slam 功能,那么我们应该将其边缘化// 注意:如果当前相机消息是从其中看到该功能的位置,我们只会强制执行此操作// 注意:如果您不使用 FEJ,这些类型的 slam 功能会*降低*估计器的性能......// 注意:如果 SLAM 功能连续几次更新失败,我们也会将其边缘化for (std::pair<const size_t, std::shared_ptr<Landmark>> &landmark : state->_features_SLAM) {if (trackARUCO != nullptr) {std::shared_ptr<Feature> feat1 = trackARUCO->get_feature_database()->get_feature(landmark.second->_featid);if (feat1 != nullptr)feats_slam.push_back(feat1);}std::shared_ptr<Feature> feat2 = trackFEATS->get_feature_database()->get_feature(landmark.second->_featid);if (feat2 != nullptr)feats_slam.push_back(feat2);assert(landmark.second->_unique_camera_id != -1);bool current_unique_cam =std::find(message.sensor_ids.begin(), message.sensor_ids.end(), landmark.second->_unique_camera_id) != message.sensor_ids.end();if (feat2 == nullptr && current_unique_cam)landmark.second->should_marg = true;if (landmark.second->update_fail_count > 1)landmark.second->should_marg = true;}// Lets marginalize out all old SLAM features here// These are ones that where not successfully tracked into the current frame// We do *NOT* marginalize out our aruco tags landmarks// 让我们在这里边缘化所有旧的 SLAM 功能// 这些是未成功跟踪到当前帧的帧// 我们*不*边缘化我们的aruco标签地标StateHelper::marginalize_slam(state);// Separate our SLAM features into new ones, and old ones//将我们的SLAM特征分为新的和旧的std::vector<std::shared_ptr<Feature>> feats_slam_DELAYED, feats_slam_UPDATE;for (size_t i = 0; i < feats_slam.size(); i++) {if (state->_features_SLAM.find(feats_slam.at(i)->featid) != state->_features_SLAM.end()) {feats_slam_UPDATE.push_back(feats_slam.at(i));// PRINT_DEBUG("[UPDATE-SLAM]: found old feature %d (%d// measurements)\n",(int)feats_slam.at(i)->featid,(int)feats_slam.at(i)->timestamps_left.size());} else {feats_slam_DELAYED.push_back(feats_slam.at(i));// PRINT_DEBUG("[UPDATE-SLAM]: new feature ready %d (%d// measurements)\n",(int)feats_slam.at(i)->featid,(int)feats_slam.at(i)->timestamps_left.size());}}// Concatenate our MSCKF feature arrays (i.e., ones not being used for slam updates)//连接我们的MSCKF特征数组std::vector<std::shared_ptr<Feature>> featsup_MSCKF = feats_lost;featsup_MSCKF.insert(featsup_MSCKF.end(), feats_marg.begin(), feats_marg.end());featsup_MSCKF.insert(featsup_MSCKF.end(), feats_maxtracks.begin(), feats_maxtracks.end());//===================================================================================// Now that we have a list of features, lets do the EKF update for MSCKF and SLAM!//===================================================================================

//现在我们有了一系列特征,接着做MSCKF和SLAM 的EKF更新// Sort based on track length// TODO: we should have better selection logic here (i.e. even feature distribution in the FOV etc..)// TODO: right now features that are "lost" are at the front of this vector, while ones at the end are long-tracks// 根据轨道长度排序// TODO:我们应该在这里有更好的选择逻辑(即,FOV 中的特征分布等)// TODO:现在“丢失”的特征位于该向量的前面,而末尾的特征是长轨道auto compare_feat = [](const std::shared_ptr<Feature> &a, const std::shared_ptr<Feature> &b) -> bool {size_t asize = 0;size_t bsize = 0;for (const auto &pair : a->timestamps)asize += pair.second.size();for (const auto &pair : b->timestamps)bsize += pair.second.size();return asize < bsize;};std::sort(featsup_MSCKF.begin(), featsup_MSCKF.end(), compare_feat);// Pass them to our MSCKF updater// NOTE: if we have more then the max, we select the "best" ones (i.e. max tracks) for this update// NOTE: this should only really be used if you want to track a lot of features, or have limited computational resources// 将它们传递给我们的 MSCKF 更新程序// 注意:如果我们有比最大还多的,我们会选择“最佳”(即最大跟踪)进行此更新// 注意:只有当您想要跟踪大量特征或计算资源有限时才应该使用此方法if ((int)featsup_MSCKF.size() > state->_options.max_msckf_in_update)featsup_MSCKF.erase(featsup_MSCKF.begin(), featsup_MSCKF.end() - state->_options.max_msckf_in_update);updaterMSCKF->update(state, featsup_MSCKF);propagator->invalidate_cache();rT4 = boost::posix_time::microsec_clock::local_time();// Perform SLAM delay init and update// NOTE: that we provide the option here to do a *sequential* update// NOTE: this will be a lot faster but won't be as accurate.// 执行SLAM延迟初始化和更新// 注意:我们在这里提供了执行*顺序*更新的选项// 注意:这会快得多,但不会那么准确。std::vector<std::shared_ptr<Feature>> feats_slam_UPDATE_TEMP;while (!feats_slam_UPDATE.empty()) {// Get sub vector of the features we will update with//得到我们要更新的特征子向量std::vector<std::shared_ptr<Feature>> featsup_TEMP;featsup_TEMP.insert(featsup_TEMP.begin(), feats_slam_UPDATE.begin(),feats_slam_UPDATE.begin() + std::min(state->_options.max_slam_in_update, (int)feats_slam_UPDATE.size()));feats_slam_UPDATE.erase(feats_slam_UPDATE.begin(),feats_slam_UPDATE.begin() + std::min(state->_options.max_slam_in_update, (int)feats_slam_UPDATE.size()));// Do the update//做更新updaterSLAM->update(state, featsup_TEMP);feats_slam_UPDATE_TEMP.insert(feats_slam_UPDATE_TEMP.end(), featsup_TEMP.begin(), featsup_TEMP.end());propagator->invalidate_cache();}feats_slam_UPDATE = feats_slam_UPDATE_TEMP;rT5 = boost::posix_time::microsec_clock::local_time();updaterSLAM->delayed_init(state, feats_slam_DELAYED);rT6 = boost::posix_time::microsec_clock::local_time();//===================================================================================// Update our visualization feature set, and clean up the old features//===================================================================================

//更新我们的可视化特征集并清除旧的特征// Re-triangulate all current tracks in the current frame//对于当前帧下所有目前跟踪进行重新三角化if (message.sensor_ids.at(0) == 0) {// Re-triangulate featuresretriangulate_active_tracks(message);//特征重新三角化// Clear the MSCKF features only on the base camera// Thus we should be able to visualize the other unique camera stream// MSCKF features as they will also be appended to the vector// 仅清除基础相机上的 MSCKF 特征// 因此我们应该能够可视化其他独特的相机流// MSCKF 特征,因为它们也将被附加到向量中good_features_MSCKF.clear();}// Save all the MSCKF features used in the update//保存更新中使用的所有 MSCKF 功能for (auto const &feat : featsup_MSCKF) {good_features_MSCKF.push_back(feat->p_FinG);feat->to_delete = true;}//===================================================================================// Cleanup, marginalize out what we don't need any more...//===================================================================================//清理,边缘化我们不再需要的东西......// Remove features that where used for the update from our extractors at the last timestep// This allows for measurements to be used in the future if they failed to be used this time// Note we need to do this before we feed a new image, as we want all new measurements to NOT be deleted// 在最后一个时间步从提取器中删除用于更新的特征// 如果这次未能使用,这允许将来使用测量值// 请注意,我们需要在提供新图像之前执行此操作,因为我们希望不删除所有新测量值trackFEATS->get_feature_database()->cleanup();if (trackARUCO != nullptr) {trackARUCO->get_feature_database()->cleanup();}// First do anchor change if we are about to lose an anchor pose// 如果我们即将失去锚点姿态,首先进行锚点更改updaterSLAM->change_anchors(state);// Cleanup any features older than the marginalization time//清楚所有比边缘化时间早的特征if ((int)state->_clones_IMU.size() > state->_options.max_clone_size) {trackFEATS->get_feature_database()->cleanup_measurements(state->margtimestep());if (trackARUCO != nullptr) {trackARUCO->get_feature_database()->cleanup_measurements(state->margtimestep());}}// Finally marginalize the oldest clone if needed// 如果需要的话,最后边缘化最旧的克隆StateHelper::marginalize_old_clone(state);rT7 = boost::posix_time::microsec_clock::local_time();//===================================================================================// Debug info, and stats tracking//===================================================================================

//调试信息和统计跟踪// Get timing statitics information//获取时序统计信息double time_track = (rT2 - rT1).total_microseconds() * 1e-6;double time_prop = (rT3 - rT2).total_microseconds() * 1e-6;double time_msckf = (rT4 - rT3).total_microseconds() * 1e-6;double time_slam_update = (rT5 - rT4).total_microseconds() * 1e-6;double time_slam_delay = (rT6 - rT5).total_microseconds() * 1e-6;double time_marg = (rT7 - rT6).total_microseconds() * 1e-6;double time_total = (rT7 - rT1).total_microseconds() * 1e-6;// Timing information//时序信息PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for tracking\n" RESET, time_track);PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for propagation\n" RESET, time_prop);PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for MSCKF update (%d feats)\n" RESET, time_msckf, (int)featsup_MSCKF.size());if (state->_options.max_slam_features > 0) {PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for SLAM update (%d feats)\n" RESET, time_slam_update, (int)state->_features_SLAM.size());PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for SLAM delayed init (%d feats)\n" RESET, time_slam_delay, (int)feats_slam_DELAYED.size());}PRINT_DEBUG(BLUE "[TIME]: %.4f seconds for re-tri & marg (%d clones in state)\n" RESET, time_marg, (int)state->_clones_IMU.size());std::stringstream ss;ss << "[TIME]: " << std::setprecision(4) << time_total << " seconds for total (camera";for (const auto &id : message.sensor_ids) {ss << " " << id;}ss << ")" << std::endl;PRINT_DEBUG(BLUE "%s" RESET, ss.str().c_str());// Finally if we are saving stats to file, lets save it to file//最后,如果我们将统计数据保存到文件,让我们将其保存到文件if (params.record_timing_information && of_statistics.is_open()) {// We want to publish in the IMU clock frame// The timestamp in the state will be the last camera time// 我们要在 IMU 时钟帧中发布// 状态中的时间戳将是最后一个相机时间double t_ItoC = state->_calib_dt_CAMtoIMU->value()(0);double timestamp_inI = state->_timestamp + t_ItoC;// Append to the file// 追加到文件of_statistics << std::fixed << std::setprecision(15) << timestamp_inI << "," << std::fixed << std::setprecision(5) << time_track << ","<< time_prop << "," << time_msckf << ",";if (state->_options.max_slam_features > 0) {of_statistics << time_slam_update << "," << time_slam_delay << ",";}of_statistics << time_marg << "," << time_total << std::endl;of_statistics.flush();}// Update our distance traveled// 更新我们行驶的距离if (timelastupdate != -1 && state->_clones_IMU.find(timelastupdate) != state->_clones_IMU.end()) {Eigen::Matrix<double, 3, 1> dx = state->_imu->pos() - state->_clones_IMU.at(timelastupdate)->pos();distance += dx.norm();}timelastupdate = message.timestamp;// Debug, print our current statePRINT_INFO("q_GtoI = %.3f,%.3f,%.3f,%.3f | p_IinG = %.3f,%.3f,%.3f | dist = %.2f (meters)\n", state->_imu->quat()(0),state->_imu->quat()(1), state->_imu->quat()(2), state->_imu->quat()(3), state->_imu->pos()(0), state->_imu->pos()(1),state->_imu->pos()(2), distance);PRINT_INFO("bg = %.4f,%.4f,%.4f | ba = %.4f,%.4f,%.4f\n", state->_imu->bias_g()(0), state->_imu->bias_g()(1), state->_imu->bias_g()(2),state->_imu->bias_a()(0), state->_imu->bias_a()(1), state->_imu->bias_a()(2));// Debug for camera imu offsetif (state->_options.do_calib_camera_timeoffset) {PRINT_INFO("camera-imu timeoffset = %.5f\n", state->_calib_dt_CAMtoIMU->value()(0));}// Debug for camera intrinsicsif (state->_options.do_calib_camera_intrinsics) {for (int i = 0; i < state->_options.num_cameras; i++) {std::shared_ptr<Vec> calib = state->_cam_intrinsics.at(i);PRINT_INFO("cam%d intrinsics = %.3f,%.3f,%.3f,%.3f | %.3f,%.3f,%.3f,%.3f\n", (int)i, calib->value()(0), calib->value()(1),calib->value()(2), calib->value()(3), calib->value()(4), calib->value()(5), calib->value()(6), calib->value()(7));}}// Debug for camera extrinsicsif (state->_options.do_calib_camera_pose) {for (int i = 0; i < state->_options.num_cameras; i++) {std::shared_ptr<PoseJPL> calib = state->_calib_IMUtoCAM.at(i);PRINT_INFO("cam%d extrinsics = %.3f,%.3f,%.3f,%.3f | %.3f,%.3f,%.3f\n", (int)i, calib->quat()(0), calib->quat()(1), calib->quat()(2),calib->quat()(3), calib->pos()(0), calib->pos()(1), calib->pos()(2));}}// Debug for imu intrinsicsif (state->_options.do_calib_imu_intrinsics && state->_options.imu_model == StateOptions::ImuModel::KALIBR) {PRINT_INFO("q_GYROtoI = %.3f,%.3f,%.3f,%.3f\n", state->_calib_imu_GYROtoIMU->value()(0), state->_calib_imu_GYROtoIMU->value()(1),state->_calib_imu_GYROtoIMU->value()(2), state->_calib_imu_GYROtoIMU->value()(3));}if (state->_options.do_calib_imu_intrinsics && state->_options.imu_model == StateOptions::ImuModel::RPNG) {PRINT_INFO("q_ACCtoI = %.3f,%.3f,%.3f,%.3f\n", state->_calib_imu_ACCtoIMU->value()(0), state->_calib_imu_ACCtoIMU->value()(1),state->_calib_imu_ACCtoIMU->value()(2), state->_calib_imu_ACCtoIMU->value()(3));}if (state->_options.do_calib_imu_intrinsics && state->_options.imu_model == StateOptions::ImuModel::KALIBR) {PRINT_INFO("Dw = | %.4f,%.4f,%.4f | %.4f,%.4f | %.4f |\n", state->_calib_imu_dw->value()(0), state->_calib_imu_dw->value()(1),state->_calib_imu_dw->value()(2), state->_calib_imu_dw->value()(3), state->_calib_imu_dw->value()(4),state->_calib_imu_dw->value()(5));PRINT_INFO("Da = | %.4f,%.4f,%.4f | %.4f,%.4f | %.4f |\n", state->_calib_imu_da->value()(0), state->_calib_imu_da->value()(1),state->_calib_imu_da->value()(2), state->_calib_imu_da->value()(3), state->_calib_imu_da->value()(4),state->_calib_imu_da->value()(5));}if (state->_options.do_calib_imu_intrinsics && state->_options.imu_model == StateOptions::ImuModel::RPNG) {PRINT_INFO("Dw = | %.4f | %.4f,%.4f | %.4f,%.4f,%.4f |\n", state->_calib_imu_dw->value()(0), state->_calib_imu_dw->value()(1),state->_calib_imu_dw->value()(2), state->_calib_imu_dw->value()(3), state->_calib_imu_dw->value()(4),state->_calib_imu_dw->value()(5));PRINT_INFO("Da = | %.4f | %.4f,%.4f | %.4f,%.4f,%.4f |\n", state->_calib_imu_da->value()(0), state->_calib_imu_da->value()(1),state->_calib_imu_da->value()(2), state->_calib_imu_da->value()(3), state->_calib_imu_da->value()(4),state->_calib_imu_da->value()(5));}if (state->_options.do_calib_imu_intrinsics && state->_options.do_calib_imu_g_sensitivity) {PRINT_INFO("Tg = | %.4f,%.4f,%.4f | %.4f,%.4f,%.4f | %.4f,%.4f,%.4f |\n", state->_calib_imu_tg->value()(0),state->_calib_imu_tg->value()(1), state->_calib_imu_tg->value()(2), state->_calib_imu_tg->value()(3),state->_calib_imu_tg->value()(4), state->_calib_imu_tg->value()(5), state->_calib_imu_tg->value()(6),state->_calib_imu_tg->value()(7), state->_calib_imu_tg->value()(8));}

}

)

进程的退出码)

)

)

真题试卷)

宏学习)

)

)