爬取的目标网址:https://music.douban.com/top250

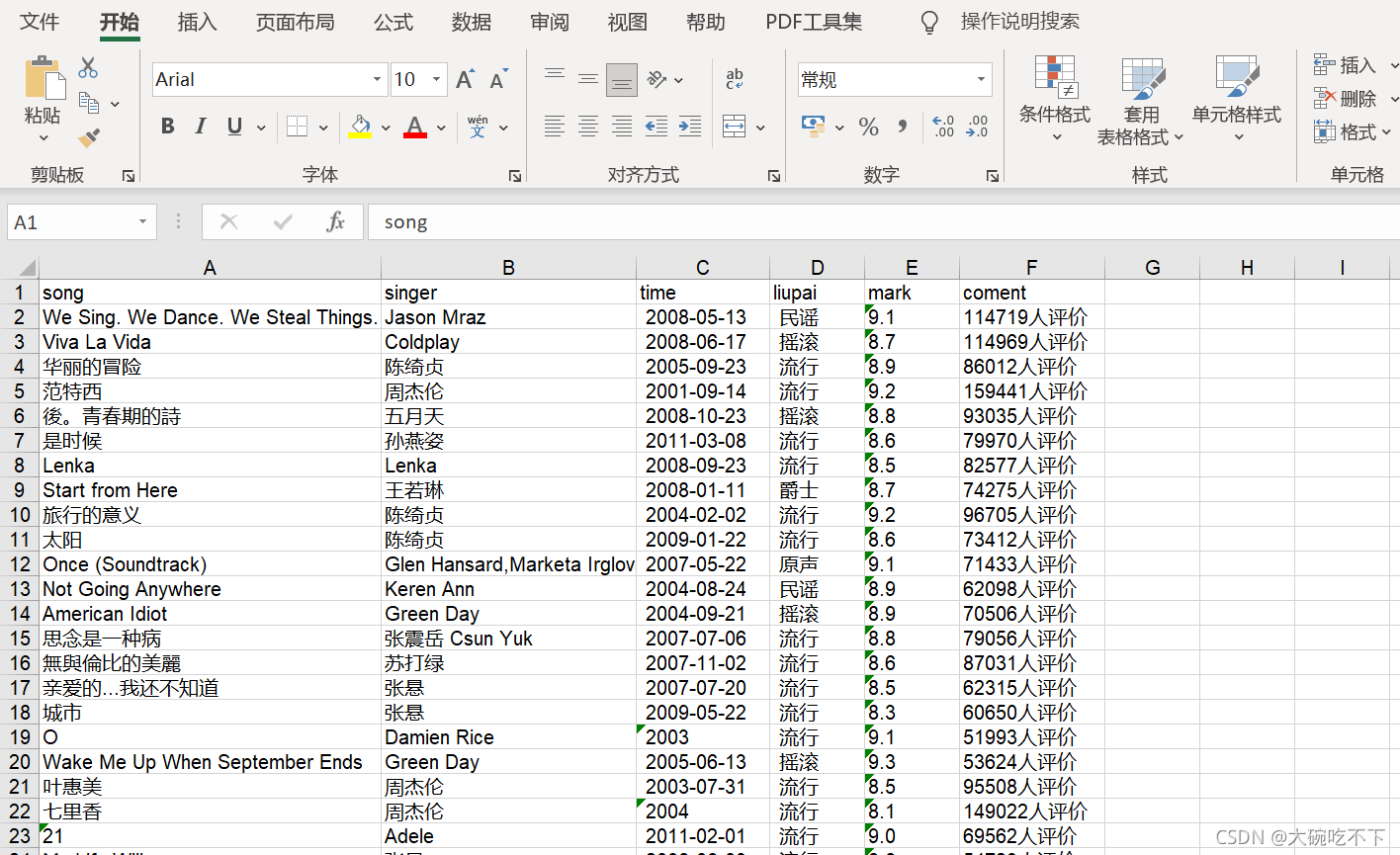

利用lxml库,获取前10页的信息,需要爬取的信息包括歌曲名、表演者、流派、发行时间、评分和评论人数,把这些信息存到csv和xls文件

在爬取的数据保存到csv文件时,有可能每一行数据后都会出现空一行,查阅资料后,发现用newline=’'可解决,但又会出现错误:‘gbk’ codec can’t encode character ‘\xb3’ in position 1: illegal multibyte sequence,然后可用encoding = "gb18030"解决

代码如下:

import xlwt

import csv

import requests

from lxml import etree

import timelist_music = []

#请求头

headers={"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36"}

#爬取的内容保存在csv文件中

f = open(r"D:\Python爬虫\doubanmusic.csv","w+",newline = '',encoding = "gb18030")

writer = csv.writer(f,dialect = 'excel')

#csv文件中第一行写入标题

writer.writerow(["song","singer","time","liupai","mark","coment"])#定义爬取内容的函数

def music_info(url):html = requests.get(url,headers=headers)selector = etree.HTML(html.text)infos = selector.xpath('//tr[@class="item"]')for info in infos:song = info.xpath('td[2]/div/a/text()')[0].strip()singer = info.xpath('td[2]/div/p/text()')[0].split("/")[0]times = info.xpath('td[2]/div/p/text()')[0].split("/")[1]liupai = info.xpath('td[2]/div/p/text()')[0].split("/")[-1]mark = info.xpath('td[2]/div/div/span[2]/text()')[0].strip()coment = info.xpath('td[2]/div/div/span[3]/text()')[0].strip().strip("(").strip(")").strip()list_info = [song,singer,times,liupai,mark,coment]writer.writerow([song,singer,times,liupai,mark,coment])list_music.append(list_info)#防止请求频繁,故睡眠1秒time.sleep(1)if __name__ == '__main__':urls = ['https://music.douban.com/top250?start={}'.format(str(i)) for i in range(0,250,25)]#调用函数music_info循环爬取内容for url in urls:music_info(url)#关闭csv文件f.close()#爬取的内容保存在xls文件中header = ["song","singer","time","liupai","mark","coment"]#打开工作簿book = xlwt.Workbook(encoding='utf-8')#建立Sheet1工作表sheet = book.add_sheet('Sheet1')for h in range(len(header)):sheet.write(0, h, header[h])i = 1for list in list_music:j = 0for data in list:sheet.write(i, j, data)j += 1i += 1#保存文件book.save('doubanmusic.xls')

结果部分截图:

:主成分分析)