Use a Python crawler to collect the top-level domains and their WHOIS servers, and save them as whois-servers.txt, a file that WhoisCL.exe can use.

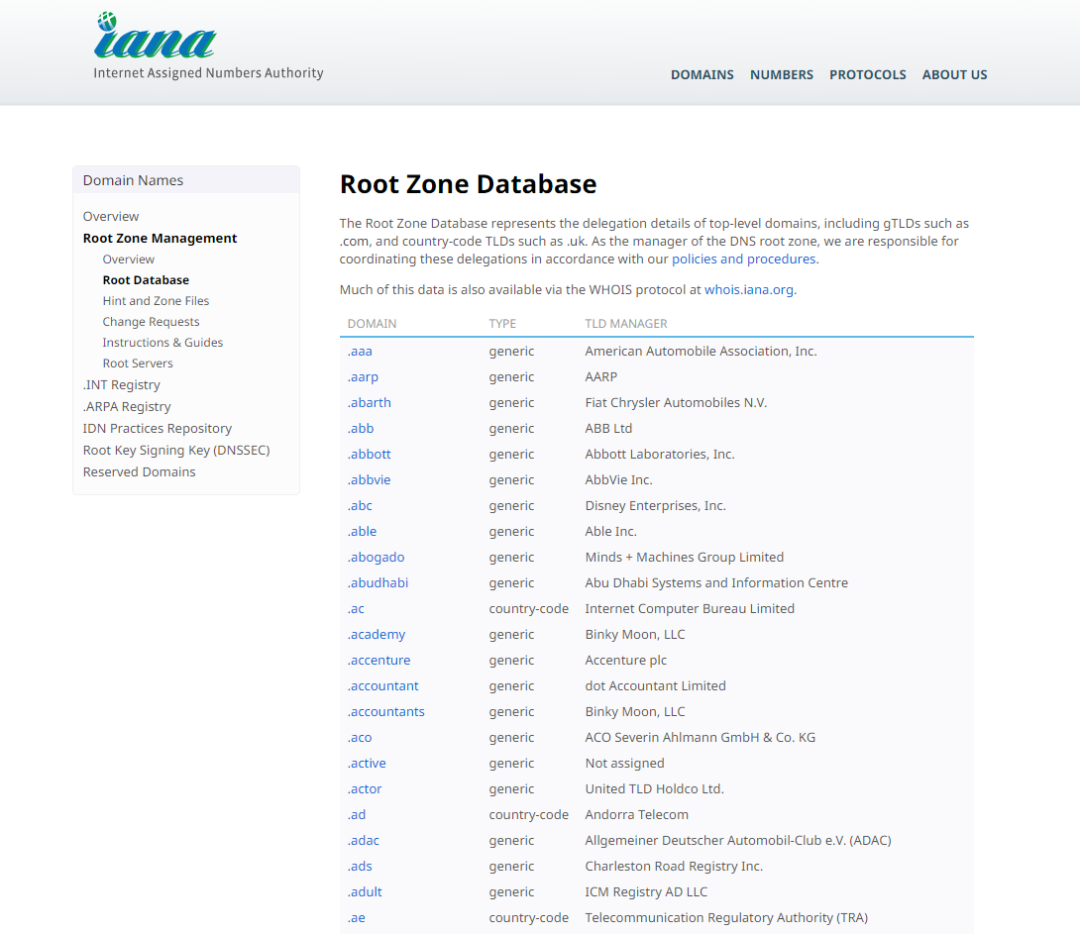

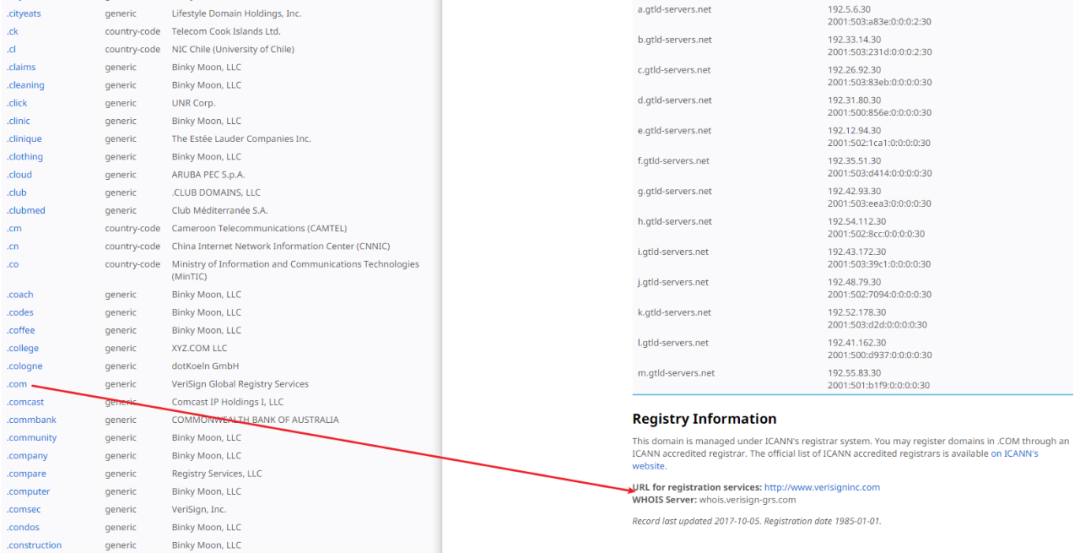


Environment:

Windows 10

Python 3.9.1

Getting the WHOIS server for a top-level domain

Click the top-level domain you want to look up, then scroll down to the WHOIS Server entry.

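Each domain suffix has its own WHOIS server.

Install the beautifulsoup4 and requests libraries:

    pip install beautifulsoup4
    python -m pip install requests

First, list every top-level domain on the IANA root zone database page:

    import requests
    from bs4 import BeautifulSoup

    iurl = 'https://www.iana.org/domains/root/db'
    res = requests.get(iurl, timeout=600)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    # Each top-level domain is read from a <span class="domain tld"> element.
    for tag in soup.find_all('span', class_='domain tld'):
        d_suffix = tag.get_text()
        print(d_suffix)

The complete script then visits each top-level domain's page, extracts the WHOIS server, and appends the result to whois-servers.txt:

    import requests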

    from bs4 import BeautifulSoup
    import re
    import time

    iurl = 'https://www.iana.org/domains/root/db'
    res = requests.get(iurl, timeout=600)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    # Compile the pattern once, outside the loop.
    retxt = re.compile(r'WHOIS Server: (.*?)\n')

    for tag in soup.find_all('span', class_='domain tld'):
        d_suffix = tag.get_text()
        print(d_suffix)
        # Drop the leading dot to get the bare suffix.
        n_suffix = d_suffix.split('.')[1]
        new_url = iurl + '/' + n_suffix
        server = ''
        try:
            res2 = requests.get(new_url, timeout=600)
            res2.encoding = 'utf-8'
            soup2 = BeautifulSoup(res2.text, 'html.parser')
            # Match against the page's visible text so that HTML tags
            # around the label do not break the pattern.
            arr = retxt.findall(soup2.get_text())
            if len(arr) > 0:
                server = arr[0].strip()
            print(server)
            # Pause between requests.
            time.sleep(1)
        except Exception as e:
            print('Request failed or timed out:', e)
        # Append one "<suffix> <server>" line per top-level domain;
        # the server part stays empty when none was found.
        with open('whois-servers.txt', 'a', encoding='utf-8') as my_file:
            my_file.write(n_suffix + ' ' + server + '\n')

    print('Crawl finished')
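To check the generated file, it can be read back into a dictionary and queried by suffix. The following is a minimal sketch, assuming each line has the form `<suffix> <server>` as written by the script above. The helper names `load_whois_servers` and `server_for` are hypothetical and are not part of WhoisCL.exe or any library.

```python
from pathlib import Path


def load_whois_servers(path):
    """Parse '<suffix> <server>' lines into a dict (hypothetical helper)."""
    servers = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        parts = line.split()
        # Lines without a server (the crawler writes an empty string
        # when none was found) have only one field and are skipped.
        if len(parts) == 2:
            suffix, server = parts
            servers[suffix.lower()] = server
    return servers


def server_for(domain, servers):
    """Return the WHOIS server for the domain's last label, or None."""
    suffix = domain.rstrip(".").rsplit(".", 1)[-1].lower()
    return servers.get(suffix)
```

For example, `server_for("example.com", load_whois_servers("whois-servers.txt"))` returns whatever server the crawler recorded for `com`, or `None` if that line had no server.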