- Problem description

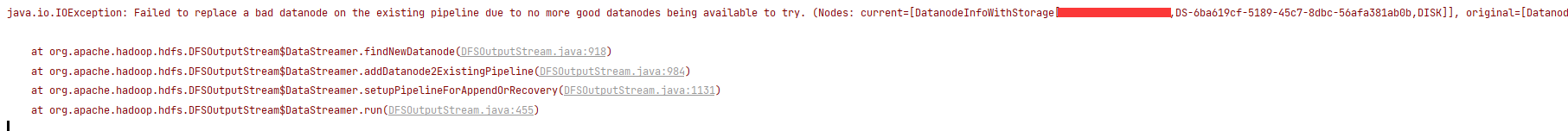

When using the HDFS API from IntelliJ IDEA on a Windows machine to append content to a file in HDFS on an Aliyun server, the following error occurred:

java.io.IOException: Failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try. (Nodes: current=[DatanodeInfoWithStorage[172.25.55.228:9866,DS-6ba619cf-5189-45c7-8dbc-56afa381ab0b,DISK]], original=[DatanodeInfoWithStorage[172.25.55.228:9866,DS-6ba619cf-5189-45c7-8dbc-56afa381ab0b,DISK]]). The current failed datanode replacement policy is DEFAULT, and a client may configure this via 'dfs.client.block.write.replace-datanode-on-failure.policy' in its configuration.

`at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.findNewDatanode(DFSOutputStream.java:918)`

`at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:984)`

`at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1131)`

`at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:455)`

- Solution

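Both the current and the original pipeline contain only one datanode (172.25.55.228), so the cluster has a single datanode. Under the DEFAULT policy, when the replication factor is 3 or more (the client-side default `dfs.replication` is 3), an append on a pipeline with fewer datanodes than the replication factor makes the client try to add a replacement datanode. There is no other datanode to add, so the append fails. Setting the policy to NEVER tells the client not to look for a replacement. In the client code in IDEA, set it on the `Configuration` before the `FileSystem` is obtained: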

`configuration.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");`
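To see why NEVER avoids the error, here is a small, hypothetical Java model of the three policies. It is written from the description of `dfs.client.block.write.replace-datanode-on-failure.policy` in `hdfs-default.xml`, where r is the replication factor and n is the number of datanodes still in the pipeline. It is not Hadoop's own code. The class and method names are made up for illustration, and r = 3 is assumed from the client-side default `dfs.replication`.

```java
// Hypothetical model of the replace-datanode-on-failure policies, based on the
// description in hdfs-default.xml. This is NOT Hadoop's actual implementation.
public class ReplaceDatanodePolicyModel {

    enum Policy { ALWAYS, NEVER, DEFAULT }

    /**
     * Returns true if the client would try to add a replacement datanode.
     *
     * @param policy         value of dfs.client.block.write.replace-datanode-on-failure.policy
     * @param replication    r, the file's replication factor
     * @param existing       n, the number of datanodes still in the pipeline
     * @param appendOrHflush whether the block is being appended or was hflushed
     */
    static boolean wantsReplacement(Policy policy, int replication, int existing,
                                    boolean appendOrHflush) {
        switch (policy) {
            case ALWAYS:
                return true;   // always add a new datanode
            case NEVER:
                return false;  // never add a new datanode
            case DEFAULT:
            default:
                if (replication < 3) {
                    return false;
                }
                // floor(r/2) >= n (integer division), or r > n for an appended/hflushed block
                return replication / 2 >= existing
                        || (replication > existing && appendOrHflush);
        }
    }

    public static void main(String[] args) {
        int replication = 3;   // assumption: client default dfs.replication
        int existing = 1;      // the single datanode 172.25.55.228 from the error message
        boolean append = true; // the failing operation was an append

        for (Policy p : Policy.values()) {
            System.out.println(p + " -> wants replacement: "
                    + wantsReplacement(p, replication, existing, append));
        }
    }
}
```

Running `main` prints `true` for ALWAYS and DEFAULT and `false` for NEVER. On a one-datanode cluster, every "true" ends in the exception above because there is no datanode left to add. The `hdfs-default.xml` description notes that for very small clusters (3 nodes or fewer), administrators may want to use NEVER or disable the feature via `dfs.client.block.write.replace-datanode-on-failure.enable`. The trade-off is that, after a datanode failure, a write continues on fewer replicas instead of being rebuilt on a new node.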
