Using GPUs in a Kubernetes cluster and installing Kubeflow 1.0 RC: step by step

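Kubeflow use cases

- You want to train TensorFlow models and serve them through a model API in a k8s environment (e.g. local, on-prem, cloud).
- You want to debug code in Jupyter notebooks, with a multi-user notebook server.
- Training jobs need their CPU or GPU resources scheduled and orchestrated.
- You want to combine TensorFlow with other components to publish services.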

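Dependencies

- ksonnet 0.11.0 or later (download it directly from GitHub and scp the ks binary to /usr/local/bin)
- Kubernetes 1.8 or later (when using CCE service nodes directly, create a CCE cluster with several nodes and bind an EIP to one of them)
- kubectl tools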

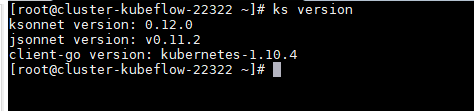

1. Install ksonnet

Check the ksonnet releases page on GitHub for the latest ks version, then download and install it:

wget https://github.com/ksonnet/ksonnet/releases/download/v0.13.0/ks_0.13.0_linux_amd64.tar.gz

tar -vxf ks_0.13.0_linux_amd64.tar.gz

cd ks_0.13.0_linux_amd64

sudo cp ks /usr/local/bin
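The download URL above follows a regular pattern, so it can be built from the version number. This is a minimal sketch, assuming other releases use the same asset naming as v0.13.0; the function name ks_release_url is a hypothetical helper, not part of ksonnet.

```shell
# Build the ksonnet release URL for a given version, following the naming
# pattern of the v0.13.0 URL used above (an assumption for other versions).
ks_release_url() {
  version="$1"
  printf 'https://github.com/ksonnet/ksonnet/releases/download/v%s/ks_%s_linux_amd64.tar.gz\n' \
    "$version" "$version"
}

# Print the URL; pass it to wget to download the archive.
ks_release_url 0.13.0
```

Keeping the version in one place makes it harder for the tar and cd steps to drift apart from the downloaded file name.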

Once ksonnet is installed, install the GPU driver

sudo yum-config-manager --add-repo http://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64/cuda-rhel7.repo

sudo yum clean all

sudo yum -y install nvidia-driver-latest-dkms cuda

sudo yum -y install cuda-drivers

If gcc dependencies are missing, run the following commands:

yum install kernel-devel kernel-doc kernel-headers gcc\* glibc\* glibc-\*

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum install -y kmod-nvidia

Add the rdblacklist=nouveau option to GRUB_CMDLINE_LINUX, then blacklist the nouveau module:

echo -e "blacklist nouveau\noptions nouveau modeset=0" > /etc/modprobe.d/blacklist.conf

Reboot, then check whether nouveau has been disabled:

lsmod|grep nouv

No output means nouveau has been disabled.
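The same check can be turned into an explicit message, which is easier to read in a provisioning log than an empty result. This is a small sketch; the function name nouveau_status is hypothetical, and the sample lines are made up to mimic the column layout of lsmod.

```shell
# Report whether the nouveau module appears in lsmod-style output
# read from standard input. The function name is hypothetical.
nouveau_status() {
  if grep -q '^nouveau[[:space:]]'; then
    echo "nouveau is still loaded"
  else
    echo "nouveau is disabled"
  fi
}

# On the real host: lsmod | nouveau_status
# Demonstration with made-up sample lines in lsmod's column layout:
printf 'Module Size Used by\nnouveau 1869689 0\n' | nouveau_status
printf 'Module Size Used by\nnvidia 19754209 0\n' | nouveau_status
```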

Check the server's GPU information:

    [root@master ~]# nvidia-smi
    Tue Jan 14 03:46:41 2020
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 440.44       Driver Version: 440.44       CUDA Version: 10.2     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  Tesla T4            Off  | 00000000:18:00.0 Off |                    0 |
    | N/A   29C    P8    10W /  70W |      0MiB / 15109MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+
    |   1  Tesla T4            Off  | 00000000:86:00.0 Off |                    0 |
    | N/A   25C    P8     9W /  70W |      0MiB / 15109MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+

Install NVIDIA Docker

curl -s -L https://nvidia.github.io/nvidia-docker/centos7/x86_64/nvidia-docker.repo | sudo tee /etc/yum.repos.d/nvidia-docker.repo

- Find the available NVIDIA Docker versions

yum search --showduplicates nvidia-docker

- Install NVIDIA Docker

The Docker version here is docker 18.09.7.ce, so install the NVIDIA Docker package below:

yum install -y nvidia-docker2

pkill -SIGHUP dockerd

Run nvidia-docker version to see which NVIDIA Docker version is installed.

Set Docker's default runtime to nvidia:

    [root@ks-allinone ~]# cat /etc/docker/daemon.json
    {
      "default-runtime": "nvidia",
      "runtimes": {
        "nvidia": {
          "path": "nvidia-container-runtime",
          "runtimeArgs": []
        }
      },
      "registry-mirrors": ["https://o96k4rm0.mirror.aliyuncs.com"]
    }

Restart Docker and k8s

systemctl daemon-reload

systemctl restart docker.service

systemctl restart kubelet

cd /etc/kubernetes/

curl -O https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/config/scheduler-policy-config.json

cd /tmp/

curl -O https://raw.githubusercontent.com/AliyunContainerService/gpushare-scheduler-extender/master/config/gpushare-schd-extender.yaml

kubectl create -f gpushare-schd-extender.yaml

kubectl create -f device-plugin-rbac.yaml

    # rbac.yaml
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: gpushare-device-plugin
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - update
      - patch
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
      - update
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: gpushare-device-plugin
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: gpushare-device-plugin
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: gpushare-device-plugin
    subjects:
    - kind: ServiceAccount
      name: gpushare-device-plugin
      namespace: kube-system

kubectl create -f device-plugin-ds.yaml

    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: gpushare-device-plugin-ds
      namespace: kube-system
    spec:
      template:
        metadata:
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ""
          labels:
            component: gpushare-device-plugin
            app: gpushare
            name: gpushare-device-plugin-ds
        spec:
          serviceAccount: gpushare-device-plugin
          hostNetwork: true
          nodeSelector:
            gpushare: "true"
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/acs/k8s-gpushare-plugin:v2-1.11-35eccab
            name: gpushare
            # Make this pod as Guaranteed pod which will never be evicted because of node's resource consumption.
            command:
            - gpushare-device-plugin-v2
            - -logtostderr
            - --v=5
            #- --memory-unit=Mi
            resources:
              limits:
                memory: "300Mi"
                cpu: "1"
              requests:
                memory: "300Mi"
                cpu: "1"
            env:
            - name: KUBECONFIG
              value: /etc/kubernetes/kubelet.conf
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                drop: ["ALL"]
            volumeMounts:
            - name: device-plugin
              mountPath: /var/lib/kubelet/device-plugins
          volumes:
          - name: device-plugin
            hostPath:
              path: /var/lib/kubelet/device-plugins

References

https://github.com/AliyunContainerService/gpushare-scheduler-extender

https://github.com/AliyunContainerService/gpushare-device-plugin

Label the nodes that share GPUs with gpushare:

kubectl label node mynode gpushare=true

Install the kubectl extension

curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.12.1/bin/linux/amd64/kubectl

chmod +x ./kubectl

sudo mv ./kubectl /usr/bin/kubectl

cd /usr/bin/

wget https://github.com/AliyunContainerService/gpushare-device-plugin/releases/download/v0.3.0/kubectl-inspect-gpushare

chmod u+x /usr/bin/kubectl-inspect-gpushare

kubectl inspect gpushare ## show GPU usage across the cluster

wget https://raw.githubusercontent.com/google/metallb/v0.7.3/manifests/metallb.yaml

kubectl apply -f metallb.yaml

metallb-config.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 10.18.5.30-10.18.5.50

kubectl apply -f metallb-config.yaml

kubectl apply -f tensorflow.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: tensorflow-gpu
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            name: tensorflow-gpu
        spec:
          containers:
          - name: tensorflow-gpu
            image: tensorflow/tensorflow:1.15.0-py3-jupyter
            imagePullPolicy: Never
            resources:
              limits:
                aliyun.com/gpu-mem: 1024
            ports:
            - containerPort: 8888
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: tensorflow-gpu
    spec:
      ports:
      - port: 8888
        targetPort: 8888
        nodePort: 30888
        name: jupyter
      selector:
        name: tensorflow-gpu
      type: NodePort

Check GPU usage across the cluster:

    [root@master ~]# kubectl inspect gpushare
    NAME    IPADDRESS   GPU0(Allocated/Total)  GPU1(Allocated/Total)  GPU Memory(MiB)
    master  10.18.5.20  1024/15109             0/15109                1024/30218
    node    10.18.5.21  0/15109                0/15109                0/30218
    ------------------------------------------------------------------
    Allocated/Total GPU Memory In Cluster:
    1024/60436 (1%)
    [root@master ~]#

To test GPU usage, scale the number of tensorflow-gpu pod replicas and change the GPU memory requested by each pod:

kubectl scale --current-replicas=1 --replicas=100 deployment/tensorflow-gpu

Testing gave the following results.

Environment

| Node | GPUs | GPU memory |
|---|---|---|
| master | 2 | 2 × 15109 MiB = 30218 MiB |
| node | 2 | 2 × 15109 MiB = 30218 MiB |

Test results

| GPU memory per pod | Pods | GPU utilization |
|---|---|---|
| 256 MiB | 183 | 77% |
| 512 MiB | 116 | 98% |
| 1024 MiB | 56 | 94% |
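The figures in the results table can be cross-checked with a little arithmetic. The sketch below assumes that "GPU utilization" means allocated GPU memory divided by the total GPU memory in the cluster (the test notes do not define it), and that a pod cannot span two GPUs, so each GPU fits floor(15109 / per-pod memory) pods. The function names are hypothetical.

```shell
# GPU memory figures taken from the environment table above.
GPU_MEM_MIB=15109   # memory per GPU
GPU_COUNT=4         # 2 GPUs on each of 2 nodes
TOTAL_MIB=$((GPU_MEM_MIB * GPU_COUNT))

# Upper bound on pods: each GPU fits floor(GPU memory / per-pod memory).
max_pods() {
  echo $(( (GPU_MEM_MIB / $1) * GPU_COUNT ))
}

# Allocated share of cluster GPU memory, as a truncated percentage.
utilization_pct() {
  pod_mem="$1"; pods="$2"
  echo $(( pod_mem * pods * 100 / TOTAL_MIB ))
}

# One row per test: per-pod memory (MiB) and observed pod count.
for row in "256 183" "512 116" "1024 56"; do
  set -- $row
  echo "$1 MiB x $2 pods: $(utilization_pct "$1" "$2")% allocated, bound $(max_pods "$1") pods"
done
```

Under those assumptions the truncated percentages come out as 77, 98 and 94, matching the table, and the 512 MiB and 1024 MiB pod counts equal the packing bound (116 and 56). The 256 MiB run stops at 183 pods, below its bound of 236, for reasons the test results do not record.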

Install Kubeflow (v1.0 RC)

tar -vxf ks_0.12.0_linux_amd64.tar.gz

cp ks_0.12.0_linux_amd64/* /usr/local/bin/

Download kfctl_v1.0-rc.3-1-g24b60e8_linux.tar.gz, then unpack it and copy kfctl onto the PATH:

tar -zxvf kfctl_v1.0-rc.3-1-g24b60e8_linux.tar.gz

cp kfctl /usr/bin/

Preparation: create the PVs and PVCs, using NFS as the file storage

Create the StorageClass

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: local-path
      namespace: kubeflow
    #provisioner: example.com/nfs
    provisioner: kubernetes.io/gce-pd
    parameters:
      type: pd-ssd

kubectl create -f storage.yml

yum install nfs-utils rpcbind

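Create the NFS export directories (at least four are needed):

mkdir -p /data/nfs

vim /etc/exports

Add the directory created above to /etc/exports, then restart the NFS server:

/data/nfs 192.168.122.0/24(rw,sync)

systemctl restart nfs-server.service

Create the PVs. Files mounted by different pods may have clashing names, so it is best to create several PVs and let each pod claim its own (at least four: one each for katib-mysql, metadata-mysql, minio and mysql).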

    [root@master pv]# cat mysql-pv.yml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: local-path  # change for each PVC
    spec:
      capacity:
        storage: 200Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      storageClassName: local-path
      nfs:
        path: /data/nfs  # change for each PVC
        server: 10.18.5.20
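Since at least four PVs are needed and only the name and NFS path differ between them, the manifests can be generated in a loop. This is a sketch under assumptions: the PV names (suffix -pv), the per-component sub-directories under /data/nfs and the OUT_DIR variable are illustrative choices, not names Kubeflow requires; the server address, size and storage class are copied from the manifest above.

```shell
# Generate one PersistentVolume manifest per component, each with its own
# name and NFS sub-directory. Names, sub-directories and the output
# directory are illustrative assumptions.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
NFS_SERVER=10.18.5.20

for app in katib-mysql metadata-mysql minio mysql; do
  cat > "$OUT_DIR/$app-pv.yml" <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $app-pv
spec:
  capacity:
    storage: 200Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: local-path
  nfs:
    path: /data/nfs/$app
    server: $NFS_SERVER
EOF
done

# List the generated manifests.
ls "$OUT_DIR"
```

Each sub-directory would still have to be created on the NFS server and listed in /etc/exports before the files are applied with kubectl create -f.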

Create the kubeflow-anonymous namespace

kubectl create namespace kubeflow-anonymous

Download the Kubeflow 1.0 RC YAML file: https://github.com/kubeflow/manifests/blob/v1.0-branch/kfdef/kfctl_k8s_istio.yaml

    [root@master 2020-0219]# cat kfctl_k8s_istio.yaml
    apiVersion: kfdef.apps.kubeflow.org/v1
    kind: KfDef
    metadata:
      clusterName: kubernetes
      creationTimestamp: null
      name: 2020-0219
      namespace: kubeflow
    spec:
      applications:
      - kustomizeConfig:
          parameters:
          - name: namespace
            value: istio-system
          repoRef:
            name: manifests
            path: istio/istio-crds
        name: istio-crds
      - kustomizeConfig:
          parameters:
          - name: namespace
            value: istio-system
          repoRef:
            name: manifests
            path: istio/istio-install
        name: istio-install
      - kustomizeConfig:
          parameters:
          - name: namespace
            value: istio-system
          repoRef:
            name: manifests
            path: istio/cluster-local-gateway
        name: cluster-local-gateway
      - kustomizeConfig:
          parameters:
          - name: clusterRbacConfig
            value: "OFF"
          repoRef:
            name: manifests
            path: istio/istio
        name: istio
      - kustomizeConfig:
          parameters:
          - name: namespace
            value: istio-system
          repoRef:
            name: manifests
            path: istio/add-anonymous-user-filter
        name: add-anonymous-user-filter
      - kustomizeConfig:
          repoRef:
            name: manifests
            path: application/application-crds
        name: application-crds
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: application/application
        name: application
      - kustomizeConfig:
          parameters:
          - name: namespace
            value: cert-manager
          repoRef:
            name: manifests
            path: cert-manager/cert-manager-crds
        name: cert-manager-crds
      - kustomizeConfig:
          parameters:
          - name: namespace
            value: kube-system
          repoRef:
            name: manifests
            path: cert-manager/cert-manager-kube-system-resources
        name: cert-manager-kube-system-resources
      - kustomizeConfig:
          overlays:
          - self-signed
          - application
          parameters:
          - name: namespace
            value: cert-manager
          repoRef:
            name: manifests
            path: cert-manager/cert-manager
        name: cert-manager
      - kustomizeConfig:
          repoRef:
            name: manifests
            path: metacontroller
        name: metacontroller
      - kustomizeConfig:
          overlays:
          - istio
          - application
          repoRef:
            name: manifests
            path: argo
        name: argo
      - kustomizeConfig:
          repoRef:
            name: manifests
            path: kubeflow-roles
        name: kubeflow-roles
      - kustomizeConfig:
          overlays:
          - istio
          - application
          repoRef:
            name: manifests
            path: common/centraldashboard
        name: centraldashboard
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: admission-webhook/bootstrap
        name: bootstrap
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: admission-webhook/webhook
        name: webhook
      - kustomizeConfig:
          overlays:
          - istio
          - application
          parameters:
          - name: userid-header
            value: kubeflow-userid
          repoRef:
            name: manifests
            path: jupyter/jupyter-web-app
        name: jupyter-web-app
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: spark/spark-operator
        name: spark-operator
      - kustomizeConfig:
          overlays:
          - istio
          - application
          - db
          repoRef:
            name: manifests
            path: metadata
        name: metadata
      - kustomizeConfig:
          overlays:
          - istio
          - application
          repoRef:
            name: manifests
            path: jupyter/notebook-controller
        name: notebook-controller
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pytorch-job/pytorch-job-crds
        name: pytorch-job-crds
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pytorch-job/pytorch-operator
        name: pytorch-operator
      - kustomizeConfig:
          overlays:
          - application
          parameters:
          - name: usageId
            value: <randomly-generated-id>
          - name: reportUsage
            value: "true"
          repoRef:
            name: manifests
            path: common/spartakus
        name: spartakus
      - kustomizeConfig:
          overlays:
          - istio
          repoRef:
            name: manifests
            path: tensorboard
        name: tensorboard
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: tf-training/tf-job-crds
        name: tf-job-crds
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: tf-training/tf-job-operator
        name: tf-job-operator
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: katib/katib-crds
        name: katib-crds
      - kustomizeConfig:
          overlays:
          - application
          - istio
          repoRef:
            name: manifests
            path: katib/katib-controller
        name: katib-controller
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pipeline/api-service
        name: api-service
      - kustomizeConfig:
          overlays:
          - application
          parameters:
          - name: minioPvcName
            value: minio-pv-claim
          repoRef:
            name: manifests
            path: pipeline/minio
        name: minio
      - kustomizeConfig:
          overlays:
          - application
          parameters:
          - name: mysqlPvcName
            value: mysql-pv-claim
          repoRef:
            name: manifests
            path: pipeline/mysql
        name: mysql
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pipeline/persistent-agent
        name: persistent-agent
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pipeline/pipelines-runner
        name: pipelines-runner
      - kustomizeConfig:
          overlays:
          - istio
          - application
          repoRef:
            name: manifests
            path: pipeline/pipelines-ui
        name: pipelines-ui
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pipeline/pipelines-viewer
        name: pipelines-viewer
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pipeline/scheduledworkflow
        name: scheduledworkflow
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: pipeline/pipeline-visualization-service
        name: pipeline-visualization-service
      - kustomizeConfig:
          overlays:
          - application
          - istio
          parameters:
          - name: admin
            value: johnDoe@acme.com
          repoRef:
            name: manifests
            path: profiles
        name: profiles
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: seldon/seldon-core-operator
        name: seldon-core-operator
      - kustomizeConfig:
          overlays:
          - application
          parameters:
          - name: namespace
            value: knative-serving
          repoRef:
            name: manifests
            path: knative/knative-serving-crds
        name: knative-crds
      - kustomizeConfig:
          overlays:
          - application
          parameters:
          - name: namespace
            value: knative-serving
          repoRef:
            name: manifests
            path: knative/knative-serving-install
        name: knative-install
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: kfserving/kfserving-crds
        name: kfserving-crds
      - kustomizeConfig:
          overlays:
          - application
          repoRef:
            name: manifests
            path: kfserving/kfserving-install
        name: kfserving-install
      repos:
      - name: manifests
        uri: https://github.com/kubeflow/manifests/archive/master.tar.gz
      version: master
    status:
      reposCache:
      - localPath: '"../.cache/manifests/manifests-master"'
        name: manifests
    [root@master 2020-0219]#

Go to your kubeflowapp directory and run:

kfctl apply -V -f kfctl_k8s_istio.yaml

The installation downloads configuration files from GitHub, which may fail. If it does, run the command again.
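Retrying by hand can be wrapped in a small helper. This is a minimal sketch; retry and its arguments (maximum attempts, delay in seconds) are hypothetical and not part of kfctl.

```shell
# Run a command up to N times, pausing between attempts.
# retry and its arguments are hypothetical helpers, not part of kfctl.
retry() {
  attempts="$1"; delay="$2"; shift 2
  n=1
  while ! "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "giving up after $n attempts" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Intended use on the cluster (not run here):
#   retry 5 30 kfctl apply -V -f kfctl_k8s_istio.yaml
```

A fixed delay keeps the sketch short; a longer pause between attempts may be kinder to a slow or rate-limited connection.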

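A kustomize folder is generated alongside the kubeflowapp directory. To avoid image pull failures after a restart, change every image pull policy in it to IfNotPresent,

then run kfctl apply -V -f kfctl_k8s_istio.yaml again.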
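Editing every manifest by hand is tedious, so the pull-policy change can be scripted. The sketch below assumes the generated manifests are YAML files that set imagePullPolicy on its own line; the function name set_pull_policy is hypothetical.

```shell
# Rewrite imagePullPolicy values to IfNotPresent in every YAML file under a
# directory. The function name is hypothetical; only lines that already set
# imagePullPolicy are changed.
set_pull_policy() {
  dir="$1"
  find "$dir" -type f \( -name '*.yaml' -o -name '*.yml' \) | while IFS= read -r f; do
    sed 's/\(imagePullPolicy:[[:space:]]*\).*/\1IfNotPresent/' "$f" > "$f.tmp" &&
      mv "$f.tmp" "$f"
  done
}

# Intended use next to the kubeflowapp directory (not run here):
#   set_pull_policy kustomize
```

Containers that do not set imagePullPolicy at all are left untouched by this sketch, so they would still need to be checked separately.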

Check the running state:

kubectl get all -n kubeflow

Change the ingress gateway service type to LoadBalancer:

kubectl -n istio-system edit svc istio-ingressgateway

Set the type field at the end of the spec to LoadBalancer:

    selector:
      app: istio-ingressgateway
      istio: ingressgateway
      release: istio
    sessionAffinity: None
    type: LoadBalancer

Save, then look at the service again:

    [root@master 2020-0219]# kubectl -n istio-system get svc istio-ingressgateway
    NAME                   TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    istio-ingressgateway   LoadBalancer   10.98.19.247   10.18.5.30    15020:32230/TCP,80:31380/TCP,443:31390/TCP,31400:31400/TCP,15029:31908/TCP,15030:31864/TCP,15031:31315/TCP,15032:30372/TCP,15443:32631/TCP   42h
    [root@master 2020-0219]#

EXTERNAL-IP is the externally reachable address; open http://10.18.5.30 to reach the Kubeflow home page.

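About image pulls: gcr images cannot be pulled from inside mainland China. They can be pulled as follows:

curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s -- <image-name>

If that also fails, the images can be built manually with Alibaba Cloud's image build service, using an overseas build server.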
