目录

一、安装Sentry

1.MariaDB中创建sentry数据库

2.CDH中添加sentry 服务

3.hive配置 启动Sentry

4.Impala配置 启动Sentry

5.Hue配置 启动Sentry

6.Hdfs配置 启动Sentry

7.重启服务,使配置生效

二、Sentry权限测试

1.创建hive超级用户

1.1 创建kerberos hive用户

1.2创建Admin角色

1.3为admin角色赋予管理员权限

1.4 将admin角色授权给hive用户组

2. 创建test表

3. 创建其他角色,授予权限

3.1 创建用户,默认用户组与用户名相同

3.2 授权read角色对test表的select权限,write角色对test表的insert权限

3.3 将read角色授权给 jast_r 用户组,write角色授权给 jast_w 用户组

3.4 使用kadmin创建 jast_r 和 jast_w 用户

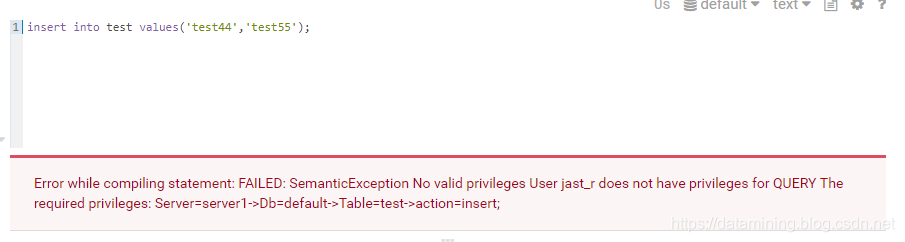

3.5 Hive 验证

3.6 HDFS 权限验证

3.7 HUE 验证

3.8 Impala 验证

4. Sentry 列权限设置

4.1 创建test表列读角色并授权给用户组

4.2 列读权限测试

5. Sentry 权限命令详解(更多介绍参考:https://datamining.blog.csdn.net/article/details/99212770)

Sentry 介绍 : https://datamining.blog.csdn.net/article/details/98969244

I. Install Sentry

1. Create the sentry database in MariaDB

MariaDB [(none)]> create database sentry default character set utf8;

Query OK, 1 row affected (0.01 sec)

MariaDB [(none)]> CREATE USER 'sentry'@'%' IDENTIFIED BY 'password';

Query OK, 0 rows affected (0.03 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON sentry.* TO 'sentry'@'%';

Query OK, 0 rows affected (0.01 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

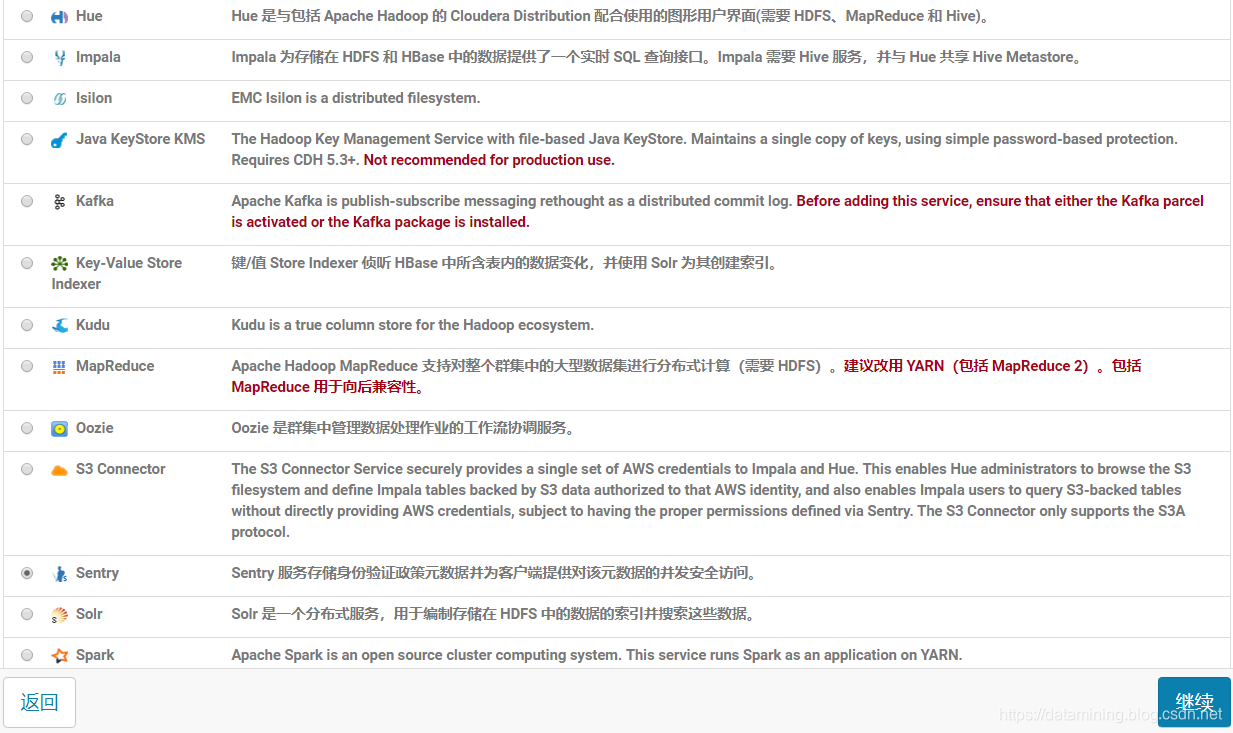

2.CDH中添加sentry 服务

填写刚刚创建数据库信息

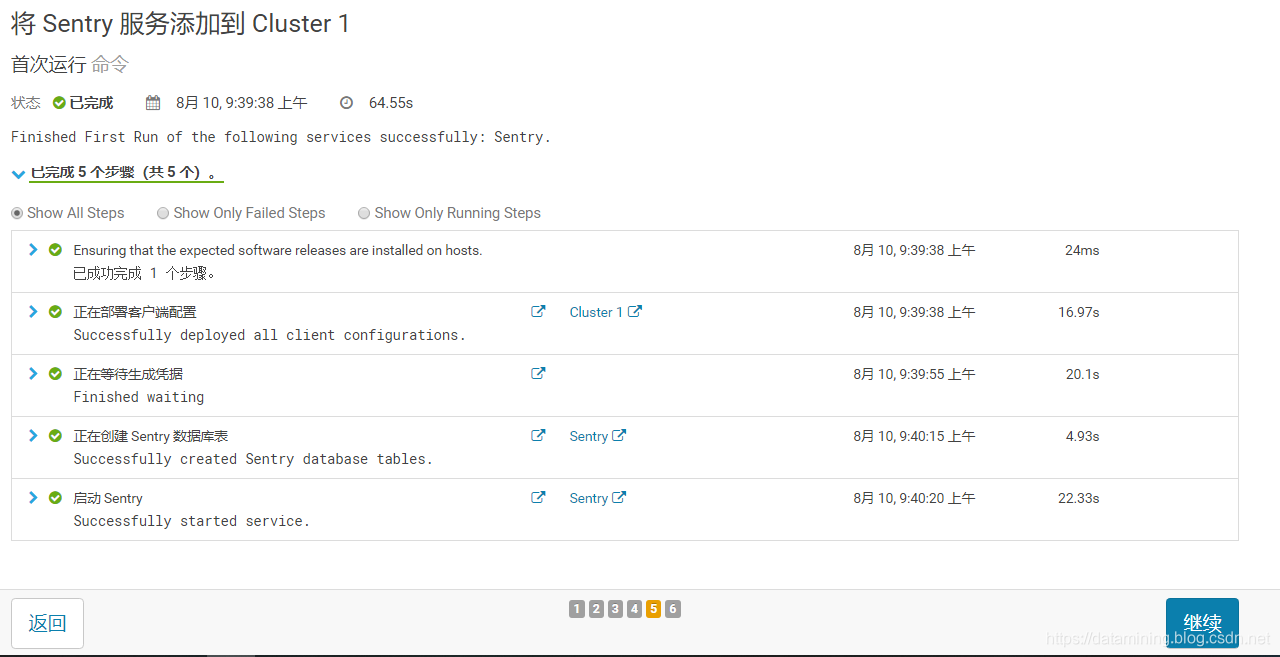

继续安装完成

继续安装完成

3.hive配置 启动Sentry

启动hive Sentry

取消模拟用户身份提交

启用数据库中的存储通知

保存

4.Impala配置 启动Sentry

5.Hue配置 启动Sentry

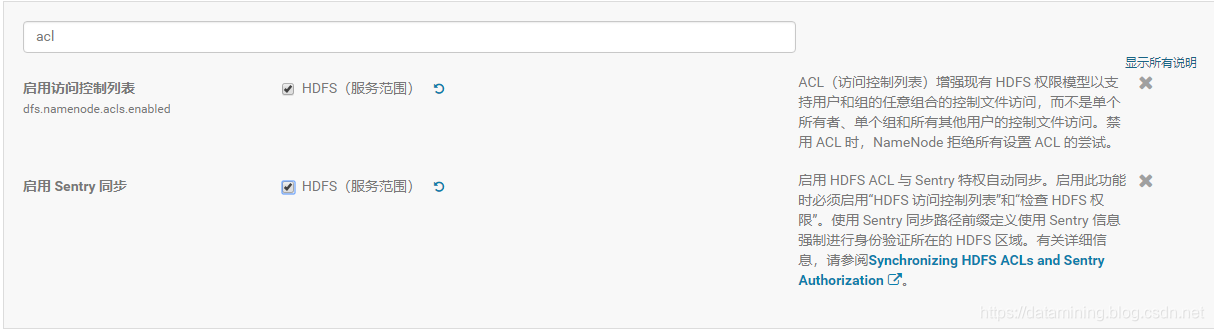

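6. Configure HDFS to enable Sentry

Enable HDFS ACLs and synchronize them with Sentry permissions.

7. Restart services to apply the configuration

The services are now installed.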

II. Test Sentry permissions

1. Create the Hive superuser

1.1 Create the Kerberos hive user

[root@xxx ~]# kadmin.local

Authenticating as principal hbase/admin@JAST.COM with password.

kadmin.local: addprinc hive@JAST.COM

WARNING: no policy specified for hive@JAST.COM; defaulting to no policy

Enter password for principal "hive@JAST.COM":

Re-enter password for principal "hive@JAST.COM":

Principal "hive@JAST.COM" created.

kadmin.local: exit

[root@xxx ~]# kinit hive

Password for hive@JAST.COM:

[root@xxx ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: hive@JAST.COM

Valid starting Expires Service principal

2019-08-09T10:07:24 2019-08-10T10:07:24 krbtgt/JAST.COM@JAST.COM

renew until 2019-08-17T10:07:24

Connect to HiveServer2 through beeline

[root@xxx001 ~]# beeline

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Beeline version 1.1.0-cdh5.13.0 by Apache Hive

beeline> !connect jdbc:hive2://192.168.1.101:10000/;principal=hive@JAST.COM

scan complete in 2ms

Connecting to jdbc:hive2://192.168.1.101:10000/;principal=hive@JAST.COM

Kerberos principal should have 3 parts: hive@JAST.COM

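Here the connection fails with the message: Kerberos principal should have 3 parts: hive@JAST.COM. The principal parameter in the JDBC URL must be a three-part Kerberos principal of the form service/host@REALM, for example hive/hostname1@JAST.COM, where the realm is the one mapped in the domain_realm section of /etc/krb5.conf. This parameter names the HiveServer2 service principal, not the user who ran kinit.

Reference: https://community.hortonworks.com/questions/22897/kerberos-principal-should-have-3-parts.html

Note that the host part of the principal must match the HiveServer2 host, for example:

!connect jdbc:hive2://hostname:10000/;principal=hive/hostname@JAST.COM

If they differ, the connection fails:

Error: Could not open client transport with JDBC Uri: jdbc:hive2://hostname:10000/;principal=hive/hostname1@JAST.COM: GSS initiate failed (state=08S01,code=0)

Redo the first step and create the principal with a three-part name: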

[root@xxx ~]# kadmin.local

Authenticating as principal hbase/admin@JAST.COM with password.

kadmin.local: addprinc hive/hostname@JAST.COM

WARNING: no policy specified for hive/hostname@JAST.COM; defaulting to no policy

Enter password for principal "hive/hostname@JAST.COM":

Re-enter password for principal "hive/hostname@JAST.COM":

Principal "hive/hostname@JAST.COM" created.

kadmin.local: exit

[root@xxx ~]# kinit hive/hostname

Password for hive/hostname@JAST.COM:

[root@xxx ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: hive/hostname@JAST.COM

Valid starting Expires Service principal

2019-08-09T10:07:24 2019-08-10T10:07:24 krbtgt/JAST.COM@JAST.COM

renew until 2019-08-17T10:07:24

Connecting through beeline again now succeeds:

beeline> !connect jdbc:hive2://hostname:10000/;principal=hive/hostname@JAST.COM

Connecting to jdbc:hive2://hostname:10000/;principal=hive/hostname@JAST.COM

Connected to: Apache Hive (version 1.1.0-cdh5.13.0)

Driver: Hive JDBC (version 1.1.0-cdh5.13.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hostname:10000/>
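The connection string that worked above can be assembled from the HiveServer2 host and the realm. The following is a minimal sketch, not part of the original walkthrough: the function name hs2_jdbc_url is hypothetical, and the default port 10000 and the hive service name are simply the values used in this post.

```shell
#!/bin/sh
# Build a HiveServer2 JDBC URL for a Kerberized cluster.
# Assumption: the service principal follows the hive/<host>@<REALM> pattern
# used in this post, and the host in the URL equals the host in the principal.
hs2_jdbc_url() {
    hs2_host="$1"          # HiveServer2 host name
    realm="$2"             # Kerberos realm, e.g. JAST.COM
    port="${3:-10000}"     # optional port, defaults to 10000
    printf 'jdbc:hive2://%s:%s/;principal=hive/%s@%s\n' \
        "$hs2_host" "$port" "$hs2_host" "$realm"
}

# Print the URL used in the successful connection above.
hs2_jdbc_url hostname JAST.COM
```

After kinit, the result can be passed to beeline with its -u option, for example beeline -u "$(hs2_jdbc_url hostname JAST.COM)". Deriving both host occurrences from one argument avoids the host mismatch that caused the GSS initiate failed error.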

1.2 Create the admin role

0: jdbc:hive2://xxx:10000/> create role admin;

INFO : Compiling command(queryId=hive_20190811133535_e7b93b4e-ee8e-4aee-b0ce-49b62bda60c8): create role admin

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811133535_e7b93b4e-ee8e-4aee-b0ce-49b62bda60c8); Time taken: 0.532 seconds

INFO : Executing command(queryId=hive_20190811133535_e7b93b4e-ee8e-4aee-b0ce-49b62bda60c8): create role admin

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811133535_e7b93b4e-ee8e-4aee-b0ce-49b62bda60c8); Time taken: 0.204 seconds

INFO : OK

No rows affected (2.118 seconds)

1.3 Grant administrator privileges to the admin role

0: jdbc:hive2://XXX:10000/> GRANT ALL ON SERVER server1 TO ROLE admin;

INFO : Compiling command(queryId=hive_20190811134141_549bde26-29b1-48fc-a5b1-62ffe2ced6e9): GRANT ALL ON SERVER server1 TO ROLE admin

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811134141_549bde26-29b1-48fc-a5b1-62ffe2ced6e9); Time taken: 0.12 seconds

INFO : Executing command(queryId=hive_20190811134141_549bde26-29b1-48fc-a5b1-62ffe2ced6e9): GRANT ALL ON SERVER server1 TO ROLE admin

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811134141_549bde26-29b1-48fc-a5b1-62ffe2ced6e9); Time taken: 0.104 seconds

INFO : OK

No rows affected (0.241 seconds)

1.4 Grant the admin role to the hive group

0: jdbc:hive2://xxx:10000/> GRANT ROLE admin TO GROUP hive;

INFO : Compiling command(queryId=hive_20190811134242_2e020ff8-08ee-4b68-a19c-ff83b68a24ae): GRANT ROLE admin TO GROUP hive

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811134242_2e020ff8-08ee-4b68-a19c-ff83b68a24ae); Time taken: 0.088 seconds

INFO : Executing command(queryId=hive_20190811134242_2e020ff8-08ee-4b68-a19c-ff83b68a24ae): GRANT ROLE admin TO GROUP hive

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811134242_2e020ff8-08ee-4b68-a19c-ff83b68a24ae); Time taken: 0.061 seconds

INFO : OK

No rows affected (0.163 seconds)

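The steps above created one role, admin:

admin: has administrator privileges, can read and write all databases, and is granted to the hive group (which corresponds to the operating-system group)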
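Steps 1.2 to 1.4 can also be collected into one SQL file and replayed. The sketch below only generates the statements; the function name sentry_admin_sql and the file name bootstrap.sql are hypothetical, and the two SHOW statements at the end are added here as a way to check the result, they are not part of the original session.

```shell
#!/bin/sh
# Emit the Sentry bootstrap statements from steps 1.2 to 1.4.
# Arguments: role name, Sentry server name, OS group name.
sentry_admin_sql() {
    role="$1"
    server="$2"
    group="$3"
    printf 'CREATE ROLE %s;\n' "$role"
    printf 'GRANT ALL ON SERVER %s TO ROLE %s;\n' "$server" "$role"
    printf 'GRANT ROLE %s TO GROUP %s;\n' "$role" "$group"
    # Verification statements (an addition, not in the original session).
    printf 'SHOW ROLES;\n'
    printf 'SHOW GRANT ROLE %s;\n' "$role"
}

# Print the statements for the values used in this post.
sentry_admin_sql admin server1 hive
```

To run them, the output could be redirected to a file and passed to beeline, for example sentry_admin_sql admin server1 hive > bootstrap.sql followed by beeline -u "<jdbc url>" -f bootstrap.sql, using the same JDBC URL as in the interactive session.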

2. Create the test table

Log in to Kerberos as the hive user, connect to HiveServer2 through beeline, create the test table, and insert test data.

0: jdbc:hive2://xxx:10000/> create table test (s1 string, s2 string) row format delimited fields terminated by ',';

INFO : Compiling command(queryId=hive_20190811134747_3bf78819-78e0-4f3f-921d-57aae50184ab): create table test (s1 string, s2 string) row format delimited fields terminated by ','

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811134747_3bf78819-78e0-4f3f-921d-57aae50184ab); Time taken: 0.352 seconds

INFO : Executing command(queryId=hive_20190811134747_3bf78819-78e0-4f3f-921d-57aae50184ab): create table test (s1 string, s2 string) row format delimited fields terminated by ','

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811134747_3bf78819-78e0-4f3f-921d-57aae50184ab); Time taken: 0.465 seconds

INFO : OK

No rows affected (0.856 seconds)

Insert data

0: jdbc:hive2://hostname:10000/> insert into test values('a','b'),('1','2');

INFO : Compiling command(queryId=hive_20190811134848_7d61ee56-7e53-4889-bd3b-7833bbe45793): insert into test values('a','b'),('1','2')

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:values__tmp__table__1.tmp_values_col1, type:string, comment:null), FieldSchema(name:values__tmp__table__1.tmp_values_col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811134848_7d61ee56-7e53-4889-bd3b-7833bbe45793); Time taken: 0.856 seconds

INFO : Executing command(queryId=hive_20190811134848_7d61ee56-7e53-4889-bd3b-7833bbe45793): insert into test values('a','b'),('1','2')

INFO : Query ID = hive_20190811134848_7d61ee56-7e53-4889-bd3b-7833bbe45793

INFO : Total jobs = 3

INFO : Launching Job 1 out of 3

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks is set to 0 since there's no reduce operator

INFO : number of splits:1

INFO : Submitting tokens for job: job_1565402085038_0001

INFO : Kind: HDFS_DELEGATION_TOKEN, Service: 10.248.161.16:8020, Ident: (token for hive: HDFS_DELEGATION_TOKEN owner=hive/hostname.zh@IZHONGHONG.COM, renewer=yarn, realUser=, issueDate=1565502522452, maxDate=1566107322452, sequenceNumber=62, masterKeyId=8)

INFO : The url to track the job: http://hostname.zh:8088/proxy/application_1565402085038_0001/

INFO : Starting Job = job_1565402085038_0001, Tracking URL = http://hostname.zh:8088/proxy/application_1565402085038_0001/

INFO : Kill Command = /opt/cloudera/parcels/CDH-5.13.0-1.cdh5.13.0.p0.29/lib/hadoop/bin/hadoop job -kill job_1565402085038_0001

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

INFO : 2019-08-11 13:48:56,862 Stage-1 map = 0%, reduce = 0%

INFO : 2019-08-11 13:49:02,147 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

INFO : MapReduce Total cumulative CPU time: 2 seconds 80 msec

INFO : Ended Job = job_1565402085038_0001

INFO : Starting task [Stage-7:CONDITIONAL] in serial mode

INFO : Stage-4 is selected by condition resolver.

INFO : Stage-3 is filtered out by condition resolver.

INFO : Stage-5 is filtered out by condition resolver.

INFO : Starting task [Stage-4:MOVE] in serial mode

INFO : Moving data to: hdfs://hostname.zh:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_13-48-41_149_6972252053826421751-1/-ext-10000 from hdfs://hostname.zh:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_13-48-41_149_6972252053826421751-1/-ext-10002

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table default.test from hdfs://hostname.zh:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_13-48-41_149_6972252053826421751-1/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : Table default.test stats: [numFiles=1, numRows=2, totalSize=8, rawDataSize=6]

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Cumulative CPU: 2.08 sec HDFS Read: 3564 HDFS Write: 76 SUCCESS

INFO : Total MapReduce CPU Time Spent: 2 seconds 80 msec

INFO : Completed executing command(queryId=hive_20190811134848_7d61ee56-7e53-4889-bd3b-7833bbe45793); Time taken: 21.547 seconds

INFO : OK

No rows affected (22.428 seconds)

Query the data

0: jdbc:hive2://hostname:10000/> select * from test;

INFO : Compiling command(queryId=hive_20190811134949_edeab35a-b1ae-49b8-b4b7-8281ae736fa8): select * from test

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:test.s1, type:string, comment:null), FieldSchema(name:test.s2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811134949_edeab35a-b1ae-49b8-b4b7-8281ae736fa8); Time taken: 0.201 seconds

INFO : Executing command(queryId=hive_20190811134949_edeab35a-b1ae-49b8-b4b7-8281ae736fa8): select * from test

INFO : Completed executing command(queryId=hive_20190811134949_edeab35a-b1ae-49b8-b4b7-8281ae736fa8); Time taken: 0.0 seconds

INFO : OK

+----------+----------+--+

| test.s1 | test.s2 |

+----------+----------+--+

| a | b |

| 1 | 2 |

+----------+----------+--+

2 rows selected (0.345 seconds)

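3. Create other roles and grant privileges

Create two roles, read and write: one with read privileges and one with write privileges, to be granted to the jast_r and jast_w groups respectively.

Note: the jast_r and jast_w users must exist on every node in the cluster. Each user's default group has the same name as the user, and privileges are granted to groups, not to individual users.

3.1 Create users (the default group has the same name as the user)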

[root@hostname ~]# useradd jast_r

[root@hostname ~]# id jast_r

uid=1002(jast_r) gid=1002(jast_r) groups=1002(jast_r)

[root@hostname ~]# useradd jast_w

[root@hostname ~]# id jast_w

uid=1003(jast_w) gid=1003(jast_w) groups=1003(jast_w)
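Because roles are granted to groups, it can be worth confirming on each node that a user's primary group really has the same name as the user. The following is a minimal sketch using the standard id command; the function names primary_group and check_group_matches_user are hypothetical.

```shell
#!/bin/sh
# Print the primary group name of a local user.
primary_group() {
    id -gn "$1"
}

# Report whether a user's primary group has the same name as the user.
# Returns 0 on match, 1 on mismatch, 2 if the user does not exist.
check_group_matches_user() {
    user="$1"
    group="$(primary_group "$user")" || return 2
    if [ "$group" = "$user" ]; then
        echo "OK: $user has primary group $group"
    else
        echo "MISMATCH: $user has primary group $group"
        return 1
    fi
}

# Check every user passed on the command line, e.g. jast_r jast_w.
# Note: this only shows the local OS view; on the cluster,
# "hdfs groups jast_r" shows the groups as Hadoop resolves them.
for u in "$@"; do
    check_group_matches_user "$u" || true
done
```

Saved as a script (the name is up to you), it would be run on each node with the user names as arguments, for example sh check_groups.sh jast_r jast_w.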

3.2 Grant the read role SELECT on the test table and the write role INSERT on the test table

0: jdbc:hive2://hostname:10000/> create role read;

INFO : Compiling command(queryId=hive_20190811140000_d4a8362b-c976-4a83-a189-c806ba909f2b): create role read

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811140000_d4a8362b-c976-4a83-a189-c806ba909f2b); Time taken: 0.082 seconds

INFO : Executing command(queryId=hive_20190811140000_d4a8362b-c976-4a83-a189-c806ba909f2b): create role read

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811140000_d4a8362b-c976-4a83-a189-c806ba909f2b); Time taken: 0.024 seconds

INFO : OK

No rows affected (0.12 seconds)

0: jdbc:hive2://hostname:10000/> GRANT SELECT ON TABLE test TO ROLE read;

INFO : Compiling command(queryId=hive_20190811140000_df975a0d-1eef-4e80-adea-c0a5a2a83370): GRANT SELECT ON TABLE test TO ROLE read

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811140000_df975a0d-1eef-4e80-adea-c0a5a2a83370); Time taken: 0.089 seconds

INFO : Executing command(queryId=hive_20190811140000_df975a0d-1eef-4e80-adea-c0a5a2a83370): GRANT SELECT ON TABLE test TO ROLE read

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811140000_df975a0d-1eef-4e80-adea-c0a5a2a83370); Time taken: 0.025 seconds

INFO : OK

No rows affected (0.125 seconds)

0: jdbc:hive2://hostname:10000/> CREATE ROLE write;

INFO : Compiling command(queryId=hive_20190811140101_5688fe84-9e95-49e3-86df-5bf599ab31c7): CREATE ROLE write

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811140101_5688fe84-9e95-49e3-86df-5bf599ab31c7); Time taken: 0.091 seconds

INFO : Executing command(queryId=hive_20190811140101_5688fe84-9e95-49e3-86df-5bf599ab31c7): CREATE ROLE write

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811140101_5688fe84-9e95-49e3-86df-5bf599ab31c7); Time taken: 0.012 seconds

INFO : OK

No rows affected (0.113 seconds)

0: jdbc:hive2://hostname:10000/> GRANT INSERT ON TABLE test TO ROLE write;

INFO : Compiling command(queryId=hive_20190811140202_4ac6ca45-9829-47ae-893e-376778c17030): GRANT INSERT ON TABLE test TO ROLE write

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811140202_4ac6ca45-9829-47ae-893e-376778c17030); Time taken: 0.082 seconds

INFO : Executing command(queryId=hive_20190811140202_4ac6ca45-9829-47ae-893e-376778c17030): GRANT INSERT ON TABLE test TO ROLE write

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811140202_4ac6ca45-9829-47ae-893e-376778c17030); Time taken: 0.014 seconds

INFO : OK

No rows affected (0.107 seconds)

3.3 Grant the read role to the jast_r group and the write role to the jast_w group

0: jdbc:hive2://hostname:10000/> GRANT ROLE read TO GROUP jast_r;

INFO : Compiling command(queryId=hive_20190811140303_914d96ac-577e-49fd-99a4-69e6ef2c977c): GRANT ROLE read TO GROUP jast_r

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811140303_914d96ac-577e-49fd-99a4-69e6ef2c977c); Time taken: 0.082 seconds

INFO : Executing command(queryId=hive_20190811140303_914d96ac-577e-49fd-99a4-69e6ef2c977c): GRANT ROLE read TO GROUP jast_r

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811140303_914d96ac-577e-49fd-99a4-69e6ef2c977c); Time taken: 0.02 seconds

INFO : OK

No rows affected (0.112 seconds)

0: jdbc:hive2://hostname:10000/> GRANT ROLE write TO GROUP jast_w;

INFO : Compiling command(queryId=hive_20190811140303_0f5e0d44-e853-4ffe-a184-f3c5beebf46f): GRANT ROLE write TO GROUP jast_w

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811140303_0f5e0d44-e853-4ffe-a184-f3c5beebf46f); Time taken: 0.081 seconds

INFO : Executing command(queryId=hive_20190811140303_0f5e0d44-e853-4ffe-a184-f3c5beebf46f): GRANT ROLE write TO GROUP jast_w

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811140303_0f5e0d44-e853-4ffe-a184-f3c5beebf46f); Time taken: 0.011 seconds

INFO : OK

No rows affected (0.1 seconds)

3.4 Create the jast_r and jast_w principals with kadmin

[root@xxx ~]# kadmin.local

Authenticating as principal hive/admin@JAST.COM with password.

kadmin.local: addprinc jast_r@JAST.COM

WARNING: no policy specified for jast_r@JAST.COM; defaulting to no policy

Enter password for principal "jast_r@JAST.COM":

Re-enter password for principal "jast_r@JAST.COM":

Principal "jast_r@JAST.COM" created.

kadmin.local: addprinc jast_w@JAST.COM

WARNING: no policy specified for jast_w@JAST.COM; defaulting to no policy

Enter password for principal "jast_w@JAST.COM":

Re-enter password for principal "jast_w@JAST.COM":

Principal "jast_w@JAST.COM" created.

kadmin.local: exit

3.5 Verify in Hive

Log in to Kerberos as the jast_r user

[root@xxx ~]# kinit jast_r

Password for jast_r@JAST.COM:

[root@xxx ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: jast_r@JAST.COM

Valid starting Expires Service principal

2019-08-11T14:10:15 2019-08-12T14:10:15 krbtgt/JAST.COM@JAST.COM

renew until 2019-08-18T14:10:15

Connect to HiveServer2 through beeline and test the jast_r privileges

[root@hostname ~]# beeline

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Beeline version 1.1.0-cdh5.13.0 by Apache Hive

beeline> !connect jdbc:hive2://hostname:10000/;principal=hive/hostname@IZHONGHONG.COM

scan complete in 2ms

Connecting to jdbc:hive2://hostname:10000/;principal=hive/hostname@IZHONGHONG.COM

Connected to: Apache Hive (version 1.1.0-cdh5.13.0)

Driver: Hive JDBC (version 1.1.0-cdh5.13.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hostname:10000/> show tables;

INFO : Compiling command(queryId=hive_20190811141212_d7b14463-37df-43c9-baa2-f8e1ac1d0bd3): show tables

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811141212_d7b14463-37df-43c9-baa2-f8e1ac1d0bd3); Time taken: 0.095 seconds

INFO : Executing command(queryId=hive_20190811141212_d7b14463-37df-43c9-baa2-f8e1ac1d0bd3): show tables

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811141212_d7b14463-37df-43c9-baa2-f8e1ac1d0bd3); Time taken: 0.148 seconds

INFO : OK

+-----------+--+

| tab_name |

+-----------+--+

| test |

+-----------+--+

1 row selected (0.355 seconds)

0: jdbc:hive2://hostname:10000/> select * from test;

INFO : Compiling command(queryId=hive_20190811141212_60a84683-d394-4d2a-94c8-b861355afe29): select * from test

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:test.s1, type:string, comment:null), FieldSchema(name:test.s2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811141212_60a84683-d394-4d2a-94c8-b861355afe29); Time taken: 0.156 seconds

INFO : Executing command(queryId=hive_20190811141212_60a84683-d394-4d2a-94c8-b861355afe29): select * from test

INFO : Completed executing command(queryId=hive_20190811141212_60a84683-d394-4d2a-94c8-b861355afe29); Time taken: 0.001 seconds

INFO : OK

+----------+----------+--+

| test.s1 | test.s2 |

+----------+----------+--+

| a | b |

| 1 | 2 |

+----------+----------+--+

2 rows selected (0.194 seconds)

0: jdbc:hive2://hostname:10000/> insert into test values("2", "222");

Error: Error while compiling statement: FAILED: SemanticException No valid privileges

User jast_r does not have privileges for QUERY

The required privileges: Server=server1->Db=default->Table=test->action=insert; (state=42000,code=40000)

Running the same queries directly in the hive command-line client gives the same result

hive> select * from test;

OK

a b

1 2

Time taken: 2.2 seconds, Fetched: 2 row(s)

hive> insert into test values("2", "222");

FAILED: RuntimeException Cannot create staging directory 'hdfs://xxx:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_14-14-32_295_6847917828039371771-1': Permission denied: user=jast_r, access=WRITE, inode="/user/hive/warehouse/test":hive:hive:drwxrwx--x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkAccessAcl(DefaultAuthorizationProvider.java:363)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:256)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:162)

at org.apache.sentry.hdfs.SentryAuthorizationProvider.checkPermission(SentryAuthorizationProvider.java:178)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3770)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3753)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:3735)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6723)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4493)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4463)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4436)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:876)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:326)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:640)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2226)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2222)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2220)

Log in to Kerberos as the jast_w user

[root@hostname ~]# kinit jast_w

Password for jast_w@JAST.COM:

[root@hostname ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: jast_w@JAST.COM

Valid starting Expires Service principal

2019-08-11T14:16:56 2019-08-12T14:16:56 krbtgt/JAST.COM@JAST.COM

renew until 2019-08-18T14:16:56

Verify the jast_w privileges

[root@hostname ~]# beeline

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Beeline version 1.1.0-cdh5.13.0 by Apache Hive

beeline> !connect jdbc:hive2://hostname:10000/;principal=hive/hostname@IZHONGHONG.COM

scan complete in 2ms

Connecting to jdbc:hive2://hostname:10000/;principal=hive/hostname@IZHONGHONG.COM

Connected to: Apache Hive (version 1.1.0-cdh5.13.0)

Driver: Hive JDBC (version 1.1.0-cdh5.13.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hostname:10000/> show tables;

INFO : Compiling command(queryId=hive_20190811141818_c8c1f9dc-cb3c-48e4-a059-e8b609daf967): show tables

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811141818_c8c1f9dc-cb3c-48e4-a059-e8b609daf967); Time taken: 0.081 seconds

INFO : Executing command(queryId=hive_20190811141818_c8c1f9dc-cb3c-48e4-a059-e8b609daf967): show tables

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811141818_c8c1f9dc-cb3c-48e4-a059-e8b609daf967); Time taken: 0.097 seconds

INFO : OK

+-----------+--+

| tab_name |

+-----------+--+

| test |

+-----------+--+

1 row selected (0.272 seconds)

0: jdbc:hive2://hostname:10000/> select * from test;

Error: Error while compiling statement: FAILED: SemanticException No valid privileges

User jast_w does not have privileges for QUERY

The required privileges: Server=server1->Db=default->Table=test->Column=s1->action=select; (state=42000,code=40000)

0: jdbc:hive2://hostname:10000/> insert into test values("2", "333");

INFO : Compiling command(queryId=hive_20190811141818_0f210123-2878-44d2-82ee-19006bc3db9a): insert into test values("2", "333")

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:values__tmp__table__1.tmp_values_col1, type:string, comment:null), FieldSchema(name:values__tmp__table__1.tmp_values_col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811141818_0f210123-2878-44d2-82ee-19006bc3db9a); Time taken: 0.169 seconds

INFO : Executing command(queryId=hive_20190811141818_0f210123-2878-44d2-82ee-19006bc3db9a): insert into test values("2", "333")

INFO : Query ID = hive_20190811141818_0f210123-2878-44d2-82ee-19006bc3db9a

INFO : Total jobs = 3

INFO : Launching Job 1 out of 3

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks is set to 0 since there's no reduce operator

INFO : number of splits:1

INFO : Submitting tokens for job: job_1565402085038_0004

INFO : Kind: HDFS_DELEGATION_TOKEN, Service: 10.248.161.16:8020, Ident: (token for hive: HDFS_DELEGATION_TOKEN owner=hive/hostname.zh@IZHONGHONG.COM, renewer=yarn, realUser=, issueDate=1565504336371, maxDate=1566109136371, sequenceNumber=71, masterKeyId=8)

INFO : The url to track the job: http://hostname.zh:8088/proxy/application_1565402085038_0004/

INFO : Starting Job = job_1565402085038_0004, Tracking URL = http://hostname.zh:8088/proxy/application_1565402085038_0004/

INFO : Kill Command = /opt/cloudera/parcels/CDH-5.13.0-1.cdh5.13.0.p0.29/lib/hadoop/bin/hadoop job -kill job_1565402085038_0004

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

INFO : 2019-08-11 14:19:03,340 Stage-1 map = 0%, reduce = 0%

INFO : 2019-08-11 14:19:08,570 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.13 sec

INFO : MapReduce Total cumulative CPU time: 2 seconds 130 msec

INFO : Ended Job = job_1565402085038_0004

INFO : Starting task [Stage-7:CONDITIONAL] in serial mode

INFO : Stage-4 is selected by condition resolver.

INFO : Stage-3 is filtered out by condition resolver.

INFO : Stage-5 is filtered out by condition resolver.

INFO : Starting task [Stage-4:MOVE] in serial mode

INFO : Moving data to: hdfs://hostname.zh:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_14-18-56_097_7619254651339763505-1/-ext-10000 from hdfs://hostname.zh:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_14-18-56_097_7619254651339763505-1/-ext-10002

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table default.test from hdfs://hostname.zh:8020/user/hive/warehouse/test/.hive-staging_hive_2019-08-11_14-18-56_097_7619254651339763505-1/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : Table default.test stats: [numFiles=2, numRows=3, totalSize=14, rawDataSize=11]

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Cumulative CPU: 2.13 sec HDFS Read: 3651 HDFS Write: 74 SUCCESS

INFO : Total MapReduce CPU Time Spent: 2 seconds 130 msec

INFO : Completed executing command(queryId=hive_20190811141818_0f210123-2878-44d2-82ee-19006bc3db9a); Time taken: 13.606 seconds

INFO : OK

No rows affected (13.795 seconds)

The Hive read and write privileges configured earlier take effect for the corresponding groups.

3.6 Verify HDFS permissions

Log in to Kerberos as the jast_r user

[root@hostname ~]# hdfs dfs -ls hdfs://hostname:8020/user/hive/warehouse/test

Found 2 items

-rwxrwx--x+ 2 hive hive 8 2019-08-11 13:49 hdfs://hostname:8020/user/hive/warehouse/test/000000_0

-rwxrwx--x+ 2 hive hive 6 2019-08-11 14:19 hdfs://hostname:8020/user/hive/warehouse/test/000000_0_copy_1

[root@hostname ~]# hdfs dfs -cat hdfs://hostname:8020/user/hive/warehouse/test/000000_0

a,b

1,2

[root@hostname ~]# hdfs dfs -rm hdfs://hostname:8020/user/hive/warehouse/test/000000_0

19/08/11 14:28:05 WARN fs.TrashPolicyDefault: Can't create trash directory: hdfs://hostname:8020/user/jast_r/.Trash/Current/user/hive/warehouse/test

org.apache.hadoop.security.AccessControlException: Permission denied: user=jast_r, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

[root@hostname ~]# hdfs dfs -put derby.log hdfs://hostname:8020/user/hive/warehouse/test/

put: Permission denied: user=jast_r, access=WRITE, inode="/user/hive/warehouse/test":hive:hive:drwxrwx--x

Log in to Kerberos as the jast_w user

[root@hostname ~]# hadoop fs -ls /user/hive/warehouse/test

ls: Permission denied: user=jast_w, access=READ_EXECUTE, inode="/user/hive/warehouse/test":hive:hive:drwxrwx--x

[root@hostname ~]# hadoop fs -ls /user/hive/warehouse

ls: Permission denied: user=jast_w, access=READ_EXECUTE, inode="/user/hive/warehouse":hive:hive:drwxrwx--x

[root@hostname ~]# hadoop fs -ls /user/hive

Found 3 items

drwx------ - hive hive 0 2019-08-11 12:00 /user/hive/.Trash

drwx------ - hive hive 0 2019-08-11 14:19 /user/hive/.staging

drwxrwx--x+ - hive hive 0 2019-08-11 13:47 /user/hive/warehouse

[root@hostname ~]# hdfs dfs -cat hdfs://hostname.zh:8020/user/hive/warehouse/test/000000_0

cat: Permission denied: user=jast_w, access=READ, inode="/user/hive/warehouse/test/000000_0":hive:hive:-rwxrwx--x

[root@hostname ~]# hdfs dfs -put derby.log hdfs://hostname.zh:8020/user/hive/warehouse/test

[root@hostname ~]# hdfs dfs -rm hdfs://hostname.zh:8020/user/hive/warehouse/test/000000_0

19/08/11 14:39:15 WARN fs.TrashPolicyDefault: Can't create trash directory: hdfs://hostname.zh:8020/user/jast_w/.Trash/Current/user/hive/warehouse/test

org.apache.hadoop.security.AccessControlException: Permission denied: user=jast_w, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

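Findings:

The read user (jast_r) can read everything, including the table data, but cannot add or delete content in HDFS.

The write user (jast_w) can read files outside the Hive directories but cannot read data under hive/warehouse. It can write data, yet it cannot delete data either. This differs from some material online, so I retested: the hive user can delete, but jast_w cannot. Note that the error shown above is raised while creating the trash directory under /user, so the delete fails at the move-to-trash step rather than on the table directory itself.

This shows that Hive privileges are synchronized to HDFS permissions.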
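The behavior observed above (jast_r can list and read but not write, jast_w can write but not list or read) is consistent with Sentry privileges being translated into HDFS permission bits. The sketch below encodes that translation as a small lookup. The mapping SELECT to r-x, INSERT to -wx, ALL to rwx is an assumption inferred from the transcripts in this section, and the function names `sentry_to_hdfs_perm` and `expected_acl_entry` are hypothetical helpers, not part of Sentry or Hadoop.

```shell
#!/usr/bin/env bash
# Map a Sentry table privilege to the HDFS permission bits one would expect
# on the table directory when HDFS/Sentry synchronization is enabled.
# ASSUMPTION: SELECT -> r-x, INSERT -> -wx, ALL -> rwx (inferred from the
# transcripts above, not taken from an API).
sentry_to_hdfs_perm() {
  case "$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')" in
    SELECT) printf '%s\n' 'r-x' ;;
    INSERT) printf '%s\n' '-wx' ;;
    ALL)    printf '%s\n' 'rwx' ;;
    *)      echo "unknown privilege: $1" >&2; return 1 ;;
  esac
}

# Build the ACL entry expected for a group holding the given privilege.
expected_acl_entry() {
  local group="$1" privilege="$2" perm
  perm="$(sentry_to_hdfs_perm "$privilege")" || return 1
  printf 'group:%s:%s\n' "$group" "$perm"
}

expected_acl_entry jast_r SELECT
expected_acl_entry jast_w INSERT
```

The printed entries can be compared by eye with the output of `hdfs dfs -getfacl /user/hive/warehouse/test` on the cluster to check whether the synchronization produced what the grants imply.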

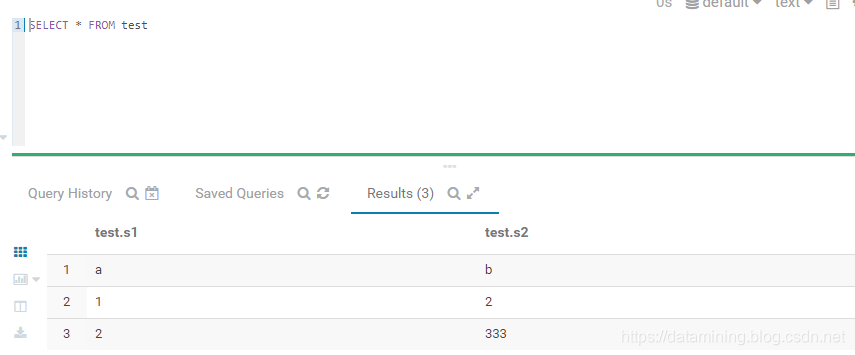

3.7 Hue verification

Create the jast_r and jast_w users in Hue, log in as each, and run the same Hive queries; the results are the same as above.

3.8 Impala verification

Log in via Kerberos as the jast_r user

[root@hostname ~]# impala-shell

Starting Impala Shell without Kerberos authentication

Error connecting: TTransportException, TSocket read 0 bytes

Kerberos ticket found in the credentials cache, retrying the connection with a secure transport.

Connected to hostname.zh:21000

Server version: impalad version 2.10.0-cdh5.13.0 RELEASE (build 2511805f1eaa991df1460276c7e9f19d819cd4e4)

***********************************************************************************

Welcome to the Impala shell.

(Impala Shell v2.10.0-cdh5.13.0 (2511805) built on Wed Oct 4 10:55:37 PDT 2017)

You can change the Impala daemon that you're connected to by using the CONNECT

command.

To see how Impala will plan to run your query without actually executing

it, use the EXPLAIN command. You can change the level of detail in the EXPLAIN

output by setting the EXPLAIN_LEVEL query option.

***********************************************************************************

[hostname.zh:21000] > select * from test ;

Query: select * from test

Query submitted at: 2019-08-11 15:26:33 (Coordinator: http://hostname:25000)

Query progress can be monitored at: http://hostname:25000/query_plan?query_id=d84fbf93ba1b86d2:d602912400000000

+--------+--------+

| s1 | s2 |

+--------+--------+

| a | b |

| 1 | 2 |

| 2 | 333 |

| test44 | test55 |

+--------+--------+

Fetched 4 row(s) in 0.17s

[hostname.zh:21000] > insert into test values('test','test');

Query: insert into test values('test','test')

Query submitted at: 2019-08-11 15:26:48 (Coordinator: http://hostname:25000)

ERROR: AuthorizationException: User 'jast_r@IZHONGHONG.COM' does not have privileges to execute 'INSERT' on: default.test

[hostname.zh:21000] > show tables;

Query: show tables

+------+

| name |

+------+

| test |

+------+

Fetched 1 row(s) in 0.01s

Log in via Kerberos as the jast_w user

[hostname.zh:21000] > select * from test ;

Query: select * from test

Query submitted at: 2019-08-11 15:32:36 (Coordinator: http://hostname:25000)

ERROR: AuthorizationException: User 'jast_w@IZHONGHONG.COM' does not have privileges to execute 'SELECT' on: default.test

[hostname.zh:21000] > insert into test values('test111','test000');

Query: insert into test values('test111','test000')

Query submitted at: 2019-08-11 15:32:44 (Coordinator: http://hostname:25000)

Query progress can be monitored at: http://hostname:25000/query_plan?query_id=b5426d5fbe985590:6d98dcd500000000

Modified 1 row(s) in 0.11s

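Once Impala is integrated with Sentry, Sentry can manage its privileges as well. The jast_r group, which holds the read role, can only run select and count on the test table and cannot insert data. The jast_w group, which holds the write role, can only insert into the test table and cannot run select or count. This shows that Sentry keeps Hive and Impala privileges in sync.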

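4. Sentry column-level privileges

The steps are the same as before: create a Kerberos account -> create a role -> grant privileges to the role -> grant the role to the user's group

Log in via Kerberos as the hive user and connect to HiveServer2.

4.1 Create a column-read role for the test table and grant it to a user group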

0: jdbc:hive2://hostname:10000/> CREATE ROLE test_column_read;

0: jdbc:hive2://hostname:10000/> GRANT SELECT(s2) ON TABLE test TO ROLE test_column_read;

0: jdbc:hive2://hostname:10000/> GRANT ROLE test_column_read TO GROUP jast_r;

0: jdbc:hive2://hostname:10000/> CREATE ROLE test_column_read;

INFO : Compiling command(queryId=hive_20190811161818_864832bd-5231-4cfb-8042-6450249101ec): CREATE ROLE test_column_read

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811161818_864832bd-5231-4cfb-8042-6450249101ec); Time taken: 0.11 seconds

INFO : Executing command(queryId=hive_20190811161818_864832bd-5231-4cfb-8042-6450249101ec): CREATE ROLE test_column_read

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811161818_864832bd-5231-4cfb-8042-6450249101ec); Time taken: 0.021 seconds

INFO : OK

No rows affected (0.194 seconds)

0: jdbc:hive2://hostname:10000/> GRANT SELECT(s2) ON TABLE test TO ROLE test_column_read;

INFO : Compiling command(queryId=hive_20190811162020_136407ae-891e-45d1-a9b5-2f52a0d4bd8e): GRANT SELECT(s2) ON TABLE test TO ROLE test_column_read

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811162020_136407ae-891e-45d1-a9b5-2f52a0d4bd8e); Time taken: 0.08 seconds

INFO : Executing command(queryId=hive_20190811162020_136407ae-891e-45d1-a9b5-2f52a0d4bd8e): GRANT SELECT(s2) ON TABLE test TO ROLE test_column_read

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811162020_136407ae-891e-45d1-a9b5-2f52a0d4bd8e); Time taken: 0.021 seconds

INFO : OK

No rows affected (0.11 seconds)

0: jdbc:hive2://hostname:10000/> GRANT ROLE test_column_read TO GROUP jast_r;

INFO : Compiling command(queryId=hive_20190811162323_224f9a66-9dd7-4fb9-ad9c-9c42cd3cf759): GRANT ROLE test_column_read TO GROUP jast_r

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20190811162323_224f9a66-9dd7-4fb9-ad9c-9c42cd3cf759); Time taken: 0.092 seconds

INFO : Executing command(queryId=hive_20190811162323_224f9a66-9dd7-4fb9-ad9c-9c42cd3cf759): GRANT ROLE test_column_read TO GROUP jast_r

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20190811162323_224f9a66-9dd7-4fb9-ad9c-9c42cd3cf759); Time taken: 0.012 seconds

INFO : OK

No rows affected (0.113 seconds)
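The three statements run in 4.1 follow a fixed pattern (create a role, grant a column SELECT to it, grant the role to a group), so they can be generated for any role, table, column, and group. The following is a minimal sketch; `column_read_role_sql` is a hypothetical helper name, and it only prints statements without contacting HiveServer2.

```shell
#!/usr/bin/env bash
# Print the HiveQL statements for a column-level read role.
# Arguments: role name, table name, column name, group name.
# This only generates text; nothing is executed against Hive.
column_read_role_sql() {
  local role="$1" table="$2" column="$3" group="$4"
  printf 'CREATE ROLE %s;\n' "$role"
  printf 'GRANT SELECT(%s) ON TABLE %s TO ROLE %s;\n' "$column" "$table" "$role"
  printf 'GRANT ROLE %s TO GROUP %s;\n' "$role" "$group"
}

# Reproduce the statements used in 4.1.
column_read_role_sql test_column_read test s2 jast_r
```

The output can be redirected to a file and run with `beeline -u <your JDBC URL> -f <file>` while holding a Kerberos ticket for the hive user; the JDBC URL is left as a placeholder because it depends on the cluster.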

4.2 Testing column read privileges

Log in via Kerberos as the jast_r user

0: jdbc:hive2://hostname:10000/> select s2 from test;

INFO : Compiling command(queryId=hive_20190811172828_148d8d32-33a1-49af-9fb9-62a24d8ad8dd): select s2 from test

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:s2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20190811172828_148d8d32-33a1-49af-9fb9-62a24d8ad8dd); Time taken: 0.219 seconds

INFO : Executing command(queryId=hive_20190811172828_148d8d32-33a1-49af-9fb9-62a24d8ad8dd): select s2 from test

INFO : Completed executing command(queryId=hive_20190811172828_148d8d32-33a1-49af-9fb9-62a24d8ad8dd); Time taken: 0.001 seconds

INFO : OK

+----------+--+

| s2 |

+----------+--+

| b |

| 2 |

| 333 |

| test000 |

| test55 |

| test000 |

+----------+--+

6 rows selected (0.378 seconds)

0: jdbc:hive2://hostname:10000/> select s1 from test;

Error: Error while compiling statement: FAILED: SemanticException No valid privileges User jast_cloumn does not have privileges for QUERY The required privileges: Server=server1->Db=default->Table=test->Column=s1->action=select; (state=42000,code=40000)

0: jdbc:hive2://hostname:10000/> select * from test;

Error: Error while compiling statement: FAILED: SemanticException No valid privileges User jast_cloumn does not have privileges for QUERY The required privileges: Server=server1->Db=default->Table=test->Column=s1->action=select; (state=42000,code=40000)

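As shown, the user can query only the column that was granted (s2); selecting s1 or * is rejected.

Next, check in HDFS whether this user can read the underlying data: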

[root@hostname ~]# hdfs dfs -ls /user/hive/warehouse

ls: Permission denied: user=jast_cloumn, access=READ_EXECUTE, inode="/user/hive/warehouse":hive:hive:drwxrwx--x

[root@hostname ~]# hdfs dfs -ls /user/hive

Found 3 items

drwx------ - hive hive 0 2019-08-11 15:00 /user/hive/.Trash

drwx------ - hive hive 0 2019-08-11 14:19 /user/hive/.staging

drwxrwx--x+ - hive hive 0 2019-08-11 13:47 /user/hive/warehouse

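The user has no permission to read the Hive data directly in HDFS.

Note: Sentry supports column-level grants only for SELECT; INSERT and ALL cannot be granted at the column level.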
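The restriction in the note above can be checked before a statement is ever sent to Hive. The sketch below builds a table-level or column-level GRANT and refuses a column list for anything other than SELECT. `build_grant` is a hypothetical helper, and the role name `write` is taken from the roles created earlier in this article.

```shell
#!/usr/bin/env bash
# Build a GRANT statement for a role.
# Arguments: privilege, table, role, optional column.
# A column is accepted only together with SELECT, mirroring the note above.
build_grant() {
  local privilege table role column
  privilege="$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')"
  table="$2"
  role="$3"
  column="${4:-}"
  if [ -n "$column" ] && [ "$privilege" != "SELECT" ]; then
    echo "column-level grants are only supported for SELECT, got: $privilege" >&2
    return 1
  fi
  if [ -n "$column" ]; then
    printf 'GRANT %s(%s) ON TABLE %s TO ROLE %s;\n' "$privilege" "$column" "$table" "$role"
  else
    printf 'GRANT %s ON TABLE %s TO ROLE %s;\n' "$privilege" "$table" "$role"
  fi
}

build_grant select test test_column_read s2   # column-level SELECT: allowed
build_grant insert test write                 # table-level INSERT: allowed
build_grant insert test write s1 || echo "rejected"   # column-level INSERT: refused
```

Failing early in a wrapper like this keeps a grant script from stopping halfway through on a statement Sentry would reject anyway.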

5. Sentry privilege commands in detail (for more, see: https://datamining.blog.csdn.net/article/details/99212770)

-- Privileges are SELECT, INSERT, and ALL

-- List all roles

show roles;

-- Create a role

create role role_name;

-- Drop a role

drop role role_name;

-- Grant read access on a database to a role

GRANT SELECT ON DATABASE db_name TO ROLE role_name;

-- Grant read access on column s1 of table test to role_name (the TABLE keyword may be omitted)

GRANT SELECT(s1) ON TABLE test TO ROLE role_name;
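To complement the commands above, the sketch below prints statements for inspecting what a role and a group currently hold, and for undoing the test setup from section 4. The helper names `inspect_role_sql` and `cleanup_role_sql` are hypothetical. The statements themselves (SHOW ROLE GRANT GROUP, SHOW GRANT ROLE, REVOKE ROLE ... FROM GROUP, DROP ROLE) are the Sentry forms as I understand them for Hive; verify them against your CDH version before relying on them.

```shell
#!/usr/bin/env bash
# Print statements that show which roles a group has and which
# privileges a role holds. Arguments: role name, group name.
inspect_role_sql() {
  local role="$1" group="$2"
  printf 'SHOW ROLE GRANT GROUP %s;\n' "$group"
  printf 'SHOW GRANT ROLE %s;\n' "$role"
}

# Print statements that detach a role from a group and then drop it.
cleanup_role_sql() {
  local role="$1" group="$2"
  printf 'REVOKE ROLE %s FROM GROUP %s;\n' "$role" "$group"
  printf 'DROP ROLE %s;\n' "$role"
}

inspect_role_sql test_column_read jast_r
cleanup_role_sql test_column_read jast_r
```

As with the grants, these would be run as the hive admin user in a beeline session.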

This article draws on the following WeChat official-account article:

https://mp.weixin.qq.com/s?__biz=MzI4OTY3MTUyNg==&mid=2247484811&idx=1&sn=5d54f0b0e85c2264033575c1b051b5b1&chksm=ec2ad582db5d5c94e7919faca495d4a5a8de21592cd1f95f93c02b98d92686238826a6a3c1fc&scene=21#wechat_redirect
