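Download ES

Download the ES package and upload it to the server. The download URL is:

https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.1.0.tar.gz

After the upload completes, extract it:

tar -zxvf elasticsearch-6.1.0.tar.gz

Install JDK

This guide uses JDK 8. Download and install it from the official site; the installation itself is not covered here.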

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin
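Because PATH=$PATH:$JAVA_HOME/bin appends the JDK to the end of PATH, any java that appears earlier on PATH takes precedence. That may be why the transcript that follows reports 1.8.0_101 while JAVA_HOME points at 1.8.0_181. The following is a minimal sketch for checking which java is picked up and whether it is Java 8; the helper name java_major is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the major version for a Java version string.
# Handles the legacy "1.x" scheme (1.8.0_101) and the newer scheme (11.0.2).
java_major() {
    v="$1"
    case "$v" in
        1.*) v="${v#1.}" ;;   # drop the legacy "1." prefix
    esac
    echo "${v%%[._-]*}"       # keep everything before the first ., _ or -
}

if command -v java >/dev/null 2>&1; then
    # java -version prints to stderr; the version is the first quoted field.
    ver=$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')
    echo "java on PATH: $(command -v java)"
    echo "version: $ver (major $(java_major "$ver"))"
    [ "$(java_major "$ver")" = "8" ] || echo "warning: this guide assumes JDK 8"
else
    echo "java not found on PATH; check JAVA_HOME and PATH"
fi
```

If the reported path is not under $JAVA_HOME/bin, putting $JAVA_HOME/bin at the front of PATH is one way to make the intended JDK win.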

[jast@001 es6]$ java -version

java version "1.8.0_101"

Java(TM) SE Runtime Environment (build 1.8.0_101-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.101-b13, mixed mode)

Modify Linux configuration

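1. Edit /etc/security/limits.conf and append the following:

* soft nofile 65536

* hard nofile 65536

2. Append the following line to the end of /etc/sysctl.conf, then run sysctl -p to apply it:

vm.max_map_count=262144

sysctl -p

Modify the ES configuration file (config/elasticsearch.yml)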
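Before editing the configuration, it may be worth confirming that the limits from the previous step took effect. limits.conf is applied at login, so this should be run from a fresh login shell of the user that will run ES. This is a minimal sketch; the helper check_min is hypothetical and the thresholds are simply the values used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a numeric setting meets the required minimum.
check_min() {
    name="$1"; actual="$2"; required="$3"
    if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ] 2>/dev/null; then
        echo "OK    $name = $actual"
    else
        echo "FAIL  $name = $actual (need >= $required)"
        return 1
    fi
}

problems=0

# Open-file limits for the current user (soft and hard).
check_min "nofile (soft)" "$(ulimit -Sn)" 65536 || problems=1
check_min "nofile (hard)" "$(ulimit -Hn)" 65536 || problems=1

# Kernel limit on memory map areas; read from /proc so no root is needed.
if [ -r /proc/sys/vm/max_map_count ]; then
    check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144 || problems=1
fi

if [ "$problems" -eq 0 ]; then
    echo "all checked limits look sufficient"
else
    echo "some limits are too low; re-check limits.conf and sysctl.conf"
fi
```

Since the configuration sets bootstrap.memory_lock: true, the memlock limit (ulimit -l) usually needs to be raised for the same user as well, typically to unlimited.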

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

# Cluster name

cluster.name: log_cluster

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

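# Node name

node.name: node_01

# Whether this node is master-eligible

node.master: true

# Whether this node can hold data

node.data: true

# Whether this node can pre-process documents before they are indexed (ingest node)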

node.ingest: true

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

# Data directory; defaults to data under the ES directory

#path.data: /path/to/data

#

# Path to log files:

#

# Log directory; defaults to logs under the ES directory

#path.logs: /path/to/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

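# Lock the process memory in RAM so that ES memory is not swapped out; frequent swapping drives up IOPS

bootstrap.memory_lock: true

# Disable the system call filter (seccomp) check

bootstrap.system_call_filter: false

# IP address of this machine; 0.0.0.0 binds to all interfaces

network.host: 10.10.0.1

# Whether to serve requests over HTTP; defaults to true (enabled)

http.enabled: true

# HTTP port for external access; defaults to 9200

http.port: 9200

# TCP port for communication between nodes; defaults to 9300

transport.tcp.port: 9300

# Whether to compress data sent over the TCP transport; defaults to false (no compression)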

transport.tcp.compress: true

#

# For more information, consult the network module documentation.

http.cors.enabled: true

http.cors.allow-origin: "*"

#

# --------------------------------- Discovery ----------------------------------

#

# Initial list of master-eligible nodes in the cluster; nodes joining the cluster are discovered through these hosts

discovery.zen.ping.unicast.hosts: ["10.10.0.1:9300","10.10.0.2:9300","10.10.0.3:9300"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

# Number of master-eligible nodes that must be visible before a master can be elected. Defaults to 1; the recommended value is (total number of master-eligible nodes / 2 + 1)

discovery.zen.minimum_master_nodes: 1

#

# For more information, consult the zen discovery module documentation.

#

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#

#gateway.recover_after_nodes: 3

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

# ---------------------------------- Cache -----------------------------------

# Enable scripting; painless by default

cluster.routing.allocation.same_shard.host: true

#

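# TODO: configure after the cluster is set up

#

#

# # Timeouts

discovery.zen.ping_timeout: 5s

discovery.zen.fd.ping_timeout: 5s

#

# # Whether indices are created automatically (true allows it; set to false to disable)

action.auto_create_index: true

#

# # Recovery is allowed only after N nodes in the cluster have started

gateway.recover_after_nodes: 1

# #

# # Timeout for the initial recovery process, counted from when the N nodes configured in the previous setting have started

gateway.recover_after_time: 5m

# #

# # Number of nodes expected in this cluster. Once these N nodes have started (and recover_after_nodes is also satisfied),

# # recovery begins immediately (without waiting for recover_after_time to elapse)

gateway.expected_nodes: 2

# # Thread pools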

thread_pool.search.size: 100

thread_pool.search.queue_size: 1000

Start ES
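Before starting the node, the split-brain formula quoted in the configuration (total number of master-eligible nodes / 2 + 1) can be worked through. This is a small sketch of the arithmetic, assuming the three hosts listed in discovery.zen.ping.unicast.hosts are all master-eligible; the function name quorum is hypothetical.

```shell
#!/bin/sh
# Quorum of master-eligible nodes: N / 2 + 1, using integer division.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

for n in 1 2 3 5; do
    echo "master-eligible nodes: $n -> minimum_master_nodes: $(quorum "$n")"
done
```

With three master-eligible nodes the formula gives 2, so the value 1 in the configuration is only appropriate while a single node is running. It would normally be raised once the other nodes join.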

./elasticsearch -d -p pid

Access the HTTP port in a browser (http://10.10.0.1:9200 with the settings above)
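The same check can be made from the command line. This is a minimal sketch, assuming curl is installed and the node listens on the network.host and http.port values configured earlier. The helper name extract_status is hypothetical; _cluster/health is the standard cluster health endpoint.

```shell
#!/bin/sh
ES_URL="http://10.10.0.1:9200"   # network.host and http.port from elasticsearch.yml

# Hypothetical helper: pull the "status" field out of a _cluster/health response.
extract_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Basic node information (node name, cluster name, version).
curl -s --connect-timeout 3 --max-time 10 "$ES_URL" \
    || echo "ES is not reachable at $ES_URL"

# Cluster health is reported as green, yellow or red.
status=$(curl -s --connect-timeout 3 --max-time 10 "$ES_URL/_cluster/health" | extract_status)
echo "cluster status: ${status:-unknown}"
```

If nothing answers, the log file under the ES logs directory is usually the first place to look.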

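If the node responds, ES has started successfully.

Install the head plugin

Install npm

yum install -y npm

Download the plugin and upload it to the server: GitHub - mobz/elasticsearch-head: A web front end for an elastic search cluster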

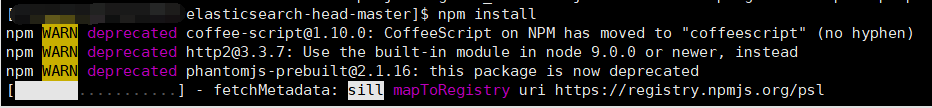

Run npm install in the plugin directory to install its dependencies.

During installation the following warning appears:

npm WARN elasticsearch-head@0.0.0 license should be a valid SPDX license expression

To clear the warning, edit the license field in package.json so that it holds a valid SPDX license expression.
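The same change can be made from the shell. The sketch below assumes Apache-2.0 is the appropriate SPDX identifier, on the basis that elasticsearch-head is distributed under the Apache License 2.0; check the licence file in the project to confirm. The function name set_license is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the "license" field of a package.json file.
# Usage: set_license <path/to/package.json> <SPDX identifier>
set_license() {
    file="$1"; spdx="$2"
    tmp="$file.tmp.$$"
    # Write to a temporary file first so the original is replaced only on success.
    sed 's/"license"[[:space:]]*:[[:space:]]*"[^"]*"/"license": "'"$spdx"'"/' "$file" > "$tmp" \
        && mv "$tmp" "$file"
}

# Run from the elasticsearch-head directory; Apache-2.0 is an assumption.
if [ -f package.json ]; then
    set_license package.json "Apache-2.0"
    grep '"license"' package.json || echo "no license field found in package.json"
fi
```

The warning is cosmetic, so npm install completes either way.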

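Start the head plugin in the background:

nohup npm run start > run.log &

Visit ip:9100; if the head page is displayed, the installation succeeded.

Install Kibana

Download https://artifacts.elastic.co/downloads/kibana/kibana-6.1.0-linux-x86_64.tar.gz, upload it to the server, and extract it.

Edit kibana.yml in Kibana's config directory: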

server.host: "10.10.0.1"

elasticsearch.url: "http://10.10.0.1:9200"

Start Kibana

nohup bin/kibana > kibana.log &

Visit 10.10.0.1:5601 in a browser
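Kibana can take a little while to become available after launch. The following is a sketch of polling it from the shell, assuming curl is installed. The retry and kibana_up helpers are hypothetical; /api/status is Kibana's status endpoint.

```shell
#!/bin/sh
KIBANA_URL="http://10.10.0.1:5601"   # server.host from kibana.yml, default port 5601

# Hypothetical helper: run a command up to <attempts> times, sleeping <delay>
# seconds between tries; succeeds as soon as the command succeeds.
retry() {
    attempts="$1"; delay="$2"; shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        i=$((i + 1))
        [ "$i" -le "$attempts" ] && sleep "$delay"
    done
    return 1
}

# Succeeds only when the status endpoint answers with a 2xx response.
kibana_up() {
    curl -sf --connect-timeout 2 --max-time 5 -o /dev/null "$KIBANA_URL/api/status"
}

if retry 3 2 kibana_up; then
    echo "Kibana is responding at $KIBANA_URL"
else
    echo "Kibana did not respond; check kibana.log"
fi
```

The attempt count and delay are arbitrary; a slower machine may need more of both.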

If the Kibana page loads, the installation succeeded.

Error 1:

Kibana fails on startup with the following error:

{"type":"error","@timestamp":"2019-08-30T05:47:57Z","tags":["fatal"],"pid":3026,"level":"fatal","error":{"message":"listen EADDRNOTAVAIL 192.169.1.111:5606","name":"Error","stack":"Error: listen EADDRNOTAVAIL 192.169.1.111:5606\n at Object.exports._errnoException (util.js:1020:11)\n at exports._exceptionWithHostPort (util.js:1043:20)\n at Server._listen2 (net.js:1249:19)\n at listen (net.js:1298:10)\n at net.js:1408:9\n at _combinedTickCallback (internal/process/next_tick.js:83:11)\n at process._tickCallback (internal/process/next_tick.js:104:9)","code":"EADDRNOTAVAIL"},"message":"listen EADDRNOTAVAIL 192.169.1.111:5606"}

FATAL { Error: listen EADDRNOTAVAIL 192.169.1.111:5606
    at Object.exports._errnoException (util.js:1020:11)
    at exports._exceptionWithHostPort (util.js:1043:20)
    at Server._listen2 (net.js:1249:19)
    at listen (net.js:1298:10)
    at net.js:1408:9
    at _combinedTickCallback (internal/process/next_tick.js:83:11)
    at process._tickCallback (internal/process/next_tick.js:104:9)
  cause:
   { Error: listen EADDRNOTAVAIL 192.169.1.111:5606
    at Object.exports._errnoException (util.js:1020:11)
    at exports._exceptionWithHostPort (util.js:1043:20)
    at Server._listen2 (net.js:1249:19)
    at listen (net.js:1298:10)
    at net.js:1408:9
    at _combinedTickCallback (internal/process/next_tick.js:83:11)
    at process._tickCallback (internal/process/next_tick.js:104:9)
     code: 'EADDRNOTAVAIL',
     errno: 'EADDRNOTAVAIL',
     syscall: 'listen',
     address: '192.169.1.111',
     port: 5606 },
  isOperational: true,
  code: 'EADDRNOTAVAIL',
  errno: 'EADDRNOTAVAIL',
  syscall: 'listen',
  address: '192.169.1.111',
  port: 5606 }

EADDRNOTAVAIL indicates that the address configured in server.host cannot be bound on this machine. Changing server.host to 0.0.0.0 and restarting resolves the problem:

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.1.111:9200"
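Binding to 0.0.0.0 exposes Kibana on every interface. An alternative is to keep a specific address in server.host after confirming that it really belongs to the machine. This is a minimal sketch, assuming a Linux host with the ip command (iproute2); the function names are hypothetical, and the candidate address is taken from the elasticsearch.url line.

```shell
#!/bin/sh
# Hypothetical helper: succeed when <ip> appears among the remaining arguments.
ip_in_list() {
    target="$1"; shift
    for addr in "$@"; do
        [ "$addr" = "$target" ] && return 0
    done
    return 1
}

# IPv4 addresses currently assigned to local interfaces (empty if ip is missing).
local_ipv4() {
    ip -o -4 addr show 2>/dev/null | awk '{split($4, a, "/"); print a[1]}'
}

candidate="192.168.1.111"   # the address intended for server.host
# local_ipv4 output is deliberately unquoted so each address becomes one argument.
if ip_in_list "$candidate" $(local_ipv4); then
    echo "$candidate is a local address; Kibana can bind to it"
else
    echo "$candidate is not assigned to this machine; binding to it fails with EADDRNOTAVAIL"
fi
```

Note that the failing address in the log is 192.169.1.111 while the Elasticsearch URL uses 192.168.1.111, so a mistyped octet in server.host is one plausible cause worth ruling out.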
