1 Problem Description

How should we define an evaluation metric for the accuracy of sleep sound recognition?

1.1 Designing a metric for score outputs

The output of sleep sound recognition is a probability distribution, as shown in the table below:

| $c_1$ | $c_2$ | $c_3$ | snort | speech | cough |
|---|---|---|---|---|---|
| 0.5 | 0.2 | 0.7 | 0.55 | 0.45 | 0.77 |

What is an appropriate evaluation metric in this setting?

1.2 Designing a metric for multi-label outputs

Setting the threshold $\delta = 0.5$ reduces the probability-distribution learning problem above to multi-label learning, as shown in the table below:

| $c_1$ | $c_2$ | $c_3$ | snort | speech | cough |
|---|---|---|---|---|---|
| 0.5 | 0.2 | 0.7 | 1 | 0 | 1 |

What is an appropriate evaluation metric in this setting?
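
A minimal sketch of the thresholding step, followed by two standard example-based multi-label metrics (Hamming loss and example-based F1), applied to the snort/speech/cough scores from the table. Assumptions: a score exactly equal to $\delta$ counts as positive, the ground-truth set `{"snort"}` is a made-up annotation for illustration, and all function names are hypothetical.

```python
from typing import Dict, List, Set

def binarize(scores: Dict[str, float], delta: float = 0.5) -> Set[str]:
    """Return the labels whose score reaches the threshold delta (score >= delta)."""
    return {label for label, p in scores.items() if p >= delta}

def hamming_loss(true_sets: List[Set[str]], pred_sets: List[Set[str]],
                 labels: List[str]) -> float:
    """Fraction of (sample, label) decisions where prediction and truth disagree."""
    # The symmetric difference t ^ p holds exactly the mismatched labels of one sample.
    errors = sum(len(t ^ p) for t, p in zip(true_sets, pred_sets))
    return errors / (len(true_sets) * len(labels))

def example_f1(true_sets: List[Set[str]], pred_sets: List[Set[str]]) -> float:
    """Per-sample F1 between the true and predicted label sets, averaged over samples."""
    scores = []
    for t, p in zip(true_sets, pred_sets):
        if not t and not p:
            scores.append(1.0)  # convention: two empty sets count as a perfect match
        else:
            scores.append(2 * len(t & p) / (len(t) + len(p)))
    return sum(scores) / len(scores)

labels = ["snort", "speech", "cough"]
pred = binarize({"snort": 0.55, "speech": 0.45, "cough": 0.77})
truth = {"snort"}  # hypothetical ground truth for this clip

print(sorted(pred))                           # ['cough', 'snort']
print(hamming_loss([truth], [pred], labels))  # 1 error out of 3 decisions
print(example_f1([truth], [pred]))            # 2 * 1 / (1 + 2)
```

Hamming loss judges each class decision separately, while example-based F1 judges the label set of each clip as a whole; which is more useful depends on whether a clip-level or a class-level view matters more for the sleep report.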

1.3 Designing a metric for top-n label outputs

When the output of sleep sound recognition is a list of top-n labels, how should the evaluation metric be designed?

(1) Scenario 1:

Suppose the top-5 ground-truth labels of sample $x_1$ are $d_1, d_2, d_3, d_4, d_5$.

Suppose the top-5 predicted labels of sample $x_1$ are $d_2, d_3, d_4, d_5, d_6$.

(2) Scenario 2:

Suppose sample $x_1$ has a single ground-truth label, $d_1$.

Suppose the top-5 predicted labels of sample $x_1$ are $d_2, d_1, d_3, d_4, d_5$.

2 Proposed Approaches

2.1 Designing a metric for score outputs

Can we borrow from label distribution learning to design the metric?
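
Label distribution learning usually compares a predicted distribution with a ground-truth distribution using measures such as Chebyshev distance, Kullback-Leibler divergence, cosine similarity and intersection similarity. The sketch below implements those four. It assumes the raw per-class scores are first rescaled to sum to 1 (multi-label scores like the ones in the table need not sum to 1), and the ground-truth distribution `[0.6, 0.1, 0.3]` is invented for illustration.

```python
import math
from typing import List

def normalize(scores: List[float]) -> List[float]:
    """Rescale non-negative scores so they sum to 1 (assumed preprocessing step)."""
    total = sum(scores)
    return [s / total for s in scores]

def chebyshev(p: List[float], q: List[float]) -> float:
    """Largest absolute per-class difference (lower is better)."""
    return max(abs(a - b) for a, b in zip(p, q))

def kl_divergence(p: List[float], q: List[float], eps: float = 1e-12) -> float:
    """KL(p || q) with p the ground truth; eps guards against dividing by zero (lower is better)."""
    return sum(a * math.log(a / max(b, eps)) for a, b in zip(p, q) if a > 0)

def cosine(p: List[float], q: List[float]) -> float:
    """Cosine similarity between the two vectors (higher is better)."""
    dot = sum(a * b for a, b in zip(p, q))
    return dot / (math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in q)))

def intersection(p: List[float], q: List[float]) -> float:
    """Sum of per-class minima; equals 1 for identical distributions (higher is better)."""
    return sum(min(a, b) for a, b in zip(p, q))

truth = [0.6, 0.1, 0.3]               # hypothetical annotated distribution (snort, speech, cough)
pred = normalize([0.55, 0.45, 0.77])  # model scores from the table, rescaled
for name, fn in [("chebyshev", chebyshev), ("kl", kl_divergence),
                 ("cosine", cosine), ("intersection", intersection)]:
    print(name, round(fn(truth, pred), 4))
```

The catch for this application is that a ground-truth distribution per clip would have to be annotated, which ordinary present/absent labels do not provide.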

2.2 Designing a metric for multi-label outputs

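Can we borrow from multi-label learning to design the metric? The AudioSet dataset uses two evaluation metrics, AUC and mAP. How could AUC and mAP be adapted to our application?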
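
One simple adaptation to try is to keep the AudioSet-style metrics but average them only over the sleep-related classes we care about. The sketch computes per-class AUC (the probability that a random positive clip outscores a random negative one) and AP, then takes their unweighted mean. The class subset, labels and scores are hypothetical, and every class needs at least one positive and one negative clip for both metrics to be defined.

```python
from typing import Dict, List, Tuple

def auc(labels: List[int], scores: List[float]) -> float:
    """ROC AUC = P(random positive outscores random negative); ties count as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(labels: List[int], scores: List[float]) -> float:
    """Mean of precision@k over the ranks k at which positives appear."""
    # Sort high to low; sorted() is stable, so tied scores keep their input order.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for k, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions)

def balanced_auc_map(y_true: Dict[str, List[int]], y_score: Dict[str, List[float]],
                     classes: List[str]) -> Tuple[float, float]:
    """Unweighted mean of per-class AUC and AP over the chosen classes only."""
    aucs = [auc(y_true[c], y_score[c]) for c in classes]
    aps = [average_precision(y_true[c], y_score[c]) for c in classes]
    return sum(aucs) / len(aucs), sum(aps) / len(aps)

# Hypothetical labels and scores for four clips, restricted to two sleep-related classes.
y_true = {"snort": [1, 0, 1, 0], "cough": [0, 1, 0, 0]}
y_score = {"snort": [0.8, 0.3, 0.2, 0.1], "cough": [0.1, 0.77, 0.4, 0.2]}
print(balanced_auc_map(y_true, y_score, ["snort", "cough"]))  # macro AUC 0.875, mAP about 0.917
```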

2.3 Designing a metric for top-n outputs

(1) For Scenario 1, the idea is as follows:

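When the model returns top-n labels, we compute the mean precision over those labels. Taking top-5 as an example: for sample $x_1$, compute the precision $p_1$ for label $d_1$, then the precision $p_2$ for $d_2$, and so on, and finally compute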

$$mP = \frac{\sum_{i=1}^{5} p_i}{5} \tag{1}$$
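
The text does not pin down how $p_i$ is computed for a single sample. One possible reading, used in the sketch below, takes $p_i$ as the precision at cutoff $i$, i.e. the fraction of the first $i$ predicted labels that appear anywhere in the ground-truth top-5. This interpretation and the function names are assumptions.

```python
from typing import List

def precision_at_k(pred: List[str], truth: List[str], k: int) -> float:
    """Fraction of the first k predicted labels that occur anywhere in the ground-truth list."""
    truth_set = set(truth)
    return sum(1 for d in pred[:k] if d in truth_set) / k

def mean_precision(pred: List[str], truth: List[str], n: int = 5) -> float:
    """Equation (1), assuming p_i is precision@i for i = 1..n."""
    return sum(precision_at_k(pred, truth, i) for i in range(1, n + 1)) / n

truth = ["d1", "d2", "d3", "d4", "d5"]
pred = ["d2", "d3", "d4", "d5", "d6"]
print(mean_precision(pred, truth))  # (1 + 1 + 1 + 1 + 0.8) / 5, about 0.96
```

Under this reading the score ignores where a label sits inside the ground-truth list; only membership counts.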

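An open question here is whether a label's position should be taken into account. For example, $d_2$ ranks second in the ground-truth list but first in the predicted list.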
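
If position should matter, a common option is normalized discounted cumulative gain (NDCG). The sketch assumes a graded relevance derived from the ground-truth order ($d_1$ gets 5, $d_5$ gets 1, labels outside the list get 0). That weighting is an illustrative choice, not something fixed by the problem.

```python
import math
from typing import Dict, List

def ndcg(pred: List[str], truth: List[str]) -> float:
    """NDCG@n, where n is the length of the ground-truth list."""
    n = len(truth)
    # Assumed graded relevance: first ground-truth label gets n, last gets 1, others 0.
    rel: Dict[str, int] = {d: n - i for i, d in enumerate(truth)}

    def dcg(labels: List[str]) -> float:
        # Discount the gain at 0-based position k by log2(k + 2).
        return sum(rel.get(d, 0) / math.log2(k + 2) for k, d in enumerate(labels[:n]))

    return dcg(pred) / dcg(truth)  # the ground-truth order is the ideal ranking

truth = ["d1", "d2", "d3", "d4", "d5"]
print(round(ndcg(["d2", "d3", "d4", "d5", "d6"], truth), 3))  # Scenario 1, about 0.713
print(round(ndcg(["d2", "d1", "d3", "d4", "d5"], truth), 3))  # only d1 and d2 swapped, about 0.964
```

A swap of two correct labels is penalized only mildly, whereas dropping $d_1$ altogether (Scenario 1) costs considerably more.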

(2) For Scenario 2, the idea is as follows:

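If a sample has only one ground-truth label $d_1$, a very crude approach is to count the prediction as correct whenever $d_1$ appears anywhere in the predicted top-n labels.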
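
The crude rule above is top-n accuracy (hit rate). A common refinement that also rewards ranking $d_1$ higher is the reciprocal rank, averaged over samples as mean reciprocal rank (MRR). In the sketch the second sample is hypothetical.

```python
from typing import List, Tuple

def hit_at_n(pred: List[str], truth_label: str, n: int = 5) -> int:
    """1 if the single ground-truth label is among the first n predictions, else 0."""
    return int(truth_label in pred[:n])

def reciprocal_rank(pred: List[str], truth_label: str) -> float:
    """1 / (1-based rank of the ground-truth label), or 0 if it was not predicted."""
    return 1.0 / (pred.index(truth_label) + 1) if truth_label in pred else 0.0

samples: List[Tuple[List[str], str]] = [
    (["d2", "d1", "d3", "d4", "d5"], "d1"),  # Scenario 2
    (["d3", "d4", "d5", "d6", "d7"], "d1"),  # hypothetical miss
]
top5_accuracy = sum(hit_at_n(p, t) for p, t in samples) / len(samples)
mrr = sum(reciprocal_rank(p, t) for p, t in samples) / len(samples)
print(top5_accuracy, mrr)  # 0.5 0.25
```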

3 Discussion

1. Where do YAMNet's training samples come from?

(1) Was it trained with supervised, unsupervised, or semi-supervised learning?

(2) How were its audio features, labels, and label probability values obtained?

2. 斌元 (Binyuan) mentioned that snoring audio scored inconsistently during testing:

(1) Scores for the same sample are consistent;

(2) Different snoring clips receive quite different YAMNet scores: some score 0.8, others 0.2;

(3) Should intensity be taken into account in the evaluation?

3. Predictions for turning over in bed and for teeth grinding are both inaccurate. How should this be handled?

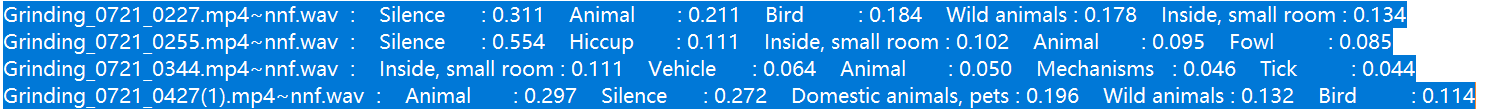

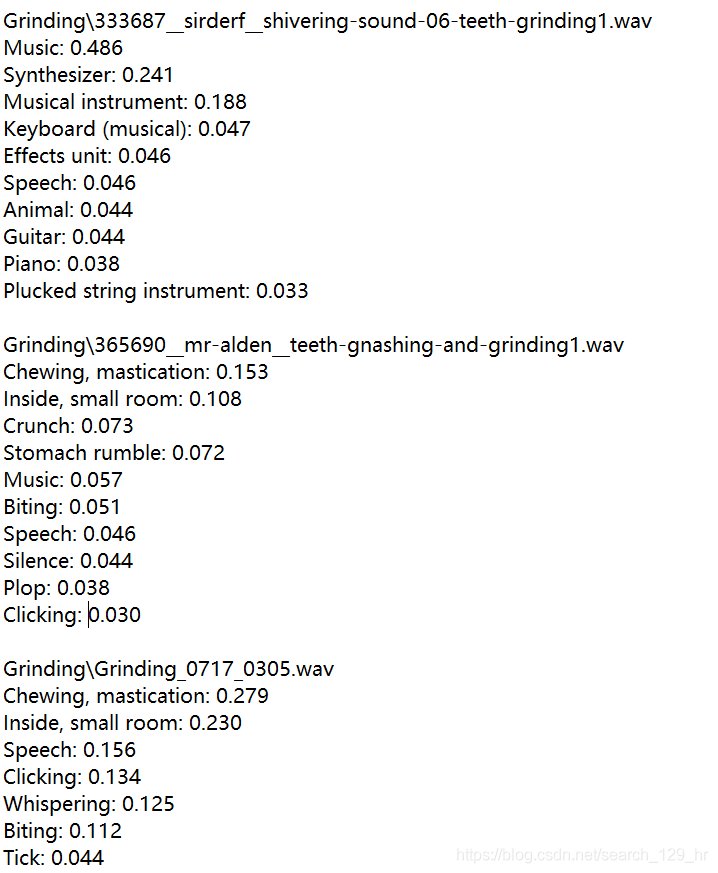

(1) Prediction results for teeth grinding:

4 Next Steps

4.1 Investigate: how is YAMNet evaluated?

For our metrics, we calculated the balanced average across all classes of AUC (also reported as the equivalent d-prime class separation), and mean Average Precision (mAP).

AUC is the area under the Receiver Operating Characteristic (ROC) curve, that is, the probability of correctly classifying a positive example (correct accept rate) as a function of the probability of incorrectly classifying a negative example as positive (false accept rate); perfect classification achieves AUC of 1.0 (corresponding to an infinite d-prime), and random guessing gives an AUC of 0.5 (d-prime of zero).
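
The AUC and d-prime figures are related by $d' = \sqrt{2}\,\Phi^{-1}(\mathrm{AUC})$, where $\Phi^{-1}$ is the inverse standard normal CDF; this is the usual conversion under the assumption of equal-variance Gaussian score distributions for positives and negatives. A small sketch using only Python's standard library:

```python
import math
from statistics import NormalDist

def d_prime(auc: float) -> float:
    """d' = sqrt(2) * inverse normal CDF of AUC (equal-variance Gaussian assumption)."""
    if auc >= 1.0:
        return math.inf   # perfect separation
    if auc <= 0.0:
        return -math.inf
    return math.sqrt(2) * NormalDist().inv_cdf(auc)

def auc_from_d_prime(d: float) -> float:
    """Inverse mapping: AUC = standard normal CDF of d' / sqrt(2)."""
    return NormalDist().cdf(d / math.sqrt(2))

print(d_prime(0.5))  # 0.0, random guessing
print(d_prime(1.0))  # inf, perfect classification
```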

mAP is the mean across classes of the Average Precision (AP), which is the proportion of positive items in a ranked list of trials (i.e., Precision) averaged across lists just long enough to include each individual positive trial.

AP is widely used as an indicator of precision that does not require a particular retrieval list length, but, unlike AUC, it is directly correlated with the prior probability of the class. Because most of our classes have very low priors ($< 10^{-4}$), the mAPs we report are typically small, even though the false alarm rates are good.
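
The prior-dependence point can be checked with a toy example: keep the same positive and negative scores but replicate the negatives ten times, which lowers the class prior. AUC stays the same while AP drops. All scores are made up, and the helper names are hypothetical.

```python
from typing import List, Tuple

def auc(labels: List[int], scores: List[float]) -> float:
    """P(random positive outscores random negative); ties count as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(labels: List[int], scores: List[float]) -> float:
    """Mean precision@k over the ranks k at which positives appear."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for k, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / k
    return total / hits

def metrics_at_prior(copies: int) -> Tuple[float, float]:
    """Same score pattern, but the negatives are repeated `copies` times (lower prior)."""
    pos, neg = [0.9, 0.6], [0.7, 0.3]  # made-up scores
    scores = pos + neg * copies
    labels = [1] * len(pos) + [0] * (len(neg) * copies)
    return auc(labels, scores), average_precision(labels, scores)

for copies in (1, 10):
    print(copies, metrics_at_prior(copies))
# copies=1:  AUC 0.75, AP = (1 + 2/3) / 2, about 0.83
# copies=10: AUC 0.75, AP = (1 + 2/12) / 2, about 0.58
```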

On the 20,366-segment AudioSet eval set, over the 521 included classes, the balanced average d-prime is 2.318, balanced mAP is 0.306, and the balanced average lwlrap is 0.393.

4.2 Investigate: how were AudioSet's labels annotated?

Segments are proposed for labeling using searches based on metadata, context (e.g., links), and content analysis.

A website that provides sound clips: freesound.org.

This set of classes will allow us to collect labeled data for training and evaluation.

For each segment, raters were asked to independently rate the presence of one or more labels. The possible ratings were “present”, “not present” and “unsure”. Each segment was rated by three raters and a majority vote was required to record an overall rating. For speed, a segment’s third rating was not collected if the first two ratings agreed for all labels.
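
The rating protocol can be sketched as follows. This illustrates the procedure as described and is not AudioSet's actual tooling. The class names and ratings in the example are hypothetical, and returning `None` when all three raters disagree is an assumption, since the text does not say how such cases were recorded.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional

Ratings = Dict[str, str]  # label -> "present" | "not present" | "unsure"

def majority(votes: List[str]) -> Optional[str]:
    """Rating held by a strict majority of votes, or None if there is none."""
    rating, count = Counter(votes).most_common(1)[0]
    return rating if count > len(votes) / 2 else None

def rate_segment(r1: Ratings, r2: Ratings,
                 third_rater: Callable[[], Ratings]) -> Dict[str, Optional[str]]:
    """Aggregate one segment's labels following the described protocol."""
    if r1 == r2:  # first two raters agree on every label: skip the third rating
        return dict(r1)
    r3 = third_rater()  # only requested when the first two disagree somewhere
    return {label: majority([r1[label], r2[label], r3[label]]) for label in r1}

r1 = {"Snoring": "present", "Speech": "not present"}
r2 = {"Snoring": "present", "Speech": "present"}
print(rate_segment(r1, r2, lambda: {"Snoring": "present", "Speech": "not present"}))
# {'Snoring': 'present', 'Speech': 'not present'}
```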

The raters were unanimous in 76.2% of votes. The “unsure” rating was rare, representing only 0.5% of responses, so 2:1 majority votes account for 23.6% of the decisions. Categories that achieved the highest rater agreement include “Christmas music”, “Accordion” and “Babbling” ($> 0.92$); while some categories with low agreement include “Basketball bounce”, “Boiling” and “Bicycle” ($< 0.17$).
