chatbot1.2

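How should embeddings handle polysemous words?

- Give each sense its own vector and superimpose them. Within a given facet (subspace), the word lies close to the vectors of other words that share that sense.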

How can phrases be identified and their vectors learned?

- Words that repeatedly appear together are treated as a phrase
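
The co-occurrence idea can be sketched with a plain count of adjacent word pairs. This is only an illustration: the function name `find_phrases` and the `min_count` threshold are hypothetical, and a real system would probably also normalize by how frequent each individual word is.

```python
from collections import Counter

def find_phrases(sentences, min_count=2):
    """Return adjacent word pairs that co-occur at least min_count times."""
    pair_counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        # Count every pair of adjacent tokens in the sentence.
        pair_counts.update(zip(tokens, tokens[1:]))
    return {pair for pair, count in pair_counts.items() if count >= min_count}

# Toy corpus, made up for illustration.
corpus = [
    "i love new york",
    "new york is big",
    "a new idea",
]
phrases = find_phrases(corpus)
print(phrases)
```

Only the pair that appears in more than one sentence survives the threshold, so it could then be merged into a single token before training the vectors.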

How should new, previously unseen words be handled?

- Average the context vectors, or guess from the context
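
A minimal sketch of context averaging, assuming the unseen word is surrounded by words that already have vectors. The helper names `average_vectors` and `guess_vector` and the toy 2-dimensional embeddings are hypothetical.

```python
def average_vectors(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]

def guess_vector(context_words, embeddings):
    """Estimate a vector for an unseen word from the known words around it."""
    known = [embeddings[w] for w in context_words if w in embeddings]
    if not known:
        return None  # nothing to average; the caller must pick a fallback
    return average_vectors(known)

# Toy embeddings, made up for illustration.
embeddings = {"drink": [1.0, 0.0], "cup": [0.0, 1.0], "hot": [1.0, 1.0]}
# "matcha" is unseen; its context words are "drink", "hot", and "cup".
matcha = guess_vector(["drink", "hot", "cup"], embeddings)
print(matcha)
```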

How much data is needed to train word vectors with unsupervised learning?

- GloVe

- Word2vec

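How can existing data be used to refine the vectors?

Retrofit

- The gray nodes are the vectors learned by unsupervised training; the white nodes are the knowledge-base words and the relations between them. Each white node is learned so that it stays as close as possible to its gray counterpart while strengthening or weakening the relations between the knowledge-base words. The result is a new set of vectors that combines the features of both, which leads to the following:

- ConceptNet embedding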
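
The notes give no formula for this step, so the following is only a sketch under stated assumptions: each new vector is repeatedly set to a weighted average of its original (gray) vector and the current vectors of its knowledge-base neighbors. The function name `retrofit`, the weights `alpha = 1` and `beta = 1/degree`, and the toy data are all assumptions, and this is not claimed to be how ConceptNet embedding is actually built.

```python
def retrofit(original, neighbors, iterations=10, alpha=1.0):
    """Pull each word toward its knowledge-base neighbors while staying near its original vector."""
    new = {word: list(vec) for word, vec in original.items()}
    for _ in range(iterations):
        for word, linked in neighbors.items():
            linked = [n for n in linked if n in new]
            if word not in new or not linked:
                continue
            beta = 1.0 / len(linked)  # assumed weighting: each neighbor counts 1/degree
            updated = []
            for d in range(len(new[word])):
                pulled = sum(beta * new[n][d] for n in linked)
                updated.append((alpha * original[word][d] + pulled)
                               / (alpha + beta * len(linked)))
            new[word] = updated
    return new

# Toy vectors and a toy knowledge base with one synonym link (illustrative only).
original = {"happy": [1.0, 0.0], "glad": [0.0, 1.0], "car": [-1.0, -1.0]}
neighbors = {"happy": ["glad"], "glad": ["happy"]}
retrofitted = retrofit(original, neighbors)
print(retrofitted)
```

Linked words move toward each other but do not collapse onto one point, because the `alpha` term keeps each one anchored to its original vector; words without knowledge-base links are left unchanged.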

RNN

https://r2rt.com/recurrent-neural-networks-in-tensorflow-i.html

demo1

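- For this task we can compute, in theory, what accuracy a roughly accurate model and a very accurate model should reach, and then compare the trained model against those theoretical figures.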

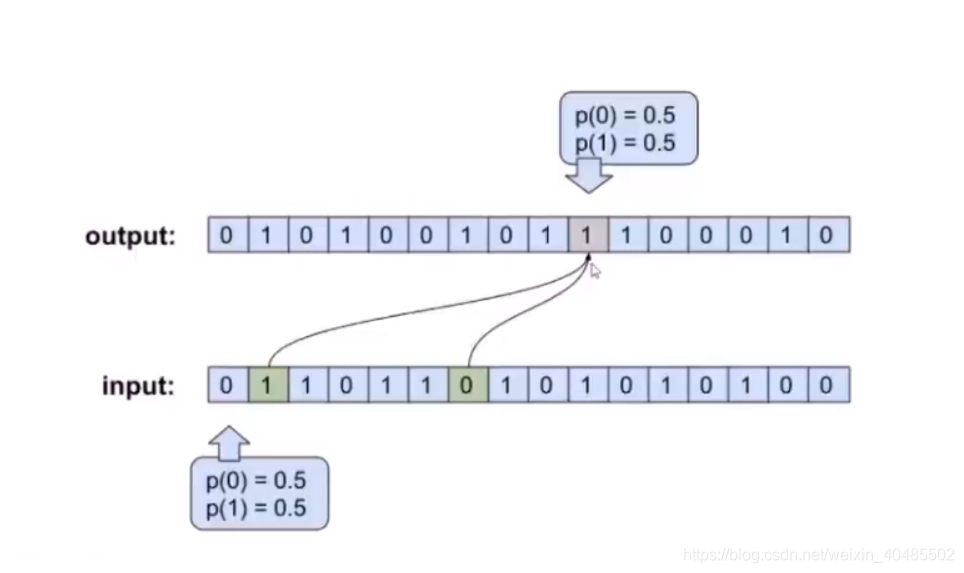

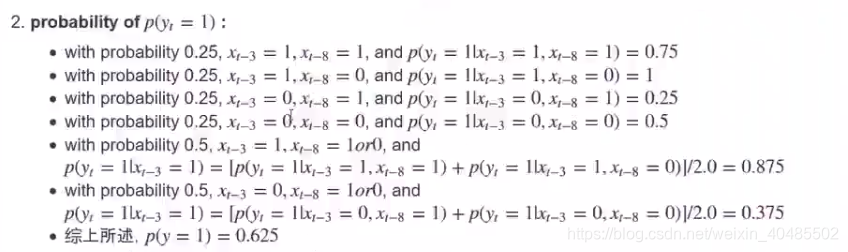

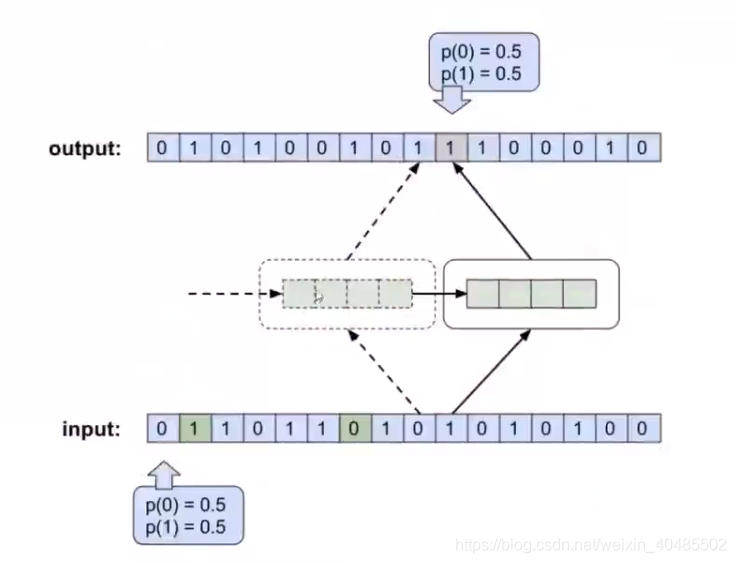

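The input is a simple sequence of 0s and 1s. Although the values are arranged as a sequence, they are independent of one another.

The output sequence is not fully independent: part of it carries time-dependent information. Every output node is related to the input sequence. The probability that a node is 0 or 1 is determined by a prior probability together with the input values at positions t-3 and t-8, as shown in the figure.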
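
The rule can be tabulated directly. The sketch below uses the same constants as the `gen_data` function in these notes (a prior of 0.5, plus 0.5 when the input at t-3 is 1, minus 0.25 when the input at t-8 is 1); the function name `prob_output_is_one` is hypothetical.

```python
def prob_output_is_one(x_t3, x_t8):
    """P(Y_t = 1) given the inputs 3 and 8 steps earlier."""
    p = 0.5            # prior probability
    if x_t3 == 1:
        p += 0.5       # a 1 at t-3 raises the probability
    if x_t8 == 1:
        p -= 0.25      # a 1 at t-8 lowers it
    return p

table = {(a, b): prob_output_is_one(a, b) for a in (0, 1) for b in (0, 1)}
# Inputs are independent fair coin flips, so each combination has weight 1/4.
marginal = sum(table.values()) / 4
print(table)
print(marginal)
```

The marginal is what a model that has learned neither dependency can predict at best.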

    import numpy as np
    import tensorflow as tf
    %matplotlib inline
    import matplotlib.pyplot as plt

    # Global config variables
    num_steps = 5  # number of truncated backprop steps ('n' in the discussion above)
    batch_size = 200
    num_classes = 2
    state_size = 4
    learning_rate = 0.1

    def gen_data(size=1000000):
        """
        Generate synthetic sequence data.
        :param size: total length of the input and output sequences
        :return: X, Y: the input and output sequences, rank-1 numpy arrays (i.e. vectors)
        """
        X = np.array(np.random.choice(2, size=(size,)))
        Y = []
        for i in range(size):
            threshold = 0.5
            if X[i-3] == 1:
                threshold += 0.5
            if X[i-8] == 1:
                threshold -= 0.25
            if np.random.rand() > threshold:
                Y.append(0)
            else:
                Y.append(1)
        return X, np.array(Y)

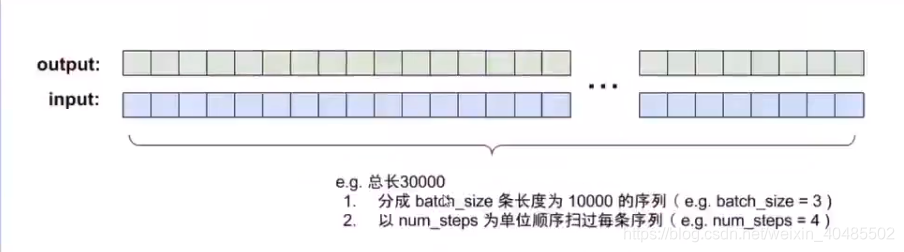

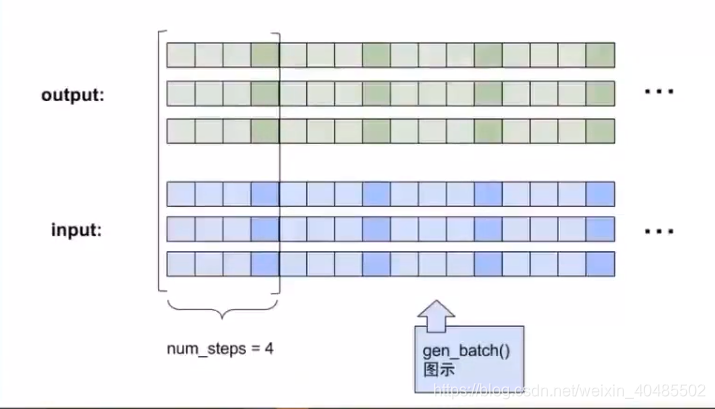

    # adapted from https://github.com/tensorflow/tensorflow/blob/master/tensorflow/models/rnn/ptb/reader.py
    def gen_batch(raw_data, batch_size, num_steps):
        """
        Produce minibatches.
        :param raw_data: all of the data, an (input, output) tuple
        :param batch_size: number of samples in one minibatch; each sample is a sequence, so this is the number of sequences
        :param num_steps: length of each sequence sample
        :return: a generator that yields a tuple holding one minibatch of input and output sequences
        """
        raw_x, raw_y = raw_data
        data_length = len(raw_x)

        # partition raw data into batches and stack them vertically in a data matrix
        batch_partition_length = data_length // batch_size
        data_x = np.zeros([batch_size, batch_partition_length], dtype=np.int32)
        data_y = np.zeros([batch_size, batch_partition_length], dtype=np.int32)  # same shape as data_x
        for i in range(batch_size):
            data_x[i] = raw_x[batch_partition_length * i:batch_partition_length * (i + 1)]
            data_y[i] = raw_y[batch_partition_length * i:batch_partition_length * (i + 1)]

        # further divide batch partitions into num_steps for truncated backprop
        epoch_size = batch_partition_length // num_steps
        for i in range(epoch_size):
            x = data_x[:, i * num_steps:(i + 1) * num_steps]
            y = data_y[:, i * num_steps:(i + 1) * num_steps]
            yield (x, y)

    def gen_epochs(n, num_steps):
        for i in range(n):
            yield gen_batch(gen_data(), batch_size, num_steps)
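
To see what the partitioning does, here is a toy pure-Python version of the same slicing applied to a short list instead of numpy arrays. The name `toy_batches` and the tiny sizes are hypothetical and only meant to make the layout visible.

```python
def toy_batches(raw, batch_size, num_steps):
    """Yield lists of batch_size rows, each row holding num_steps consecutive items."""
    partition_length = len(raw) // batch_size
    # Row i is the i-th contiguous chunk of the raw sequence.
    rows = [raw[i * partition_length:(i + 1) * partition_length]
            for i in range(batch_size)]
    for k in range(partition_length // num_steps):
        yield [row[k * num_steps:(k + 1) * num_steps] for row in rows]

batches = list(toy_batches(list(range(12)), batch_size=2, num_steps=3))
for batch in batches:
    print(batch)
```

Each row of the second batch continues exactly where the same row of the first batch stopped, which is why the final RNN state of one batch can be fed in as the initial state of the next.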

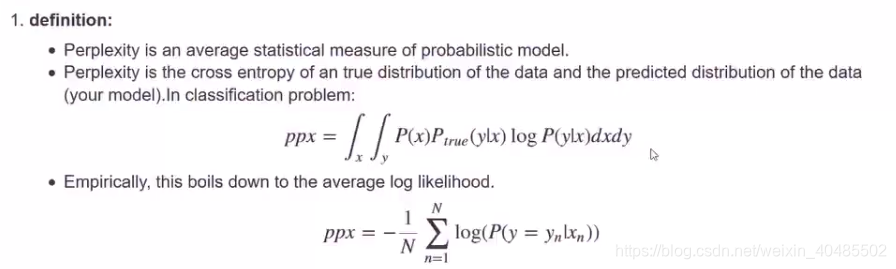

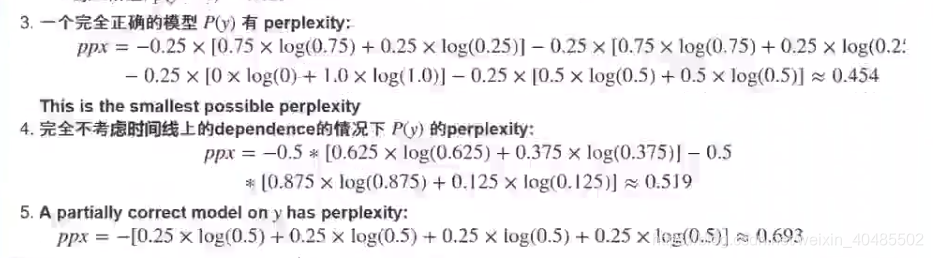

Calculate the perplexity

- In 3, the perfect model accounts for both the t-3 and the t-8 dependencies

- In 4, the imperfect model ignores the time dependencies
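
The theoretical losses can be worked out from the data-generating rule alone. The sketch below assumes each model predicts the true probability given the information it has learned, and reports the expected cross-entropy in nats together with the perplexity, taken here as the exponential of that loss. The variable names are hypothetical.

```python
import math

def entropy(p):
    """Binary cross-entropy (in nats) of predicting the true probability p."""
    return -sum(q * math.log(q) for q in (p, 1.0 - p) if q > 0)

# P(Y=1 | x[t-3], x[t-8]) as defined by the data generator.
p_one = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 1.0, (1, 1): 0.75}

# No dependency learned: always predict the marginal P(Y=1).
loss_none = entropy(sum(p_one.values()) / 4)

# Only the t-3 dependency learned: average out x[t-8].
loss_t3 = sum(entropy((p_one[(a, 0)] + p_one[(a, 1)]) / 2) for a in (0, 1)) / 2

# Both dependencies learned: predict the exact conditional probability.
loss_both = sum(entropy(p) for p in p_one.values()) / 4

for name, loss in [("none", loss_none), ("t-3 only", loss_t3), ("both", loss_both)]:
    print(name, round(loss, 2), "perplexity", round(math.exp(loss), 2))
```

A training loss that plateaus near the middle value suggests the network has picked up the t-3 dependency but not the t-8 one, which is plausible when the number of truncated backprop steps is smaller than 8.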

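A simple RNN model implementation

- Each cell passes its own state on as an input to the next cell, and it also feeds its own observation into the RNN as a second input. The two inputs are concatenated and mapped to a four-dimensional vector, and that four-dimensional vector is then mapped to the output. This is how an RNN model predicts an output given an input.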
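
The concatenate-and-map step described above can be written out without TensorFlow. This is a minimal pure-Python sketch with a tanh activation; the function name `rnn_step` and the constant weights of 0.1 are illustrative assumptions, and a trained network would learn its own weights.

```python
import math

def rnn_step(x_one_hot, state, W, b):
    """One vanilla RNN step: new_state = tanh([x, state] @ W + b)."""
    joined = x_one_hot + state  # list concatenation: input followed by previous state
    return [
        math.tanh(sum(joined[i] * W[i][j] for i in range(len(joined))) + b[j])
        for j in range(len(b))
    ]

num_classes, state_size = 2, 4
# Illustrative weights (all 0.1), shape [num_classes + state_size, state_size].
W = [[0.1] * state_size for _ in range(num_classes + state_size)]
b = [0.0] * state_size

state = [0.0] * state_size            # initial state
for bit in [1, 0, 1]:                 # a short input sequence
    x_one_hot = [1.0 if k == bit else 0.0 for k in range(num_classes)]
    state = rnn_step(x_one_hot, state, W, b)
print(state)
```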

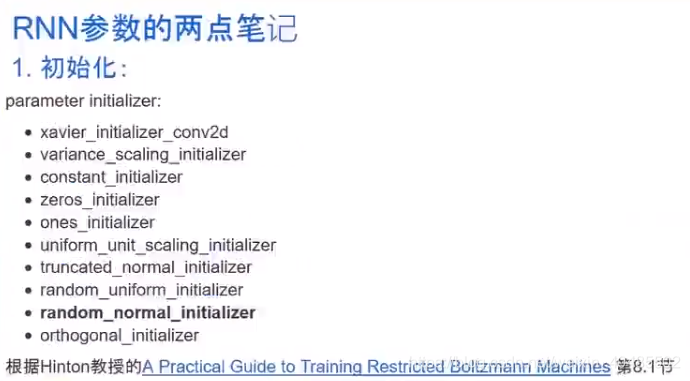

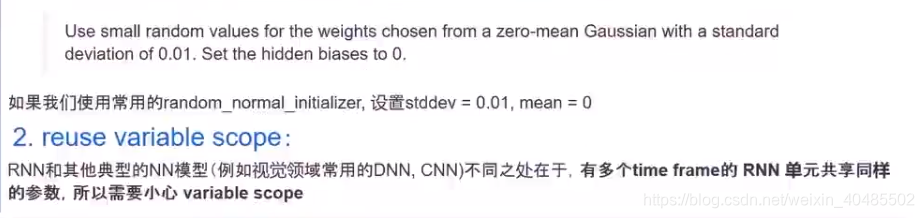

- Default graph, variable_scope, name_scope

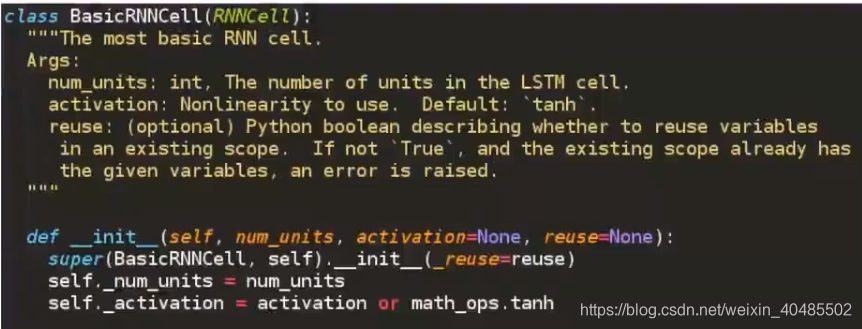

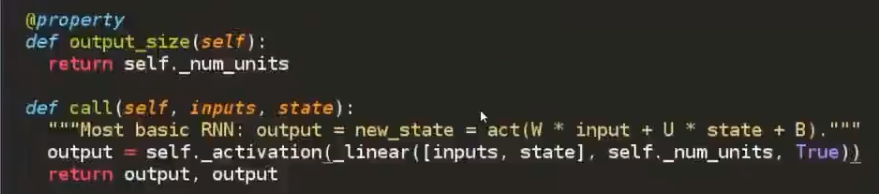

- Custom RNN cell

#### TensorFlow tip: tf.placeholder ####

- Adds a node to the computation graph that is used to hold data

- A placeholder has no default data; its data is supplied entirely from outside. Note the difference between tf.Variable, tf.Tensor, and tf.placeholder

- External input data: tf.placeholder

- Intermediate data: tf.Tensor, the output of a TensorFlow operation

- Parameters: tf.Variable

The placeholders and RNN inputs for the model:

    """
    Placeholders
    """
    x = tf.placeholder(tf.int32, [batch_size, num_steps], name='input_placeholder')
    y = tf.placeholder(tf.int32, [batch_size, num_steps], name='labels_placeholder')
    # The current state, which is also one of the inputs to the next cell
    init_state = tf.zeros([batch_size, state_size])

    """
    RNN Inputs
    """
    # Turn our placeholder into a list of one-hot tensors:
    # rnn_inputs is a list of num_steps tensors with shape [batch_size, num_classes]
    # Feed the placeholder defined above into the RNN cells:
    # each 0/1 digit of the input sequence becomes a two-dimensional one-hot vector
    x_one_hot = tf.one_hot(x, num_classes)

    rnn_inputs = tf.unstack(x_one_hot, axis=1)

    """
    Definition of rnn_cell

    This is very similar to the __call__ method on Tensorflow's BasicRNNCell. See:
    https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/rnn/python/ops/core_rnn_cell_impl.py#L95
    """
    # state_size is the dimension of the hidden state; W maps the concatenated
    # [input, state] vector of size num_classes + state_size to a new state of size state_size.
    # This block only defines the variables.
    with tf.variable_scope('rnn_cell'):
        W = tf.get_variable('W', [num_classes + state_size, state_size])
        b = tf.get_variable('b', [state_size], initializer=tf.constant_initializer(0.0))

    # Here the variables defined above are used to build one RNN cell after another,
    # all sharing the same parameters.
    def rnn_cell(rnn_input, state):
        with tf.variable_scope('rnn_cell', reuse=True):
            # reuse=True: if the variable already exists, use it directly instead of creating it again
            W = tf.get_variable('W', [num_classes + state_size, state_size])
            b = tf.get_variable('b', [state_size], initializer=tf.constant_initializer(0.0))
        return tf.tanh(tf.matmul(tf.concat([rnn_input, state], 1), W) + b)

init_state, state, final_state

    """
    Adding rnn_cells to graph

    This is a simplified version of the "static_rnn" function from Tensorflow's api. See:
    https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/rnn/python/ops/core_rnn.py#L41

    Note: In practice, using "dynamic_rnn" is a better choice than the "static_rnn":
    https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/ops/rnn.py#L390
    """
    # Initial state: the first RNN cell also needs an initial state, usually zeros
    state = init_state
    rnn_outputs = []
    for rnn_input in rnn_inputs:
        state = rnn_cell(rnn_input, state)
        rnn_outputs.append(state)

    final_state = rnn_outputs[-1]

    """
    Predictions, loss, training step

    Losses is similar to the "sequence_loss"
    function from Tensorflow's API, except that here we are using a list of 2D tensors, instead of a 3D tensor. See:
    https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/seq2seq/python/ops/loss.py#L30
    """
    # logits and predictions
    with tf.variable_scope('softmax'):
        W = tf.get_variable('W', [state_size, num_classes])
        b = tf.get_variable('b', [num_classes], initializer=tf.constant_initializer(0.0))
    logits = [tf.matmul(rnn_output, W) + b for rnn_output in rnn_outputs]
    predictions = [tf.nn.softmax(logit) for logit in logits]

    # Turn our y placeholder into a list of labels
    y_as_list = tf.unstack(y, num=num_steps, axis=1)

    # losses and train_step
    # Compute the loss function and define the optimizer.
    # The hidden state of each time frame is mapped to that time frame's
    # final output (prediction), the same as the top layer of CBOW or skip-gram.
    losses = [tf.nn.sparse_softmax_cross_entropy_with_logits(labels=label, logits=logit)
              for logit, label in zip(logits, y_as_list)]
    total_loss = tf.reduce_mean(losses)
    train_step = tf.train.AdagradOptimizer(learning_rate).minimize(total_loss)
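
For intuition about the loss used above, the per-example quantity can be reproduced in a few lines of plain Python. This is a sketch of the standard softmax cross-entropy with an integer label, not TensorFlow's implementation; the function name is hypothetical.

```python
import math

def sparse_softmax_cross_entropy(logits, label):
    """Cross-entropy of one example: -log(softmax(logits)[label])."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum_exp - logits[label]

loss_uniform = sparse_softmax_cross_entropy([0.0, 0.0], 1)    # model is undecided
loss_confident = sparse_softmax_cross_entropy([0.0, 5.0], 1)  # model favors the true class
print(loss_uniform, loss_confident)
```

An undecided two-class model scores ln 2, about 0.69, so a training loss that stays near that value would mean the network has learned nothing yet.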

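tf.variable_scope('softmax') and tf.variable_scope('rnn_cell', reuse=True) each contain two tf.Variable objects named W and b. Because they live in different variable scopes, they are different objects even though they use the same names.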

    # Print all variables
    all_vars = [node.name for node in tf.global_variables()]
    for var in all_vars:
        print(var)
    # rnn_cell/W:0
    # rnn_cell/b:0
    # softmax/W:0
    # softmax/b:0
    # rnn_cell/W/Adagrad:0  <- variables used by the optimizer
    # rnn_cell/b/Adagrad:0
    # softmax/W/Adagrad:0
    # softmax/b/Adagrad:0

    all_node_names = [node for node in tf.get_default_graph().as_graph_def().node]
    # or: tf.get_default_graph().get_operations()
    all_node_values = [node.values() for node in tf.get_default_graph().get_operations()]

    for i in range(0, len(all_node_values), 50):
        print("output and operation %d:" % i)
        print(all_node_values[i])
        print('---------------------------')
        print(all_node_names[i])
        print('\n')
        print('\n')

    for i in range(len(all_node_values)):
        print('%d:%s' % (i, all_node_values[i]))

Tensor naming rule

add_7:0 is output 0 (the first output tensor) of the operation named add_7. TensorFlow appends a numeric suffix to keep operation names unique (add, add_1, add_2, ...), so add_7 is an add operation created after earlier ones had already taken the names add through add_6.

    """
    Train the network
    """
    def train_network(num_epochs, num_steps, state_size=4, verbose=True):
        with tf.Session() as sess:
            sess.run(tf.global_variables_initializer())
            training_losses = []
            for idx, epoch in enumerate(gen_epochs(num_epochs, num_steps)):
                training_loss = 0
                training_state = np.zeros((batch_size, state_size))
                if verbose:
                    print("\nEPOCH", idx)
                for step, (X, Y) in enumerate(epoch):
                    # Carry the final state of this batch over as the next initial state
                    tr_losses, training_loss_, training_state, _ = \
                        sess.run([losses, total_loss, final_state, train_step],
                                 feed_dict={x: X, y: Y, init_state: training_state})
                    training_loss += training_loss_
                    if step % 100 == 0 and step > 0:
                        if verbose:
                            print("Average loss at step", step,
                                  "for last 100 steps:", training_loss/100)
                        training_losses.append(training_loss/100)
                        training_loss = 0
        return training_losses

    training_losses = train_network(1, num_steps)
    plt.plot(training_losses)
