A Concise and Easy-to-Understand Introduction to C#

Introduction

One of the main applications of frequentist statistics is the comparison of sample means and variances between one or more groups, known as statistical hypothesis testing. A statistic summarizes (compresses) a probability distribution; for example, the Gaussian distribution can be summarized by its mean and standard deviation. In the frequentist view, such statistics have unknown but fixed true values, while their estimates from data are random variables, and the question is whether groups differ significantly with respect to these estimated values.

Suppose, for example, that a researcher is interested in the growth of children and wonders whether boys and girls of the same age, e.g. twelve years old, have the same height; the researcher collects a data set of the random variable height in some school. In this case, the randomness of height arises from the sampled population (the twelve-year-old children the researcher happens to find) and not necessarily from noise, unless the measuring method is very inaccurate (this leads into the field of metrology). Different children from other schools would have led to different data.

Hypothesis

Assuming the research question is formulated as “Do boys and girls of the same age have different heights?”, the first step is to pose a hypothesis. By convention it is stated as a null hypothesis (H0): boys and girls of the same age have the same height (there is no difference). This is analogous to thinking of two distributions, each with a fixed mean μ and standard deviation σ, that generate the random variable height, where it is not known whether the means for boys (μ1) and girls (μ2) are the same. In addition, there is an alternative hypothesis (HA), which is often the negation of the null hypothesis. The null hypothesis in this case is

$$H_0: \mu_1 = \mu_2$$

The researcher computes two sample means and might obtain some difference between them; but how can the researcher be sure that this difference is real and not merely different from zero by chance, given that other (or more) children could have been included in the study?

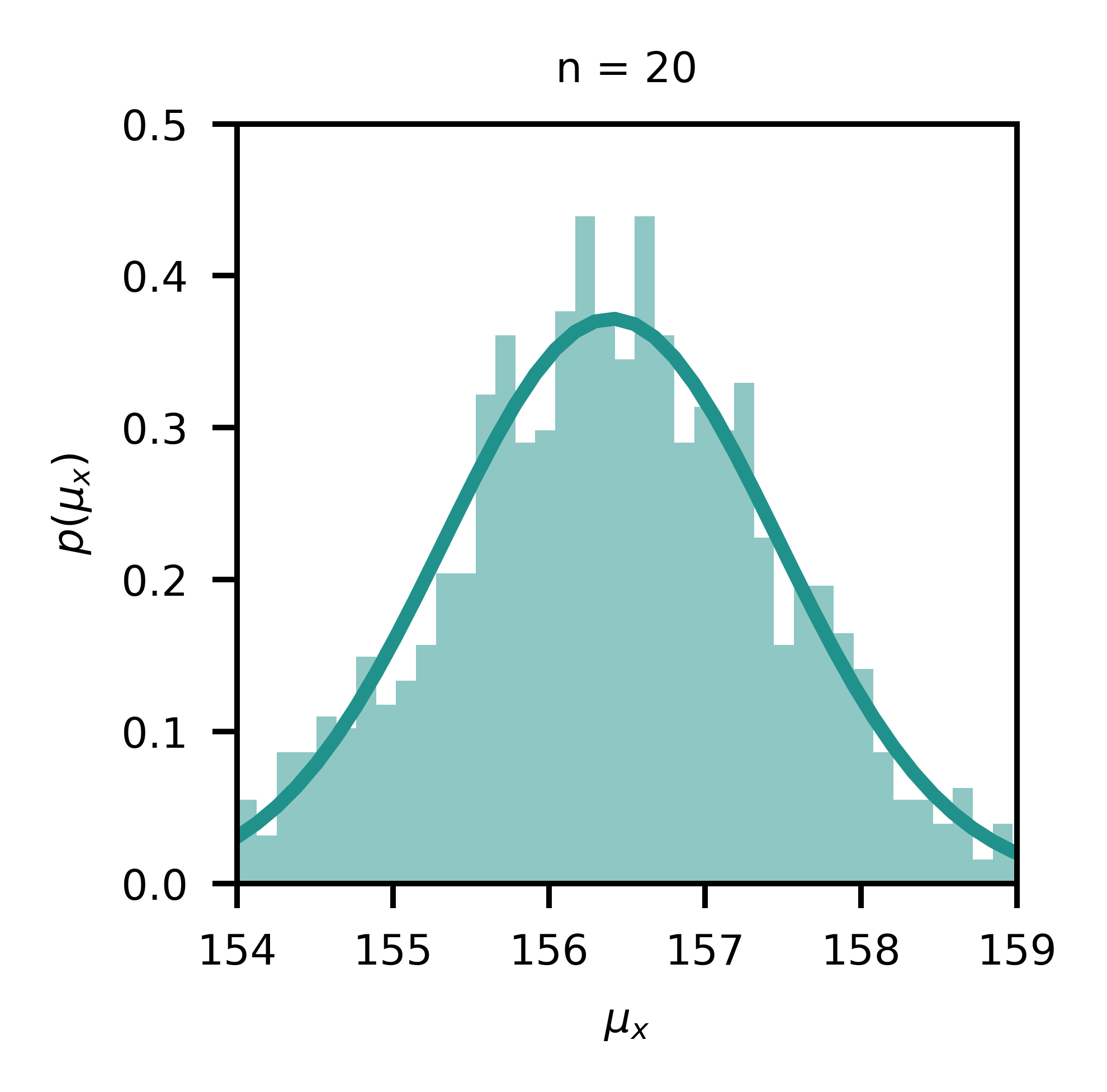

In Figure 1, a simulation with a random number generator is presented. Samples are drawn from a Gaussian distribution for a random variable x (e.g. height) with μ=156.4 and σ=4.8; in each subplot, n samples are drawn and the sample mean is computed; this process is repeated 1000 times for each sample size, and the corresponding histogram of sample means is visualized. This is essentially the distribution of sample means for different sample sizes, and it becomes evident that this distribution becomes narrower with increasing sample size as the standard deviation of the mean, also known as standard error (s.e.), scales with the inverse square root of the sample size.

$$\mathrm{s.e.} = \frac{\sigma}{\sqrt{n}}$$

Confidence Intervals

The law of large numbers states that the average obtained from a large number of sampled random variables should be close to the expected value and tends to become closer to it as more samples are drawn. For example, for n=20, some sample means are 154 and others are 160, just by chance. Imagine computing two sample means, one for boys and one for girls; they could differ just by chance, and this is more likely with small sample sizes. The “true mean” can be located more precisely by the sample mean if enough samples are collected; but what if this is not the case? In many studies, the number of participants is limited.
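
The effect of the sample size on the spread of sample means can be reproduced with a few lines of Python. The following is a minimal sketch, not the code behind Figure 1: it reuses μ=156.4, σ=4.8 and the 1000 repetitions from the text, while the sample sizes, the seed and the function names are assumptions chosen for illustration.

```python
import math
import random
import statistics

MU, SIGMA = 156.4, 4.8   # population mean and standard deviation quoted in the text
REPEATS = 1000           # number of simulated samples per sample size


def simulate_sample_means(n, repeats=REPEATS, rng=None):
    """Draw `repeats` samples of size n and return the list of their sample means."""
    rng = rng or random.Random(0)  # fixed seed (assumption) so the sketch is reproducible
    return [
        statistics.fmean([rng.gauss(MU, SIGMA) for _ in range(n)])
        for _ in range(repeats)
    ]


def spread_by_sample_size(sizes=(5, 20, 100, 500)):
    """Map each sample size to (empirical spread of the means, sigma / sqrt(n))."""
    result = {}
    for n in sizes:  # the sizes are illustrative, not those used in Figure 1
        means = simulate_sample_means(n)
        result[n] = (statistics.stdev(means), SIGMA / math.sqrt(n))
    return result


if __name__ == "__main__":
    for n, (empirical, theoretical) in spread_by_sample_size().items():
        print(f"n={n:4d}  empirical s.e.={empirical:.3f}  sigma/sqrt(n)={theoretical:.3f}")
```

The empirical standard deviation of the simulated means should land close to σ/√n for every sample size, which is the narrowing visible in the histograms.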

This is the origin of the so-called confidence interval. A confidence interval for an estimated statistic is a random interval calculated from the sample that contains the true value with some specified probability. For example, a 95% confidence interval for the mean is a random interval that contains the true mean with a probability of 0.95; if we were to take many random samples and compute a confidence interval for each one, about 95% of these intervals would contain the true mean. (The two concepts of randomness and frequency are ubiquitous in the frequentist’s paradigm.) This way, the distributions in Figure 1 can be approximated with confidence intervals. To compute a confidence interval, the quantile z of the t-distribution corresponding to the chosen probability 1-α (e.g. α = 0.05 for 95%) is multiplied by the standard error, and the result is subtracted from and added to the sample mean.

$$\text{confidence interval} = [\bar{x} - z_{\alpha/2}\cdot\mathrm{s.e.},\ \bar{x} + z_{\alpha/2}\cdot\mathrm{s.e.}]$$

Here $\bar{x}$ is the sample mean, and the standard error is estimated from the sample. The t-distribution is a continuous probability distribution that arises when estimating the mean of a normally distributed population in situations where the sample size is small and the population standard deviation is unknown, which is quite common. (The t-distribution is discussed in more detail below.)
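
The frequency interpretation of a confidence interval can be checked by simulation. The sketch below is an illustration under stated assumptions: the sample size of 20, the seed and the function names are made up for this example, and the critical value 2.093 is the tabulated 0.975 quantile of the t-distribution with 19 degrees of freedom, so it is only valid for n=20.

```python
import math
import random
import statistics

MU, SIGMA = 156.4, 4.8  # "true" values, known here only because the data are simulated
N = 20                  # sample size (assumed for illustration)
T_CRIT = 2.093          # table value: 0.975 quantile of the t-distribution, 19 d.o.f.


def confidence_interval(sample, t_crit=T_CRIT):
    """Return (lower, upper): sample mean minus/plus t_crit times the estimated s.e."""
    mean = statistics.fmean(sample)
    se = statistics.stdev(sample) / math.sqrt(len(sample))
    return mean - t_crit * se, mean + t_crit * se


def coverage(repeats=2000, seed=1):
    """Fraction of simulated 95% intervals that contain the true mean MU."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(repeats):
        sample = [rng.gauss(MU, SIGMA) for _ in range(N)]
        lower, upper = confidence_interval(sample)
        hits += lower <= MU <= upper  # True counts as 1
    return hits / repeats


if __name__ == "__main__":
    print(f"share of intervals containing the true mean: {coverage():.3f}")
```

The reported share should be close to 0.95, even though any single interval either contains the true mean or does not.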

Statistical Tests

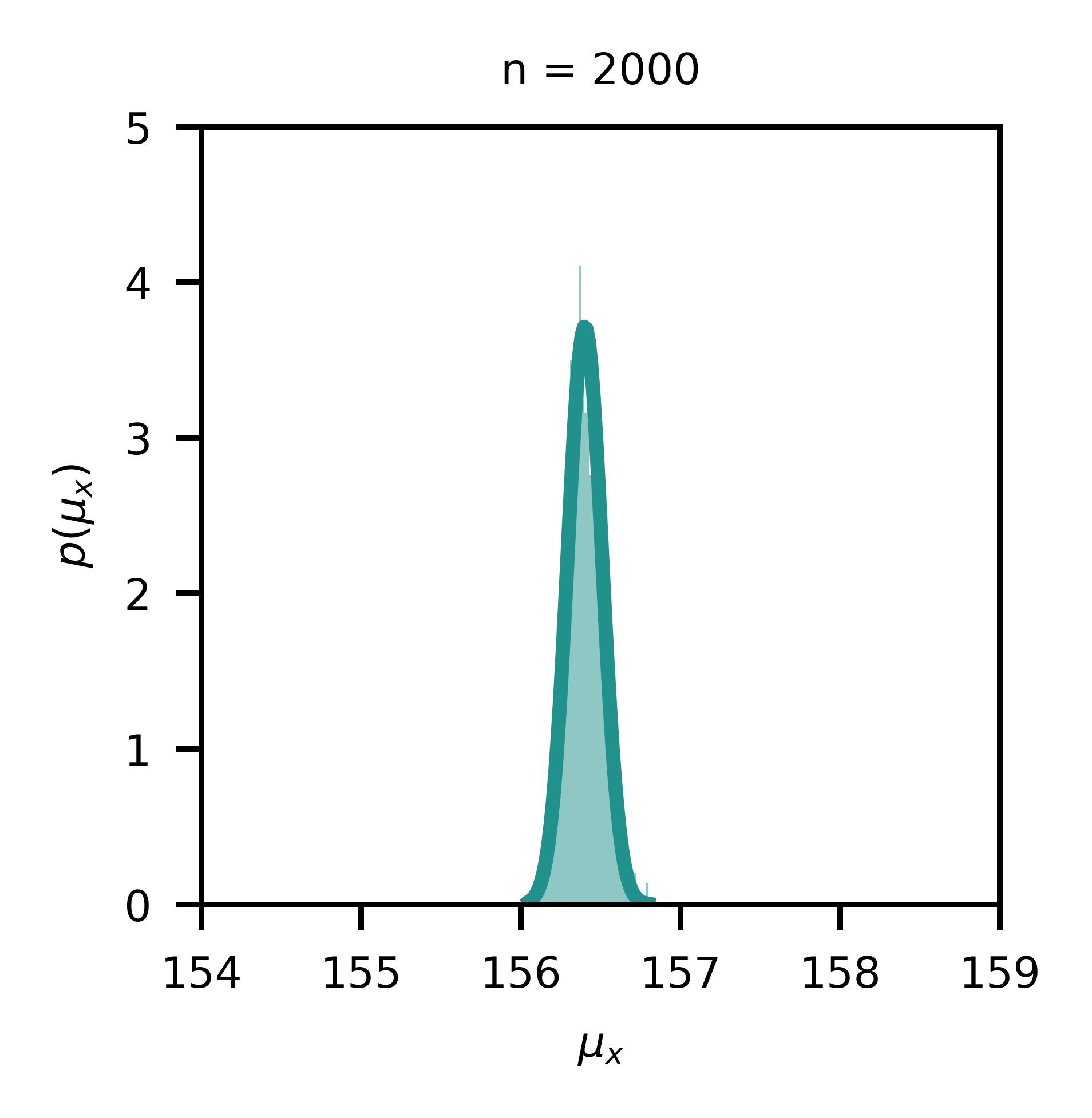

Knowing the distribution of the random variable is relevant for selecting the appropriate statistical test. More precisely, a parametric test like the t-test assumes a normal distribution of the random variable, which might not be the case. If it is not, it might be reasonable to use a non-parametric test such as the Mann-Whitney U test. However, the Mann-Whitney U test uses a different null hypothesis, in which the probability distributions of both groups are identical:

$$H_0: p(\mathrm{height}_1) = p(\mathrm{height}_2)$$

There is a whole zoo of statistical tests (Figure 2); which test to use depends on the type of data (quantitative vs. categorical), whether the data are normally distributed (parametric vs. non-parametric), and whether the samples are paired (dependent vs. independent). However, it is important to be aware that the null hypothesis is not always the same, so the conclusions differ slightly from test to test.
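
As one concrete member of this zoo, the Mann-Whitney U statistic is simple enough to compute by hand. The sketch below counts, over all pairs, how often a value from one group exceeds a value from the other. The function names are hypothetical, and the p-value uses the large-sample normal approximation without tie or continuity correction, which is an assumption of this example and is not accurate for very small samples.

```python
import math
from statistics import NormalDist


def mann_whitney_u(a, b):
    """Return (U_a, U_b): pair counts where a beats b and vice versa (ties count 0.5)."""
    u_a = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    return u_a, len(a) * len(b) - u_a


def approx_p_value(a, b):
    """Two-sided p-value from the normal approximation of U under H0."""
    n, m = len(a), len(b)
    u = min(mann_whitney_u(a, b))               # the smaller of the two U values
    mean_u = n * m / 2                          # expected U if both distributions are equal
    sd_u = math.sqrt(n * m * (n + m + 1) / 12)  # no tie correction (assumption)
    z = (u - mean_u) / sd_u                     # z <= 0 because u is the smaller U
    return min(1.0, 2 * NormalDist().cdf(z))
```

For completely separated groups such as `[1, 2, 3]` and `[4, 5, 6]`, one of the two U values is 0 and the other equals the number of pairs, n·m.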

Once the assumptions are verified, a test is chosen, and the test statistic is computed from the two samples with sizes n and m. In this example, it is the t-statistic T, which follows the t-distribution with n+m-2 degrees of freedom. S is the pooled (aggregated) sample variance. It is also worth mentioning that, for a fixed mean difference and variance, the magnitude of T grows with the sample sizes.

$$T = \frac{\overline{\mathrm{height}}_1 - \overline{\mathrm{height}}_2}{\sqrt{S}\,\sqrt{\frac{1}{n} + \frac{1}{m}}}$$

$$S = \frac{(n-1)\,\mathrm{var}(\mathrm{height}_1) + (m-1)\,\mathrm{var}(\mathrm{height}_2)}{n+m-2}$$

Note the similarity of the t-statistic to the z-score, which is associated with the Gaussian distribution. The higher the absolute value of the z-score, the lower the probability of observing a value at least that extreme, and the same holds for the t-distribution. Hence, the higher the absolute value of the t-statistic, the less compatible the data are with the null hypothesis.
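
The t-statistic can be sketched in a few lines of Python. This example uses the standard pooled-variance form, in which S combines the two sample variances and the denominator is √S·√(1/n + 1/m). The function names and the toy data in the usage note are assumptions made for illustration.

```python
import math
import statistics


def pooled_variance(a, b):
    """Pooled sample variance S of two samples with sizes n and m."""
    n, m = len(a), len(b)
    return ((n - 1) * statistics.variance(a) + (m - 1) * statistics.variance(b)) / (n + m - 2)


def t_statistic(a, b):
    """Return (T, degrees of freedom) for the two-sample t-test with pooled variance."""
    n, m = len(a), len(b)
    s = pooled_variance(a, b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / (math.sqrt(s) * math.sqrt(1 / n + 1 / m))
    return t, n + m - 2
```

With the toy samples `[1, 2, 3, 4, 5]` and `[2, 3, 4, 5, 6]`, both sample variances are 2.5, so S = 2.5 and T = -1 with 8 degrees of freedom.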

To clarify, the t-statistic follows a t-distribution because the standard deviation (and hence the standard error) is unknown and has to be estimated from a small amount of data. If it were known, one would use a normal distribution and the z-score. For larger sample sizes, the distribution of the t-statistic becomes more and more normal, because the estimate of the standard deviation becomes more precise. (Note that the estimated variance is also a random variable: for normally distributed data, (n-1)s²/σ², where s² is the sample variance, follows a chi-square distribution with n-1 degrees of freedom.)

p-Value

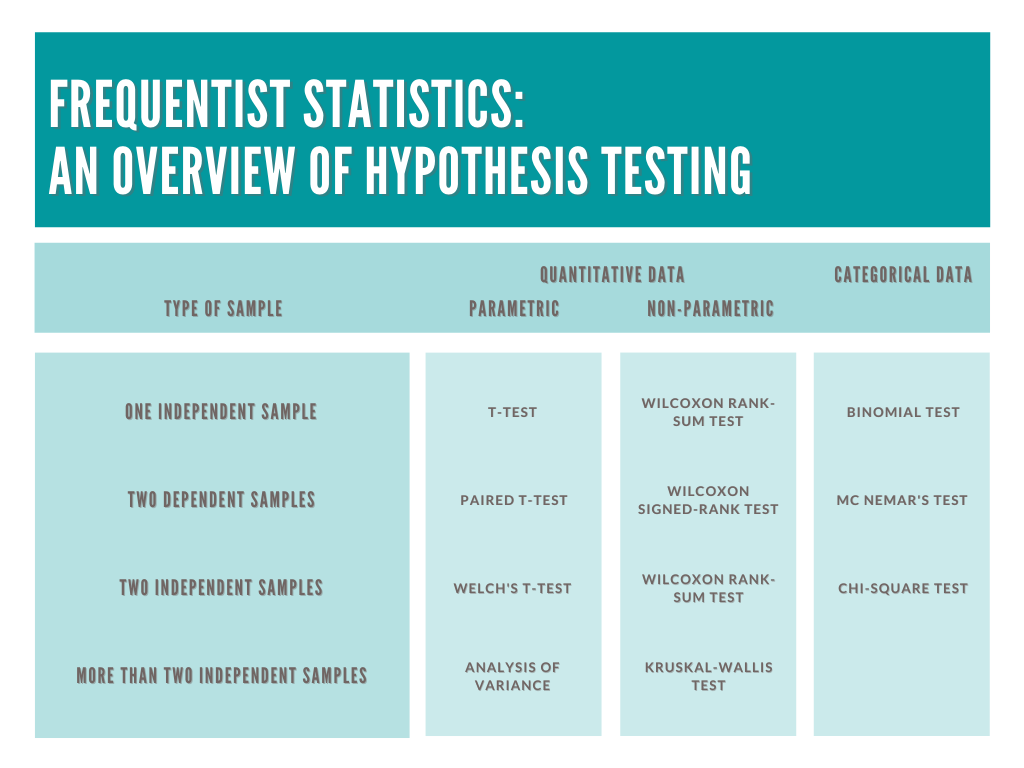

As mentioned above, statistical hypothesis testing deals with group comparison, and the goal is to assess whether differences across groups are significant or not, given the estimated sample statistics. For this purpose, the sufficient statistics, their corresponding confidence intervals, and the p-value are computed. The p-value is the probability associated with the t-statistic T under the t-distribution, similar to the probability associated with a z-score under the Gaussian distribution (Figure 3). In most cases, a two-sided test is applied, in which the absolute value of T is assessed. In mathematical terms, the p-value is

$$p = 2\min\{\Pr(\theta \le T \mid H_0),\ \Pr(\theta \ge T \mid H_0)\}$$

As such, the p-value is the probability of obtaining test results θ at least as “extreme” as the actually observed result T, under the assumption that the null hypothesis is true. A very small p-value means that such an “extreme” observed outcome is very unlikely under the null hypothesis (the observed data are “sufficiently” inconsistent with the null hypothesis).
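
The two-sided p-value can be computed without a statistics library by integrating the density of the t-distribution numerically. The sketch below is one possible way to do this, assuming Simpson's rule with a fixed number of steps is accurate enough; the function names are hypothetical, and in practice a library routine for the t-distribution would normally be preferred.

```python
import math


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(t, df, steps=2000):
    """Pr(theta <= t) via Simpson's rule on [0, |t|] plus symmetry around zero."""
    upper = abs(t)
    if upper == 0.0:
        return 0.5
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # probability mass between 0 and |t|
    return 0.5 + area if t > 0 else 0.5 - area


def two_sided_p_value(t, df):
    """p = 2 * min{Pr(theta <= T | H0), Pr(theta >= T | H0)}."""
    lower_tail = t_cdf(t, df)
    return 2 * min(lower_tail, 1 - lower_tail)
```

As a sanity check, the tabulated critical value 2.306 for 8 degrees of freedom should give a p-value of about 0.05, and T = 0 gives p = 1.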

False Positive and False Negative

If the p-value is lower than some threshold α, the difference is said to be statistically significant. Rejecting the null hypothesis when it is actually true is called a type I error (false positive), and the probability of a type I error is called the significance level α (the threshold above). Failing to reject the null hypothesis when it is false is called a type II error (false negative), and its probability is denoted by β. The probability that the null hypothesis is rejected when it is false is called the power of the test and equals 1-β. By being stricter with the significance level α, the risk of false positives can be reduced. However, controlling false negatives is more difficult because the alternative hypothesis includes all other possibilities.
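
Both error rates can be estimated by simulating many studies. The sketch below is an illustration under stated assumptions: ten children per group, a true difference of 5 for the power estimate, the seed and the function names are made up, and 2.101 is the tabulated 0.975 quantile of the t-distribution with 18 degrees of freedom (α = 0.05, two-sided).

```python
import math
import random
import statistics

MU, SIGMA = 156.4, 4.8  # population values from the simulation in the text
N = 10                  # children per group (assumed for illustration)
T_CRIT = 2.101          # table value: 0.975 quantile of the t-distribution, 18 d.o.f.


def rejects_h0(a, b, t_crit=T_CRIT):
    """True if the pooled two-sample t-test rejects H0 at the 5% level."""
    n, m = len(a), len(b)
    s = ((n - 1) * statistics.variance(a) + (m - 1) * statistics.variance(b)) / (n + m - 2)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(s * (1 / n + 1 / m))
    return abs(t) > t_crit


def rejection_rate(true_difference, repeats=2000, seed=2):
    """Share of simulated studies in which H0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(repeats):
        boys = [rng.gauss(MU + true_difference, SIGMA) for _ in range(N)]
        girls = [rng.gauss(MU, SIGMA) for _ in range(N)]
        rejections += rejects_h0(boys, girls)  # True counts as 1
    return rejections / repeats


if __name__ == "__main__":
    print(f"type I error rate (no true difference): {rejection_rate(0.0):.3f}")
    print(f"power (true difference of 5):           {rejection_rate(5.0):.3f}")
```

With no true difference, the rejection rate estimates the type I error rate and should be close to α = 0.05. With a true difference, the same function estimates the power 1-β, which depends on the assumed effect and sample size.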

In practice, the choice of α is essentially arbitrary; small values such as 0.05 or even 0.01 are commonly used in science. One criticism of this approach is that the null hypothesis has to be either rejected or not, although such a binary decision is not necessary; for instance, in a Bayesian approach, both hypotheses can coexist, each with an associated posterior probability (modeling how plausible each hypothesis is).

Final Remarks

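It should be stated that there is a duality between confidence intervals and hypothesis tests. Without going into too much detail, it is worth mentioning that if the two confidence intervals do not overlap for a given level α, the null hypothesis is rejected. (The converse does not hold in general: overlapping intervals do not guarantee that the difference is non-significant.)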

However, a difference that is statistically significant is not necessarily relevant. A small p-value can be observed for an effect that is not meaningful or important. In fact, the larger the sample sizes, the smaller the minimum effect needed to produce a statistically significant p-value.
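
How quickly the minimum significant effect shrinks can be illustrated with a short calculation. The sketch below makes simplifying assumptions that are not in the original text: two groups of equal size n, a known common standard deviation σ=4.8, and the normal approximation instead of the t-distribution. The function name is hypothetical.

```python
import math
from statistics import NormalDist

SIGMA = 4.8  # common standard deviation, assumed known for this approximation


def smallest_significant_difference(n, alpha=0.05, sigma=SIGMA):
    """Smallest observed mean difference that reaches significance for two groups of size n."""
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return z * sigma * math.sqrt(2 / n)      # z times the s.e. of the difference of means


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        print(f"n={n:6d}  smallest significant difference: "
              f"{smallest_significant_difference(n):.2f}")
```

Under these assumptions the threshold falls with 1/√n, so with thousands of children per group even a height difference of a fraction of a centimeter would be flagged as statistically significant.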

Lastly, the conclusions are worthless if they are based on wrong (e.g. biased) data. It is important to guarantee that the sampled data are of high quality and without biases, which is not a trivial task at all.

Translated from: https://towardsdatascience.com/statistical-hypothesis-testing-b9e641da8cb0
