Getting Twitter data

Let’s use the Tweepy package in Python rather than handling the Twitter API directly. We will do two things with the package: authorize ourselves to use the API, and then use a cursor to page through the Twitter search API.

Let’s go ahead and get our imports loaded.

    import tweepy
    import pandas as pd
    import matplotlib.pyplot as plt
    import seaborn as sns
    import numpy as np

    sns.set()
    %matplotlib inline

Twitter authorization

To use the Twitter API, you must first register to get an API key. To get Tweepy, install it with pip install tweepy. The Tweepy documentation explains authentication best, but I’ll go over the basic steps.

Once you register your app, you will receive API keys. Next, use Tweepy to get an OAuthHandler. I have the keys stored in a separate config dict.
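If you would rather not paste the keys into the notebook at all, one option is to read them from environment variables. Here is a minimal sketch of that idea. The environment variable names and the `load_twitter_config` helper are my own assumptions for illustration, not something Tweepy provides; the dict keys match the config dict used in this post.

```python
import os

# Hypothetical environment variable names mapped to the config keys used in this post.
ENV_TO_CONFIG_KEY = {
    "TWITTER_CONSUMER_KEY": "twitterConsumerKey",
    "TWITTER_CONSUMER_SECRET": "twitterConsumerSecretKey",
}


def load_twitter_config(environ=None):
    """Build the config dict from environment variables, failing early if one is unset."""
    environ = os.environ if environ is None else environ
    missing = [name for name in ENV_TO_CONFIG_KEY if not environ.get(name)]
    if missing:
        raise KeyError("Missing environment variables: " + ", ".join(missing))
    return {config_key: environ[name] for name, config_key in ENV_TO_CONFIG_KEY.items()}


# Passing a plain dict instead of os.environ makes the helper easy to try out.
example = load_twitter_config(
    {"TWITTER_CONSUMER_KEY": "XXXX", "TWITTER_CONSUMER_SECRET": "XXXX"}
)
print(sorted(example))
```

Calling `load_twitter_config()` with no argument reads from the real environment, so the secrets never have to appear in the code.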

    config = {"twitterConsumerKey": "XXXX", "twitterConsumerSecretKey": "XXXX"}

    # Create the OAuth handler from the consumer key and secret
    auth = tweepy.OAuthHandler(config["twitterConsumerKey"], config["twitterConsumerSecretKey"])

    # Ask Twitter for the URL where you authorize the app
    redirect_url = auth.get_authorization_url()
    redirect_url

Now that we’ve given Tweepy our keys to generate an OAuthHandler, we use the handler to get a redirect URL. Open the URL from the output in a browser, where you can authorize the app on your account and so get access to the API.

Once you’ve authorized your account with the app, you’ll be given a PIN. Use that number in Tweepy to let it know that you’ve authorized it with the API.

    pin = "XXXX"
    auth.get_access_token(pin)

Searching for tweets

After getting the authorization, we can use it to search for all the tweets containing the term “British Airways”; we have restricted the maximum results to 1000.
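Search results usually include retweets, which repeat the same text and can skew any later analysis, so it helps to drop them while collecting the results. Here is a minimal sketch of that filtering step on its own, without any network call. The helper names `is_retweet` and `collect_original_tweets` are hypothetical, the sample statuses are made-up stand-ins, and the sketch assumes that a retweet either carries a `retweeted_status` attribute (as in Twitter API v1.1 payloads) or starts with "RT @".

```python
from types import SimpleNamespace


def is_retweet(status):
    """Treat a status as a retweet if it embeds the original tweet or uses the 'RT @' prefix."""
    return hasattr(status, "retweeted_status") or status.full_text.startswith("RT @")


def collect_original_tweets(statuses):
    """Return a column-oriented dict (ready for pandas.DataFrame) that excludes retweets."""
    columns = {"text": [], "author": [], "created_date": []}
    for status in statuses:
        if is_retweet(status):
            continue
        columns["text"].append(status.full_text)
        columns["author"].append(status.author.name)
        columns["created_date"].append(status.created_at)
    return columns


# Stand-in objects that mimic only the attributes used above (made-up sample data).
sample = [
    SimpleNamespace(full_text="Great flight today",
                    author=SimpleNamespace(name="A"),
                    created_at="2019-03-06 10:00:00"),
    SimpleNamespace(full_text="RT @someone: Great flight today",
                    author=SimpleNamespace(name="B"),
                    created_at="2019-03-06 10:01:00"),
    SimpleNamespace(full_text="Copied text",
                    author=SimpleNamespace(name="C"),
                    created_at="2019-03-06 10:02:00",
                    retweeted_status=object()),
]
result = collect_original_tweets(sample)
print(result["author"])  # → ['A']
```

Keeping the filter in its own function makes it easy to check against a handful of hand-written statuses before running it on live search results.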

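    query = 'British Airways'
    max_tweets = 1000

    # Build the API client from the authorized handler
    api = tweepy.API(auth)

    # Note: api.search was renamed api.search_tweets in Tweepy 4.0
    searched_tweets = [status for status in tweepy.Cursor(api.search, q=query, tweet_mode='extended').items(max_tweets)]

    search_dict = {"text": [], "author": [], "created_date": []}
    for item in searched_tweets:
        # Skip retweets: they carry a retweeted_status attribute or start with "RT @"
        if not hasattr(item, "retweeted_status") and not item.full_text.startswith("RT @"):
            search_dict["text"].append(item.full_text)
            search_dict["author"].append(item.author.name)
            search_dict["created_date"].append(item.created_at)

    df = pd.DataFrame.from_dict(search_dict)
    df.head()

    #Out: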

       text                                               author           created_date
    0  @RwandAnFlyer @KenyanAviation @KenyaAirways @U...  Bkoskey          2019-03-06 10:06:14
    1  @PaulCol56316861 Hi Paul, I'm sorry we can't c...  British Airways  2019-03-06 10:06:09
    2  @AmericanAir @British_Airways do you agree wit...  Hat              2019-03-06 10:05:38
    3  @Hi_Im_AlexJ Hi Alex, I'm glad you've managed ...  British Airways  2019-03-06 10:02:58
    4  @ZRHworker @British_Airways @Schmidy_87 @zrh_a...  Stefan Paetow    2019-03-06 10:02:33

Language detection

The tweets downloaded by the code above can be in any language, and before we use this data for further text mining, we should classify it by performing language detection.

In general, language detection is performed by a pre-trained text classifier based on either the Naive Bayes algorithm or more modern neural networks. Google’s Compact Language Detector library is an excellent choice for production-level workloads where you have to analyze hundreds of thousands of documents within a few minutes. However, it is a bit tricky to set up, so many people instead call a language detection API from a third-party provider such as Algorithmia, which is free for hundreds of calls a month (sign-up is free and requires no credit card).
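To make the Naive Bayes idea concrete, here is a toy sketch of a multinomial Naive Bayes classifier over character trigrams with add-one smoothing. It is only an illustration of the general technique, not how any of the libraries named above is implemented; the class name, the helper function, and the tiny training sentences are all made up for this example.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Split text into overlapping lowercase character n-grams, padded with spaces."""
    padded = " " + text.lower() + " "
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


class NaiveBayesLanguageDetector:
    """Multinomial Naive Bayes over character trigrams with add-one smoothing."""

    def __init__(self):
        self.ngram_counts = {}       # language -> Counter of trigrams
        self.doc_counts = Counter()  # language -> number of training documents
        self.vocabulary = set()      # every trigram seen in training

    def train(self, labeled_texts):
        for language, text in labeled_texts:
            grams = char_ngrams(text)
            self.ngram_counts.setdefault(language, Counter()).update(grams)
            self.doc_counts[language] += 1
            self.vocabulary.update(grams)

    def classify(self, text):
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocabulary)
        best_language, best_score = None, -math.inf
        for language, counts in self.ngram_counts.items():
            total = sum(counts.values())
            # Log prior plus the sum of smoothed log likelihoods of each trigram
            score = math.log(self.doc_counts[language] / total_docs)
            for gram in char_ngrams(text):
                score += math.log((counts[gram] + 1) / (total + vocab_size))
            if score > best_score:
                best_language, best_score = language, score
        return best_language


# Made-up training sentences; a real model is trained on far more text.
detector = NaiveBayesLanguageDetector()
detector.train([
    ("en", "the flight was delayed and the crew were very helpful"),
    ("en", "thank you for the quick reply about my luggage"),
    ("es", "el vuelo sale tarde y el personal fue muy amable"),
    ("es", "gracias por la respuesta sobre mi equipaje"),
    ("nl", "de vlucht was vertraagd en de bemanning was erg behulpzaam"),
    ("nl", "bedankt voor het snelle antwoord over mijn bagage"),
])
print(detector.classify("where is my luggage and why was the flight delayed"))
print(detector.classify("gracias por el vuelo"))
```

Production detectors follow the same scoring idea but ship with models trained on large multilingual corpora, which is why they handle short, noisy tweets far better than this toy would.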

Let’s keep things simple in this example and use a Python library called langid, which is orders of magnitude slower than the options discussed above but should be fine here, since we are only analyzing about a hundred tweets.

    from langid.langid import LanguageIdentifier, model

    # Load the model once; norm_probs=True rescales the confidence to the range 0-1
    identifier = LanguageIdentifier.from_modelstring(model, norm_probs=True)

    def get_lang(document):
        # classify() returns a (language_code, probability) tuple
        prob_tuple = identifier.classify(document)
        return prob_tuple[0]

    df["language"] = df["text"].apply(get_lang)

We find tweets in four distinct languages in the output, and only 45 of the 100 tweets are in English. We keep only those, as shown below.

    print(df["language"].unique())

    # .copy() lets us add columns later without a SettingWithCopyWarning
    df_filtered = df[df["language"] == "en"].copy()
    print(df_filtered.shape)

    #Out:
    array(['en', 'rw', 'nl', 'es'], dtype=object)
    (45, 4)

Getting sentiment scores for tweets

We can take the df_filtered DataFrame created in the preceding section and run it through a pre-trained sentiment analysis library. For illustration purposes we use the one in TextBlob; however, I would highly recommend a more accurate sentiment model such as those in CoreNLP, or training your own model using scikit-learn or Keras.

Alternatively, if you prefer the API route, there is a pretty good sentiment API at Algorithmia.

    from textblob import TextBlob

    def get_sentiments(text):
        # Map TextBlob's polarity (from -1 to 1) to a coarse label
        blob = TextBlob(text)
        if blob.sentiment.polarity > 0.1:
            return 'positive'
        elif blob.sentiment.polarity < -0.1:
            return 'negative'
        else:
            return 'neutral'

    def get_sentiments_score(text):
        # Return the raw polarity score
        blob = TextBlob(text)
        return blob.sentiment.polarity

    df_filtered["sentiments"] = df_filtered["text"].apply(get_sentiments)
    df_filtered["sentiments_score"] = df_filtered["text"].apply(get_sentiments_score)
    df_filtered.head()

#Out:

text author created_date language sentiments sentiments_score

0 @British_Airways Having some trouble with our ... Rosie Smith 2019-03-06 10:24:57 en neutral 0.025

1 @djban001 This doesn't sound good, Daniel. Hav... British Airways 2019-03-06 10:24:45 en positive 0.550

2 First #British Airways Flight to #Pakistan Wil... Developing Pakistan 2019-03-06 10:24:43 en positive 0.150

3 I don’t know why he’s not happy. I thought he ... Joyce Stevenson 2019-03-06 10:24:18 en negative -0.200

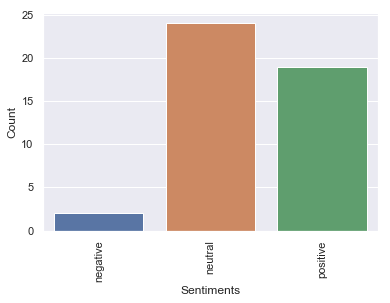

4 Fancy winning a global holiday for you and a f... Selective Travel Mgt 🌍 2019-03-06 10:23:40 en positive 0.360Let us plot the sentiments score to see how many negative, neutral, and positive tweets people are sending for “British airways”. You can also save it as a CSV file for further processing at a later time.
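As a rough illustration of the counting and saving steps, here is a dependency-free sketch that applies the same ±0.1 cut-offs used in get_sentiments, tallies the labels, and writes the tallies as CSV. The helper names are hypothetical, and the scores are just the five values from the output table above, not the full dataset.

```python
import csv
import io
from collections import Counter


def label_polarity(score, threshold=0.1):
    """Map a polarity score to a coarse label, using the same cut-offs as get_sentiments."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


def summarize(scores):
    """Count how many scores fall under each label."""
    return Counter(label_polarity(score) for score in scores)


def write_counts_csv(counts, handle):
    """Write the label counts as CSV rows to any writable text handle."""
    writer = csv.writer(handle)
    writer.writerow(["sentiment", "tweets"])
    for label in ("negative", "neutral", "positive"):
        writer.writerow([label, counts.get(label, 0)])


# The five scores shown in the output table above.
scores = [0.025, 0.550, 0.150, -0.200, 0.360]
counts = summarize(scores)

# An in-memory buffer stands in for a file opened with open(path, "w", newline="").
buffer = io.StringIO()
write_counts_csv(counts, buffer)
print(buffer.getvalue())
```

With the DataFrame from this post, `df_filtered["sentiments"].value_counts()` gives the same tallies, `sns.countplot(x="sentiments", data=df_filtered)` draws them as bars, and `df_filtered.to_csv("tweets.csv", index=False)` saves the full table (the file name is just an example).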

Originally published at http://jaympatel.com on February 1, 2019.

Translated from: https://medium.com/towards-artificial-intelligence/using-twitter-rest-apis-in-python-to-search-and-download-tweets-in-bulk-da234b5f155a
