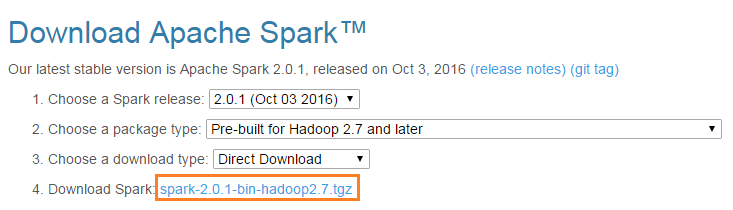

1. 官网下载

wget http://d3kbcqa49mib13.cloudfront.net/spark-2.0.1-bin-hadoop2.7.tgz

2. 解压

tar -zxvf spark-2.0.1-bin-hadoop2.7.tgz

ln -s spark-2.0.1-bin-hadoop2.7 spark2

3. 环境变量

vi /etc/profile

#Spark 2.0.1

export SPARK_HOME=/usr/local/spark2

export PATH=$PATH:$SPARK_HOME/bin

4. 配置文件(/usr/local/spark2/conf)

1) spark-env.sh

cp -a spark-env.sh.template spark-env.sh

vi spark-env.sh

export JAVA_HOME=/usr/java/jdk1.8

export SPARK_MASTER_HOST=cdh01

2) slaves

cp -a slaves.template slaves

vi slaves

cdh02

cdh03

5. 复制到其他节点

scp -r spark-2.0.1-bin-hadoop2.7 root@cdh02:/usr/local

scp -r spark-2.0.1-bin-hadoop2.7 root@cdh03:/usr/local

6. 启动

$SPARK_HOME/sbin/start-all.sh

7. 运行

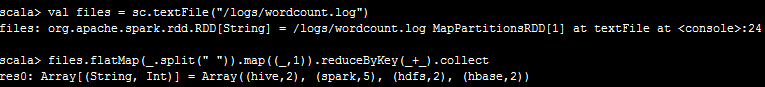

1) 准备一个文本文件放在/logs/wordcount.log内容为:

hdfs hbase hive hdfs

hive hbase spark spark

spark spark spark

2) 运行spark-shell

3) 运行wordcount

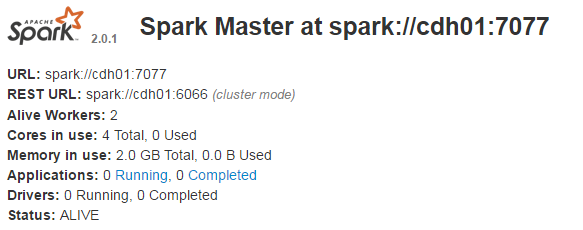

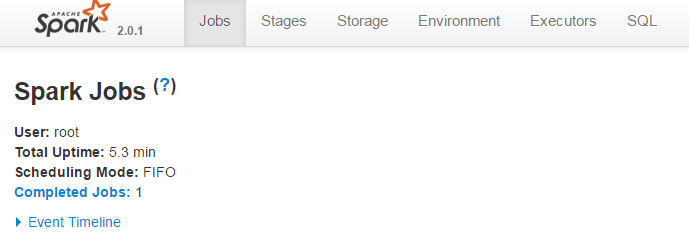

8. Web界面

http://cdh01:8080/jobs/

http://cdh01:4040/jobs/

应用在模板制作)

——构造函数和析构函数)

——友元)

—— 运算符重载)

—— 菱形继承)

—— 多态)

![[转]c++类的构造函数详解](http://pic.xiahunao.cn/[转]c++类的构造函数详解)