Is building a website expensive / Foshan website construction and maintenance

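Is building a website expensive, Foshan website construction and maintenance, can a mirror site rank on Google, Wuhan SEO promotion and optimization

Table of contents: 💕 Demo, 💕 Code (HTML)

💕 Demo

💕 Code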

HTML

<!DOCTYPE html>

<html lang=

Related articles

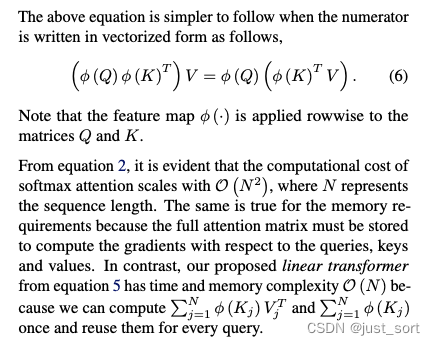

[BBuf's CUDA Notes] Part 10: Walking Through a CUDA Kernel Implementation of Linear Attention

Feel free to visit https://github.com/BBuf/how-to-optim-algorithm-in-cuda. 0x0. Problem introduction

The Linear Attention paper is "Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention": https://arxiv.org/pdf/2006.16236.pdf. The official…
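For orientation, here is a minimal pure-Python sketch of the causal linear attention recurrence from the cited paper, using the feature map phi(x) = elu(x) + 1. It is not the CUDA kernel the article analyzes; the function names (`feature_map`, `causal_linear_attention`, `causal_quadratic_reference`) are made up for illustration, and the quadratic version is included only as a cross-check.

```python
import math


def feature_map(x):
    # phi(x) = elu(x) + 1: x + 1 for positive entries, exp(x) otherwise.
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def causal_linear_attention(queries, keys, values):
    """Causal attention in O(N) steps via running sums (illustrative sketch)."""
    d_k, d_v = len(keys[0]), len(values[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k_j) v_j^T
    z = [0.0] * d_k                        # running sum of phi(k_j)
    outputs = []
    for q, k, v in zip(queries, keys, values):
        fq, fk = feature_map(q), feature_map(k)
        for a in range(d_k):
            z[a] += fk[a]
            for b in range(d_v):
                S[a][b] += fk[a] * v[b]
        denom = sum(fq[a] * z[a] for a in range(d_k))
        outputs.append(
            [sum(fq[a] * S[a][b] for a in range(d_k)) / denom for b in range(d_v)]
        )
    return outputs


def causal_quadratic_reference(queries, keys, values):
    """Same result computed the O(N^2) way, used only to check the sketch."""
    d_v = len(values[0])
    outputs = []
    for i, q in enumerate(queries):
        fq = feature_map(q)
        weights = [
            sum(a * b for a, b in zip(fq, feature_map(keys[j])))
            for j in range(i + 1)
        ]
        total = sum(weights)
        outputs.append(
            [sum(w * values[j][b] for j, w in enumerate(weights)) / total
             for b in range(d_v)]
        )
    return outputs
```

The running state `S` and `z` is what lets each position be computed from the previous one, which is the "Transformers are RNNs" observation in the paper's title.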

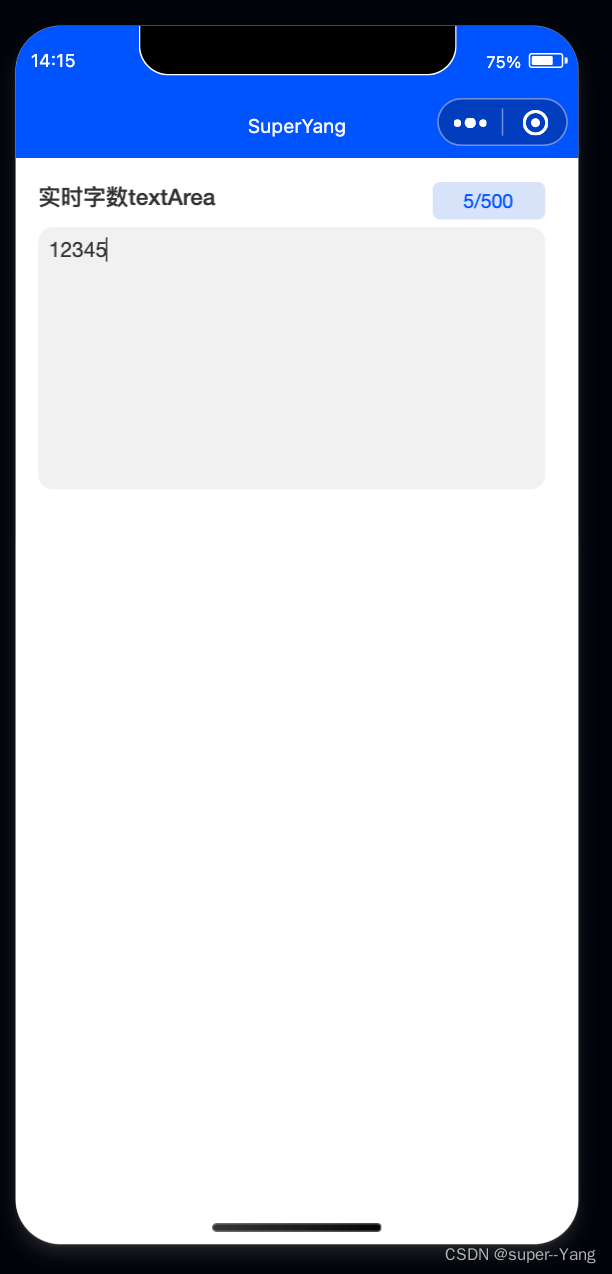

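WeChat Mini Program: updating the textarea character count in real time

I. Preface

This article shows how to display the character count live as the user types into a textarea in a mini program, which gives a better input experience and clearer feedback.

The figure below shows the result.

II. Code implementation

2-1. WXML code

<view style="padding: 30rpx;"><view style="…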

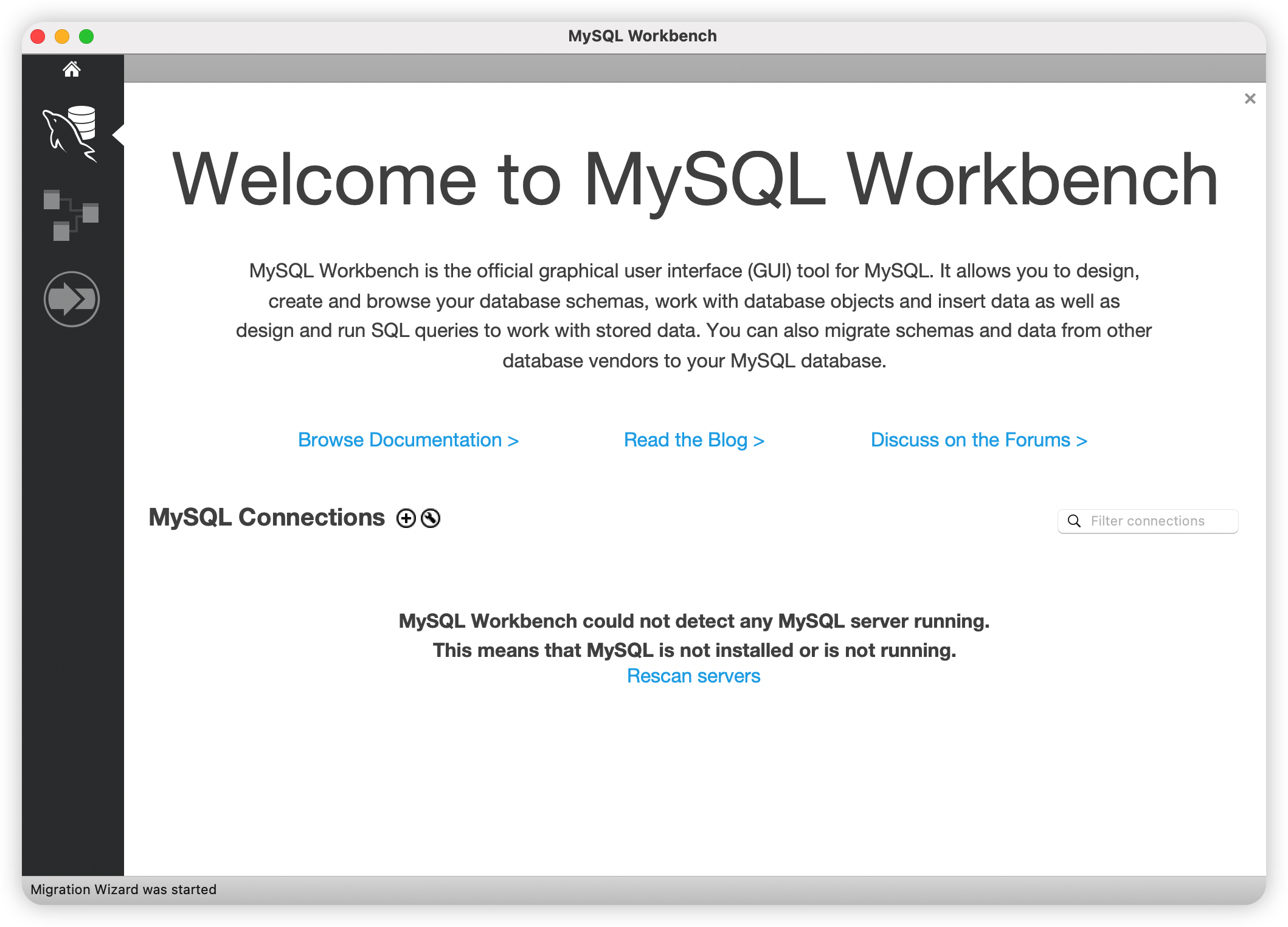

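MySQL Database Course Series 04: Installing MySQL Workbench

Workbench is a free, powerful visual tool released officially by MySQL. Anyone who is not comfortable with the command-line tools can install it and write SQL to insert, delete, update, and query data in a database. The following walks through the Workbench installation in detail.

I. Installing Workbench on Windows

…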

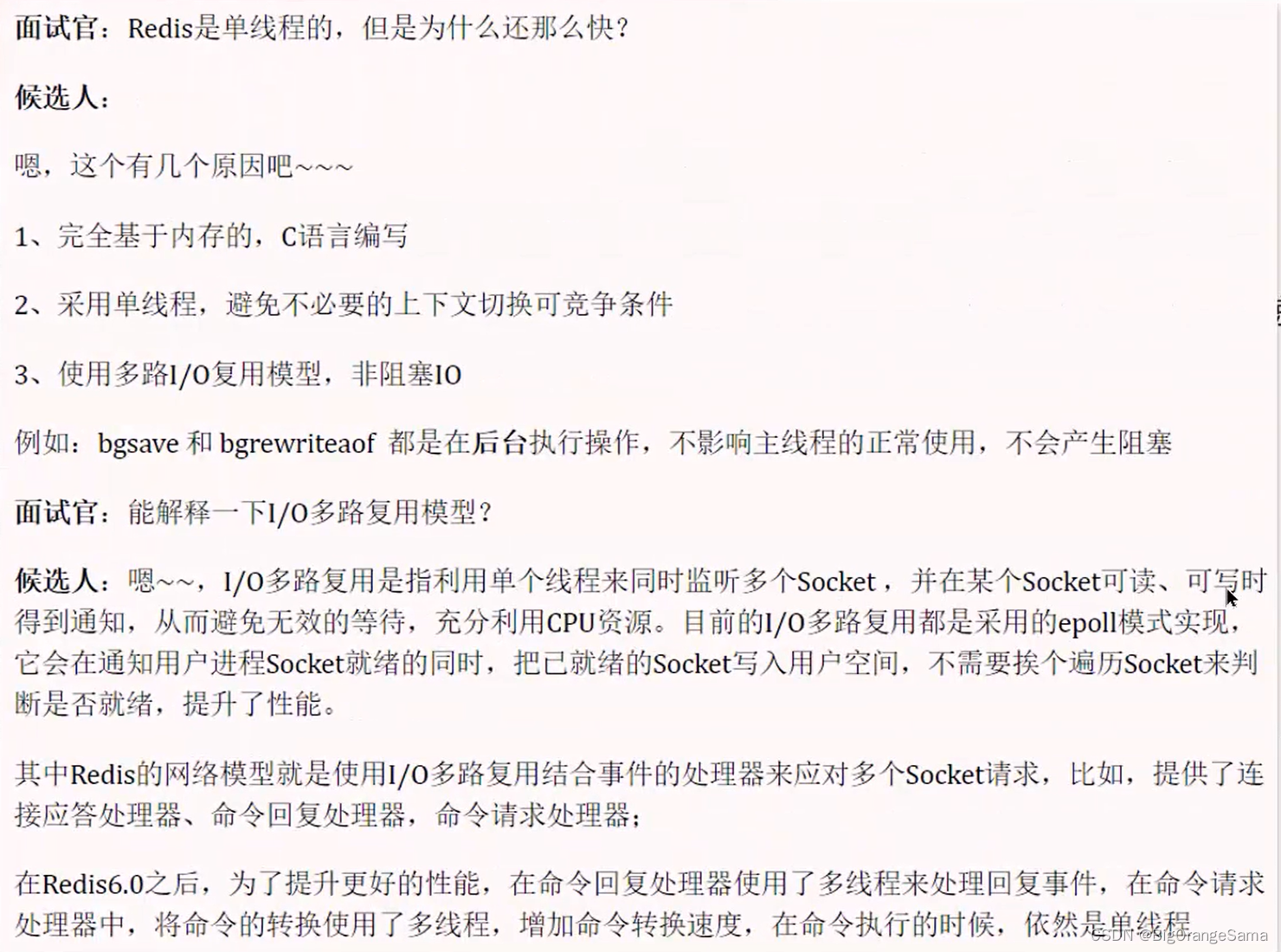

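Java interview staples: Redis

Redis part 01: Redis introduction, bilibili

1. Cache penetration
2. Cache breakdown: the mutex used in the logical-expiration scheme ensures that only one thread rebuilds the cache
3. Cache avalanche
4. Double-write consistency
4.1 When consistency is required (delayed double delete / mutex): delayed double delete cannot guarantee strong consistency. So which of the first two steps comes first, deleting the cache or updating the database…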
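The role of the mutex under logical expiration can be sketched in a few lines of Python. This is an in-memory stand-in under stated assumptions, not Redis code: a plain dict plays the cache, a single `threading.Lock` plays the distributed lock, and the class name `LogicalExpiryCache` and the `loader` callback are hypothetical. Only the thread that wins the lock rebuilds; every other reader gets the stale value back immediately.

```python
import threading
import time


class LogicalExpiryCache:
    """Illustrative only: entries never vanish, they just carry an expiry stamp."""

    def __init__(self, loader, ttl_seconds):
        self._loader = loader          # hypothetical callback that reads the database
        self._ttl = ttl_seconds
        self._data = {}                # key -> (value, logical_expire_at)
        # One lock for all keys is a simplification; a real setup would lock per key.
        self._rebuild_lock = threading.Lock()

    def preload(self, key):
        # Hot keys are loaded ahead of time, so readers never see a missing entry.
        self._data[key] = (self._loader(key), time.monotonic() + self._ttl)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None                # not a preloaded hot key
        value, expire_at = entry
        if time.monotonic() < expire_at:
            return value               # still logically fresh
        # Logically expired: try to become the single rebuilder without blocking.
        if self._rebuild_lock.acquire(blocking=False):
            try:
                value, expire_at = self._data[key]
                if time.monotonic() >= expire_at:   # re-check after winning the lock
                    self.preload(key)
                    value = self._data[key][0]
            finally:
                self._rebuild_lock.release()
        # Threads that lost the race fall through and return the stale value.
        return value
```

In this sketch the lock winner rebuilds synchronously to keep the example short; handing the rebuild to a background thread and returning the stale value to the winner as well is an equally valid variation.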

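A Dingxi Poverty Alleviation and Farmer-Benefit Promotion Volunteer System Built on the Java SSM Framework [Project Source Code + Thesis Notes]

Demo of the Dingxi poverty alleviation and farmer-benefit promotion volunteer system built on the Java SSM framework. Abstract

Poverty alleviation is an important strategic initiative of the CPC Central Committee and the State Council. Designated poverty alleviation by Party and government bodies is a key part of China's poverty alleviation and development strategy and a major measure in the new stage of that work; it plays a positive role in advancing the economic and social development of impoverished areas.

This…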

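EtherCAT "Configure Slave Address" frame analysis (0x0010:0x0011)

The frames are analyzed on an IgH master with three slaves attached.

Slave register involved: Configured Station Address, 0x0010:0x0011. Example usage scenario:

After IgH starts, it clears every slave's address to zero with a "configure slave address" frame, then uses the APWR command in a "configure slave address" frame…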

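TIA Portal WinCC: implementing a rotating animation for a motor fan or water pump with a VBS script

An earlier article covered how to implement the rotating animation for a motor fan or water pump by programming in the PLC and combining it with the HMI's graphic I/O field. For details, see the following link:

"TIA Portal WinCC: example of creating a rotating animation for a motor fan or water pump". This article shares how to use a VBS script to…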

Compiling CUDA programs on Windows with VS Code + CMake

1. Install Nsight Visual Studio Code Edition in VS Code. Installing this extension enables syntax checking for CUDA code. 2. Write the CUDA program

#include <iostream>

__global__ void mykernelfunc(){};

int main()
{
    mykernelfunc<<<1,1>>>();
    std::cout << "hel…

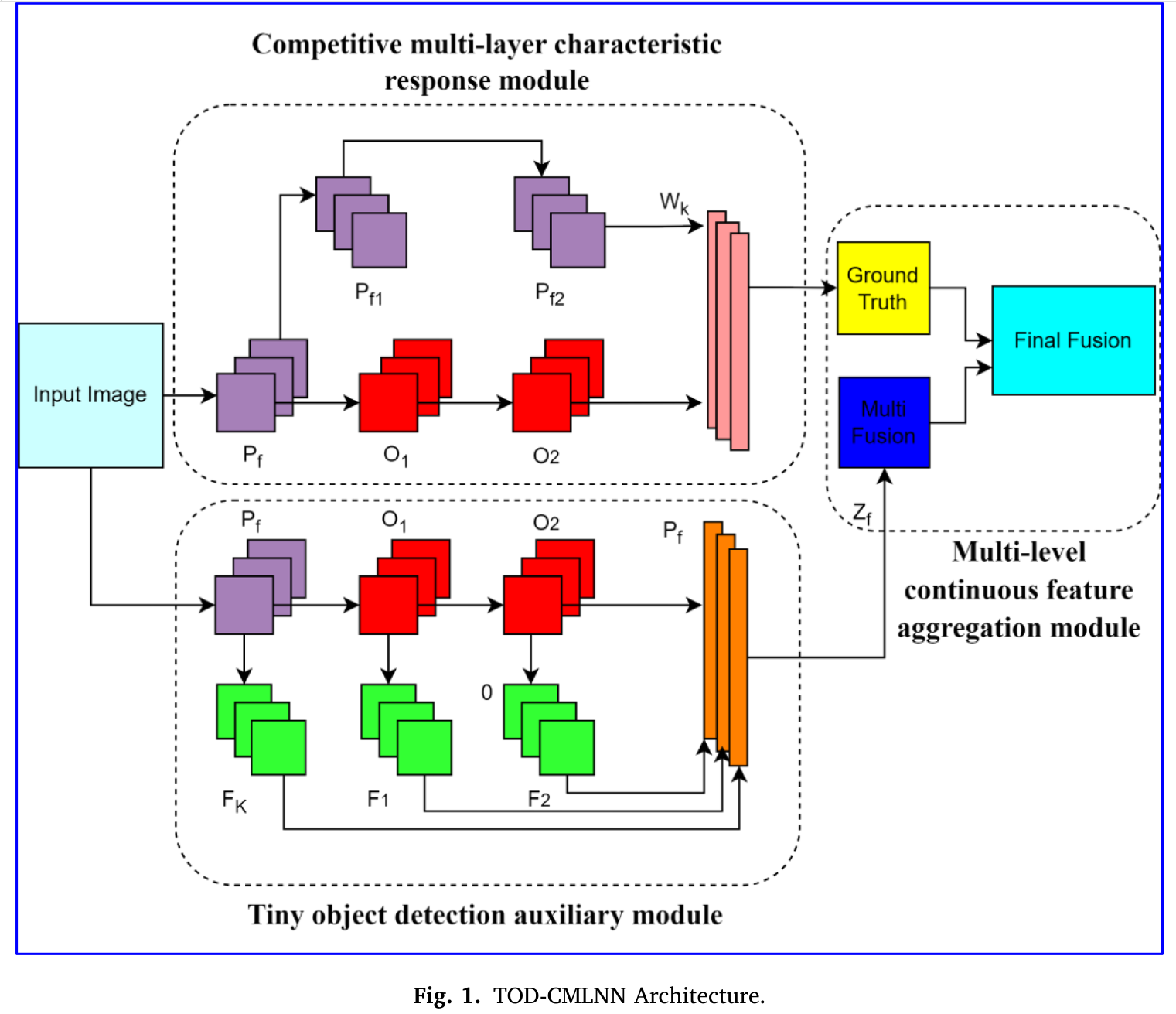

Tiny Object Detection

Table of contents: RFLA: Gaussian Receptive Field based Label Assignment for Tiny Object Detection (ECCV2022); Dynamic Coarse-to-Fine Learning for Oriented Tiny Object Detection (CVPR2023); TOD-CMLNN (2023) …

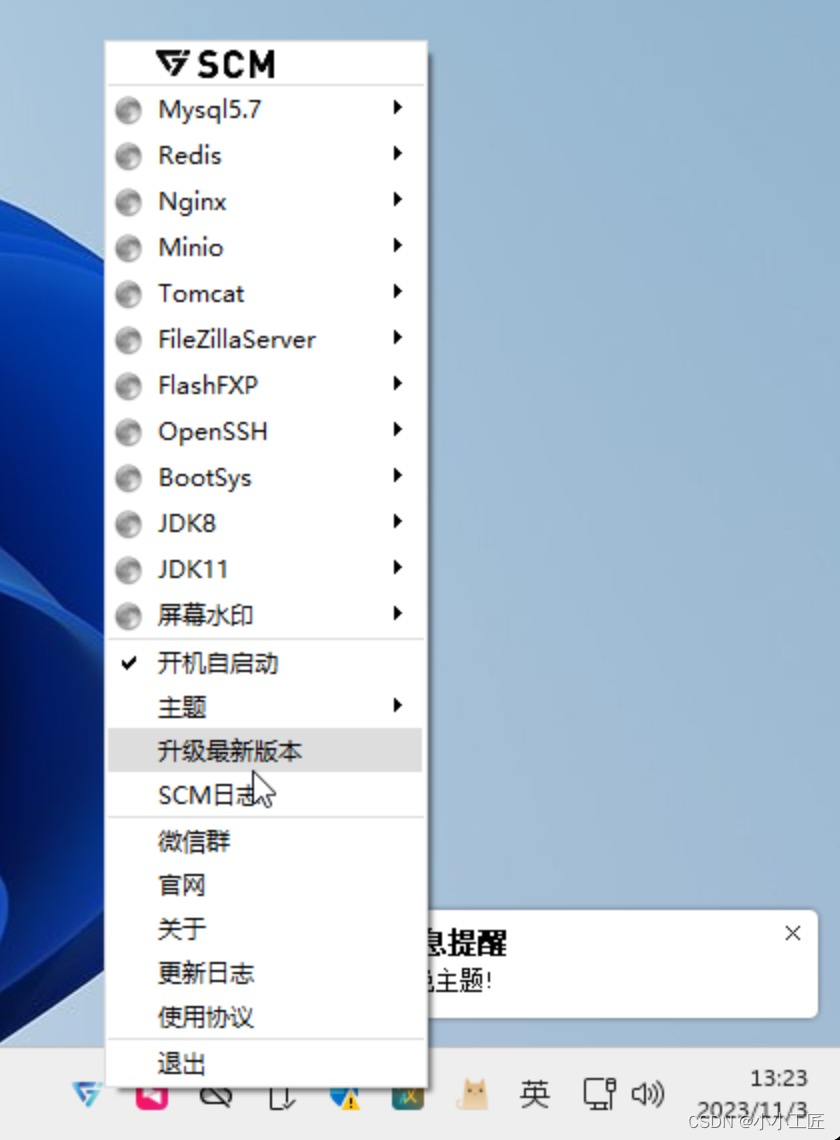

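OpenSource - SCM Service Management Platform

Table of contents: Official site; Documentation; Download; Versions; Features; Problems it solves; Intended users; Advantages; Linux version (scm-dev deb service list); Windows version (scm-dev service list, scm-all service list, scm-jdk service list, scm-springboot lite version service list, scm-springboot service list, scm-tomcat service list); SCM screenshots

Official site

https://scm.chus…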

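RabbitMQ Beginner's Guide (7): Producer Reliability

Column navigation: RabbitMQ Beginner's Guide; Understanding Big Data from Scratch

Contents

Column navigation

Preface

I. Ways a message can be lost

1. Lost while being sent

2. Lost because of the MQ

3. Lost while the consumer is processing it

II. Producer reliability

1. Producer retry mechanism

2. Producer confirm mechanism

Summary

Preface

Ra…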

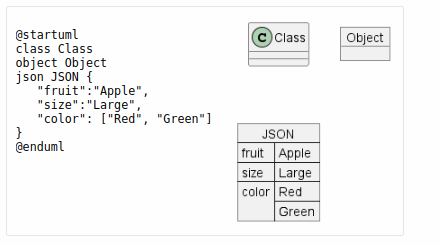

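PlantUML: An Introduction to Object Diagram Syntax (19)

About: CSDN blog expert focused on Android/Linux systems, sharing multi-mic voice solutions, audio/video, codecs, and related topics, and growing together with readers! Featured column: Audio Engineer Advanced Series [original content, continuously updated…] 🚀 Featured column: Multimedia…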

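VS Code pops up PowerShell when running code

Problem

After installing VS Code and the Code Runner extension, running code always opens a separate PowerShell window instead of showing the result in the terminal at the bottom of VS Code.

Solution

Open the system cmd, right-click the title bar at the top of the window and open Properties, then uncheck the legacy console option at the bottom.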

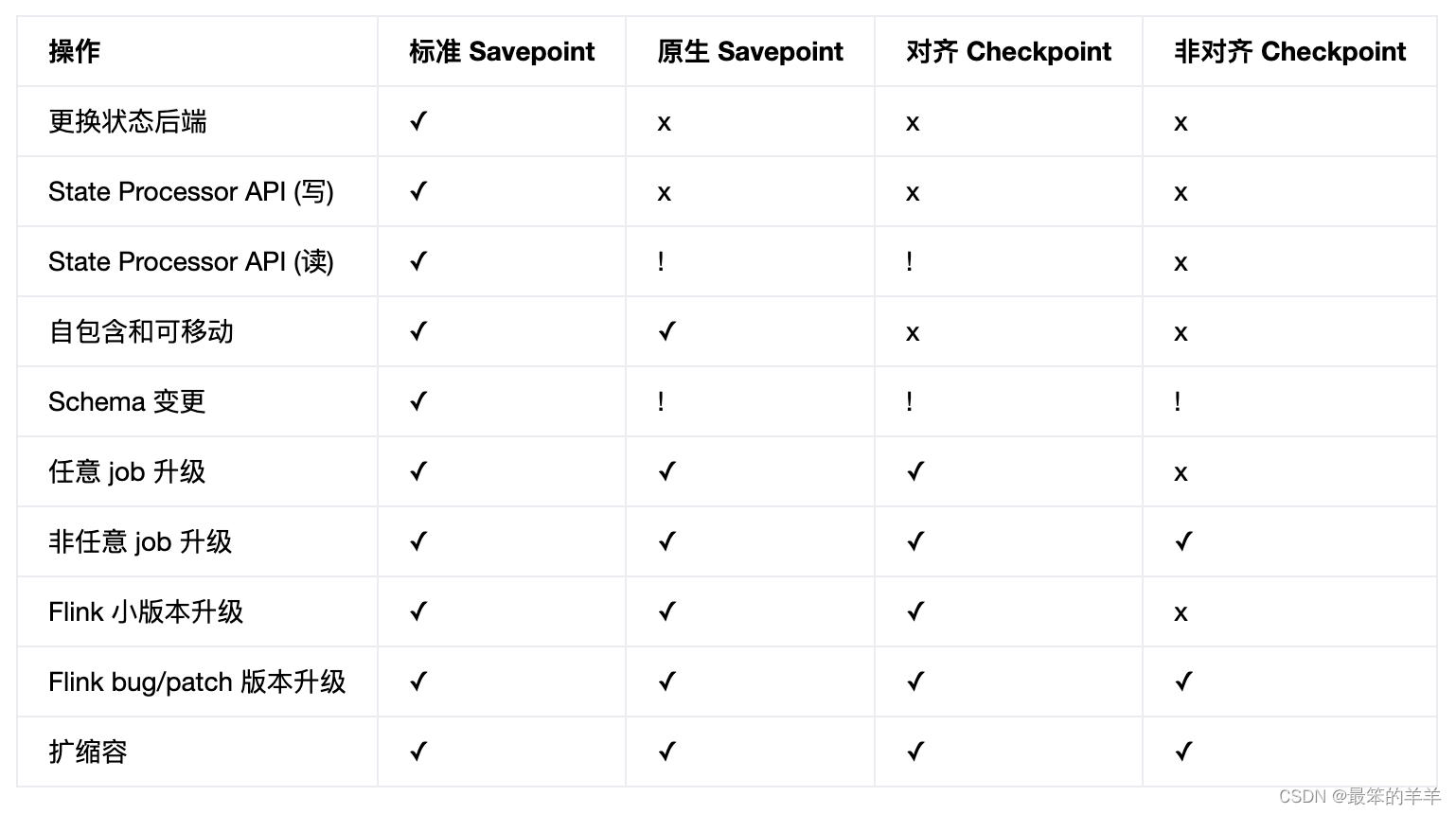

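Flink Series: Checkpoints and Savepoints

Contents: I. Overview; II. Features and limitations

I. Overview

Conceptually, Flink's savepoints differ from checkpoints in much the same way that backups differ from recovery logs in traditional database systems.

The primary purpose of checkpoints is to provide a recovery mechanism for jobs that fail unexpectedly. …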

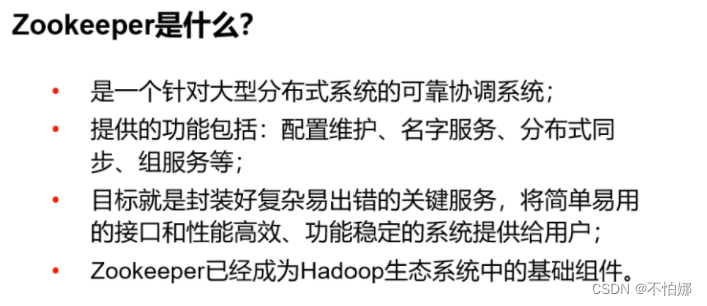

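[Hadoop] What is ZooKeeper, and how does it work?

Contents: What ZooKeeper is; How ZooKeeper works

What ZooKeeper is

ZooKeeper is an open-source, distributed Apache project that provides coordination services for other distributed frameworks. Put simply, "distributed" means multiple machines working together to complete one task.

How ZooKeeper works

From a design-pattern perspective, it is built on the observer pattern…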
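The observer pattern mentioned here can be illustrated with a small Python sketch. This is not ZooKeeper's client API: `TinyRegistry`, `watch`, `set`, and `get` are hypothetical names for an in-process toy that keeps data under paths and notifies every registered observer when a path changes. Real ZooKeeper watches have their own semantics that this toy does not reproduce.

```python
from collections import defaultdict


class TinyRegistry:
    """Toy subject in the observer pattern: stores values and notifies watchers."""

    def __init__(self):
        self._data = {}
        self._watchers = defaultdict(list)  # path -> list of callbacks

    def watch(self, path, callback):
        # An observer registers interest in one path.
        self._watchers[path].append(callback)

    def set(self, path, value):
        # Store the new state, then notify every observer of that path.
        self._data[path] = value
        for callback in list(self._watchers[path]):
            callback(path, value)

    def get(self, path):
        return self._data.get(path)


if __name__ == "__main__":
    registry = TinyRegistry()
    registry.watch("/servers", lambda path, value: print("changed:", path, value))
    registry.set("/servers", ["node1"])
```

The point of the pattern is that the code calling `set` does not need to know who is listening; observers subscribe once and react when the state they care about changes.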

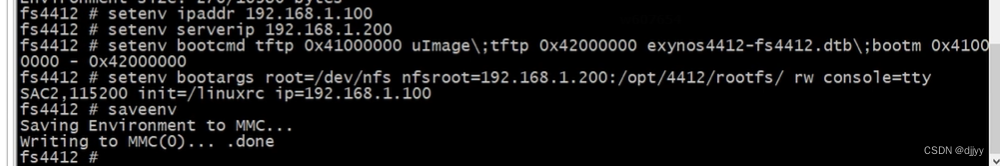

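LV.13 D6 Linux Kernel Installation and Cross-Compilation: Study Notes

I. Loading the Linux kernel and rootfs over tftp

1.1 U-Boot kernel boot command

bootm boots the Linux kernel located at the given memory address and passes parameters to the kernel: bootm kernel-addr ramdisk-addr dtb-addr. Notes: kernel-addr: the address the kernel was downloaded to; ramdisk-addr: the address the root filesystem was downloaded to …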

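postMessage: sharing localStorage/sessionStorage/Cookies directly between cross-origin pages (skill building)

Recently I ran into a problem: sharing localStorage or cookies between two web pages of different origins.

I actually hit this once last week, found it a hassle, and had the backend handle it a different way. Yesterday the same problem came up again.

Use case

For example, navigating from page A to page B through an iframe, where the two pages…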

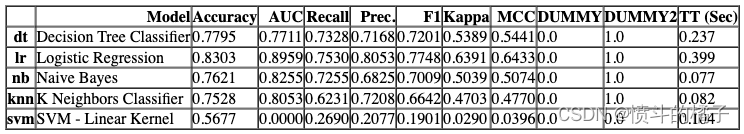

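Tools Series: Introduction to PyCaret, Fugue Integration, Distributed Training with Spark and Dask

Table of contents: 1. Distributed computing scenarios: (1) Classification, (2) Regression, (3) Time series; 2. Distributed application tips: (1) A more practical example, (2) Use a lambda rather than a dataframe in setup, (3) Keep determinism, (4) Set n_jobs, (4) Set an appropriate batch size, (5) Show progress, (6) …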

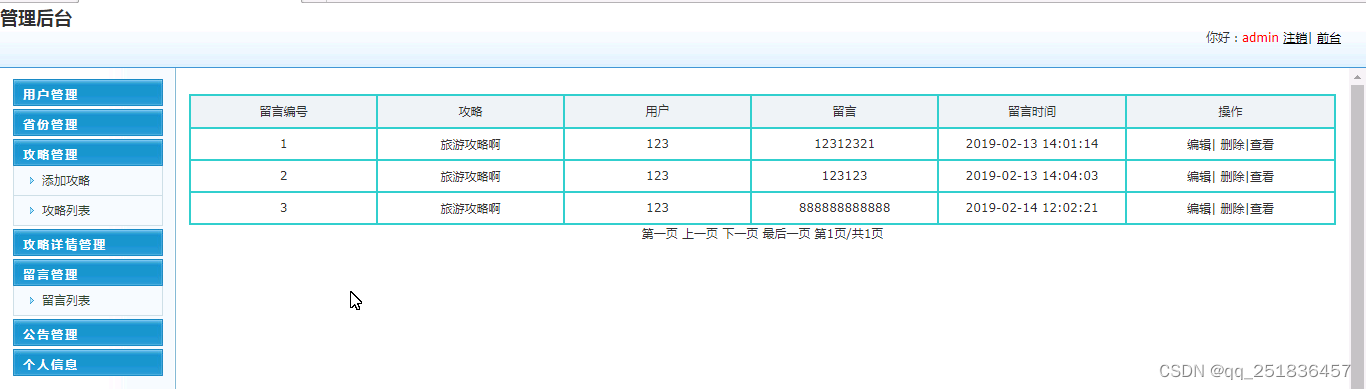

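Java travel guide management system: MyEclipse development, MySQL database, web architecture, Java programming, computer web project

I. Source code features: The Java Web travel guide management system is a complete Java web information management system that helps in understanding JSP and Java development. It comes with complete source code and a database, and is developed mainly in the B/S model. The development environment is TOMCAT7.0 with Myeclipse8.5, and the database is Mysql…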

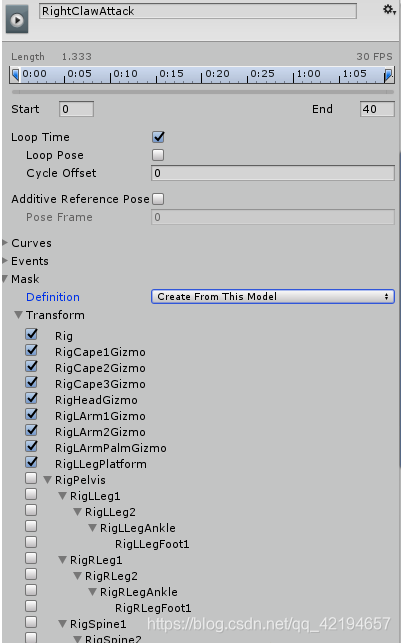

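Animation Layers and Avatar Masks in Unity's New Animation System

Contents: I. Introduction; II. Using avatar masks on animation layers: the first way is to create an Avatar Mask, as follows; the second kind of mask is changed directly in the animation clip's properties (Figure 1 shows the properties of a humanoid animation clip)

I. Introduction

I previously shared the FSM animation control…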