
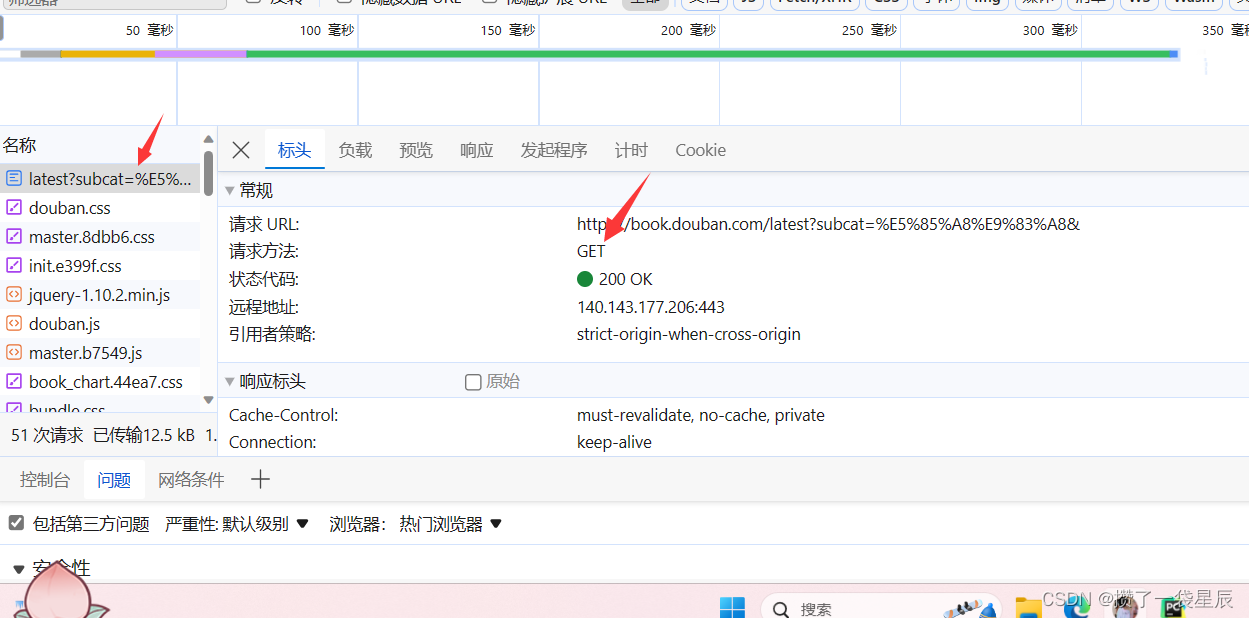

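Open the site and inspect it in the browser's developer tools to determine the request method and the related request data.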
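As a minimal sketch of what that inspection yields: the listing URL used by the script in this post is a plain GET request with two query parameters. The helper name `build_page_url` and the constant `BASE_URL` are hypothetical, and reading `subcat` as the category and `p` as the page number is an inference from that URL, not something the site documents here.

```python
from urllib.parse import urlencode

# Base address of the listing page, taken from the URL used in this post.
BASE_URL = "https://book.douban.com/latest"


def build_page_url(page, subcat="全部"):
    """Build the listing URL for one page (hypothetical helper).

    urlencode percent-encodes the UTF-8 bytes of the category name,
    which is how "全部" becomes %E5%85%A8%E9%83%A8 in the address bar.
    """
    query = urlencode({"subcat": subcat, "p": page})
    return f"{BASE_URL}?{query}"


print(build_page_url(1))
# → https://book.douban.com/latest?subcat=%E5%85%A8%E9%83%A8&p=1
```

Building the query string this way keeps the readable category name in the source code and leaves the percent-encoding to the standard library.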


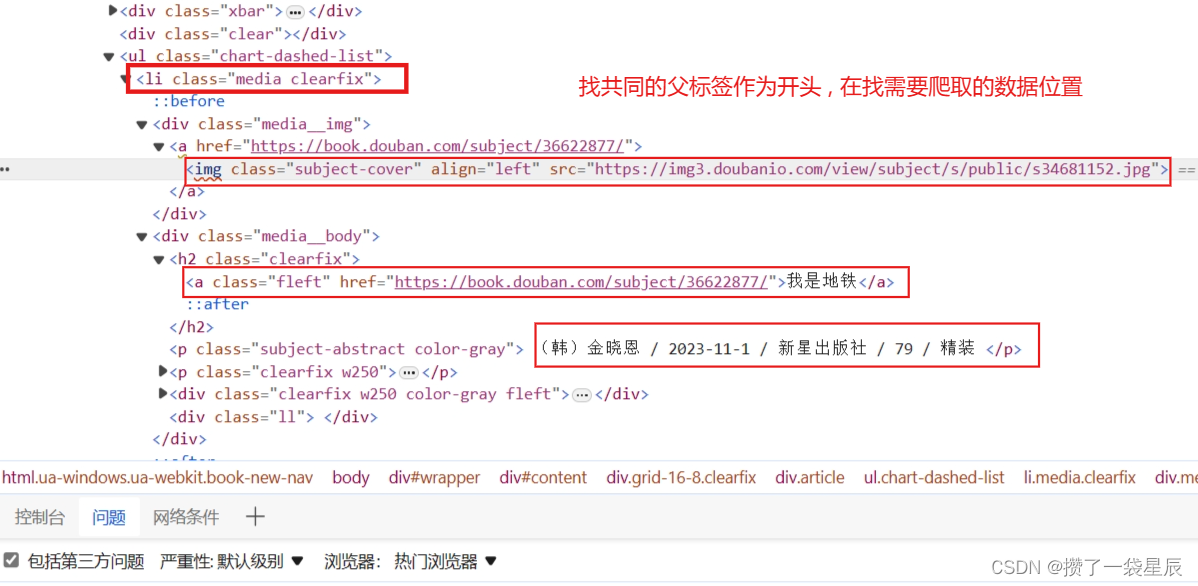

Locate the elements that hold the data to scrape.


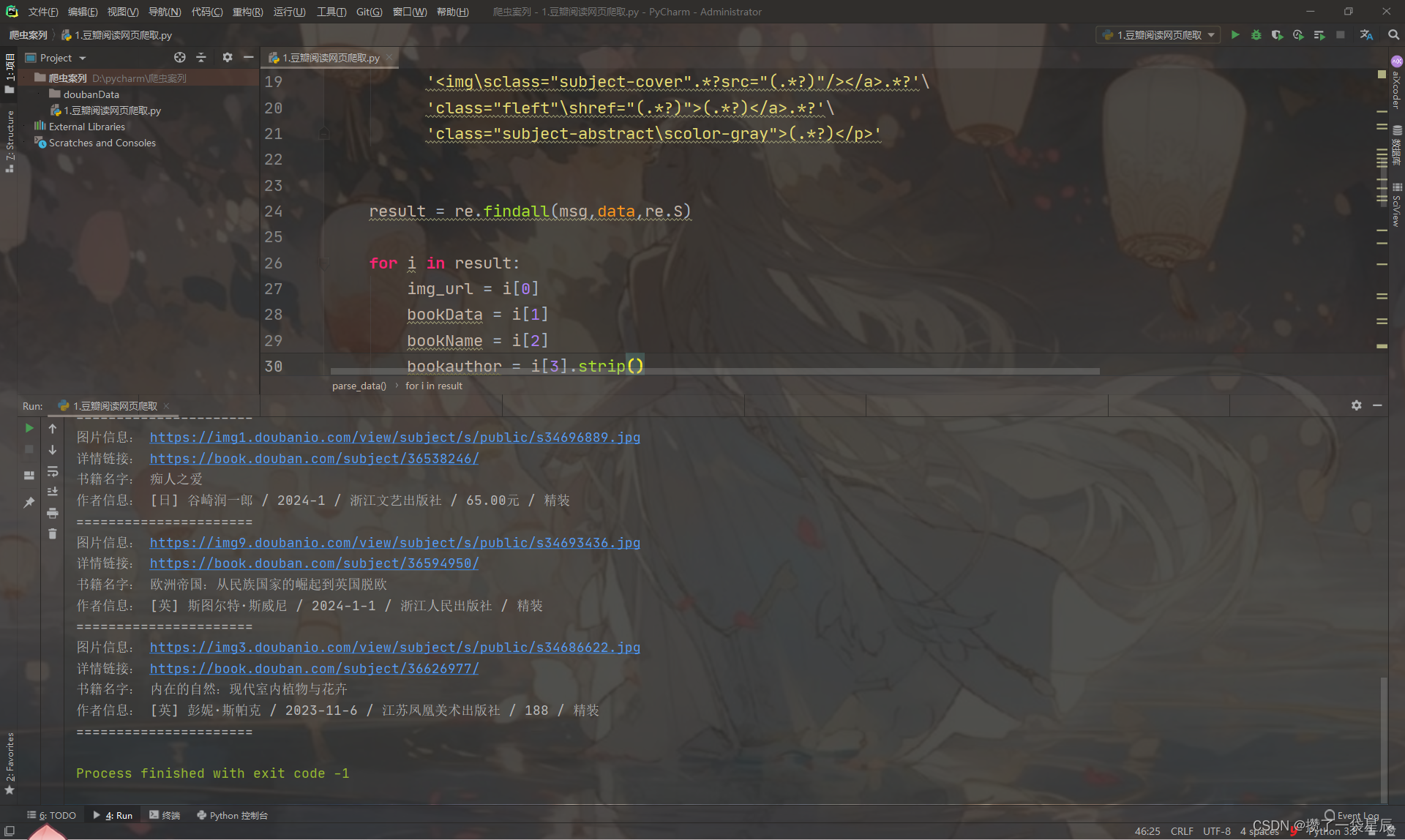

Start writing the code.

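    import os
    import re

    import requests

    # Request headers. The original post does not show this value; a
    # browser-like User-Agent is assumed here as a placeholder.
    headers = {'User-Agent': 'Mozilla/5.0'}


    # Connect to the site
    def url_link(url):
        res = requests.get(url, headers=headers)
        response = res.text
        parse_data(response)


    # Scrape the information
    def parse_data(data):
        # Raw strings keep \s as a regex token instead of a string escape.
        msg = (
            r'<li\sclass="media\sclearfix">.*?'
            r'<img\sclass="subject-cover".*?src="(.*?)"/></a>.*?'
            r'class="fleft"\shref="(.*?)">(.*?)</a>.*?'
            r'class="subject-abstract\scolor-gray">(.*?)</p>'
        )
        # re.S lets "." match newlines, so one pattern spans a whole <li> block.
        result = re.findall(msg, data, re.S)
        for i in result:
            img_url = i[0]
            bookData = i[1]
            bookName = i[2]
            bookauthor = i[3].strip()
            print('Image URL:', img_url)
            print('Detail link:', bookData)
            print('Book title:', bookName)
            print('Author info:', bookauthor)
            print('======================')
            keep_data(bookName, img_url, bookData, bookauthor)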
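To see what the pattern in `parse_data` captures without hitting the network, it can be run against a small HTML fragment. The fragment below is hypothetical: it is modeled only on the class names that appear in the regular expression, not copied from the live page, and `extract_books` is an illustrative helper name.

```python
import re

# Same pattern as in parse_data, compiled once. re.S lets "." span newlines.
BOOK_PATTERN = re.compile(
    r'<li\sclass="media\sclearfix">.*?'
    r'<img\sclass="subject-cover".*?src="(.*?)"/></a>.*?'
    r'class="fleft"\shref="(.*?)">(.*?)</a>.*?'
    r'class="subject-abstract\scolor-gray">(.*?)</p>',
    re.S,
)

# Hypothetical markup for a single book entry (an assumption for illustration).
SAMPLE_HTML = """
<li class="media clearfix">
  <a href="https://example.com/book/1"><img class="subject-cover" alt="" src="https://example.com/cover1.jpg"/></a>
  <div>
    <h2><a class="fleft" href="https://example.com/book/1">Example Book</a></h2>
    <p class="subject-abstract color-gray">
      Jane Doe / 2024 / Example Press
    </p>
  </div>
</li>
"""


def extract_books(html):
    """Return one dict per matched book entry, with whitespace trimmed."""
    books = []
    for img_url, detail_url, name, author in BOOK_PATTERN.findall(html):
        books.append({
            "name": name.strip(),
            "img_url": img_url,
            "detail_url": detail_url,
            "author": author.strip(),
        })
    return books


print(extract_books(SAMPLE_HTML))
```

Because the four capture groups come back as a tuple in pattern order, unpacking them by name makes it harder to mix up the image URL, detail link, title, and author later on.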

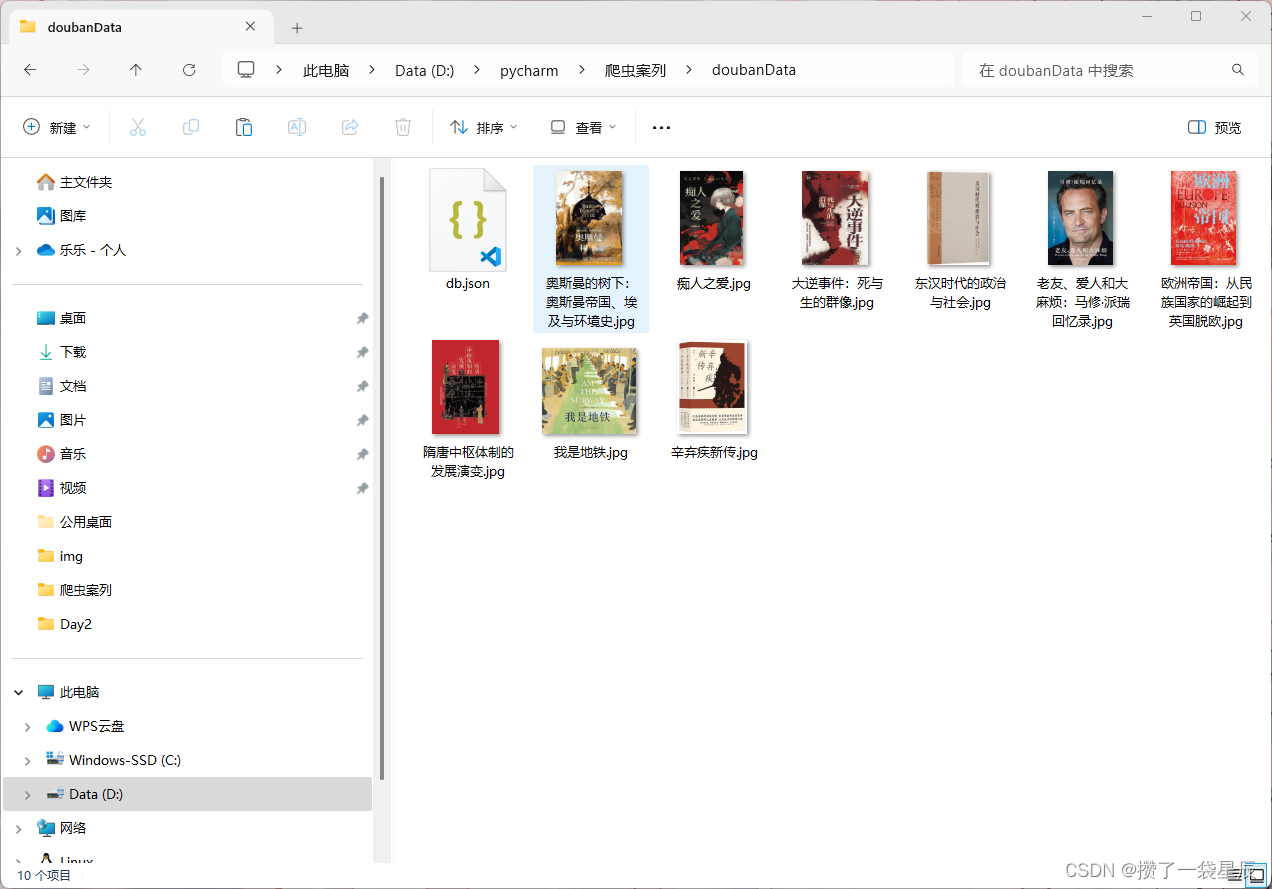

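    # Save the data
    def keep_data(name, img, data, author):
        # Parameter order matches the call in parse_data:
        # book title, cover image URL, detail-page URL, author info.
        # Create the output folder
        if not os.path.exists('doubanData'):
            os.mkdir('doubanData')
        # Save the book information (plain text, despite the .json extension)
        with open(os.path.join('doubanData', 'db.json'), 'a', encoding='utf-8') as f:
            f.write("Book title: " + name + "\n")
            f.write("Image URL: " + img + "\n")
            f.write("Detail page: " + data + "\n")
            f.write("Author: " + author + "\n\n")
        # Download the cover image and save it under the book title
        urlDta = requests.get(img).content
        with open('doubanData/{}.jpg'.format(name), 'wb') as f:
            f.write(urlDta)


    if __name__ == '__main__':
        # Set the number of pages to scrape
        for i in range(1, 6):
            url = f'https://book.douban.com/latest?subcat=%E5%85%A8%E9%83%A8&p={i}'
            print(f"Scraping page {i}")
            print("====================================================================")
            url_link(url)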
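The cover image is written to a file named after the book title, so a title containing characters such as `/` or `:` can make the `open` call fail. The helper below is a hedged sketch of one way to guard against that; `safe_filename` is a hypothetical name, not part of the original script, and the character set is an assumption covering characters that are commonly rejected on Windows and Unix-like systems.

```python
import re


def safe_filename(name, replacement="_"):
    """Replace characters that commonly break file paths (hypothetical helper).

    Falls back to "untitled" if nothing usable is left after cleaning.
    """
    cleaned = re.sub(r'[\\/:*?"<>|]', replacement, name).strip()
    return cleaned or "untitled"


print(safe_filename("A/B: C?"))
# → A_B_ C_
```

In the script, the result could be passed to the `'doubanData/{}.jpg'.format(...)` call in place of the raw title.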

Final result
