I recently built a ChatGPT tool for my company. This post shows how it calls the OpenAI API.

Prerequisites

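An account on the OpenAI platform (from mainland China, reaching the site requires a VPN or proxy) and an API secret key (starting with sk-).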

URL: https://platform.openai.com
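Secret keys are safer outside the source code. Below is a minimal sketch that reads the key from an environment variable and masks it for logging. The class `ApiKeyLoader` and the variable name `OPENAI_API_KEY` are hypothetical choices for illustration. Neither OpenAI nor chatgpt-java requires them.

```java
import java.util.Map;
import java.util.Optional;

/**
 * Hypothetical helper: resolves the OpenAI secret key from the environment
 * instead of hard-coding it in source.
 */
public class ApiKeyLoader {

    // Assumed variable name; not mandated by OpenAI or chatgpt-java
    public static final String ENV_NAME = "OPENAI_API_KEY";

    /** Returns the trimmed key if the variable is set and starts with "sk-". */
    public static Optional<String> resolveKey(Map<String, String> env) {
        String value = env.get(ENV_NAME);
        if (value == null) {
            return Optional.empty();
        }
        value = value.trim();
        // OpenAI secret keys start with "sk-"; reject anything else early
        if (!value.startsWith("sk-")) {
            return Optional.empty();
        }
        return Optional.of(value);
    }

    /** Keeps only the last four characters so the key can be logged safely. */
    public static String mask(String key) {
        if (key.length() <= 4) {
            return "****";
        }
        return "****" + key.substring(key.length() - 4);
    }

    public static void main(String[] args) {
        Optional<String> key = resolveKey(System.getenv());
        System.out.println(key.map(ApiKeyLoader::mask).orElse(ENV_NAME + " is not set"));
    }
}
```

The resolved value can then be passed to the client builder in the same way the demo passes its key, i.e. `.apiKey(Arrays.asList(key))`.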

Dependency

The OpenAI API is called through the open-source chatgpt-java library. The Maven dependency is:

```xml
<dependency>
    <groupId>com.unfbx</groupId>
    <artifactId>chatgpt-java</artifactId>
    <version>1.1.2-beta0</version>
</dependency>
```

Code example

import cn.hutool.core.collection.CollUtil;

import cn.hutool.core.map.MapUtil;import com.unfbx.chatgpt.OpenAiClient;

import com.unfbx.chatgpt.entity.chat.ChatChoice;

import com.unfbx.chatgpt.entity.chat.ChatCompletion;

import com.unfbx.chatgpt.entity.chat.ChatCompletionResponse;

import com.unfbx.chatgpt.entity.chat.Message;

import com.unfbx.chatgpt.function.KeyRandomStrategy;

import com.unfbx.chatgpt.interceptor.OpenAILogger;

import com.unfbx.chatgpt.interceptor.OpenAiResponseInterceptor;

import com.unfbx.chatgpt.utils.TikTokensUtil;

import lombok.extern.slf4j.Slf4j;

import okhttp3.*;

import okhttp3.logging.HttpLoggingInterceptor;import java.io.IOException;

import java.net.InetSocketAddress;

import java.net.Proxy;

import java.util.Arrays;

import java.util.Collections;

import java.util.List;

import java.util.Map;

import java.util.concurrent.TimeUnit;/*** 请求OpenAI接口示例*/

@Slf4j

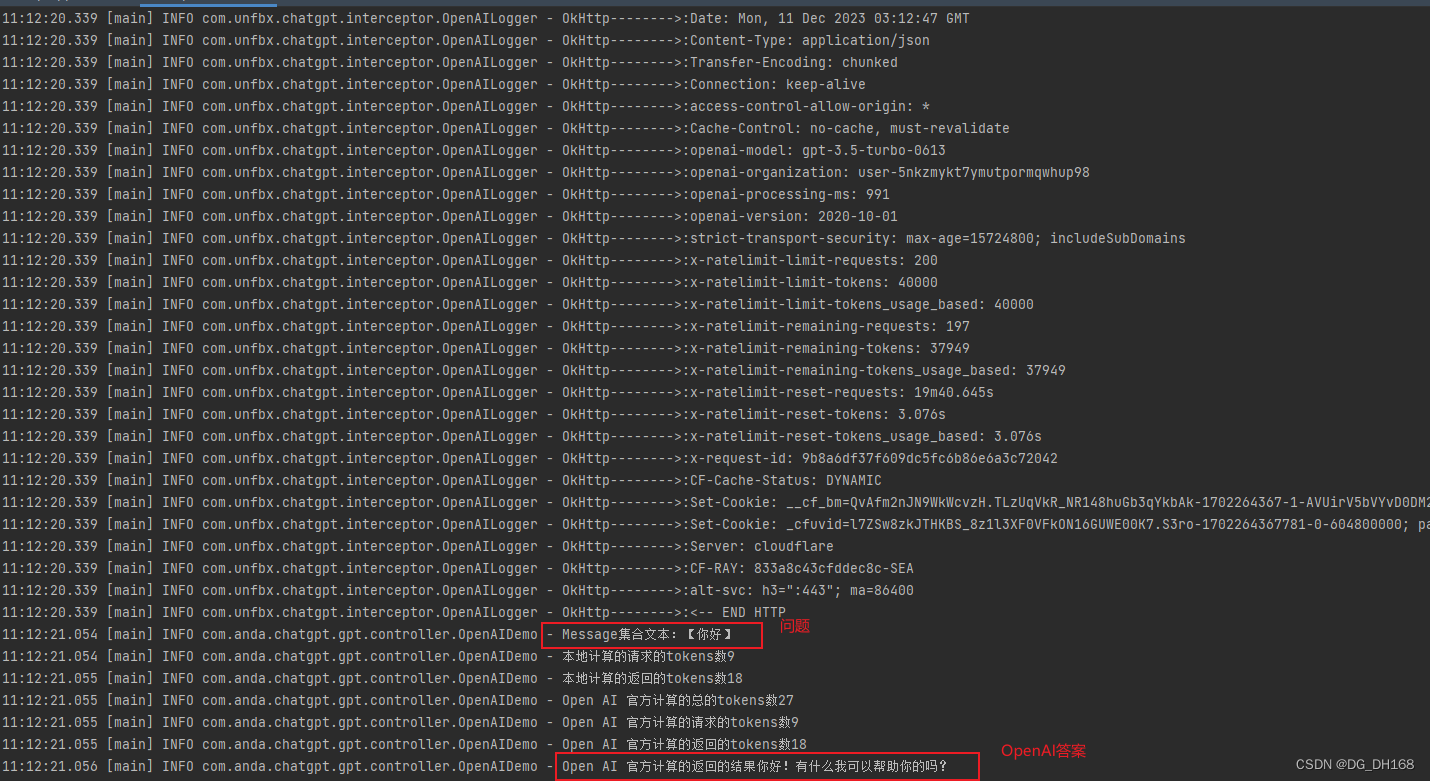

public class OpenAIDemo {public static void main(String[] args) {getOpenAiResult("你好");}private static OpenAiResult getOpenAiResult(String questionContent) {OpenAiClient openAiClient = builderOpenAiClient();//聊天模型:gpt-3.5Message message = Message.builder().role(Message.Role.USER).content(questionContent).build();ChatCompletion chatCompletion = ChatCompletion.builder().messages(Collections.singletonList(message)).model(ChatCompletion.Model.GPT_3_5_TURBO_0613.getName()) //指定模型.build();ChatCompletionResponse chatCompletionResponse = openAiClient.chatCompletion(chatCompletion);List<ChatChoice> choices = chatCompletionResponse.getChoices();//获取请求的tokens数量long tokens = chatCompletion.tokens();//这种方式也可以

// long tokens = TikTokensUtil.tokens(chatCompletion.getModel(),messages);log.info("Message集合文本:【{}】", questionContent);log.info("本地计算的请求的tokens数{}", tokens);log.info("本地计算的返回的tokens数{}", TikTokensUtil.tokens(chatCompletion.getModel(), chatCompletionResponse.getChoices().get(0).getMessage().getContent()));log.info("Open AI 官方计算的总的tokens数{}", chatCompletionResponse.getUsage().getTotalTokens());log.info("Open AI 官方计算的请求的tokens数{}", chatCompletionResponse.getUsage().getPromptTokens());log.info("Open AI 官方计算的返回的tokens数{}", chatCompletionResponse.getUsage().getCompletionTokens());OpenAiResult result = new OpenAiResult();result.setChatCompletion(chatCompletion).setChatCompletionResponse(chatCompletionResponse).setLocalToken(tokens).setCompletionTokens(chatCompletionResponse.getUsage().getCompletionTokens()).setTotalTokens(chatCompletionResponse.getUsage().getTotalTokens()).setPromptTokens(chatCompletionResponse.getUsage().getPromptTokens());if (CollUtil.isNotEmpty(choices)) {result.setContent(choices.get(0).getMessage().getContent());log.info("Open AI 官方计算的返回的结果{}", result.getContent());}return result;}private static OpenAiClient builderOpenAiClient() {Map<String, String> proxyUser = setProxyUser();//可以为nullProxy proxy = new Proxy(Proxy.Type.HTTP,new InetSocketAddress(proxyUser.get("PROXY_HOST"), Integer.valueOf(proxyUser.get("PROXY_PORT"))));//设置socks代理服务器ip端口Authenticator proxyAuthenticator = new Authenticator() {@Overridepublic Request authenticate(Route route, Response response) throws IOException {String credential = Credentials.basic(proxyUser.get("PROXY_USERNAME"), proxyUser.get("PROXY_PASSWORD"));return response.request().newBuilder().header("Proxy-Authorization", credential).build();}};HttpLoggingInterceptor httpLoggingInterceptor = new HttpLoggingInterceptor(new OpenAILogger());//!!!!千万别再生产或者测试环境打开BODY级别日志!!!!//!!!生产或者测试环境建议设置为这三种级别:NONE,BASIC,HEADERS,!!!httpLoggingInterceptor.setLevel(HttpLoggingInterceptor.Level.HEADERS);OkHttpClient okHttpClient = new 
OkHttpClient.Builder().proxy(proxy).proxyAuthenticator(proxyAuthenticator).addInterceptor(httpLoggingInterceptor).addInterceptor(new OpenAiResponseInterceptor()).connectTimeout(60, TimeUnit.SECONDS).writeTimeout(60, TimeUnit.SECONDS).readTimeout(60, TimeUnit.SECONDS).build();OpenAiClient openAiClient = OpenAiClient.builder()//支持多key传入,请求时候随机选择 openAiSk.apiKey(Arrays.asList("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"))

// .apiKey(Arrays.asList("sk-########"))//自定义key的获取策略:默认KeyRandomStrategy.keyStrategy(new KeyRandomStrategy()).okHttpClient(okHttpClient)//自己做了代理就传代理地址

// .apiHost("https://自己代理的服务器地址/").build();return openAiClient;}/**

*因为国内不能直接访问OpenAI接口,必须通过翻墙工具访问

*/private static Map<String, String> setProxyUser() {Map<String, String> proxyUser = MapUtil.newHashMap();proxyUser.put("PROXY_USERNAME", "****");proxyUser.put("PROXY_PASSWORD", "****");proxyUser.put("PROXY_HOST", "****");proxyUser.put("PROXY_PORT", "****");return proxyUser;}

}import com.unfbx.chatgpt.entity.chat.ChatCompletion;

import com.unfbx.chatgpt.entity.chat.ChatCompletionResponse;

import lombok.Data;

import lombok.experimental.Accessors;@Data

@Accessors(chain = true)

public class OpenAiResult {/*** 本地接口请求信息*/private ChatCompletion chatCompletion;/*** OpenAI接口返回信息对象*/private ChatCompletionResponse chatCompletionResponse;/*** 本地请求计算的token*/private long localToken;/*** OpenAI接口返回信息*/private String content;/*** 官方计算的请求的tokens数*/private long promptTokens;/*** 官方计算的返回的tokens数{*/private long completionTokens;/*** 官方计算的总的tokens数*/private long totalTokens;

}调用结果
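The demo logs two kinds of token counts. One is estimated locally. The other is what OpenAI reports in the response's usage field. Below is a minimal sketch of a helper that sanity-checks the two. It assumes the official total equals prompt plus completion tokens. It also assumes the local estimate may differ slightly from the official prompt count. The class `TokenUsageCheck` is hypothetical, and the numbers in `main` are made up for illustration, not real API output.

```java
/**
 * Hypothetical helper for checking the token counts collected in OpenAiResult.
 */
public class TokenUsageCheck {

    /** True when the official total equals prompt tokens plus completion tokens. */
    public static boolean isConsistent(long promptTokens, long completionTokens, long totalTokens) {
        return promptTokens + completionTokens == totalTokens;
    }

    /**
     * Relative gap, in percent, between the local estimate and the official prompt count.
     * Returns 0 when the official count is 0, to avoid dividing by zero.
     */
    public static double estimateGapPercent(long localTokens, long officialPromptTokens) {
        if (officialPromptTokens == 0) {
            return 0.0;
        }
        return 100.0 * Math.abs(localTokens - officialPromptTokens) / officialPromptTokens;
    }

    public static void main(String[] args) {
        // Illustrative numbers only
        long prompt = 10, completion = 20, total = 30, local = 9;
        System.out.println("consistent=" + isConsistent(prompt, completion, total));
        System.out.printf("gap=%.1f%%%n", estimateGapPercent(local, prompt));
    }
}
```

With the Lombok-generated getters, the result can be checked with a call like `TokenUsageCheck.isConsistent(result.getPromptTokens(), result.getCompletionTokens(), result.getTotalTokens())`.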

Configuration file details
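The demo hard-codes the proxy settings in `setProxyUser()`. Below is a minimal sketch of reading them from a properties file instead. It returns `Proxy.NO_PROXY` when no host is configured. The class `ProxyConfig` and the keys `proxy.host` and `proxy.port` are hypothetical. Neither chatgpt-java nor OkHttp defines them.

```java
import java.io.IOException;
import java.io.StringReader;
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.util.Properties;

/**
 * Hypothetical sketch: build the HTTP proxy from properties
 * instead of hard-coding it in setProxyUser().
 */
public class ProxyConfig {

    /** Returns an HTTP proxy, or Proxy.NO_PROXY (direct connection) when no host is set. */
    public static Proxy toProxy(Properties props) {
        String host = props.getProperty("proxy.host", "").trim();
        if (host.isEmpty()) {
            return Proxy.NO_PROXY;
        }
        // Throws NumberFormatException for a non-numeric port such as "****"
        int port = Integer.parseInt(props.getProperty("proxy.port", "").trim());
        return new Proxy(Proxy.Type.HTTP, new InetSocketAddress(host, port));
    }

    public static void main(String[] args) throws IOException {
        // In a real application these lines would come from a file on disk
        Properties props = new Properties();
        props.load(new StringReader("proxy.host=127.0.0.1\nproxy.port=7890\n"));
        System.out.println(toProxy(props));
    }
}
```

OkHttp accepts `Proxy.NO_PROXY` in `OkHttpClient.Builder.proxy(...)`, so the same client-building code can work both with and without a proxy.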