一、前言

歌词上做文本分析,数据存储在网页上,需要爬取数据下来,词云展示在工作中也变得日益重要,接下来将数据爬虫与可视化结合起来,做个词云展示案例。

二、操作步骤

代码如下:

# -*- coding:utf-8 -*-

# 网易云音乐 通过获取每首歌ID,生成该歌的词云

import requests

import sys

import re

import os

from wordcloud import WordCloud

import matplotlib.pyplot as plt

import jieba

from PIL import Image

import numpy as np

from lxml import etree%matplotlib inlineheaders = {'Referer' :'http://music.163.com','Host' :'music.163.com','Accept' :'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8','User-Agent':'Chrome/10'}# 得到一首歌的歌词

def get_song_lyric(headers,lyric_url):res = requests.request('GET', lyric_url, headers=headers)if 'lrc' in res.json():lyric = res.json()['lrc']['lyric']new_lyric = re.sub(r'[\d:.[\]]','',lyric)return new_lyricelse:return ''print(res.json())

# 去掉停用词

def remove_stop_words(f):stop_words = ['作词', '陈咏谦', '作曲', 'Howie', '@', 'Dear Jane', '编曲', '关礼琛', '监制', '/', 'Tim']#, '你', '说', '的', '我', '在'for stop_word in stop_words:f = f.replace(stop_word, '')return f

# 生成词云

def create_word_cloud(f):print('根据词频,开始生成词云!')f = remove_stop_words(f)cut_text = " ".join(jieba.cut(f,cut_all=False, HMM=True))wc = WordCloud(font_path="./SimHei.ttf",max_words=100,width=2000,height=1200,)print(cut_text)wordcloud = wc.generate(cut_text)# 写词云图片wordcloud.to_file("wordcloud.jpg")# 显示词云文件plt.imshow(wordcloud)plt.axis("off")plt.show()# 所有歌词

all_word = ''

# 获取每首歌歌词

song_id = '405790387'

song_name = '哪里只得我共你'# 歌词API URL

lyric_url = 'http://music.163.com/api/song/lyric?os=pc&id=' + song_id + '&lv=-1&kv=-1&tv=-1'

lyric = get_song_lyric(headers, lyric_url)

all_word = all_word + ' ' + lyric

print(song_name)

#根据词频 生成词云

create_word_cloud(all_word)三、效果展示:

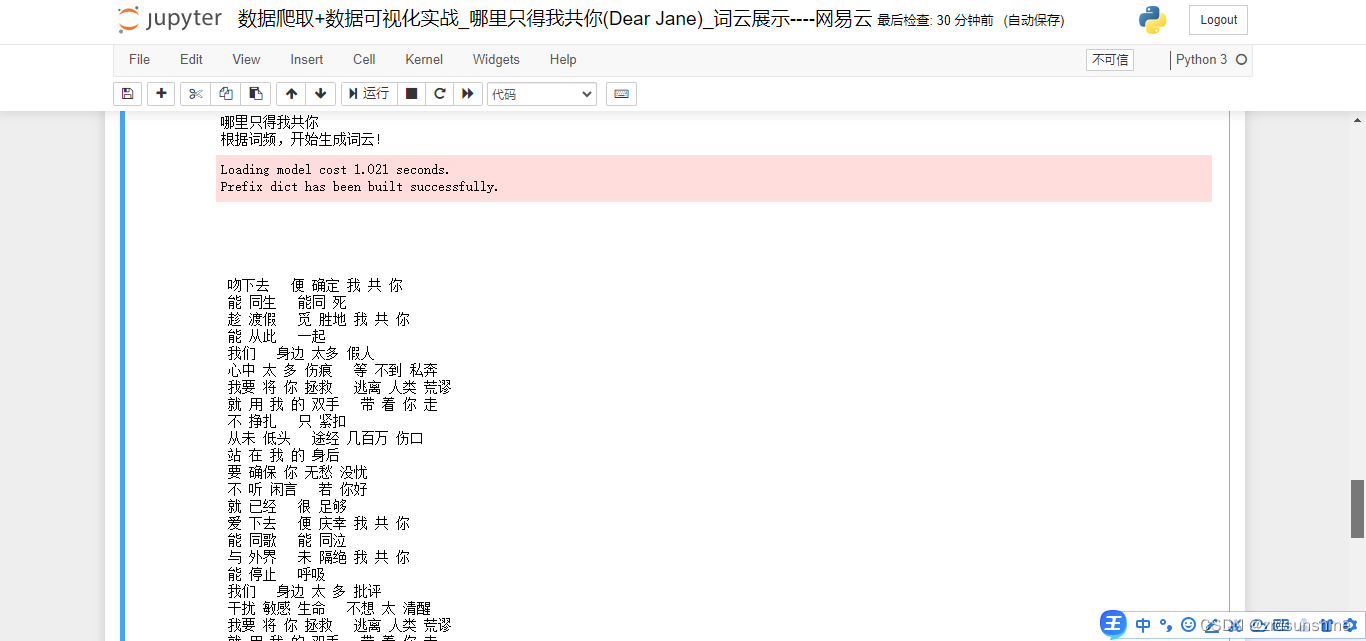

(1)歌词文本展示:

(2)词云图:

:批量合并GDB数据库)

)

)

给C#)