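Downloading ready-made mall source code from the internet

The goal this time is to get familiar with calling the HDFS API, not to write front-end or back-end code, so I simply downloaded an existing project. I picked the code where the front end and back end live in one project, which makes testing easier. Download address:

GitHub - newbee-ltd/newbee-mall: 🔥 🎉 newbee-mall is an e-commerce system that includes a basic version (Spring Boot + Thymeleaf), a front-end/back-end separated version (Spring Boot + Vue 3 + Element-Plus + Vue-Router 4 + Pinia + Vant 4), a flash-sale version, a Go version, and a microservice version (Spring Cloud Alibaba + Nacos + Sentinel + Seata + Spring Cloud Gateway + OpenFeign + ELK). The storefront includes the home portal, product categories, new arrivals, home carousel, product recommendations, product search, product display, shopping cart, checkout, order flow, personal order management, member center, help center, and other modules. The admin system includes the data dashboard, carousel management, product management, order management, member management, category management, settings, and other modules.

I downloaded the first one, the basic version. Its front end and back end are not separated, and it is the simplest version, with no microservices or distributed components.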

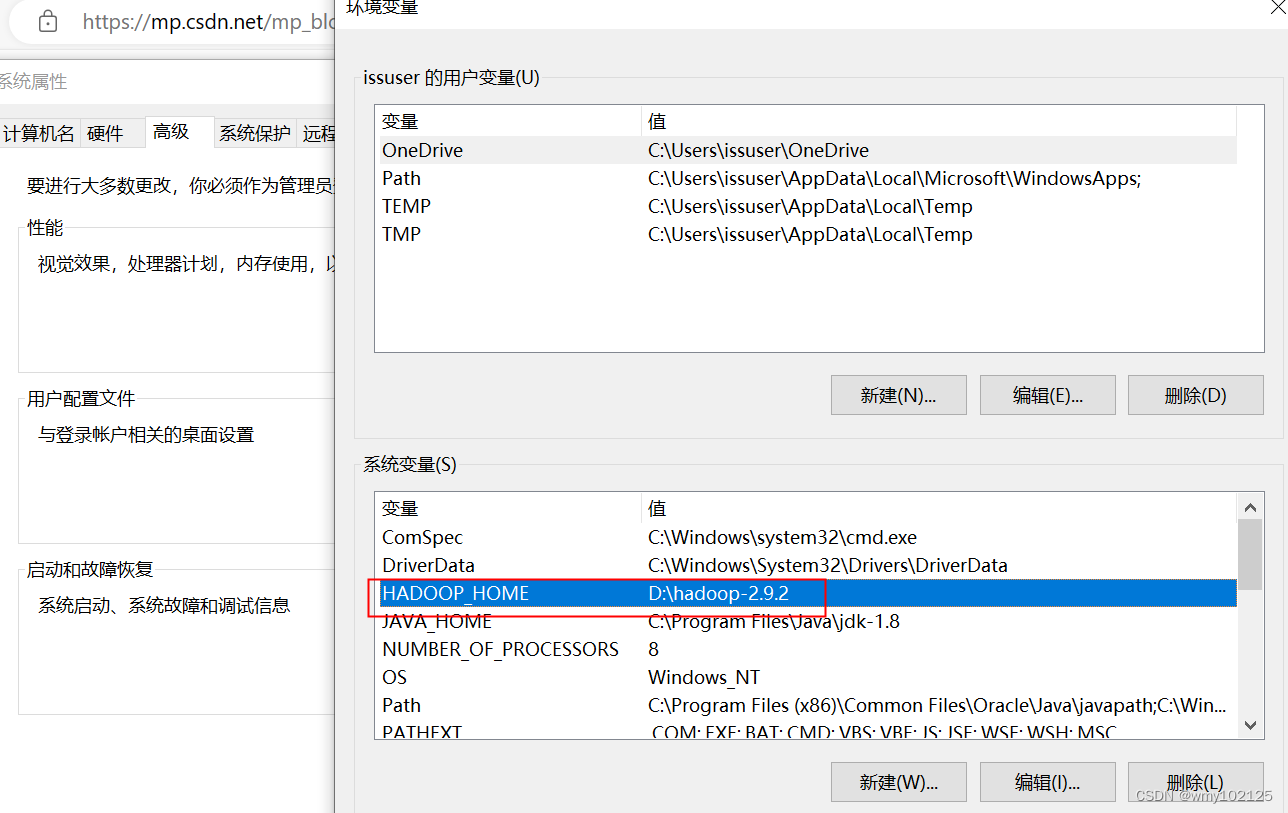

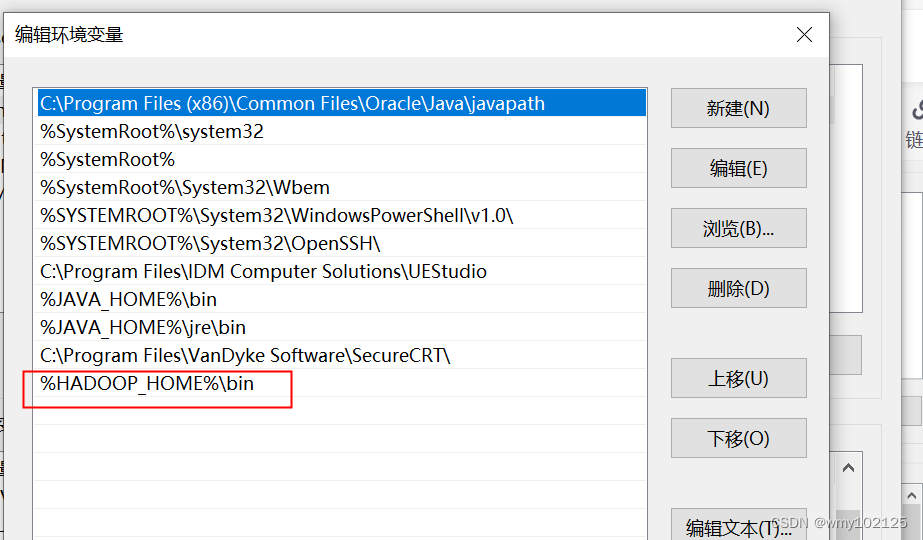

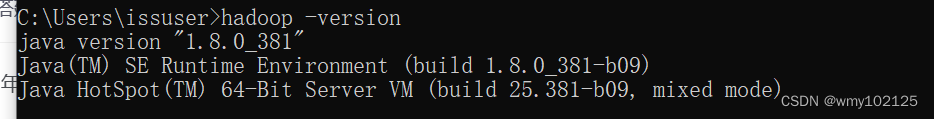

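Configure the Hadoop environment variables on the local machine.

Otherwise IDEA reports an error when it connects to HDFS. The error does not stop the data from being stored, but it is still better to configure them.

Official download URL: http://archive.apache.org/dist/hadoop/common/hadoop-2.9.2/hadoop-2.9.2.tar.gz

My Windows machine had no tool for extracting tar archives. I extracted hadoop-2.9.2.tar.gz on the virtual machine instead, re-packed it with zip, and downloaded the zip to Windows.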

Extract the archive into /home/admin:

```shell
tar -zxvf hadoop-2.9.2.tar.gz -C /home/admin
```

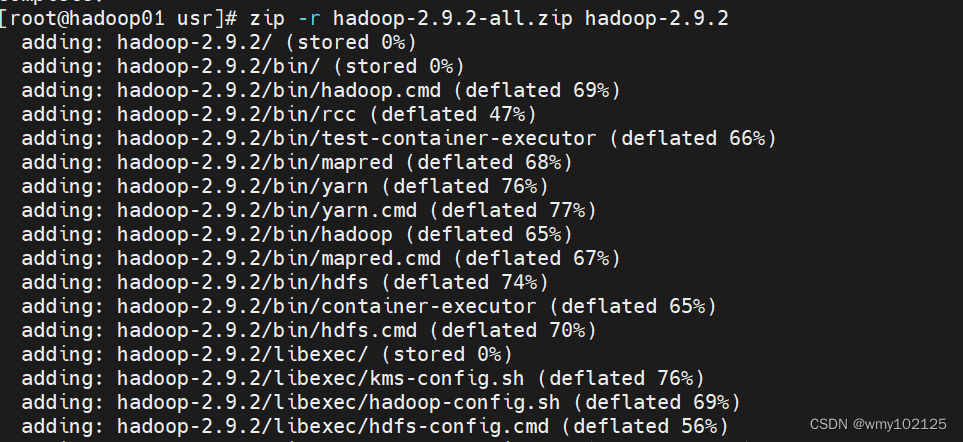

Go to that directory and zip it:

```shell
cd /home/admin
zip -r hadoop-2.9.2-all.zip hadoop-2.9.2
```

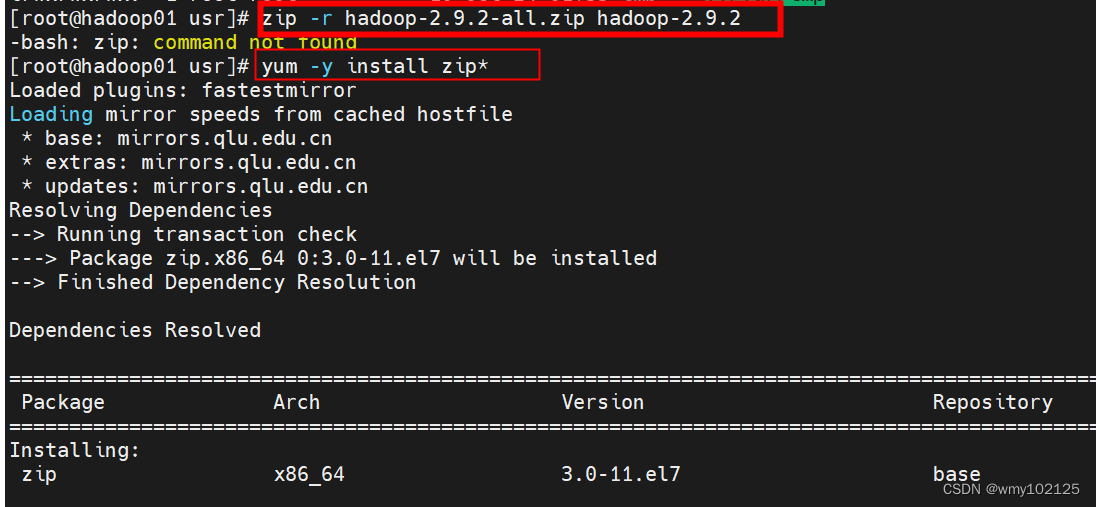

If the zip command is not installed, install it first:

```shell
yum -y install zip*
```

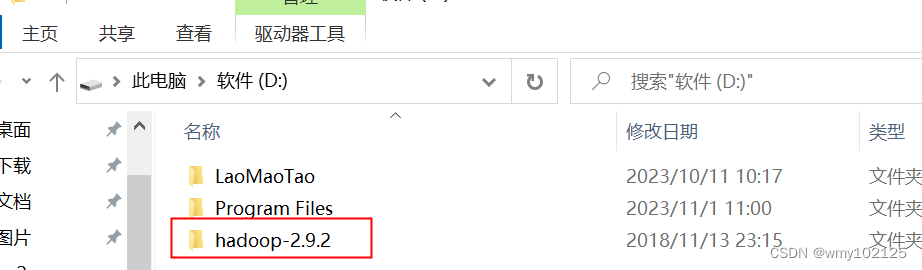

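Download the zip to the Windows machine, extract it, and configure the environment variables.

Do not put it in a directory whose path contains spaces, such as Program Files. I put it there at first. After I configured the environment variables, Hadoop could not find its directory. It reported an error with the path `D:Program`, which is the path cut off at the space.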

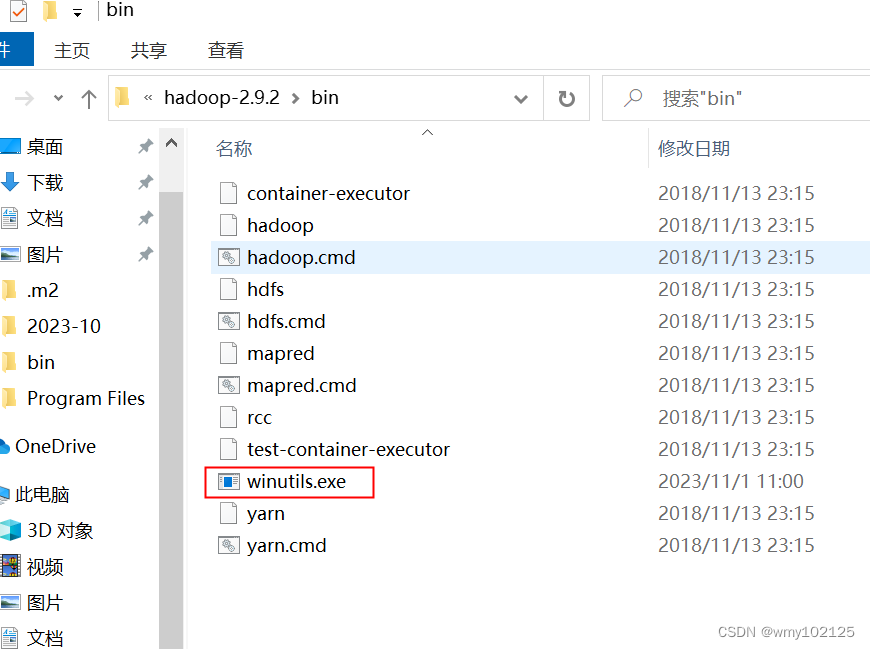

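Download the winutils.exe file that Hadoop needs on Windows.

Hadoop is written mainly for Linux, and winutils.exe emulates the Linux file and directory environment that Hadoop expects. So when Hadoop runs on Windows, it needs this helper program. Put winutils.exe into the bin directory of the extracted hadoop-2.9.2 folder.

Download URL: https://raw.githubusercontent.com/cdarlint/winutils/master/hadoop-2.9.2/bin/winutils.exe

winutils.exe for other Hadoop versions: https://github.com/cdarlint/winutils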

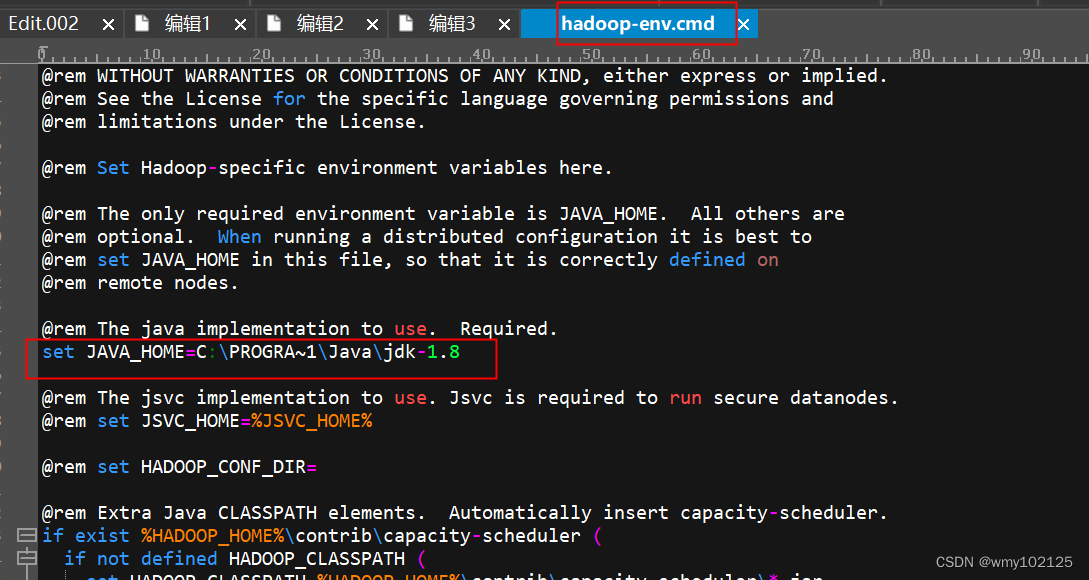

Edit `D:\hadoop-2.9.2\etc\hadoop\hadoop-env.cmd`.

Once the configuration is done, restart IDEA so that it reloads the Hadoop configuration.

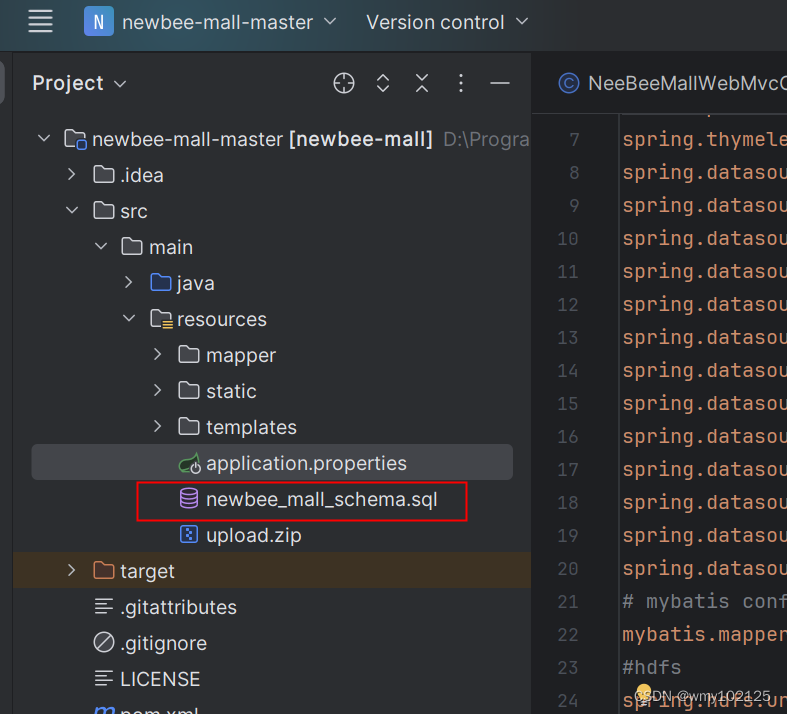

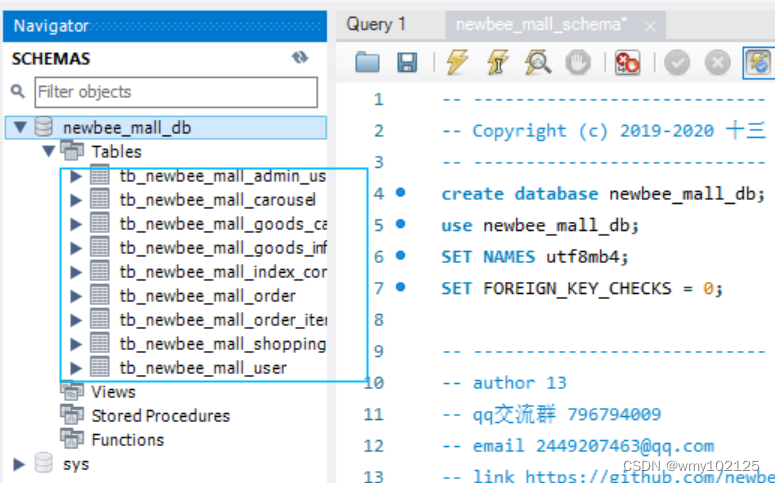
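You can catch the two mistakes described above before restarting IDEA: a path with spaces and a missing winutils.exe. One option is a small stdlib-only check like the one below. `HadoopEnvCheck` is a hypothetical helper, not part of Hadoop. The rules it checks are simply the ones from this article. It reads the `hadoop.home.dir` system property first and falls back to the `HADOOP_HOME` environment variable. As far as I know, Hadoop 2.x's client looks them up in the same order.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of Hadoop): checks a Hadoop home directory against
// the rules from this article: no spaces in the path, bin\winutils.exe present.
class HadoopEnvCheck {

    // Returns human-readable problems; an empty list means the setup looks fine.
    static List<String> problems(String hadoopHome) {
        List<String> problems = new ArrayList<>();
        if (hadoopHome == null || hadoopHome.trim().isEmpty()) {
            problems.add("HADOOP_HOME (or -Dhadoop.home.dir) is not set");
            return problems;
        }
        if (hadoopHome.contains(" ")) {
            problems.add("Path contains a space: " + hadoopHome);
        }
        File home = new File(hadoopHome);
        if (!home.isDirectory()) {
            problems.add("Not a directory: " + hadoopHome);
        }
        File winutils = new File(new File(home, "bin"), "winutils.exe");
        if (!winutils.isFile()) {
            problems.add("Missing " + winutils.getPath());
        }
        return problems;
    }

    public static void main(String[] args) {
        // System property first, then the environment variable
        String home = System.getProperty("hadoop.home.dir", System.getenv("HADOOP_HOME"));
        List<String> found = problems(home);
        if (found.isEmpty()) {
            System.out.println("Hadoop home looks OK: " + home);
        } else {
            found.forEach(p -> System.out.println("Problem: " + p));
        }
    }
}
```

Run it from a terminal opened after setting the variables, because already-running programs (including IDEA) do not see new environment variables.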

With the project source code downloaded, first prepare the database by importing its tables.

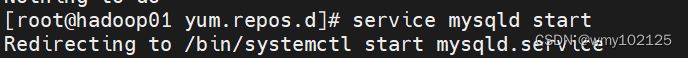

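Start your virtual machine, then start MySQL on it:

```shell
service mysqld start
```

If MySQL runs on a VM on your own machine, stop MySQL before shutting the VM down. Otherwise it may fail to start next time:

```shell
service mysqld stop
```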

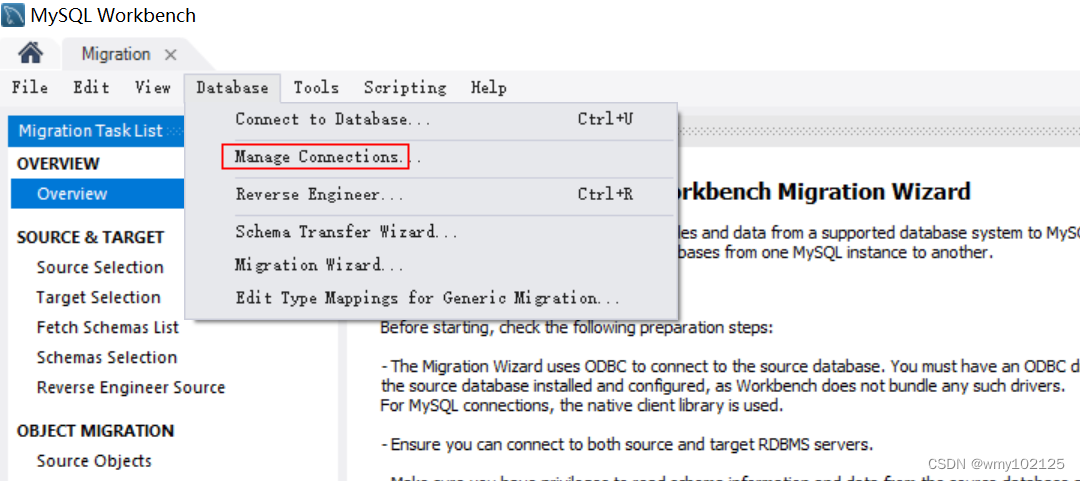

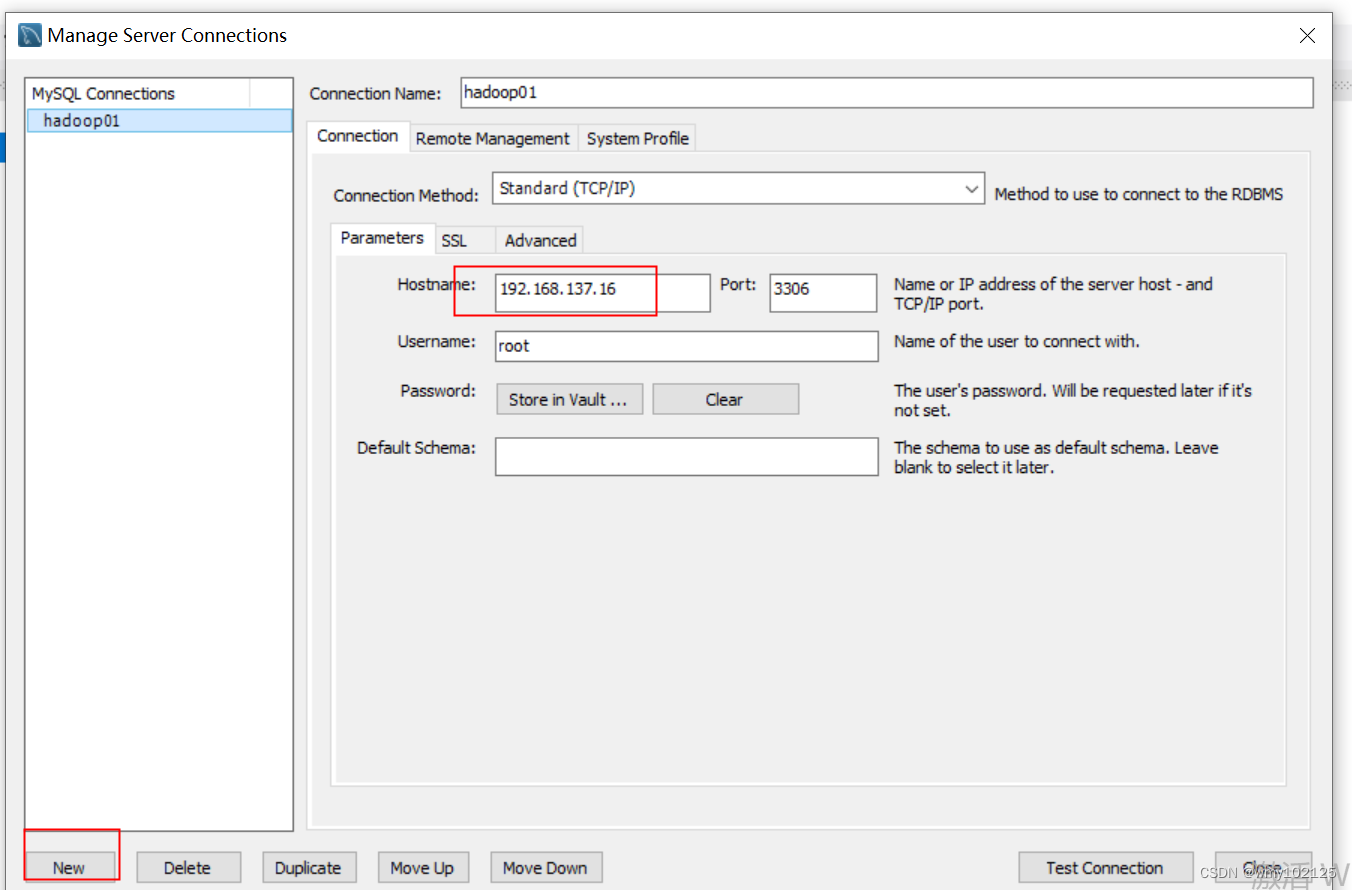

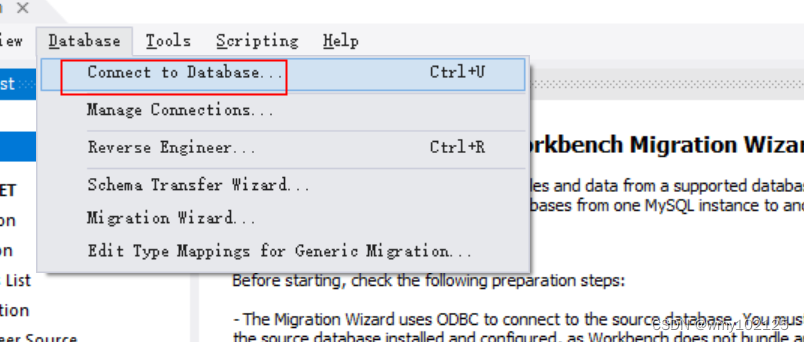

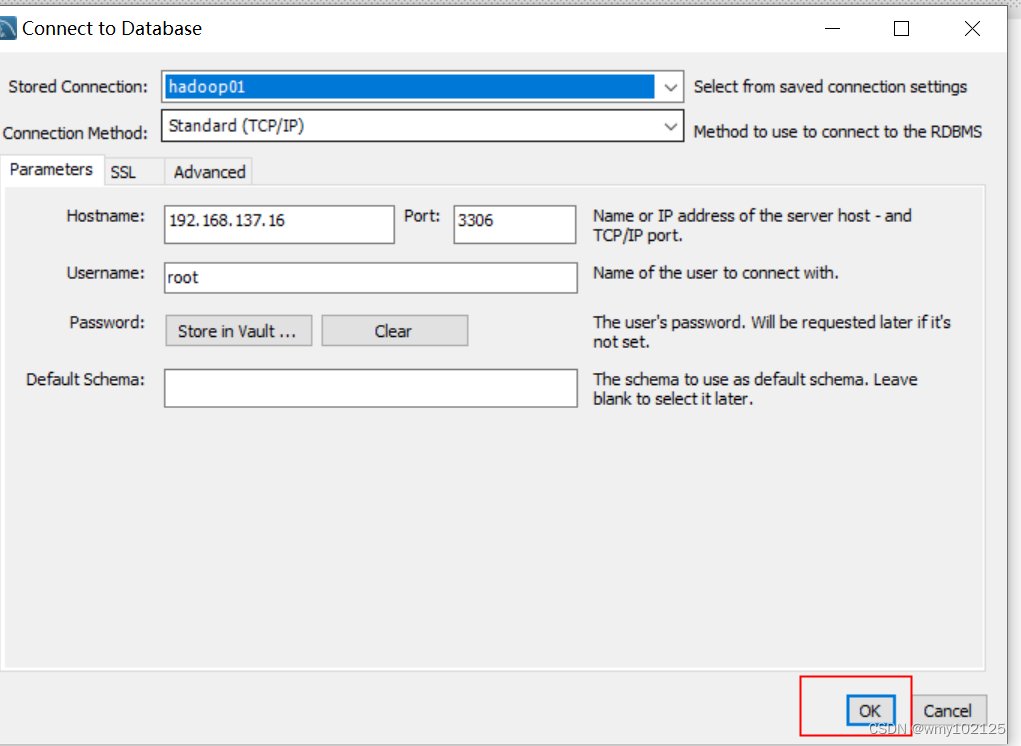

Connect with a MySQL client.

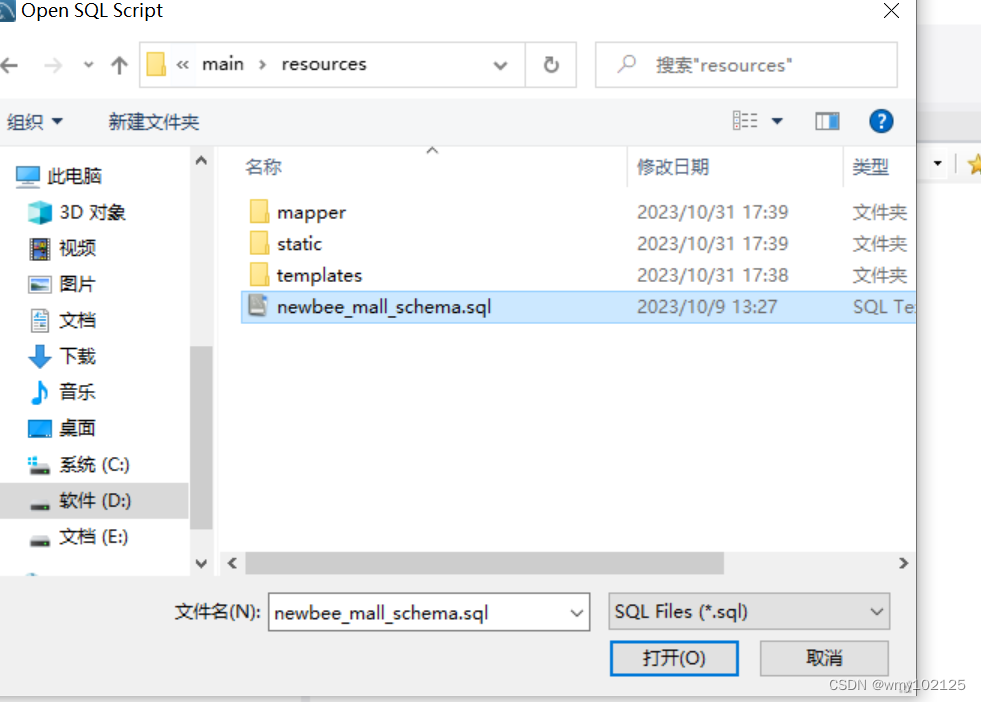

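In the MySQL client, run the mall's SQL script to create the tables and import the data. The script is in:

D:\Program Files\IntelliJ IDEA 2023.2.2\workplace\newbee-mall-master\src\main\resources

After opening it, add two lines at the top: one that creates the database and one that selects it with `USE`.

Then run all the statements.

The tables are now created.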

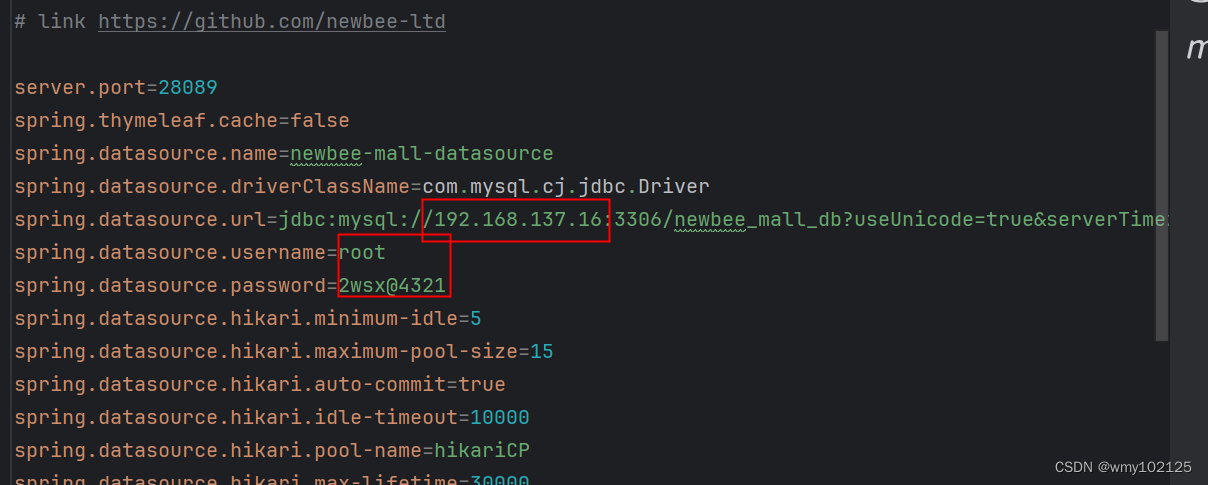

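Change the MySQL connection settings to the IP address of the VM where MySQL is installed. Change the username and password to your own as well.

For installing the virtual machine, installing MySQL on it, and deploying the distributed Hadoop cluster, see my earlier articles.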

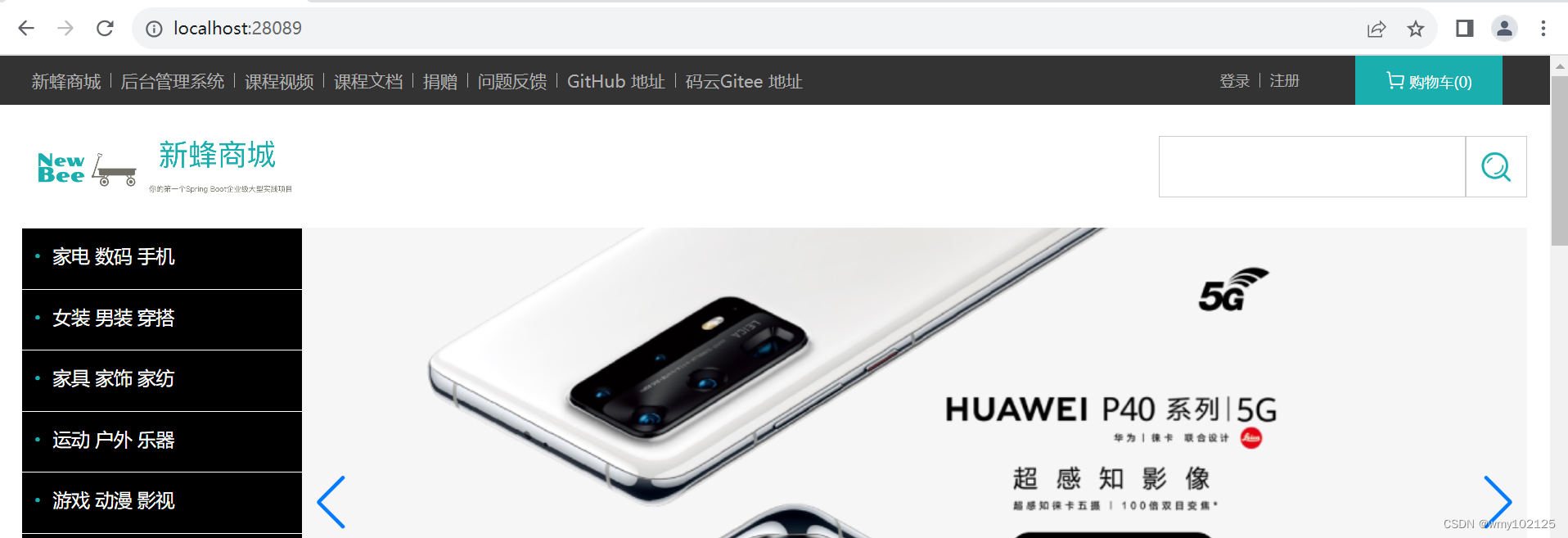

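Start the project and check that the pages work. Verify for yourself that registration, login, and the other features work; in my case everything worked.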

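Start HDFS on your virtual machines. Do not skip this step, or the local project will report an error when it connects at startup. My Hadoop is deployed as a distributed cluster with one NameNode and two DataNodes, so all three machines need to be running.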
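To confirm the cluster is up before starting the mall, you can open a plain TCP connection to the NameNode address in `spring.hdfs.url`. The sketch below assumes that URL has the form `hdfs://host:port`. The class name `NameNodePing`, the example port 9000 and the fallback port 8020 are assumptions, not values from this project. The check only proves that something is listening on the port; it does not speak the HDFS protocol.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

// Hypothetical pre-flight check (not part of Hadoop or newbee-mall): is anything listening
// on the NameNode host/port from spring.hdfs.url? It does not speak the HDFS protocol.
class NameNodePing {

    // Assumption: used when the URL has no explicit port (the usual NameNode RPC default)
    static final int DEFAULT_NAMENODE_PORT = 8020;

    static boolean reachable(String hdfsUrl, int timeoutMillis) {
        URI uri = URI.create(hdfsUrl);
        if (uri.getHost() == null) {
            return false; // not an hdfs://host:port style URL
        }
        int port = uri.getPort() == -1 ? DEFAULT_NAMENODE_PORT : uri.getPort();
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(uri.getHost(), port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or unknown host
        }
    }

    public static void main(String[] args) {
        // Example URL only; pass your own spring.hdfs.url value as the first argument
        String url = args.length > 0 ? args[0] : "hdfs://192.168.137.16:9000";
        boolean ok = reachable(url, 2000);
        System.out.println(url + (ok ? " is reachable" : " is NOT reachable, is HDFS started?"));
    }
}
```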

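Add the HDFS configuration and the DAO-layer connection code. `HdfsDao` opens the `FileSystem`, and `SystemUtil` loads `application.properties`: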

```java
package ltd.newbee.mall.dao;

import ltd.newbee.mall.util.SystemUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.springframework.stereotype.Component;

import java.net.URI;

@Component
public class HdfsDao {

    static Configuration configuration;
    static FileSystem fileSystem;

    static {
        try {
            configuration = new Configuration();
            // Small cluster (two DataNodes): do not try to replace a failed DataNode in the
            // write pipeline, otherwise appends can fail when no replacement is available
            configuration.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
            configuration.set("dfs.client.block.write.replace-datanode-on-failure.enable", "true");
            // Connect as user "admin" to the NameNode URL from application.properties
            fileSystem = FileSystem.get(
                    URI.create(SystemUtil.getProperties().getProperty("spring.hdfs.url")),
                    configuration, "admin");
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    public static Configuration getConfiguration() {
        return configuration;
    }

    public static FileSystem getFileSystem() {
        return fileSystem;
    }
}
```

```java
package ltd.newbee.mall.util;

import java.io.IOException;
import java.io.InputStream;
import java.math.BigInteger;
import java.security.MessageDigest;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Properties;

/**
 * @author 13
 * @qq-group 796794009
 * @email 2449207463@qq.com
 * @link https://github.com/newbee-ltd
 */
public class SystemUtil {

    static Properties properties;

    private SystemUtil() {
    }

    static {
        properties = new Properties();
        // Load application.properties from the classpath with this class's own loader
        try (InputStream in = SystemUtil.class.getClassLoader().getResourceAsStream("application.properties")) {
            properties.load(in);
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    public static Properties getProperties() {
        return properties;
    }

    // Today's date as yyyy-MM-dd, used as the daily log file name
    public static String getToday() {
        SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");
        return dateFormat.format(new Date());
    }
}
```

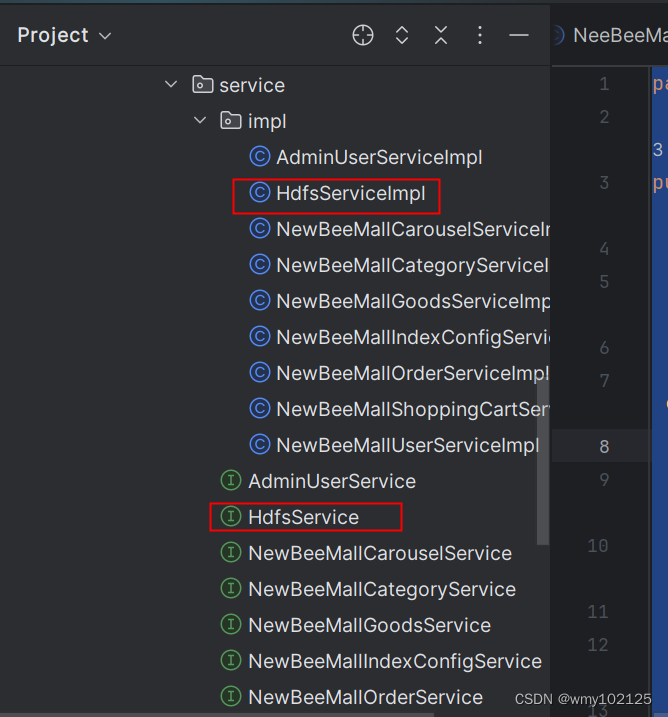
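The code above reads two custom keys from `application.properties`: `spring.hdfs.url` and `spring.hdfs.shoppingLog`. The sketch below shows how they combine into the daily file path that `HdfsServiceImpl` writes to. The sample values are assumptions. `/logs/shoppingLog` matches the directory browsed in the NameNode web UI at the end of this article. Port 9000 is only a common choice, so use whatever your `fs.defaultFS` is. `HdfsLogPaths` is a hypothetical name.

```java
import java.io.IOException;
import java.io.StringReader;
import java.net.URI;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Properties;

// Hypothetical illustration of the two custom keys used by HdfsDao and HdfsServiceImpl.
// Sample values are assumptions; copy yours from application.properties.
class HdfsLogPaths {

    static final String SAMPLE = String.join("\n",
            "spring.hdfs.url=hdfs://192.168.137.16:9000",
            "spring.hdfs.shoppingLog=/logs/shoppingLog");

    // Same naming scheme as HdfsServiceImpl: <shoppingLog>/yyyy-MM-dd.log
    static String dailyLogPath(Properties props, LocalDate day) {
        return props.getProperty("spring.hdfs.shoppingLog") + "/"
                + day.format(DateTimeFormatter.ISO_LOCAL_DATE) + ".log";
    }

    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException(e); // not expected for an in-memory reader
        }
        return props;
    }

    public static void main(String[] args) {
        Properties props = load(SAMPLE);
        URI nameNode = URI.create(props.getProperty("spring.hdfs.url"));
        System.out.println("NameNode: " + nameNode.getHost() + ":" + nameNode.getPort());
        System.out.println("Today's log: " + dailyLogPath(props, LocalDate.now()));
    }
}
```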

Add `HdfsService`. After adding the service, test it yourself to check that the HDFS connection works.

```java
package ltd.newbee.mall.service;

public interface HdfsService {
    /**
     * Write one JSON record to HDFS
     */
    void jsonToHDFS(String line);
}
```

```java
package ltd.newbee.mall.service.impl;

import ltd.newbee.mall.dao.HdfsDao;
import ltd.newbee.mall.service.HdfsService;
import ltd.newbee.mall.util.SystemUtil;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.springframework.stereotype.Service;

import java.io.IOException;
import java.nio.charset.StandardCharsets;

import static ltd.newbee.mall.util.SystemUtil.getToday;

@Service
public class HdfsServiceImpl implements HdfsService {

    // HDFS allows only one writer per file at a time, so serialize concurrent requests in this JVM
    @Override
    public synchronized void jsonToHDFS(String line) {
        FileSystem fileSystem = HdfsDao.getFileSystem();
        // One log file per day: <spring.hdfs.shoppingLog>/yyyy-MM-dd.log
        Path path = new Path(SystemUtil.getProperties().getProperty("spring.hdfs.shoppingLog")
                + "/" + getToday() + ".log");
        try {
            // Append to today's file if it exists, otherwise create it
            FSDataOutputStream os = fileSystem.exists(path) ? fileSystem.append(path) : fileSystem.create(path);
            // Write the line as UTF-8 plus a line break; close=true closes both streams afterwards
            IOUtils.copyBytes(
                    org.apache.commons.io.IOUtils.toInputStream(line + "\r\n", StandardCharsets.UTF_8),
                    os, HdfsDao.getConfiguration(), true);
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }
}
```
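To try the append-or-create logic of `jsonToHDFS` without a cluster, here is a local-disk analogue. It does not use the HDFS API. `java.nio` with `CREATE` and `APPEND` stands in for the `exists`/`append`/`create` calls, and `LocalDailyLog` is a hypothetical name. It keeps the same one-record-per-line layout ending in `\r\n`. It writes UTF-8 explicitly, because Chinese product names would otherwise be encoded with the platform default charset.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical local-disk analogue of HdfsServiceImpl.jsonToHDFS (java.nio, not Hadoop).
// CREATE + APPEND plays the role of "exists(path) ? append(path) : create(path)".
class LocalDailyLog {

    private final Path dir;

    LocalDailyLog(Path dir) {
        this.dir = dir;
    }

    // Appends one record plus \r\n to <dir>/<day>.log, encoded as UTF-8
    synchronized void append(String day, String line) {
        Path file = dir.resolve(day + ".log");
        try {
            Files.createDirectories(dir);
            Files.write(file, (line + "\r\n").getBytes(StandardCharsets.UTF_8),
                    StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Each call adds exactly one line, which is also what the HDFS version should do on every successful add-to-cart request.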

Add the HDFS log entity:

```java
package ltd.newbee.mall.entity;

public class HdfsLog {
    private Long userId;
    private String nickName;
    private String loginName;
    private String address;
    private Long goodsId;
    private String goodsName;
    private String goodsIntro;
    private Long goodsCategoryId;
    private Integer originalPrice;
    private Integer sellingPrice;
    private Integer stockNum;
    private String tag;
    private Byte goodsSellStatus;

    public HdfsLog() {
    }

    public HdfsLog(Long userId, String nickName, String loginName, String address, Long goodsId,
                   String goodsName, String goodsIntro, Long goodsCategoryId, Integer originalPrice,
                   Integer sellingPrice, Integer stockNum, String tag, Byte goodsSellStatus) {
        this.userId = userId;
        this.nickName = nickName;
        this.loginName = loginName;
        this.address = address;
        this.goodsId = goodsId;
        this.goodsName = goodsName;
        this.goodsIntro = goodsIntro;
        this.goodsCategoryId = goodsCategoryId;
        this.originalPrice = originalPrice;
        this.sellingPrice = sellingPrice;
        this.stockNum = stockNum;
        this.tag = tag;
        this.goodsSellStatus = goodsSellStatus;
    }

    public Long getUserId() { return userId; }
    public void setUserId(Long userId) { this.userId = userId; }
    public String getNickName() { return nickName; }
    public void setNickName(String nickName) { this.nickName = nickName; }
    public String getLoginName() { return loginName; }
    public void setLoginName(String loginName) { this.loginName = loginName; }
    public String getAddress() { return address; }
    public void setAddress(String address) { this.address = address; }
    public Long getGoodsId() { return goodsId; }
    public void setGoodsId(Long goodsId) { this.goodsId = goodsId; }
    public String getGoodsName() { return goodsName; }
    public void setGoodsName(String goodsName) { this.goodsName = goodsName; }
    public String getGoodsIntro() { return goodsIntro; }
    public void setGoodsIntro(String goodsIntro) { this.goodsIntro = goodsIntro; }
    public Long getGoodsCategoryId() { return goodsCategoryId; }
    public void setGoodsCategoryId(Long goodsCategoryId) { this.goodsCategoryId = goodsCategoryId; }
    public Integer getOriginalPrice() { return originalPrice; }
    public void setOriginalPrice(Integer originalPrice) { this.originalPrice = originalPrice; }
    public Integer getSellingPrice() { return sellingPrice; }
    public void setSellingPrice(Integer sellingPrice) { this.sellingPrice = sellingPrice; }
    public Integer getStockNum() { return stockNum; }
    public void setStockNum(Integer stockNum) { this.stockNum = stockNum; }
    public String getTag() { return tag; }
    public void setTag(String tag) { this.tag = tag; }
    public Byte getGoodsSellStatus() { return goodsSellStatus; }
    public void setGoodsSellStatus(Byte goodsSellStatus) { this.goodsSellStatus = goodsSellStatus; }
}
```

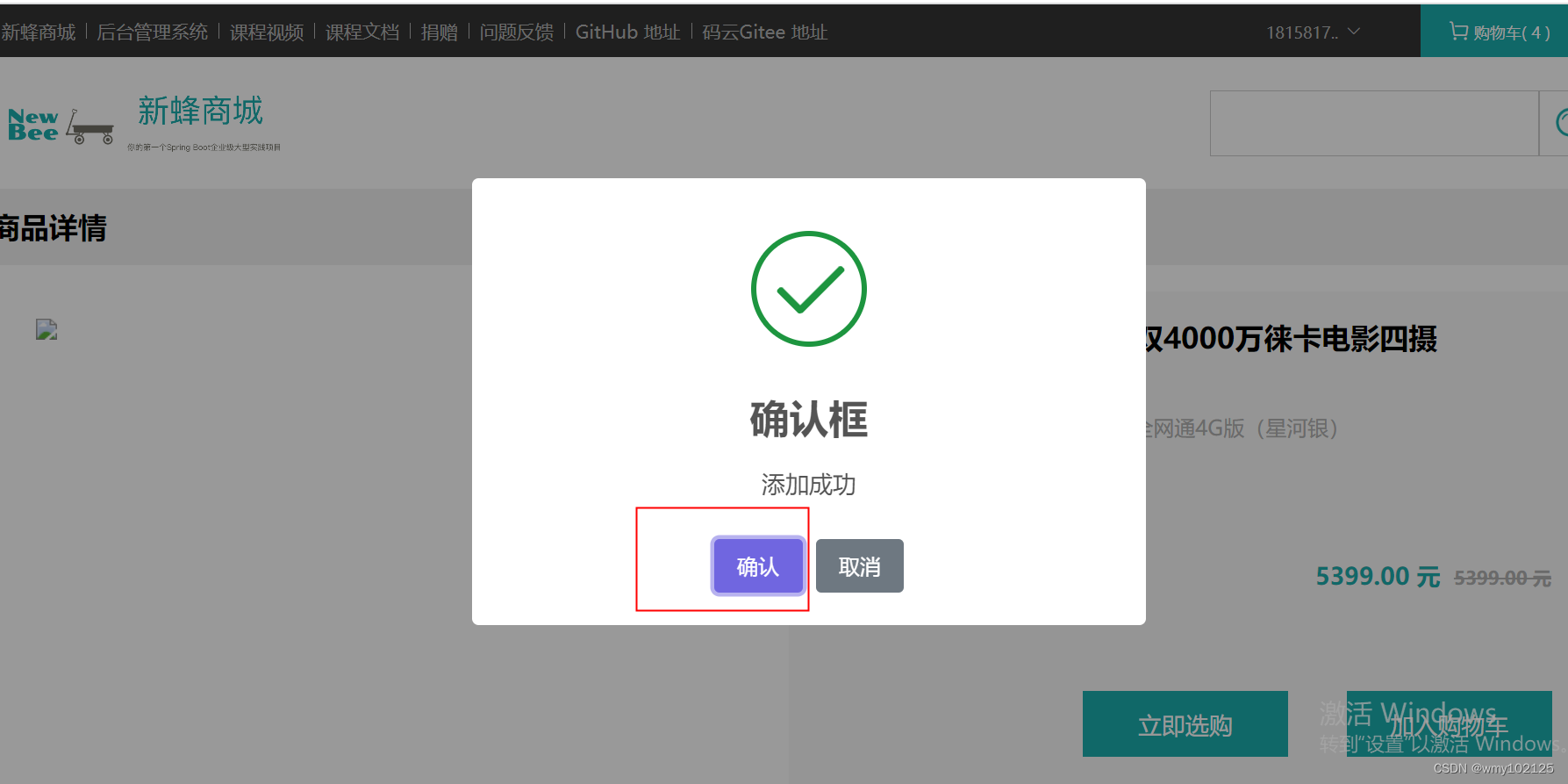

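Call HDFS from the controller. I added the call to the add-to-cart endpoint, after the item has been added successfully.

ShoppingCartController

```java
// Imports used below, in addition to the controller's existing ones (Gson must be on the classpath)
import com.google.gson.FieldNamingPolicy;
import com.google.gson.Gson;
import com.google.gson.GsonBuilder;
import ltd.newbee.mall.entity.HdfsLog;
import ltd.newbee.mall.entity.NewBeeMallGoods;
import ltd.newbee.mall.service.HdfsService;
import ltd.newbee.mall.service.NewBeeMallGoodsService;
import org.springframework.beans.BeanUtils;
import org.springframework.beans.factory.annotation.Autowired;

// Services the method uses (add them to the controller if they are not there yet)
@Autowired
private NewBeeMallGoodsService newBeeMallGoodsService;
@Autowired
private HdfsService hdfsService;

@PostMapping("/shop-cart")
@ResponseBody
public Result saveNewBeeMallShoppingCartItem(@RequestBody NewBeeMallShoppingCartItem newBeeMallShoppingCartItem,
                                             HttpSession httpSession) {
    NewBeeMallUserVO user = (NewBeeMallUserVO) httpSession.getAttribute(Constants.MALL_USER_SESSION_KEY);
    newBeeMallShoppingCartItem.setUserId(user.getUserId());
    String saveResult = newBeeMallShoppingCartService.saveNewBeeMallCartItem(newBeeMallShoppingCartItem);
    // Added successfully
    if (ServiceResultEnum.SUCCESS.getResult().equals(saveResult)) {
        NewBeeMallGoods newBeeMallGoods = newBeeMallGoodsService.getNewBeeMallGoodsById(newBeeMallShoppingCartItem.getGoodsId());
        // Build the HDFS log record: user fields copied from the session user, then goods fields
        HdfsLog hdfsLog = new HdfsLog();
        BeanUtils.copyProperties(user, hdfsLog);
        hdfsLog.setGoodsId(newBeeMallGoods.getGoodsId());
        hdfsLog.setGoodsIntro(newBeeMallGoods.getGoodsIntro());
        hdfsLog.setGoodsName(newBeeMallGoods.getGoodsName());
        hdfsLog.setGoodsCategoryId(newBeeMallGoods.getGoodsCategoryId());
        hdfsLog.setGoodsSellStatus(newBeeMallGoods.getGoodsSellStatus());
        Gson gson = new GsonBuilder()
                .serializeNulls()
                .setDateFormat("yyyy-MM-dd HH:mm:ss")
                .setFieldNamingPolicy(FieldNamingPolicy.IDENTITY)
                .create();
        // Append one JSON line to today's log file in HDFS
        hdfsService.jsonToHDFS(gson.toJson(hdfsLog));
        return ResultGenerator.genSuccessResult();
    }
    // Failed to add
    return ResultGenerator.genFailResult(saveResult);
}
```

Start the project to test it. Open a product and add it to the shopping cart, add several more products, and then look at the log file in HDFS.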

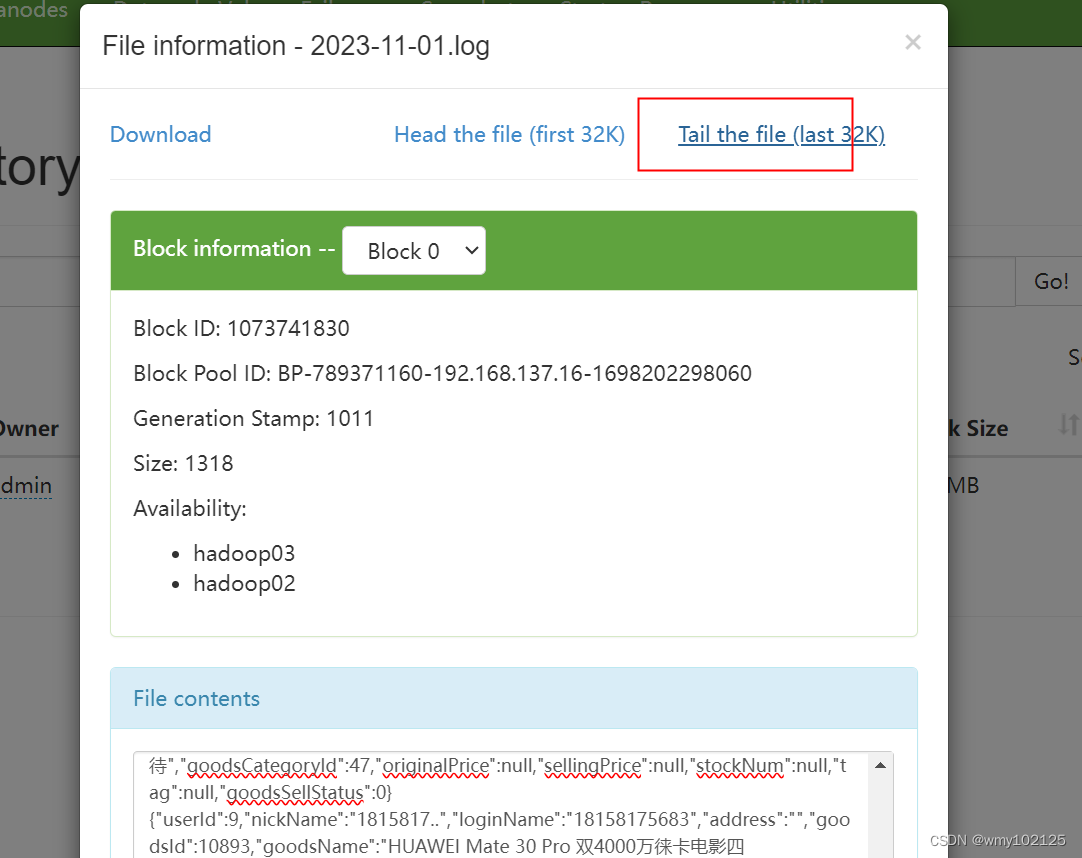

NameNode web UI file browser: http://192.168.137.16:50070/explorer.html#/logs/shoppingLog

Check that each click on "add to cart" appends exactly one more line to the log.
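To check this more precisely than by eye, download today's file and count its records. For example, run `hdfs dfs -get /logs/shoppingLog/<date>.log .` on a cluster node. `ShoppingLogCheck` below is a hypothetical helper, and the default file name in it is a placeholder. It only checks that each non-empty line looks like a single JSON object; it does not actually parse JSON.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical checker for a downloaded copy of the daily shopping log.
class ShoppingLogCheck {

    // One record per non-empty line
    static long countRecords(List<String> lines) {
        return lines.stream().filter(l -> !l.trim().isEmpty()).count();
    }

    // Cheap shape check only: starts with '{' and ends with '}'
    static boolean looksLikeJsonObject(String line) {
        String t = line.trim();
        return t.startsWith("{") && t.endsWith("}");
    }

    public static void main(String[] args) throws IOException {
        Path file = Paths.get(args.length > 0 ? args[0] : "shoppingLog.log"); // placeholder name
        List<String> lines = Files.readAllLines(file, StandardCharsets.UTF_8);
        System.out.println("records: " + countRecords(lines));
        for (int i = 0; i < lines.size(); i++) {
            String line = lines.get(i);
            if (!line.trim().isEmpty() && !looksLikeJsonObject(line)) {
                System.out.println("line " + (i + 1) + " is not a JSON object");
            }
        }
    }
}
```

Compare the record count before and after a few add-to-cart clicks; it should grow by one per click.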
