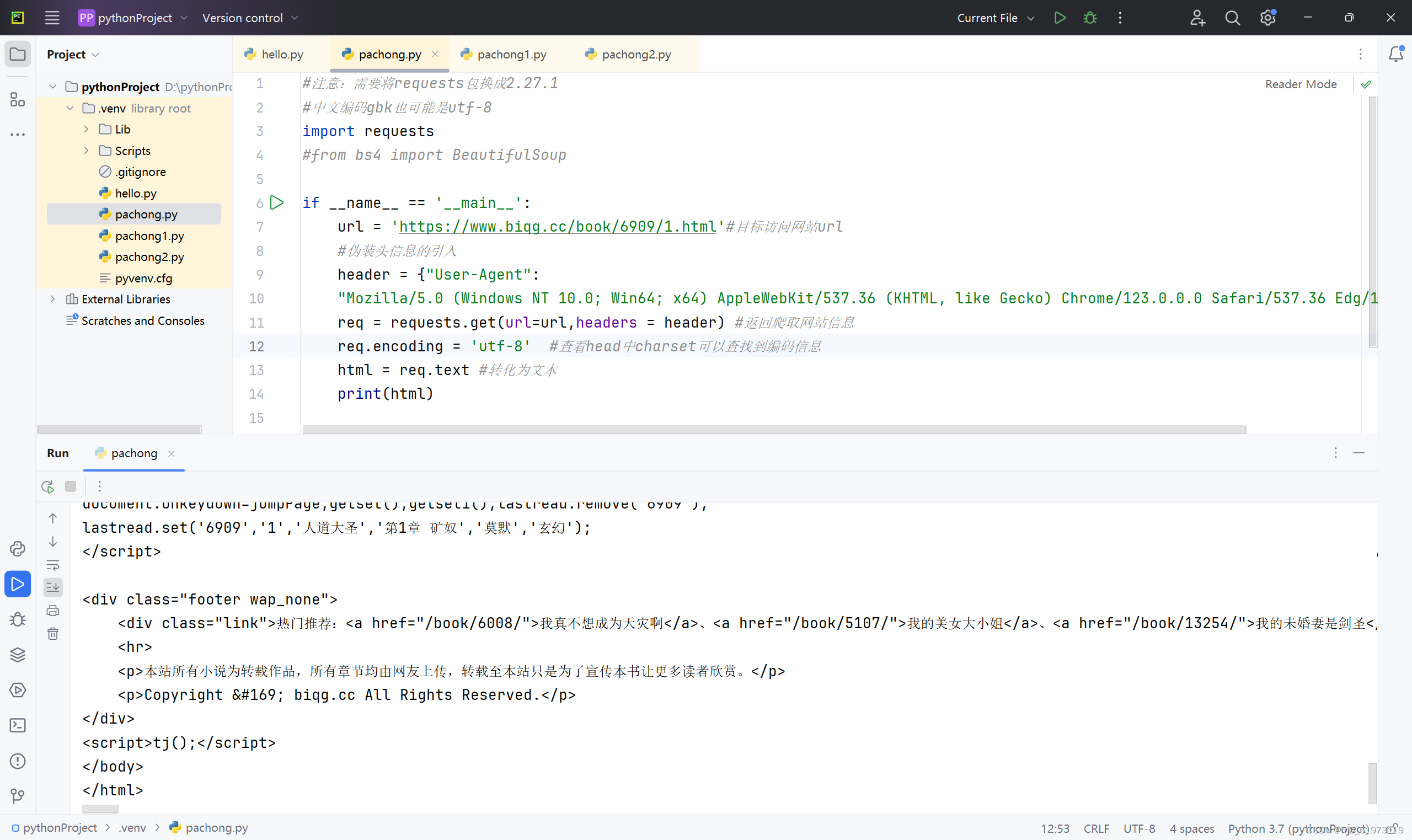

I. Basic example

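    # Note: the requests package needs to be switched to version 2.27.1
    # (for example: pip install requests==2.27.1)
    # Chinese pages may be encoded as gbk or as utf-8

    import requests
    # from bs4 import BeautifulSoup

    if __name__ == '__main__':
        url = 'https://www.biqg.cc/book/6909/1.html'  # URL of the target page
        # Spoofed header information, so the request looks like it comes from a browser
        header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.0.0"}
        req = requests.get(url=url, headers=header)  # fetch the page and return the response
        req.encoding = 'utf-8'  # the charset declared in the page's <head> tells you the encoding
        html = req.text  # decode the response body to text
        print(html)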
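Since the page may be gbk or utf-8, the "look at charset in the head" step can be automated instead of hard-coded. The following is a minimal sketch, not part of the original script: `detect_charset` is a hypothetical helper name, and it assumes the charset declaration appears within the first 2048 bytes of the page.

```python
import re


def detect_charset(raw: bytes, default: str = "utf-8") -> str:
    """Return the charset declared in the page's <head>, or `default` if none is found.

    Hypothetical helper: it only scans the first 2048 bytes for `charset=...`.
    """
    match = re.search(rb'charset=["\']?([\w-]+)', raw[:2048], re.IGNORECASE)
    if match is None:
        return default
    return match.group(1).decode("ascii").lower()


# Two common ways a page declares its encoding.
html5_style = b'<html><head><meta charset="gbk"></head></html>'
legacy_style = b'<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">'

print(detect_charset(html5_style))
print(detect_charset(legacy_style))
print(detect_charset(b"<html><head></head></html>"))
```

In the basic example this could be applied as `req.encoding = detect_charset(req.content)`, where `req.content` is the undecoded response body.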

II. Improved example

    # from bs4 import BeautifulSoup
    # html is the full page text fetched in the previous example
    # bes = BeautifulSoup(html, "lxml")  # parse the page text with the lxml parser
    # text = bes.find("div", id="content"[, class_="<class name>"])
    # find() returns the first "div" tag whose id is "content", with everything inside it;
    # the optional class_ argument selects by class = <some name> instead

    import requests
    from bs4 import BeautifulSoup

    if __name__ == '__main__':
        url = 'https://www.biqg.cc/book/6909/1.html'  # URL of the target page
        header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.0.0"}
        req = requests.get(url=url, headers=header)
        req.encoding = 'utf-8'
        html = req.text
        bes = BeautifulSoup(html, "lxml")
        texts = bes.find("div", class_="content")  # first <div> whose class is "content"
        print(texts)
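To see how `find` behaves with `id` and `class_` without hitting the network, here is a small sketch on an inline HTML string. The sample markup is made up for illustration, and it uses Python's built-in `"html.parser"` instead of `"lxml"` so that no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup

# Made-up sample page, only for illustration.
SAMPLE_HTML = """
<html><body>
  <div id="content" class="Readarea ReadAjax_content">Chapter text</div>
  <div class="footer">Site footer</div>
</body></html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

by_id = soup.find("div", id="content")                # match on the id attribute
by_one_class = soup.find("div", class_="Readarea")    # match on one of the tag's classes
by_full_class = soup.find("div", class_="Readarea ReadAjax_content")  # exact class string
missing = soup.find("div", class_="no-such-class")    # nothing matches

print(by_id.get_text())
print(by_one_class.get_text())
print(by_full_class.get_text())
print(missing)
```

Note that `find` returns `None` when nothing matches, so if `print(texts)` shows `None`, the id or class name probably does not match the page's actual markup.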

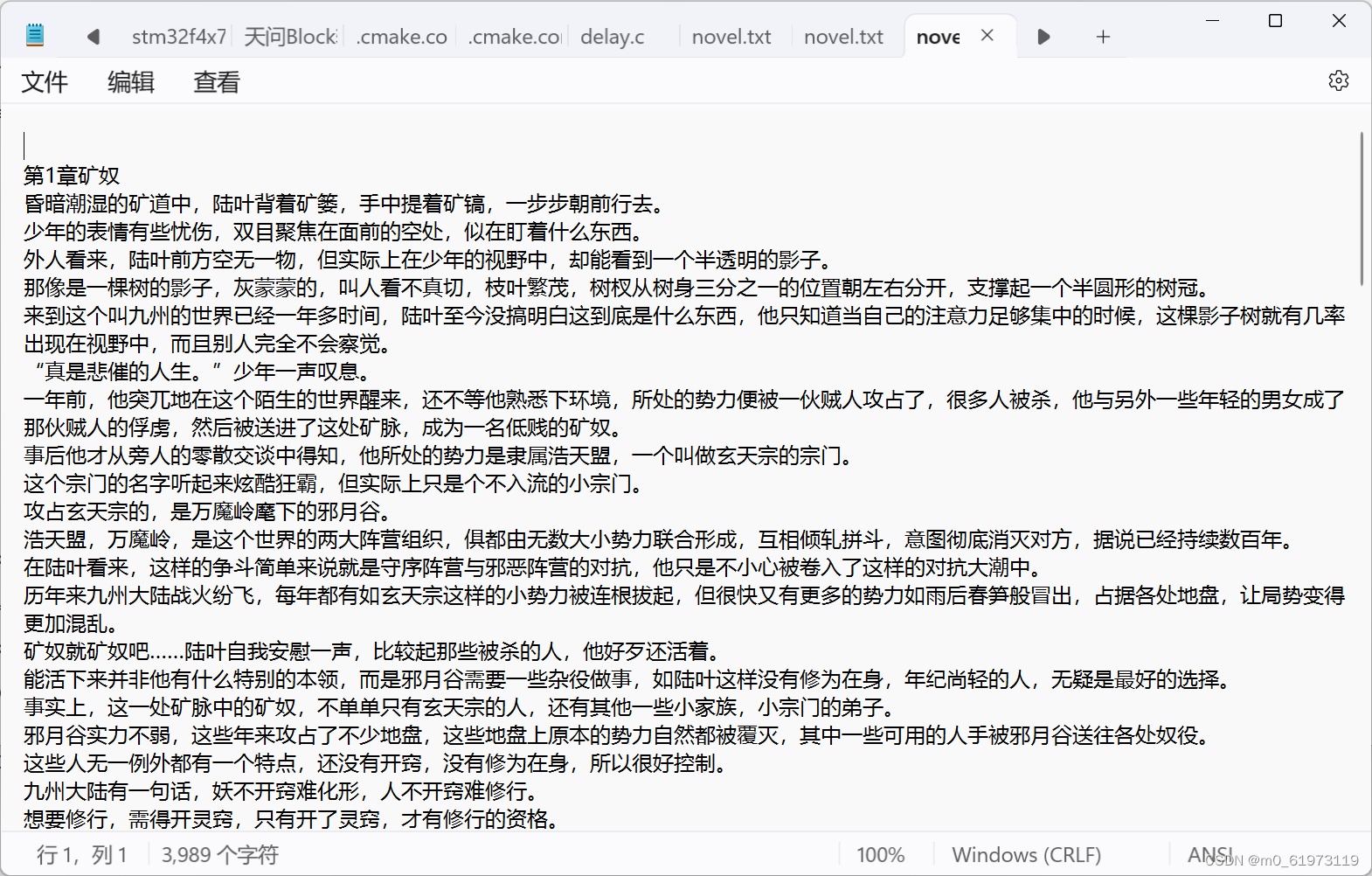

III. Final example

    import requests
    from bs4 import BeautifulSoup

    if __name__ == '__main__':
        url = 'https://www.biqg.cc/book/6909/1.html'  # URL of the target page
        header = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 Edg/123.0.0.0"}
        req = requests.get(url=url, headers=header)
        req.encoding = 'utf-8'
        html = req.text
        bes = BeautifulSoup(html, "lxml")
        texts = bes.find("div", class_="Readarea ReadAjax_content")
        # print(texts)
        # texts.text.split(sep) cuts texts.text at every occurrence of sep and returns
        # a list of strings, stored in texts_list.
        # Alternative for pages that separate paragraphs with four non-breaking spaces:
        # texts_list = texts.text.split("\xa0" * 4)
        texts_list = texts.text.split("\u3000" * 2)  # split on two full-width spaces
        # print(texts_list)
        # Open the output file for writing and write the list to it line by line.
        # encoding="utf-8" avoids encoding errors when the system default is not utf-8.
        with open("D:/novel.txt", "w", encoding="utf-8") as file:
            for line in texts_list:
                file.write(line + "\n")
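The split-and-save step can also be tried on its own, without a request. The sketch below is an assumption-laden variant rather than the script above: `split_paragraphs` and `save_paragraphs` are hypothetical helper names, the sample text is made up, and it accepts either separator (four `\xa0` or two `\u3000`) in one pass while dropping empty pieces.

```python
import re
import tempfile
from pathlib import Path


def split_paragraphs(text: str) -> list[str]:
    """Split on four non-breaking spaces or two full-width spaces; drop empty pieces."""
    parts = re.split(r"(?:\xa0{4}|\u3000{2})", text)
    return [part.strip() for part in parts if part.strip()]


def save_paragraphs(paragraphs: list[str], path: Path) -> None:
    """Write one paragraph per line, always as utf-8."""
    path.write_text("\n".join(paragraphs) + "\n", encoding="utf-8")


# Made-up chapter text using both kinds of separator.
sample = "\u3000\u3000First paragraph.\u3000\u3000Second paragraph.\xa0\xa0\xa0\xa0Third paragraph."
paragraphs = split_paragraphs(sample)
print(paragraphs)

# Write to a temporary directory here; the script above uses D:/novel.txt.
out_path = Path(tempfile.mkdtemp()) / "novel.txt"
save_paragraphs(paragraphs, out_path)
print(out_path.read_text(encoding="utf-8"))
```

Filtering out empty pieces avoids the blank first line that a plain `split` produces when the text starts with the separator.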
