```python
targets[positive_indices, assigned_annotations[positive_indices, 4].long()] = 1

# For each positive anchor, set the column of its assigned class to 1.

# aim = torch.randint(0, 1, (1, 80))   # the upper bound is exclusive, so every entry is 0
# tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
#          0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
#          0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
#          0, 0, 0, 0, 0, 0, 0, 0]])

# aim[0, 12] = 1
# tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
#          0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
#          0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
#          0, 0, 0, 0, 0, 0, 0, 0]])
```
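To see what this advanced-indexing assignment does on a whole `targets` matrix, here is a minimal toy sketch. The sizes (5 anchors, 4 classes), the positive mask and the labels are made up for illustration; only the two assignment lines mirror the source.

```python
import torch

# Toy setup (hypothetical sizes): 5 anchors, 4 classes; -1 means "ignore".
num_anchors, num_classes = 5, 4
targets = torch.full((num_anchors, num_classes), -1.0)

# Pretend anchors 0, 2 and 4 are positives.
positive_indices = torch.tensor([True, False, True, False, True])
# Class label of the annotation assigned to each anchor (column 4 in the source).
assigned_labels = torch.tensor([3.0, 0.0, 1.0, 2.0, 3.0])

# Positives: clear the whole row, then set the column of the assigned class to 1.
# The boolean mask selects rows [0, 2, 4]; the long tensor supplies one column per row.
targets[positive_indices, :] = 0
targets[positive_indices, assigned_labels[positive_indices].long()] = 1
print(targets)
```

Rows 1 and 3 stay at -1, while each positive row becomes a one-hot vector for its class.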

`torch.where(condition, x, y)` returns a tensor that takes each element from `x` where `condition` is True and from `y` elsewhere:

```python
import torch

condition = torch.tensor([True, False, True, False])
x = torch.tensor([1, 2, 3, 4])
y = torch.tensor([10, 20, 30, 40])

result = torch.where(condition, x, y)
print(result)
# tensor([ 1, 20,  3, 40])
```

`torch.eq` compares elementwise and returns a boolean mask:

```python
targets = torch.tensor([1, 0, 1, 0, 1])
torch.eq(targets, 1.)
# Out[20]: tensor([ True, False,  True, False,  True])
```
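In the loss, `torch.eq` and `torch.where` are combined to pick per-entry weights. The sketch below replays those lines on one anchor with three class columns: column 0 is the true class (target 1), column 1 is a negative (0), column 2 is ignored (-1). The probabilities are made-up values, not from the source.

```python
import torch

alpha, gamma = 0.25, 2.0
targets = torch.tensor([[1.0, 0.0, -1.0]])
classification = torch.tensor([[0.9, 0.2, 0.5]])  # clamped sigmoid outputs (toy values)

alpha_factor = torch.ones_like(targets) * alpha
# alpha where the target is 1, (1 - alpha) everywhere else
alpha_factor = torch.where(torch.eq(targets, 1.), alpha_factor, 1. - alpha_factor)
# (1 - p) where the target is 1, p everywhere else
focal_weight = torch.where(torch.eq(targets, 1.), 1. - classification, classification)
focal_weight = alpha_factor * torch.pow(focal_weight, gamma)

bce = -(targets * torch.log(classification) + (1.0 - targets) * torch.log(1.0 - classification))
cls_loss = focal_weight * bce
# Entries with target -1 contribute nothing, as in the source's torch.ne(targets, -1.0)
cls_loss = torch.where(torch.ne(targets, -1.0), cls_loss, torch.zeros_like(cls_loss))
print(alpha_factor)
print(cls_loss)
```

The well-classified positive (p = 0.9) is down-weighted by (1 - 0.9)^2, which is the point of the focal term.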

RetinaNet FocalLoss source code explained
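As a reading aid, here is the per-entry classification loss that the code below appears to compute, written in the usual focal-loss notation. Here $p$ is the clamped sigmoid output (`classification`) and $y$ is the corresponding entry of `targets`. The mapping is my interpretation: `alpha_factor` is $\alpha_t$, `focal_weight` before the multiplication is $1 - p_t$, and `bce` is $-\log(p_t)$.

$$
p_t =
\begin{cases}
p & \text{if } y = 1 \\
1 - p & \text{if } y = 0
\end{cases}
\qquad
\alpha_t =
\begin{cases}
\alpha & \text{if } y = 1 \\
1 - \alpha & \text{if } y = 0
\end{cases}
$$

$$
\mathrm{FL}(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \log(p_t), \qquad \alpha = 0.25,\; \gamma = 2
$$

$$
L_{\text{cls}} = \frac{1}{\max(N_{\text{pos}}, 1)} \sum_{(i, c)\,:\, y_{ic} \neq -1} \mathrm{FL}(p_{t,ic})
$$

When an image has no annotations, the code treats every entry as $y = 0$ and appends the plain sum without dividing by $N_{\text{pos}}$.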

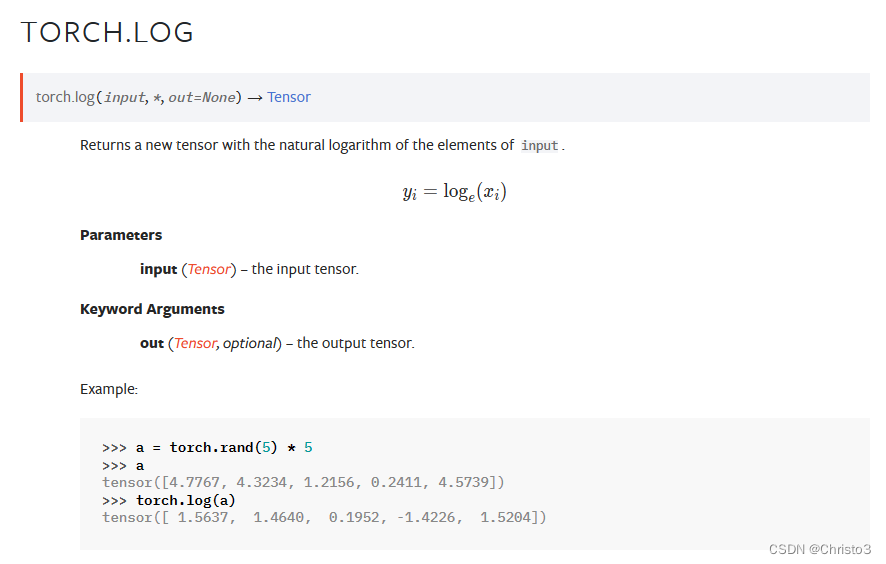

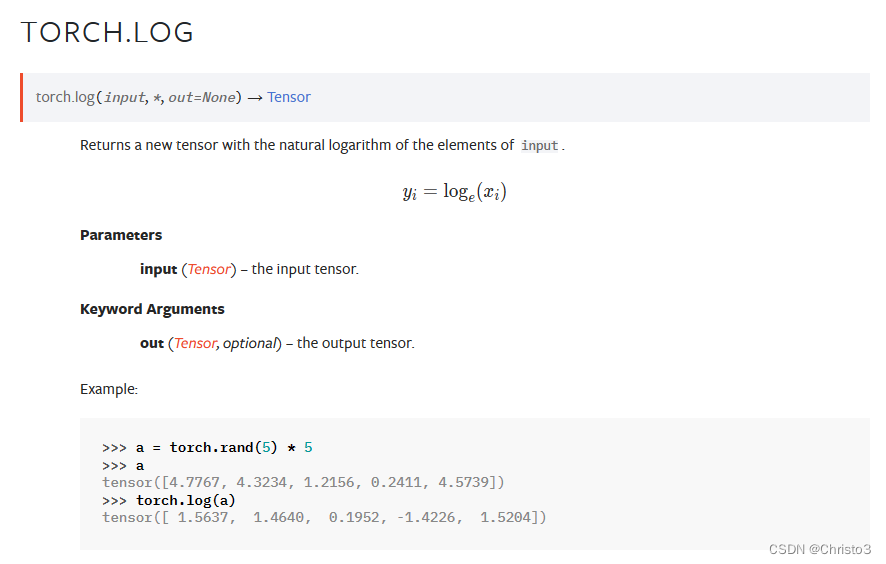
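The `forward` method below calls `calc_iou`, which this excerpt does not show. A minimal sketch of a pairwise IoU function with the shape the code expects (num_anchors x num_annotations, boxes as `[x1, y1, x2, y2]`) is given here; it is a reconstruction for illustration, not necessarily the repository's exact code.

```python
import torch

def calc_iou(a: torch.Tensor, b: torch.Tensor) -> torch.Tensor:
    """Pairwise IoU between boxes a (N x 4) and b (M x 4), format [x1, y1, x2, y2].

    Returns an N x M tensor whose entry (i, j) is IoU(a[i], b[j]).
    """
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])  # (N,)
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])  # (M,)

    # Intersection width/height via broadcasting: (N, 1) against (M,) -> (N, M)
    iw = torch.min(a[:, 2:3], b[:, 2]) - torch.max(a[:, 0:1], b[:, 0])
    ih = torch.min(a[:, 3:4], b[:, 3]) - torch.max(a[:, 1:2], b[:, 1])
    iw = torch.clamp(iw, min=0)  # no overlap -> 0
    ih = torch.clamp(ih, min=0)
    intersection = iw * ih

    union = area_a[:, None] + area_b[None, :] - intersection
    union = torch.clamp(union, min=1e-8)  # avoid division by zero
    return intersection / union


anchors = torch.tensor([[0.0, 0.0, 10.0, 10.0],
                        [5.0, 5.0, 15.0, 15.0]])
gt = torch.tensor([[0.0, 0.0, 10.0, 10.0],
                   [20.0, 20.0, 30.0, 30.0]])
iou = calc_iou(anchors, gt)
IoU_max, IoU_argmax = torch.max(iou, dim=1)  # best annotation per anchor
print(iou)
```

With the thresholds used in the loss, anchor 0 (best IoU 1.0) would be a positive, while anchor 1 (best IoU 25/175, about 0.14, below 0.4) would be a negative.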

```python
import torch
import torch.nn as nn

# calc_iou(a, b) is defined elsewhere in the same file (not shown in this excerpt);
# it returns the pairwise IoU matrix of shape num_anchors x num_annotations.


class FocalLoss(nn.Module):
    #def __init__(self):

    def forward(self, classifications, regressions, anchors, annotations):
        alpha = 0.25
        gamma = 2.0
        batch_size = classifications.shape[0]
        classification_losses = []
        regression_losses = []

        anchor = anchors[0, :, :]

        anchor_widths = anchor[:, 2] - anchor[:, 0]
        anchor_heights = anchor[:, 3] - anchor[:, 1]
        anchor_ctr_x = anchor[:, 0] + 0.5 * anchor_widths
        anchor_ctr_y = anchor[:, 1] + 0.5 * anchor_heights

        for j in range(batch_size):

            classification = classifications[j, :, :]
            regression = regressions[j, :, :]

            bbox_annotation = annotations[j, :, :]
            # Drop padding rows (class label -1)
            bbox_annotation = bbox_annotation[bbox_annotation[:, 4] != -1]

            classification = torch.clamp(classification, 1e-4, 1.0 - 1e-4)

            if bbox_annotation.shape[0] == 0:
                # No objects in this image: every entry is a negative
                if torch.cuda.is_available():
                    alpha_factor = torch.ones(classification.shape).cuda() * alpha

                    alpha_factor = 1. - alpha_factor
                    focal_weight = classification
                    focal_weight = alpha_factor * torch.pow(focal_weight, gamma)

                    bce = -(torch.log(1.0 - classification))

                    # cls_loss = focal_weight * torch.pow(bce, gamma)
                    cls_loss = focal_weight * bce
                    classification_losses.append(cls_loss.sum())
                    regression_losses.append(torch.tensor(0).float().cuda())

                else:
                    alpha_factor = torch.ones(classification.shape) * alpha

                    alpha_factor = 1. - alpha_factor
                    focal_weight = classification
                    focal_weight = alpha_factor * torch.pow(focal_weight, gamma)

                    bce = -(torch.log(1.0 - classification))

                    # cls_loss = focal_weight * torch.pow(bce, gamma)
                    cls_loss = focal_weight * bce
                    classification_losses.append(cls_loss.sum())
                    regression_losses.append(torch.tensor(0).float())

                continue

            IoU = calc_iou(anchors[0, :, :], bbox_annotation[:, :4])  # num_anchors x num_annotations

            IoU_max, IoU_argmax = torch.max(IoU, dim=1)  # each of shape (num_anchors,)
            # IoU_max: per-row maximum, i.e. each anchor's best IoU with any annotation
            # IoU_argmax: index of that annotation

            #import pdb
            #pdb.set_trace()

            # compute the loss for classification
            targets = torch.ones(classification.shape) * -1  # -1 = ignore

            if torch.cuda.is_available():
                targets = targets.cuda()

            # Anchors whose best IoU is below 0.4 are negatives: all class columns set to 0
            targets[torch.lt(IoU_max, 0.4), :] = 0

            # True where IoU_max >= 0.5 (positive anchors)
            positive_indices = torch.ge(IoU_max, 0.5)

            num_positive_anchors = positive_indices.sum()

            # For each anchor, the annotation it overlaps most
            assigned_annotations = bbox_annotation[IoU_argmax, :]

            targets[positive_indices, :] = 0
            # For each positive anchor, set the column of its assigned class to 1
            # (e.g. class 12 of 80: a row of zeros with a 1 at index 12)
            targets[positive_indices, assigned_annotations[positive_indices, 4].long()] = 1

            if torch.cuda.is_available():
                alpha_factor = torch.ones(targets.shape).cuda() * alpha
            else:
                alpha_factor = torch.ones(targets.shape) * alpha

            # alpha where the target is 1, 1 - alpha elsewhere
            alpha_factor = torch.where(torch.eq(targets, 1.), alpha_factor, 1. - alpha_factor)
            focal_weight = torch.where(torch.eq(targets, 1.), 1. - classification, classification)
            focal_weight = alpha_factor * torch.pow(focal_weight, gamma)

            # Binary cross-entropy (BCE); log is the natural logarithm (base e)
            bce = -(targets * torch.log(classification) + (1.0 - targets) * torch.log(1.0 - classification))

            # cls_loss = focal_weight * torch.pow(bce, gamma)
            # Applied elementwise to each of the 80 class columns
            cls_loss = focal_weight * bce

            # Ignored entries (target -1, i.e. 0.4 <= IoU < 0.5) contribute no loss
            if torch.cuda.is_available():
                cls_loss = torch.where(torch.ne(targets, -1.0), cls_loss, torch.zeros(cls_loss.shape).cuda())
            else:
                cls_loss = torch.where(torch.ne(targets, -1.0), cls_loss, torch.zeros(cls_loss.shape))

            # Normalize by the number of positive anchors (at least 1)
            classification_losses.append(cls_loss.sum()/torch.clamp(num_positive_anchors.float(), min=1.0))

            # compute the loss for regression

            if positive_indices.sum() > 0:
                assigned_annotations = assigned_annotations[positive_indices, :]

                # Boolean-mask indexing: keep only the positive anchors' sizes and centers
                anchor_widths_pi = anchor_widths[positive_indices]
                anchor_heights_pi = anchor_heights[positive_indices]
                anchor_ctr_x_pi = anchor_ctr_x[positive_indices]
                anchor_ctr_y_pi = anchor_ctr_y[positive_indices]

                gt_widths = assigned_annotations[:, 2] - assigned_annotations[:, 0]
                gt_heights = assigned_annotations[:, 3] - assigned_annotations[:, 1]
                gt_ctr_x = assigned_annotations[:, 0] + 0.5 * gt_widths
                gt_ctr_y = assigned_annotations[:, 1] + 0.5 * gt_heights

                # clip widths to 1
                gt_widths = torch.clamp(gt_widths, min=1)
                gt_heights = torch.clamp(gt_heights, min=1)

                # x offset between the ground-truth center and the anchor center,
                # normalized by the anchor width (dy likewise, with the anchor height)
                targets_dx = (gt_ctr_x - anchor_ctr_x_pi) / anchor_widths_pi
                targets_dy = (gt_ctr_y - anchor_ctr_y_pi) / anchor_heights_pi
                # Natural log of the gt/anchor size ratio. The log scale keeps targets
                # comparable across very different box sizes and makes the size
                # adjustment easier to learn: if gt_widths is 100 and anchor_widths_pi
                # is 50, the ratio is 2 and torch.log(2) is about 0.693.
                targets_dw = torch.log(gt_widths / anchor_widths_pi)
                targets_dh = torch.log(gt_heights / anchor_heights_pi)

                targets = torch.stack((targets_dx, targets_dy, targets_dw, targets_dh))
                targets = targets.t()

                if torch.cuda.is_available():
                    targets = targets/torch.Tensor([[0.1, 0.1, 0.2, 0.2]]).cuda()
                else:
                    targets = targets/torch.Tensor([[0.1, 0.1, 0.2, 0.2]])

                negative_indices = 1 + (~positive_indices)  # computed but never used

                regression_diff = torch.abs(targets - regression[positive_indices, :])

                # Smooth L1 loss with the transition point at 1/9
                regression_loss = torch.where(
                    torch.le(regression_diff, 1.0 / 9.0),
                    0.5 * 9.0 * torch.pow(regression_diff, 2),
                    regression_diff - 0.5 / 9.0
                )
                regression_losses.append(regression_loss.mean())
            else:
                if torch.cuda.is_available():
                    regression_losses.append(torch.tensor(0).float().cuda())
                else:
                    regression_losses.append(torch.tensor(0).float())

        return torch.stack(classification_losses).mean(dim=0, keepdim=True), torch.stack(regression_losses).mean(dim=0, keepdim=True)
```
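The regression branch encodes each positive anchor's matched box as (dx, dy, dw, dh), divides by the fixed scales [0.1, 0.1, 0.2, 0.2], and compares the result with the prediction using a smooth L1 loss whose transition point is 1/9. Here is a minimal standalone sketch of those two steps with made-up boxes; `encode_boxes` and `smooth_l1` are hypothetical helper names, not part of the source.

```python
import torch

def encode_boxes(anchors: torch.Tensor, gt: torch.Tensor) -> torch.Tensor:
    """Regression targets for already-matched (anchor, gt) pairs, boxes as [x1, y1, x2, y2]."""
    aw = anchors[:, 2] - anchors[:, 0]
    ah = anchors[:, 3] - anchors[:, 1]
    ax = anchors[:, 0] + 0.5 * aw
    ay = anchors[:, 1] + 0.5 * ah

    gw = gt[:, 2] - gt[:, 0]
    gh = gt[:, 3] - gt[:, 1]
    gx = gt[:, 0] + 0.5 * gw
    gy = gt[:, 1] + 0.5 * gh
    gw = torch.clamp(gw, min=1)  # like the source, clip sizes after computing centers
    gh = torch.clamp(gh, min=1)

    dx = (gx - ax) / aw          # center offset in units of anchor width
    dy = (gy - ay) / ah
    dw = torch.log(gw / aw)      # log of the size ratio
    dh = torch.log(gh / ah)
    deltas = torch.stack((dx, dy, dw, dh), dim=1)  # (N, 4), same as stack(...).t()
    return deltas / torch.tensor([[0.1, 0.1, 0.2, 0.2]])

def smooth_l1(pred: torch.Tensor, target: torch.Tensor, beta: float = 1.0 / 9.0) -> torch.Tensor:
    """Elementwise smooth L1 as in the source: 0.5 * d^2 / beta below beta, d - 0.5 * beta above."""
    diff = torch.abs(target - pred)
    return torch.where(diff <= beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)


anchors = torch.tensor([[0.0, 0.0, 50.0, 50.0]])
gt = torch.tensor([[10.0, 0.0, 110.0, 50.0]])   # twice as wide, center shifted by 35 px
t = encode_boxes(anchors, gt)                   # dx = 0.7 / 0.1, dw = log(2) / 0.2
loss = smooth_l1(torch.zeros_like(t), t).mean() # loss of an all-zero prediction
print(t, loss)
```

With beta = 1/9, `0.5 * diff ** 2 / beta` equals the source's `0.5 * 9.0 * diff ** 2`, and `diff - 0.5 * beta` equals `diff - 0.5 / 9.0`, so the two branches meet continuously at diff = 1/9.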
