These notes cover three common activation functions: sigmoid, tanh, and ReLU. For a more detailed description of activation functions, see:

Activation Functions Explained (Sigmoid/Tanh/ReLU/Leaky ReLU, etc.) - Zhihu

import tensorflow as tf

import numpy as np

print(tf.__version__)

#Detailed reference on activation functions:

#https://zhuanlan.zhihu.com/p/427541517

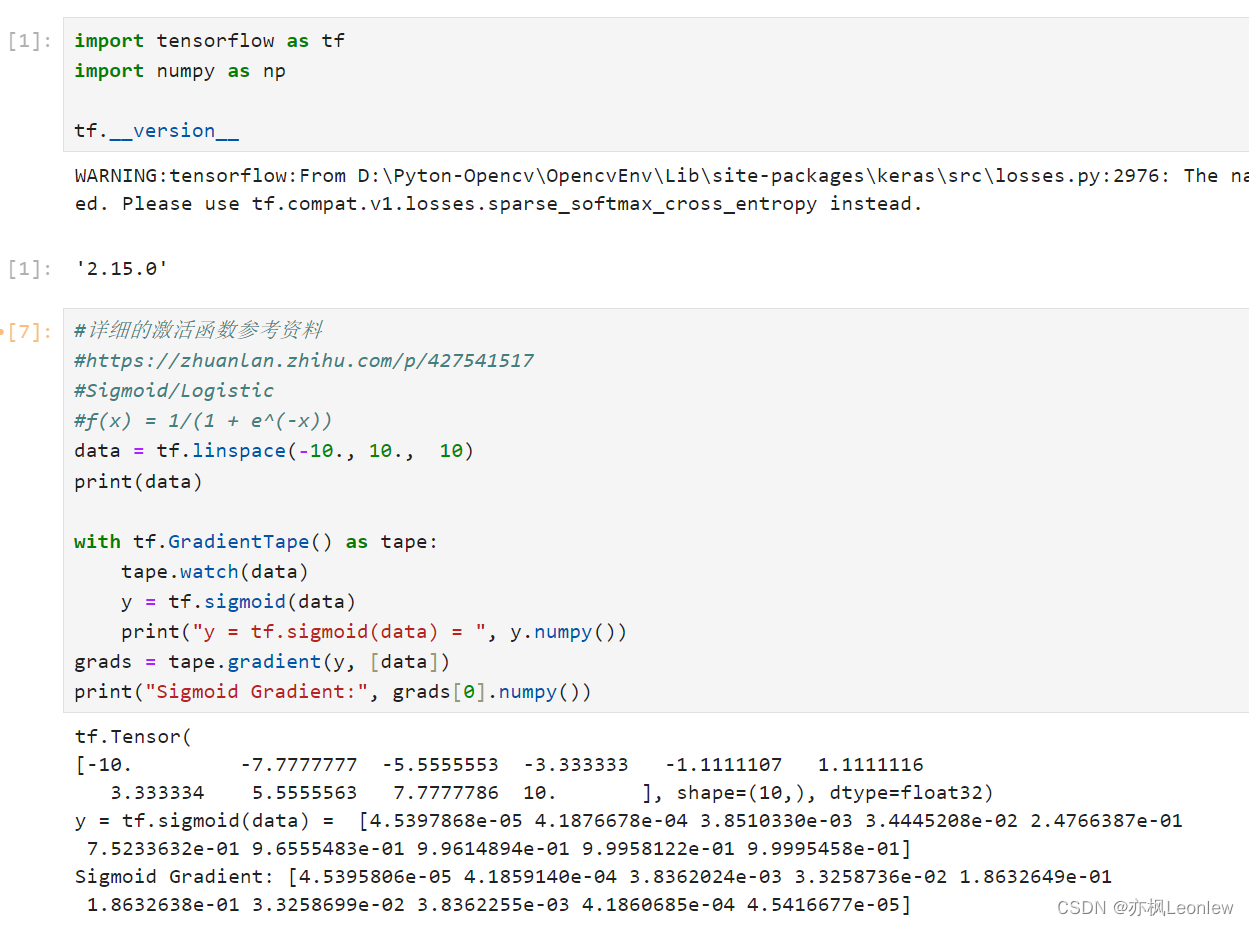

#Sigmoid/Logistic

#f(x) = 1/(1 + e^(-x))
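As a cross-check of the formula above, here is a minimal plain-Python sketch (standard library only, no TensorFlow) of the sigmoid and its analytic derivative f'(x) = f(x)(1 - f(x)). That derivative is what the GradientTape computation in this section should reproduce. The names `sigmoid`, `sigmoid_grad` and `numeric_grad` are illustrative helpers, not part of any library.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid f(x) = 1 / (1 + e^(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x) so that
    # math.exp never receives a large positive argument (which would overflow).
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Analytic derivative: f'(x) = f(x) * (1 - f(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_grad(f, x: float, h: float = 1e-5) -> float:
    """Central finite difference, used here only to sanity-check sigmoid_grad."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


if __name__ == "__main__":
    for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
        print(f"x={x:6.1f}  f={sigmoid(x):.6f}  "
              f"f'={sigmoid_grad(x):.3e}  numeric={numeric_grad(sigmoid, x):.3e}")
```

The derivative peaks at 0.25 at x = 0 and falls to roughly 4.5e-5 at x = ±10. This is why the sigmoid gradients at the ends of `tf.linspace(-10., 10., 10)` come out so small (the vanishing-gradient problem).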

data = tf.linspace(-10., 10., 10)

print(data)

with tf.GradientTape() as tape:
    # data is a constant tensor, not a tf.Variable, so the tape must be told to watch it
    tape.watch(data)
    y = tf.sigmoid(data)

print("y = tf.sigmoid(data) = ", y.numpy())

grads = tape.gradient(y, [data])

print("Sigmoid Gradient:", grads[0].numpy())

#Tanh

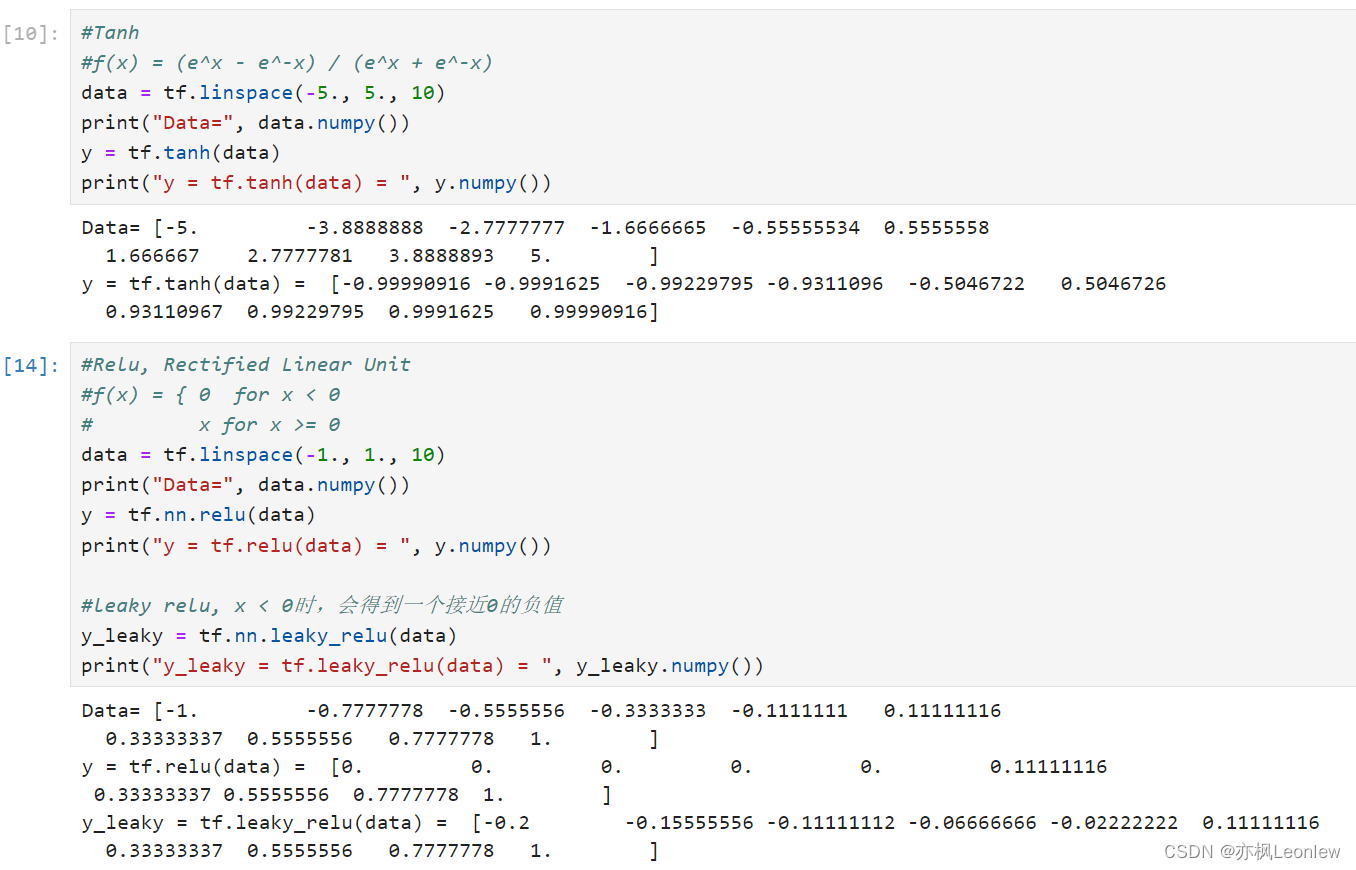

#f(x) = (e^x - e^(-x)) / (e^x + e^(-x))
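The tanh formula above can be checked against the standard library in the same way. This is a minimal sketch; `tanh_from_formula` and `tanh_grad` are illustrative helper names. The derivative 1 - tanh(x)^2 is included only for comparison with the sigmoid gradient, since the TensorFlow code here computes the tanh values but not their gradient.

```python
import math


def tanh_from_formula(x: float) -> float:
    """tanh exactly as written above: (e^x - e^(-x)) / (e^x + e^(-x)).

    Adequate for moderate |x|; math.exp raises OverflowError once |x| exceeds
    about 709, so in practice prefer math.tanh (or tf.tanh).
    """
    ex, emx = math.exp(x), math.exp(-x)
    return (ex - emx) / (ex + emx)


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2 (equals 1 at x = 0)."""
    t = math.tanh(x)
    return 1.0 - t * t


if __name__ == "__main__":
    for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"x={x:5.1f}  formula={tanh_from_formula(x):+.6f}  "
              f"math.tanh={math.tanh(x):+.6f}  grad={tanh_grad(x):.6f}")
```

Unlike the sigmoid, tanh is zero-centered with outputs in (-1, 1), and its derivative peaks at 1 rather than 0.25.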

data = tf.linspace(-5., 5., 10)

print("Data=", data.numpy())

y = tf.tanh(data)

print("y = tf.tanh(data) = ", y.numpy())

#ReLU, Rectified Linear Unit

#f(x) = 0 for x < 0

#f(x) = x for x >= 0

#i.e. f(x) = max(0, x)
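The piecewise ReLU definition is simply max(0, x). The minimal sketch below uses a hypothetical `linspace` helper as a pure-Python stand-in for `tf.linspace(-1., 1., 10)`, so it runs without TensorFlow.

```python
def linspace(start: float, stop: float, num: int) -> list[float]:
    """Hypothetical stand-in for tf.linspace: num evenly spaced points, endpoints included."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def relu(x: float) -> float:
    """ReLU: 0 for x < 0, x for x >= 0, i.e. max(0, x)."""
    return max(0.0, x)


if __name__ == "__main__":
    data = linspace(-1.0, 1.0, 10)
    print("data =", [round(v, 3) for v in data])
    print("relu =", [round(relu(v), 3) for v in data])
```

Of the ten points, the five negative ones map to 0 and the five positive ones pass through unchanged. `tf.nn.relu` produces the same pattern on this data.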

data = tf.linspace(-1., 1., 10)

print("Data=", data.numpy())

y = tf.nn.relu(data)

print("y = tf.nn.relu(data) = ", y.numpy())

#Leaky ReLU: for x < 0 the output is a small negative value close to 0 (alpha * x) instead of 0

y_leaky = tf.nn.leaky_relu(data)

print("y_leaky = tf.nn.leaky_relu(data) = ", y_leaky.numpy())
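The Leaky ReLU rule can also be written out directly. This is a minimal sketch that assumes a negative-side slope of alpha = 0.2, the default of `tf.nn.leaky_relu`; `leaky_relu` here is an illustrative helper, not the TensorFlow function.

```python
def leaky_relu(x: float, alpha: float = 0.2) -> float:
    """Leaky ReLU: x for x >= 0, alpha * x for x < 0.

    alpha defaults to 0.2 here to mirror tf.nn.leaky_relu's default slope;
    this is an assumption for the sketch, other frameworks pick other defaults.
    """
    return x if x >= 0 else alpha * x


if __name__ == "__main__":
    for x in (-1.0, -0.5, 0.0, 0.5, 1.0):
        # For 0 < alpha < 1 this matches the max(alpha * x, x) formulation.
        print(f"x={x:+.1f}  leaky={leaky_relu(x):+.2f}  max-form={max(0.2 * x, x):+.2f}")
```

Compared with plain ReLU, negative inputs now map to small negative values (for example -0.2 for x = -1) instead of 0, so their gradient is alpha rather than 0.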
