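Table of contents

- Setting up the reading environment

- Opening AskYourPDF

- Going to the main site

- Skimming the paper

- Asking questions in the right-hand panel

- Translating or summarizing selected text

- Understanding figures

- Summary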

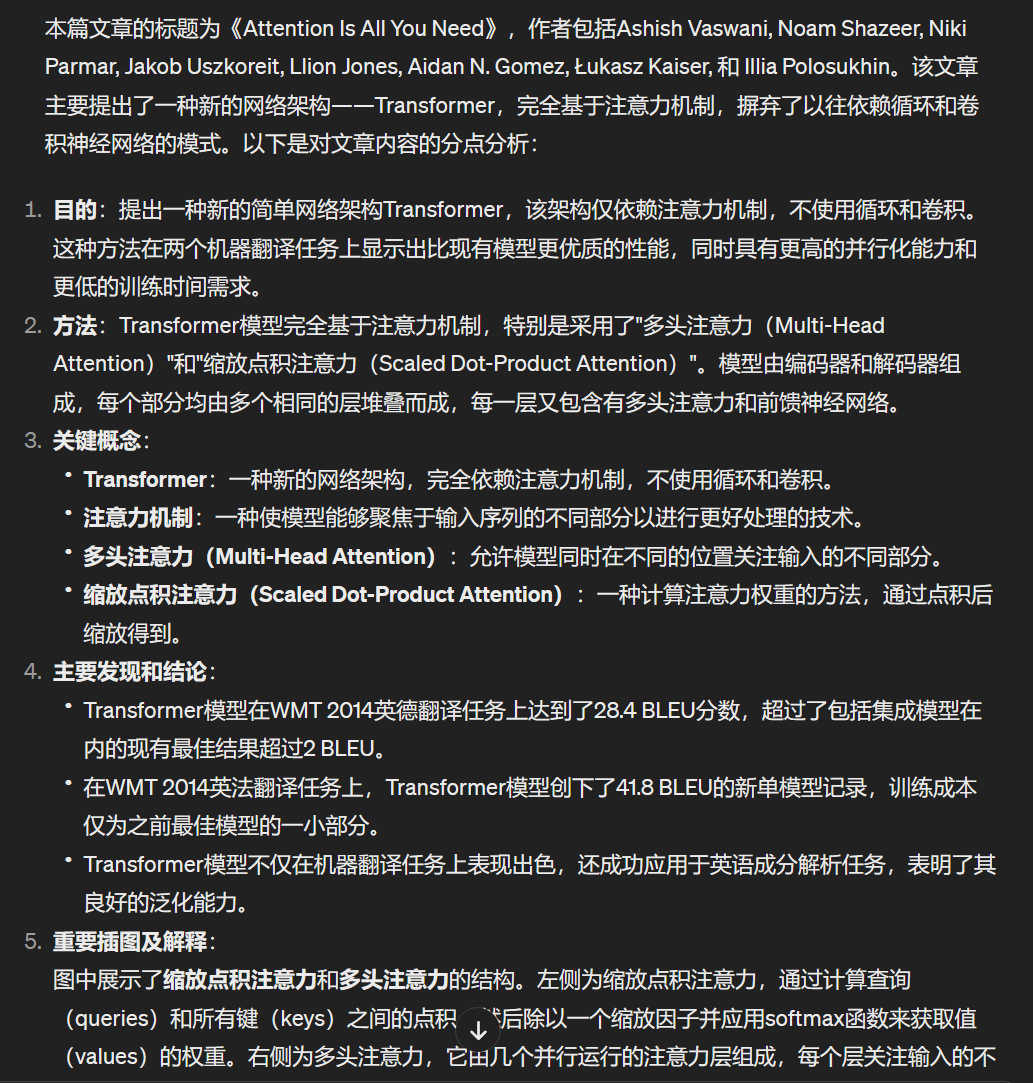

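Once you have GPT-4, the most important thing is learning how to make full use of it; otherwise the 20 dollars a month really hurts (I'm on a tight budget, sob). This post is a short record of how to use the GPTs plugin AskYourPDF.

Note: this is only an aid for reading papers. It helps you skim an article quickly, but GPT's results are not always accurate, especially for theoretical derivations; to study a paper in depth you still have to read it yourself. In my tests the plugin also handles Chinese poorly.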

Setting up the reading environment

Many readers may not know how to sign up for ChatGPT with GPT-4; the following links may help:

https://zhuanlan.zhihu.com/p/684660351

https://chenmmm07.github.io/chenmmm07/2024/02/03/gpt-register/

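Opening AskYourPDF

In the web app, click "Explore GPTs" in the left sidebar, then click the AskYourPDF plugin.

Going to the main site

When you ask your first question, it asks whether to allow access to the AskYourPDF main site; just click confirm.

It then gives you an answer, followed by a link to the main site; click the link to go there.

On the main site, click the relevant paper and start a new conversation.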

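Skimming the paper

You can choose your preferred language in the top-right corner. English is recommended: results in Chinese are much worse, and sometimes it cannot answer at all.

Asking questions in the right-hand panel

Translating or summarizing selected text

The output is shown in the right sidebar. The annoying part is that when you use Chinese these operations may fail with the message "抱歉,我无法提供中文答案" ("Sorry, I can't provide an answer in Chinese"), and its understanding of the paper's content is also worse than when you ask in English.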

Understanding figures

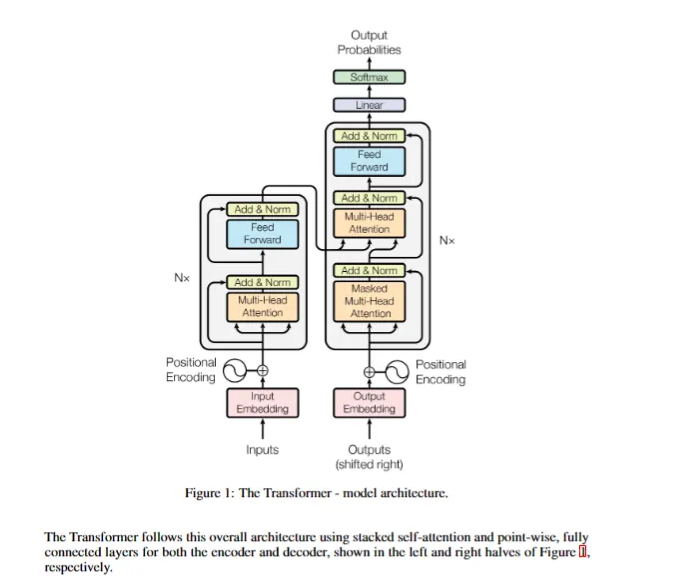

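Click "explain images" and capture the figure you want to ask about. In my tests an English prompt does give much better results; here is a concrete example:

- Chinese prompt: 请描述这幅图具体做了什么,要求:解释每个模块的输入输出,并回答该模块的作用 (in English: "Please describe exactly what this figure does. Requirements: explain the inputs and outputs of each module, and say what each module is for.")

- English prompt: Please describe what exactly this diagram does, and ask for an explanation of the inputs and outputs of each module, and an answer about what the module does

- Chinese answer (translated here into English)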

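This figure shows the overall architecture of the Transformer model, including the encoder (left) and the decoder (right).

Encoder:

- Input: the input embedding of the sequence (Input Embedding).

- Process: the input embedding is combined with the positional encoding (Positional Encoding) so that the model can capture the order of the tokens in the sequence. The input then passes through N identical layers, each containing two sub-modules: multi-head attention (Multi-Head Attention) and a feed-forward fully connected network (Feed Forward). The output of each sub-module goes through a residual connection (Add & Norm).

- Output: the encoder output is a high-level representation of the input sequence, which is passed to the decoder.

Decoder:

- Input: the output embedding of the sequence (Output Embedding).

- Process: the output embedding is first shifted right, then combined with the positional encoding in the same way and fed into the decoder's N layers. Each layer has three sub-modules: masked multi-head attention (Masked Multi-Head Attention), multi-head attention (connected to the encoder output), and a feed-forward fully connected network. As in the encoder, each sub-module has a residual connection.

- Output: the decoder output goes through a linear transformation (Linear) and a softmax function to give the final output probability distribution (Output Probabilities), that is, the prediction of the next possible output.

What each module does:

- Multi-head attention (Multi-Head Attention): lets the model combine information from different subspaces when computing attention.

- Feed-forward fully connected network (Feed Forward): applies the same fully connected network at every position, providing a non-linear transformation.

- Residual connection and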

- English answer

This diagram represents the model architecture of the Transformer, a neural network architecture that relies entirely on self-attention mechanisms for natural language processing tasks. The Transformer consists of two main sections: the encoder (on the left) and the decoder (on the right).

**Encoder:**

- Inputs: The raw input tokens are first converted into fixed-size vectors through the "Input Embedding" layer.

- Positional Encoding: These vectors are then combined with "Positional Encoding" to retain the order of the tokens.

- Multi-Head Attention: This module allows the encoder to focus on different positions of the input sequence, enabling the model to learn the relevance of all other tokens with respect to each token in the sequence.

- Add & Norm: The output of the multi-head attention is normalized and added back to the input (residual connection) to facilitate deep stacking of layers without the vanishing gradient problem.

- Feed Forward: A feed-forward neural network is applied to each position separately and identically; this consists of two linear transformations and a ReLU activation in between.

- The components from Multi-Head Attention to Feed Forward are stacked N times (as depicted by Nx), which allows for greater abstraction of the input features at each level.

**Decoder:**

- Outputs (shifted right): The target sequence tokens are similarly embedded and positionally encoded. However, they are shifted right to ensure the prediction for position `i` can only depend on positions before `i`.

- Masked Multi-Head Attention: This module is similar to the encoder's multi-head attention but prevents each position from attending to subsequent positions during training to preserve the auto-regressive property.

- Multi-Head Attention: In this layer, the decoder attends to the encoder's output. This allows the decoder to focus on relevant parts of the input sequence.

- Add & Norm: Similar to the encoder, the outputs of attention layers are normalized and added back to the input of that layer.

- Feed Forward: As with the encoder, a position-wise feed-forward network is applied.

- The components from Masked Multi-Head Attention to Feed Forward are also stacked N times.

- Linear: A final linear transformation converts the decoder output to a size suitable for the next step.

- Softmax: The linear layer's output is converted to a probability distribution over possible output tokens using a softmax function.

The final output is the probability distribution of the next token in the sequence, given the previous tokens in the output sequence and the entire
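The masking idea in the answer (position `i` may only depend on earlier positions) is easier to check with a small sketch. The code below is not taken from the paper or from AskYourPDF; the function names `causal_mask` and `masked_softmax` are made up for illustration, and it uses plain Python lists rather than a tensor library. It only shows how a causal mask turns attention scores into weights that ignore later positions.

```python
import math


def causal_mask(n):
    """Return an n x n matrix; entry [i][j] is True if position i may attend to j."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax over `scores`, giving zero weight where `mask` is False."""
    out = []
    for row, mask_row in zip(scores, mask):
        # Disallowed positions get -inf, so exp() maps them to exactly 0.
        masked = [s if m else float("-inf") for s, m in zip(row, mask_row)]
        top = max(masked)  # subtract the row maximum for numerical stability
        exps = [math.exp(s - top) for s in masked]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


# Hypothetical input: all-zero attention scores for a sequence of 4 tokens.
scores = [[0.0] * 4 for _ in range(4)]
weights = masked_softmax(scores, causal_mask(4))
for row in weights:
    print([round(w, 2) for w in row])
```

With all-zero scores, row `i` spreads its weight evenly over positions `0..i` and gives zero weight to every later position, which is the auto-regressive property the answer describes.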

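Summary

For readers who don't mind reading English this is a very good tool; it's just a pity that Chinese support is still lacking. Of course this is not the plugin's fault, but rather that GPT itself is not friendly enough to Chinese. Hopefully later versions will improve on this.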
