import requests

import random

from prettytable import PrettyTable

tb = PrettyTable()

tb.field_names = ['District', 'Detail page link', 'Boss', 'Industry', 'Company', 'Number of hires', 'Education requirement', 'Work experience requirement', 'Job title', 'Expected salary', 'Required skills', 'Benefits']

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Cookie": "lastCity=101240200; wd_guid=48d68397-7249-4f0f-bb46-d46109d91c45; historyState=state; _bl_uid=smlkOr6koyqh9y7eI6q3qhqi9FRb; __zp_seo_uuid__=adebcacd-2035-4cf3-9f73-7a36cbfa3926; __g=-; __l=r=https%3A%2F%2Fcn.bing.com%2F&l=%2Fwww.zhipin.com%2Fchengshi%2Fc101240200%2F&s=1&g=&s=3&friend_source=0; Hm_lvt_194df3105ad7148dcf2b98a91b5e727a=1709172158; __fid=0ffb8b698ee87b386c5ed3d71d3e37f0; Hm_lpvt_194df3105ad7148dcf2b98a91b5e727a=1709180059; __c=1709172158; __a=50791990.1704706028.1705900640.1709172158.23.4.16.23; __zp_stoken__=2e9cfPjrDpcK5XcK7RTQNCREVCkAtNzoxdkU%2BLjk8QT46Qz4%2FPjo7HD0uVi8%2Fw51iw4s1PCs%2BOjo8OEA6Qz8bPkbFgcK5Nz0wYyhDw5dmw5JeMcK%2BBzsNOMK%2BBysNwoBDKAvCuz03Q0JZwro3w4UKwr49w4ETw4U5w4M3O0I5MzsKZBBaOztPSloJTVtJYF9REFJWUC1CQDc%2BdsO6w7kxPBYHEBQSFgcQFBIQDRYREw8OFRETFAkSFhAyQ8Kewr3EgnhSxK3EgsSdwpxiwqbDhcKMwqjCn8KswrRswpXCsMO3wrLCmlTCssKGwohIwr5ywqlaYmRSY3J%2FVXlTw4TDhkrCu2xVwrpxXmIPEWTCgWIJOxM1JsOI"
}

for i in range(1,5):api_url = "https://dps.kdlapi.com/api/getdps/?secret_id=o8lhq88am27nzd51rego&num=5&signature=jp967bz15n8ensgnpksu6fbvfahzcfuh&pt=1&format=json&sep=1"proxy_ip = requests.get(api_url).json()['data']['proxy_list']# 用户名密码认证(私密代理/独享代理)username = "d3400384165"password = "f5s8g9pk"proxies = {"http": "http://%(user)s:%(pwd)s@%(proxy)s/" % {'user': username, 'pwd': password,'proxy': random.choice(proxy_ip)},"https": "http://%(user)s:%(pwd)s@%(proxy)s/" % {'user': username, 'pwd': password,'proxy': random.choice(proxy_ip)}}i = i+1boss_url = f"https://www.zhipin.com/wapi/zpgeek/search/joblist.json?scene=1&query=Python&city=100010000&experience=&payType=&partTime=°ree=&industry=&scale=&stage=&position=&jobType=&salary=&multiBusinessDistrict=&multiSubway=&page={i}&pageSize=30"json_data = requests.get(url=boss_url,headers=headers).json()['zpData']['jobList']for data in json_data:area = data['areaDistrict'] # 区域link = data['bossAvatar'] # 详情页链接boss_name = data['bossName'] # 领导brandIndustry = data['brandIndustry'] # 领域brandName = data['brandName'] #公司名brandScaleName = data['brandScaleName'] # 招聘人数jobDegree = data['jobDegree'] #学历要求jobExperience = data['jobExperience'] #工作经验要求jobName = data['jobName'] #职位名称salaryDesc = data['salaryDesc'] # 期望薪资skills = data['skills'] # 技能要求welfareList = data['welfareList'] #福利tb.add_row([area,link,boss_name,brandIndustry,brandName,brandScaleName,jobDegree,jobExperience,jobName,salaryDesc,skills,welfareList])

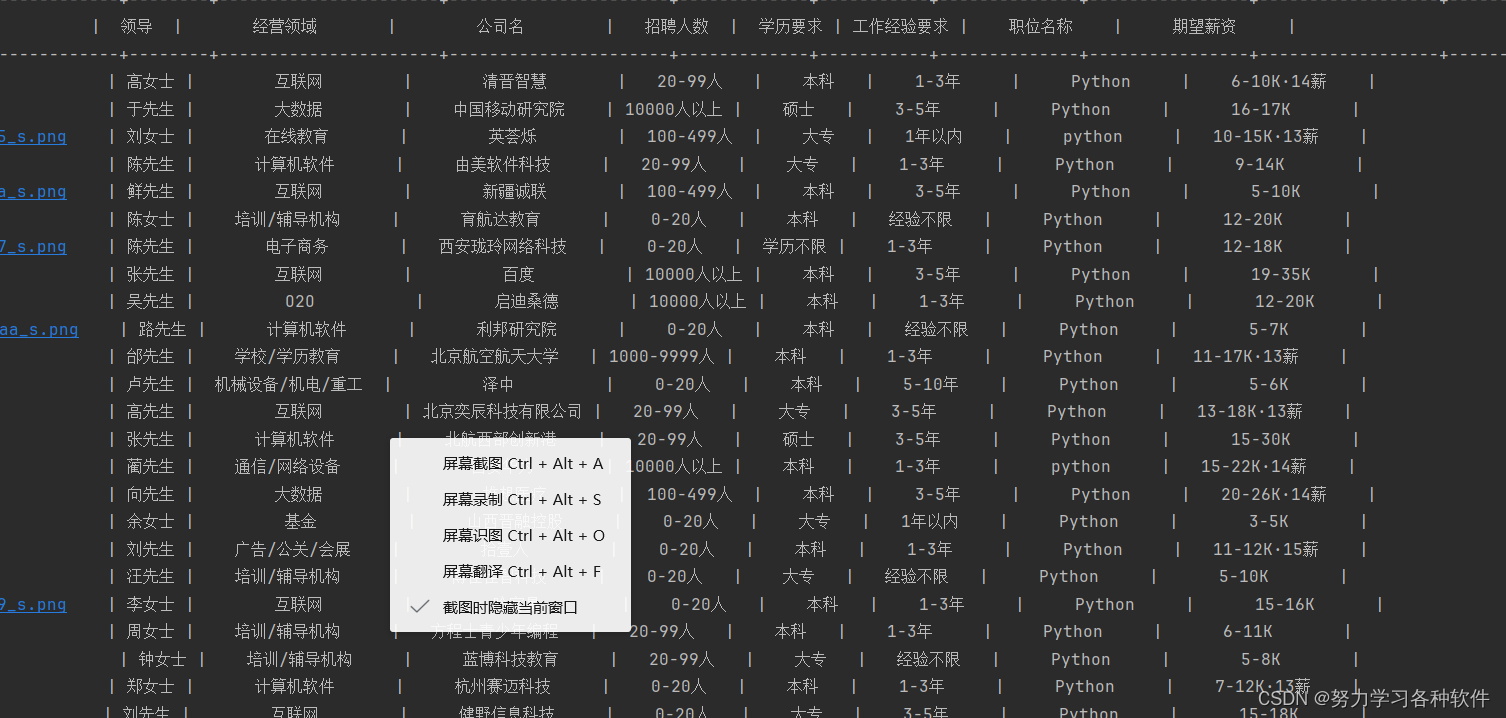

print(tb)总结:在这个案例中,它的cookie是频繁变化的, 不加cookie,访问不到数据,加了cookie,时间长了之后会失效。所以这又回到了逆向的方面,它的cookie是如何生成的,我该如何生成cookie?
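Generating the cookie is still an open question, but the script can at least fail gracefully when the cookie stops working. Below is a minimal sketch, assuming that an expired or missing cookie yields JSON without a usable `zpData.jobList` (the exact failure payload is not shown in this post, so that is an assumption). `extract_job_list` is a hypothetical helper name, not part of the original script.

```python
def extract_job_list(payload):
    """Return the job list from a decoded JSON response, or [] if it is absent.

    Assumption: when the cookie is rejected, 'zpData' or 'jobList' is missing
    or has an unexpected type, so the chained lookup would otherwise raise.
    """
    if not isinstance(payload, dict):
        return []
    zp_data = payload.get('zpData')
    if not isinstance(zp_data, dict):
        return []
    job_list = zp_data.get('jobList')
    return job_list if isinstance(job_list, list) else []
```

In the loop, `extract_job_list(requests.get(url=boss_url, headers=headers).json())` could stand in for the chained `['zpData']['jobList']` lookup; an empty list would then be a hint that the cookie needs refreshing.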

I also tried Kuaidaili's free proxy pool to rotate IPs, as a guard against anti-scraping measures that ban by IP.
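One detail of the script: it calls `random.choice` separately for the `http` and `https` entries, so the two may point at different proxy IPs. The sketch below picks a single proxy per request and uses it for both schemes. `build_proxies` and its `rng` parameter are hypothetical names introduced for illustration, and whether this difference matters in practice is not something I have verified.

```python
import random


def build_proxies(proxy_list, username, password, rng=random):
    """Pick one proxy at random and return a requests-style proxies mapping.

    proxy_list holds "host:port" strings, as returned in 'proxy_list'
    by the proxy API used in the script above.
    """
    if not proxy_list:
        raise ValueError("proxy_list is empty")
    proxy = rng.choice(proxy_list)
    proxy_url = f"http://{username}:{password}@{proxy}/"
    # Use the same proxy for both schemes so one request uses one exit IP
    return {"http": proxy_url, "https": proxy_url}
```

Passing a seeded `random.Random` instance as `rng` makes the choice reproducible while debugging.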

Results:

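What puzzles me is that while the cookie is still valid, adding proxies=proxies makes the request fail with an error instead, and I don't know why.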
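I have not found the cause, but one way to narrow it down is to try each proxy in turn and record the exact exception each one raises, rather than letting the first failure stop the script. This is only a diagnostic sketch: `try_each_proxy` is a hypothetical helper, and `fetch` stands for whatever function performs the request with a given proxies mapping.

```python
def try_each_proxy(fetch, proxy_candidates):
    """Call fetch(proxies) for each candidate until one succeeds.

    Returns (result, errors). errors lists (proxies, exception) pairs for
    every failed attempt; result is None if all attempts failed.
    """
    errors = []
    for proxies in proxy_candidates:
        try:
            return fetch(proxies), errors
        except Exception as exc:  # broad on purpose: the goal is to see what fails
            errors.append((proxies, exc))
    return None, errors
```

With `requests`, `fetch` could be something like `lambda p: requests.get(boss_url, headers=headers, proxies=p, timeout=10)`. Printing `type(exc).__name__` for each recorded error should show whether the failure is a proxy authentication problem, a timeout, or something else.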
