1. Basics

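Loss function: measures the gap between the predicted output and the actual output (the target). The smaller the gap, the better.

- Computes the gap between the actual output and the target

- Provides the basis for updating the model's parameters (via backpropagation)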
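Here is a minimal sketch of both roles. It assumes a hypothetical one-parameter model `prediction = w * x` and an arbitrary learning rate of 0.1, neither of which comes from the original notes.

- The loss measures the gap.
- `loss.backward()` computes the gradient of the loss with respect to `w`.
- A plain gradient-descent step uses that gradient to update `w`.

```python
import torch
from torch import nn

# Hypothetical toy model: prediction = w * x, with a single trainable weight w
w = torch.tensor(1.0, requires_grad=True)
x = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([2.0, 4.0, 6.0])  # produced by the "true" weight 2.0

loss_fn = nn.MSELoss()
prediction = w * x
loss = loss_fn(prediction, target)  # role 1: measure the gap

loss.backward()  # role 2: backpropagation stores d(loss)/dw in w.grad

# One plain gradient-descent step (learning rate 0.1 is an assumption)
learning_rate = 0.1
with torch.no_grad():
    w -= learning_rate * w.grad

print(loss.item(), w.item())
```

After one step, `w` moves from 1.0 toward 2.0, the weight that generated the targets. The loss on the next forward pass is therefore smaller.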

1. L1 loss (mean absolute error)

torch.nn.L1Loss(size_average=None, reduce=None, reduction='mean')
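L1 loss is the absolute difference |x_i - y_i| for each element. The `reduction` argument decides how those per-element values are combined:

- `'none'` keeps them as they are.
- `'mean'` (the default) averages them.
- `'sum'` adds them.

In current PyTorch, `size_average` and `reduce` are deprecated in favor of `reduction`. The following small sketch uses the same numbers as the example later in these notes:

```python
import torch
from torch import nn

pred = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([1.0, 2.0, 5.0])

# Element-wise absolute error |pred - target|, reduced three different ways
per_element = nn.L1Loss(reduction='none')(pred, target)  # tensor([0., 0., 2.])
mean_loss = nn.L1Loss(reduction='mean')(pred, target)    # 2 / 3
sum_loss = nn.L1Loss(reduction='sum')(pred, target)      # 2

# The same per-element values computed directly from the formula
manual = (pred - target).abs()
assert torch.equal(per_element, manual)
print(per_element, mean_loss.item(), sum_loss.item())
```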

2. Squared error (L2, mean squared error)

torch.nn.MSELoss(size_average=None, reduce=None, reduction='mean')
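MSELoss squares each difference before reducing, so by default it is the mean of (x_i - y_i)^2. This short check reuses the example's numbers and confirms that the module matches the formula computed by hand:

```python
import torch
from torch import nn

pred = torch.tensor([1.0, 2.0, 3.0])
target = torch.tensor([1.0, 2.0, 5.0])

mse = nn.MSELoss()  # default reduction='mean'
loss = mse(pred, target)

# Formula: mean of squared differences -> (0 + 0 + 2**2) / 3
manual = ((pred - target) ** 2).mean()
assert torch.allclose(loss, manual)
print(loss.item())  # 4 / 3, about 1.3333
```

Because the error is squared, the one wrong element (off by 2) contributes 4, whereas L1 counts it as 2. MSE therefore penalizes large errors more heavily than L1.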

3. Cross-entropy

torch.nn.CrossEntropyLoss(weight=None, size_average=None, ignore_index=-100, reduce=None, reduction='mean', label_smoothing=0.0)
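CrossEntropyLoss expects unnormalized scores (logits) of shape (N, C). In the common case, the targets are class indices of shape (N,). Internally, the loss applies log_softmax over the class dimension and then takes the negative log-likelihood. For one sample, the loss is therefore -x[class] + log(Σ_j exp(x[j])).

The sketch below checks this equivalence with `torch.nn.functional.log_softmax`, `torch.nn.functional.nll_loss` and `torch.logsumexp`, using the same scores as the example:

```python
import torch
from torch import nn
import torch.nn.functional as F

# Raw scores (logits) for 1 sample and 3 classes; the correct class is index 1
logits = torch.tensor([[0.1, 0.2, 0.3]])  # shape (N=1, C=3)
labels = torch.tensor([1])                # shape (N=1,), class indices

loss = nn.CrossEntropyLoss()(logits, labels)

# CrossEntropyLoss = log_softmax over classes, then negative log-likelihood
via_nll = F.nll_loss(F.log_softmax(logits, dim=1), labels)

# The same value from the formula: -x[class] + log(sum_j exp(x[j]))
manual = -logits[0, 1] + torch.logsumexp(logits[0], dim=0)

assert torch.allclose(loss, via_nll) and torch.allclose(loss, manual)
print(loss.item())  # about 1.1019
```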

2. Example

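Code:

```python
import torch
from torch import nn

# Prediction and target; L1Loss and MSELoss only require both to have the same shape
input = torch.tensor([1, 2, 3], dtype=torch.float32)
input = torch.reshape(input, [1, 1, 1, 3])

target = torch.tensor([1, 2, 5], dtype=torch.float32)
target = torch.reshape(target, [1, 1, 1, 3])

# L1: sum of absolute differences |1-1| + |2-2| + |3-5|
l1 = nn.L1Loss(reduction='sum')
result1 = l1(input, target)
print(result1)  # tensor(2.)

# L2 (MSE): mean of squared differences (0 + 0 + 4) / 3
l2 = nn.MSELoss()
result2 = l2(input, target)
print(result2)  # tensor(1.3333)

# Cross-entropy loss: x holds raw scores for 3 classes, y is the correct class index
x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
x = torch.reshape(x, [1, 3])  # shape (batch_size=1, num_classes=3)

loss_cross = nn.CrossEntropyLoss()
result = loss_cross(x, y)
print(result)  # tensor(1.1019)
```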

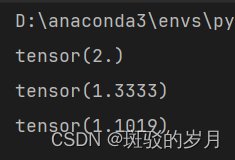

Output

