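Paper: [2106.05234] Do Transformers Really Perform Bad for Graph Representation? (arxiv.org)

Code: https://github.com/Microsoft/Graphormer

The English here is typed entirely by hand, summarizing and paraphrasing the original paper. Spelling and grammar mistakes are hard to avoid; if you spot any, corrections in the comments are welcome. This post leans toward personal notes, so read it with caution!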

Contents

1. TL;DR

1.1. Takeaways

1.2. Paper summary figure

2. Paragraph-by-paragraph close reading

2.1. Abstract

2.2. Introduction

2.3. Preliminary

2.4. Graphormer

2.4.1. Structural Encodings in Graphormer

2.4.2. Implementation Details of Graphormer

2.4.3. How Powerful is Graphormer?

2.5. Experiments

2.5.1. OGB Large-Scale Challenge

2.5.2. Graph Representation

2.5.3. Ablation Studies

2.6. Related Work

2.6.1. Graph Transformer

2.6.2. Structural Encodings in GNNs

2.7. Conclusion

2.8. Proofs

2.8.1. SPD can Be Used to Improve WL-Test

2.8.2. Proof of Fact 1

2.8.3. Proof of Fact 2

2.9. Experiment Details

2.9.1. Details of Datasets

2.9.2. Details of Training Strategies

2.9.3. Details of Hyper-parameters for Baseline Methods

2.10. More Experiments

2.11. Discussion & Future Work

3. Supplementary knowledge

3.1. Permutation invariant

3.2. Super node

3.3. Warm-up stage

3.4. Quadratic complexity

4. Reference List

1. TL;DR

1.1. Takeaways

(1)The experts really do all love simple models, don't they? Indeed they do.

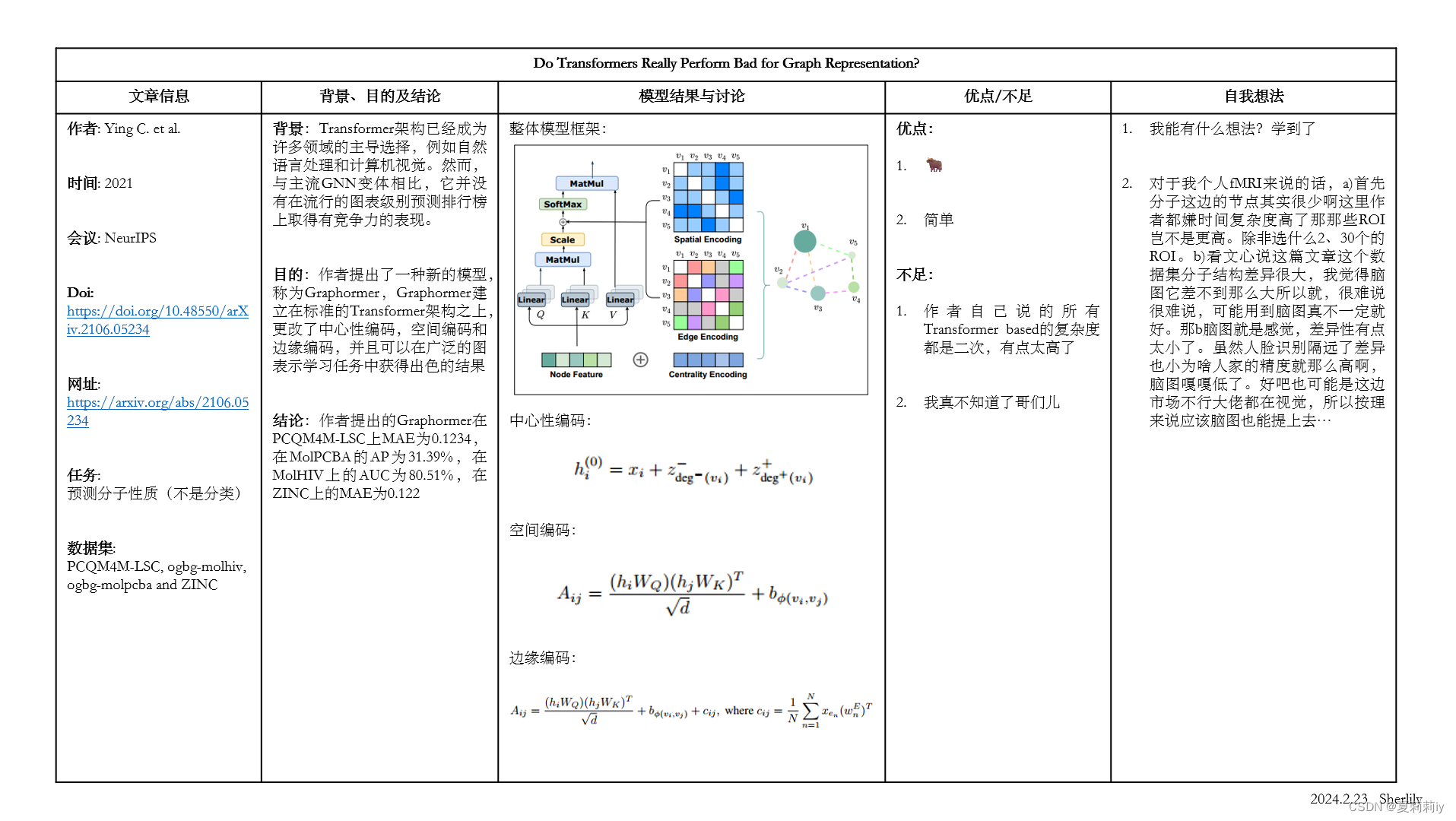

1.2. Paper summary figure

2. Paragraph-by-paragraph close reading

2.1. Abstract

①Transformers have not achieved competitive performance compared with mainstream GNN variants

②The authors put forward Graphormer to change this situation

leaderboard n. ranking list; (advertising) full-width banner ad

2.2. Introduction

①Graphormer performs outstandingly on the Open Graph Benchmark Large-Scale Challenge (OGB-LSC) and on several popular leaderboards such as OGB and Benchmarking-GNN

②Self-attention in the Transformer only takes the similarity between nodes into consideration and does not model the structural relationships between them. Ergo, Graphormer adds structural encodings

③They capture node importance with Centrality Encoding, which is based on degree centrality, and represent the structural relationship between nodes with Spatial Encoding

④Graphormer takes the top spot on OGB-LSC, MolHIV, MolPCBA, ZINC and other leaderboards

de-facto: holding a position or power in practice, rather than legally or officially

canonical adj. according to canon law; standard, exemplary; authoritative, generally accepted; (of a mathematical expression) in its simplest form; relating to an axiom or standard formula; relating to the church or clergy

2.3. Preliminary

(1)Graph Neural Network (GNN)

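①A graph is denoted as $G=(V,E)$, where $V=\{v_1,v_2,\dots,v_n\}$ denotes the node set and $n=|V|$ denotes the number of nodes. The feature vector of $v_i$ is $x_i$, and the node representation of $v_i$ at the $l$-th layer is $h_i^{(l)}$, with $h_i^{(0)}=x_i$

②The usual GNN is represented as:

$$a_i^{(l)}=\text{AGGREGATE}^{(l)}\left(\left\{h_j^{(l-1)}:j\in\mathcal{N}(v_i)\right\}\right),\quad h_i^{(l)}=\text{COMBINE}^{(l)}\left(h_i^{(l-1)},a_i^{(l)}\right)$$

where $\mathcal{N}(v_i)$ denotes the neighbors of $v_i$ (first-order or higher; the number of hops is not fixed)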

(2)Transformer

①Each layer in Transformer contains a self-attention module and a position-wise feed-forward network (FFN)

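②The input of the self-attention module is $H=[h_1^\top,\dots,h_n^\top]^\top\in\mathbb{R}^{n\times d}$, where $d$ represents the hidden dimension and $h_i\in\mathbb{R}^{1\times d}$ denotes the hidden representation at position $i$

③The function of the attention mechanism:

$$Q=HW_Q,\quad K=HW_K,\quad V=HW_V,$$

$$A=\frac{QK^\top}{\sqrt{d_K}},\quad \text{Attn}(H)=\text{softmax}(A)V$$

where $A$ is a similarity matrix of queries and keys

④For simplicity they describe single-head self-attention and assume $d_K=d_V=d$. Moreover, they omit the bias terms; the extension to multi-head attention is standard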
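The single-head attention described in this list can be sketched in a few lines of plain Python. This is only a toy illustration: the helper names (`matmul`, `softmax`, `self_attention`) and the identity projection matrices are my own assumptions, not code from the paper or its repository.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(H, W_Q, W_K, W_V):
    """Single-head self-attention without bias terms: softmax(Q K^T / sqrt(d_K)) V."""
    Q, K, V = matmul(H, W_Q), matmul(H, W_K), matmul(H, W_V)
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    A = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, K_T)]
    return matmul([softmax(row) for row in A], V)

# Toy input: n = 3 positions, d = 2, identity projections (illustrative only).
I2 = [[1.0, 0.0], [0.0, 1.0]]
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = self_attention(H, I2, I2, I2)
```

Every output row is a weighted average of the rows of V, with weights given by how similar that position's query is to each key.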

2.4. Graphormer

2.4.1. Structural Encodings in Graphormer

The overall framework of Graphormer contains three structural encodings:

(1)Centrality Encoding

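①For a directed graph, the centrality encoding added to the input is:

$$h_i^{(0)}=x_i+z^-_{\deg^-(v_i)}+z^+_{\deg^+(v_i)}$$

where $z^-\in\mathbb{R}^d$ is the learnable embedding vector indexed by the indegree $\deg^-(v_i)$, and $z^+\in\mathbb{R}^d$ is the learnable embedding vector indexed by the outdegree $\deg^+(v_i)$

(Hmm, right now I can't quite picture what kind of thing z is)

②For an undirected graph, a single $\deg(v_i)$ replaces $\deg^-(v_i)$ and $\deg^+(v_i)$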
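One way to picture z: it is simply a row of a lookup table, with one learnable vector per possible degree value. The sketch below is a toy under my own assumptions (table size `max_degree`, random initialisation, the function name `centrality_encode`); it only shows the indexing, not any training.

```python
import random

random.seed(0)
d = 4             # hidden dimension (toy value)
max_degree = 5    # largest degree the tables can index (assumption)

# One d-dimensional vector per possible degree value; in training these
# entries would be learned, here they are just randomly initialised.
z_in = [[random.gauss(0, 0.02) for _ in range(d)] for _ in range(max_degree + 1)]
z_out = [[random.gauss(0, 0.02) for _ in range(d)] for _ in range(max_degree + 1)]

def centrality_encode(x, edges):
    """Return x_i + z_in[indegree(v_i)] + z_out[outdegree(v_i)] for a directed graph."""
    n = len(x)
    indeg, outdeg = [0] * n, [0] * n
    for src, dst in edges:
        outdeg[src] += 1
        indeg[dst] += 1
    return [
        [x[i][k] + z_in[indeg[i]][k] + z_out[outdeg[i]][k] for k in range(d)]
        for i in range(n)
    ]

x = [[0.0] * d for _ in range(3)]
edges = [(0, 1), (0, 2), (1, 2)]   # directed edges src -> dst
h0 = centrality_encode(x, edges)
```

Two nodes with the same in- and out-degree receive exactly the same added vector, which is how the degree signal enters the input representation.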

(2)Spatial Encoding

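①A graph has no sequential order. To this end, they provide a new spatial encoding to represent the spatial relation between $v_i$ and $v_j$:

$$\phi(v_i,v_j):V\times V\to\mathbb{R}$$

where they choose $\phi(v_i,v_j)$ to be the shortest path distance (SPD) between the two nodes. If there is no path, the value is set to -1.

②⭐Each feasible output value of $\phi$ is assigned a learnable scalar, which serves as a bias term in the self-attention module (my own grasp of attention is still too weak, alas)

③The Q-K product matrix can be calculated by:

$$A_{ij}=\frac{(h_iW_Q)(h_jW_K)^\top}{\sqrt{d}}+b_{\phi(v_i,v_j)}$$

where $b_{\phi(v_i,v_j)}$ denotes the learnable scalar indexed by $\phi(v_i,v_j)$

④I could not paraphrase this part, so here is the gist. Compared with conventional GNNs, the formula above gives a global view: every node can see every other node. It is also adaptive: the authors give the example that if $b_{\phi(v_i,v_j)}$ is learned to be negatively correlated with $\phi(v_i,v_j)$, each node will probably pay more attention to the nodes near it.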
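The spatial encoding can be sketched as a BFS that fills the SPD matrix, followed by a table lookup that adds one scalar per distance value to the attention scores. The function names and the values in `b` are hypothetical; the scalars are chosen to decrease with distance only to mirror the example in ④.

```python
from collections import deque

def shortest_path_distances(n, edges):
    """All-pairs SPD on an unweighted, undirected graph via BFS; -1 if unreachable."""
    adj = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    dist = [[-1] * n for _ in range(n)]
    for s in range(n):
        dist[s][s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if dist[s][v] == -1:
                    dist[s][v] = dist[s][u] + 1
                    queue.append(v)
    return dist

def add_spatial_bias(scores, dist, b):
    """Add the scalar b[SPD(v_i, v_j)] to every raw attention score A_ij."""
    n = len(scores)
    return [[scores[i][j] + b[dist[i][j]] for j in range(n)] for i in range(n)]

# Path graph 0 - 1 - 2 plus an isolated node 3.
dist = shortest_path_distances(4, [(0, 1), (1, 2)])

# Hypothetical learned scalars, one per distance value; they decrease with
# distance, so closer nodes get a larger bias. Key -1 is the "no path" case.
b = {0: 0.5, 1: 0.2, 2: -0.3, -1: -1.0}
scores = [[0.0] * 4 for _ in range(4)]
biased = add_spatial_bias(scores, dist, b)
```

Because the bias is added before the softmax, every node still attends to all nodes, but the learned scalars decide how strongly distance matters.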

(3)Edge Encoding in the Attention

①Previous works use two traditional edge encoding methods: adding edge features to the associated node features, or using edge features together with node features in the aggregation. However, both are limited in that they only propagate edge information to the adjacent nodes rather than expressing relationships across the whole graph.

②The shortest path between each node pair $(v_i,v_j)$ is $\text{SP}_{ij}=(e_1,e_2,\dots,e_N)$

③They calculate the average of the dot-products of the edge feature $x_{e_n}$ of the $n$-th edge $e_n$ and a learnable embedding weight $w_n^E$ for the $n$-th position along the path:

$$A_{ij}=\frac{(h_iW_Q)(h_jW_K)^\top}{\sqrt{d}}+b_{\phi(v_i,v_j)}+c_{ij},\quad c_{ij}=\frac{1}{N}\sum_{n=1}^{N}x_{e_n}(w_n^E)^\top$$

where $w_n^E\in\mathbb{R}^{d_E}$ and $d_E$ denotes the dimensionality of the edge feature
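A minimal sketch of the averaged dot-product along a shortest path, assuming the edge features on the path are already collected in order. The function name `edge_encoding`, the toy numbers, and the choice to return 0 for an empty path are my assumptions.

```python
def edge_encoding(path_edge_feats, w):
    """Average over the path of dot(edge feature n, weight embedding n)."""
    N = len(path_edge_feats)
    if N == 0:          # i == j or no path: nothing to average (assumption)
        return 0.0
    total = 0.0
    for n, x_e in enumerate(path_edge_feats):
        total += sum(a * b for a, b in zip(x_e, w[n]))
    return total / N

# Toy shortest path with N = 2 edges and 3 edge features each.
path_edge_feats = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
# Hypothetical learned weights: w[n] pairs with the n-th edge on the path.
w = [[0.5, 0.5, 0.5], [1.0, 2.0, 3.0]]
c_ij = edge_encoding(path_edge_feats, w)
```

The resulting scalar is added to the attention score of the node pair, in the same way as the spatial bias term.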

2.4.2. Implementation Details of Graphormer

(1)Graphormer Layer

①They move the layer normalization (LN) from after the multi-head self-attention (MHA) and feed-forward (FFN) blocks to before them

②They set the input, output and inner-layer dimensions of the FFN sub-layer to the same value $d$

③The functions of the Graphormer layer:

$$h'^{(l)}=\text{MHA}(\text{LN}(h^{(l-1)}))+h^{(l-1)}$$

$$h^{(l)}=\text{FFN}(\text{LN}(h'^{(l)}))+h'^{(l)}$$
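The Pre-LN ordering (normalize first, then apply the sub-layer, then add the residual) can be sketched as follows. `toy_mha` and `toy_ffn` are stand-ins I made up so the example runs; they are not real attention or feed-forward modules, and the layer norm here has no learned scale or shift.

```python
import math

def layer_norm(v, eps=1e-5):
    """Normalise one vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]

def pre_ln_layer(h, mha, ffn):
    """h_mid = mha(LN(h)) + h, then ffn(LN(h_mid)) + h_mid; LN sits before each sub-layer."""
    normed = [layer_norm(row) for row in h]
    h_mid = [[a + b for a, b in zip(out, row)] for out, row in zip(mha(normed), h)]
    normed_mid = [layer_norm(row) for row in h_mid]
    return [[a + b for a, b in zip(out, row)] for out, row in zip(ffn(normed_mid), h_mid)]

# Stand-in sub-layers (assumptions for illustration, not real attention/FFN):
def toy_mha(rows):
    """Replace every row by the column-wise mean, like uniform attention."""
    n = len(rows)
    mean = [sum(col) / n for col in zip(*rows)]
    return [mean[:] for _ in rows]

def toy_ffn(rows):
    """Position-wise ReLU."""
    return [[max(0.0, a) for a in row] for row in rows]

h = [[1.0, 2.0, 3.0], [4.0, 6.0, 8.0]]
out = pre_ln_layer(h, toy_mha, toy_ffn)
```

Since the residual branch carries the un-normalized input straight through, a sub-layer that outputs zeros leaves the representation unchanged.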

(2)Special Node

①⭐They add a special node [VNode] to the graph, which connects to every other node. The representation of the whole graph is then the node feature of [VNode]. [CLS] is the analogous token in BERT

②Why??? Why is the shortest path from [VNode] to every node 1?? Are there no fractional values in SPD??

③The connections between [VNode] and all the other nodes are virtual instead of physical. To distinguish them, they reset all $b_{\phi([\text{VNode}],v_j)}$ and $b_{\phi(v_i,[\text{VNode}])}$ to a distinct learnable scalar
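A small sketch of how [VNode] could be wired into the distance matrix. SPD counts hops, so it is always an integer, and a node attached directly to every other node is one hop away from each of them. To keep the virtual connections apart from physical 1-hop edges, the sketch gives them a reserved index with its own scalar; the function name `add_vnode`, the index value and the numbers in `b` are my assumptions.

```python
def add_vnode(dist, vnode_index):
    """Prepend a [VNode] row and column to an SPD matrix, using a reserved index."""
    n = len(dist)
    top = [0] + [vnode_index] * n      # [VNode] to itself: distance 0
    return [top] + [[vnode_index] + list(row) for row in dist]

dist = [[0, 1, 2], [1, 0, 1], [2, 1, 0]]   # SPD of the path graph 0 - 1 - 2
VNODE_INDEX = 3                             # reserved, larger than any real SPD here
aug = add_vnode(dist, VNODE_INDEX)

# Hypothetical learned scalars: the reserved index has its own entry, so the
# virtual links do not share the bias of physical 1-hop neighbours.
b = {0: 0.5, 1: 0.2, 2: -0.3, VNODE_INDEX: 0.1}
bias = [[b[k] for k in row] for row in aug]
```

Row 0 of `bias` is what [VNode] adds to its attention scores over all real nodes, which lets it collect a graph-level summary.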

2.4.3. How Powerful is Graphormer?

(1)Fact 1. By choosing proper weights and distance function φ, the Graphormer layer can represent AGGREGATE and COMBINE steps of popular GNN models such as GIN, GCN, GraphSAGE

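①Spatial encoding lets the self-attention module distinguish the neighbor set $\mathcal{N}(v_i)$ of node $v_i$, which enables the softmax to calculate mean statistics over $\mathcal{N}(v_i)$

(What is a mean statistic?? How is the statistic defined? Node distances? Node features?)

②Knowing the degree of a node, they can translate the mean over neighbors into the sum over neighbors (emmm...emmm?)

③With multiple heads and the FFN, the representations of $v_i$ and $\mathcal{N}(v_i)$ can be processed separately and combined later (That's it, a total wipeout: I did not understand any of these three points)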

④The expression ability of Graphormer goes beyond GNNs and 1-Weisfeiler-Lehman (WL) test

(2)Fact 2. By choosing proper weights, every node representation of the output of a Graphormer layer without additional encodings can represent MEAN READOUT functions

①They add a super node that can aggregate the information of the whole graph (virtual node heuristic). However, simply adding a super node may cause over-smoothing in the information propagation step

②Global perspective of all nodes that takes from self-attention mechanism is able to simulate graph-level READOUT operation

③Empirically, their Graphormer will not face over-smoothing problem

2.5. Experiments

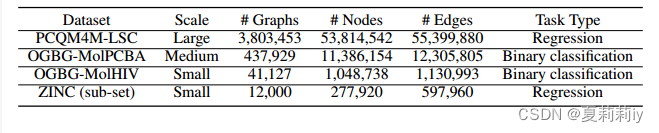

They evaluate their model on PCQM4M-LSC (3.8 M graphs), ogbg-molhiv, ogbg-molpcba and ZINC

2.5.1. OGB Large-Scale Challenge

(1)Baselines

①They compared Graphormer with GCN, GIN, GCN-VN (with virtual node), GIN-VN, multi-hop variant of GIN and 12-layer deep graph network DeeperGCN

②Transformer based GNNs are compared as well

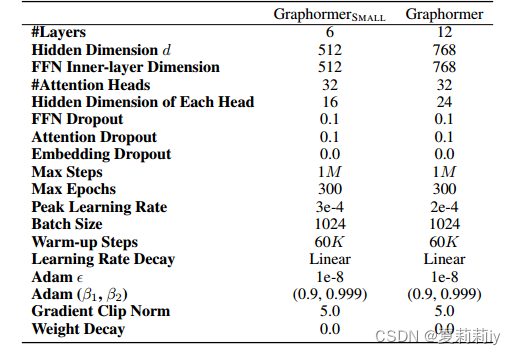

(2)Settings

①Two model sizes: Graphormer ($L=12,d=768$) and Graphormer_SMALL ($L=6,d=512$)

②Number of attention heads/dimensionality of edge features $d_E$: 32 in both models

③⭐Optimizer: AdamW (wow, a change of optimizer, you rarely see that)

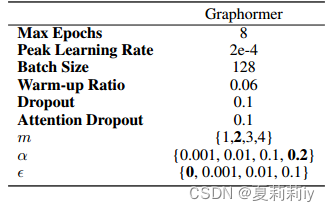

④Hyper-parameters: $\epsilon=1\times10^{-8}$, $(\beta_1,\beta_2)=(0.99,0.999)$

⑤Peak learning rate: $2\times10^{-4}$ for Graphormer and $3\times10^{-4}$ for Graphormer_SMALL

⑥Warm-up stage: 60k-step

(3)Results

①Comparison table:

where GT-Wide is GT with the hidden dimension widened to 768; the original GT uses 64

2.5.2. Graph Representation

①They further explore the performance of Graphormer on OGBG-MolPCBA, OGBG-MolHIV and benchmarking-GNN (ZINC)

②They use different parameters in this section. Details are given in the appendix.

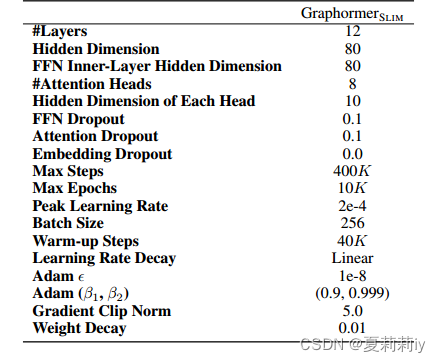

③They design another model, Graphormer_SLIM ($L=12,d=80$), for ZINC

(1)Baselines

①For a fair comparison on OGB, they also report the pre-trained GIN-VN after fine-tuning

(2)Settings

①Graphormer is more prone to overfitting when the model is large and the dataset is small. To this end, they apply FLAG, a data augmentation method, to mitigate this problem

(3)Results

①Comparison table on OGBG-MolPCBA:

②Comparison table on OGBG-MolHIV:

③Comparison table on ZINC:

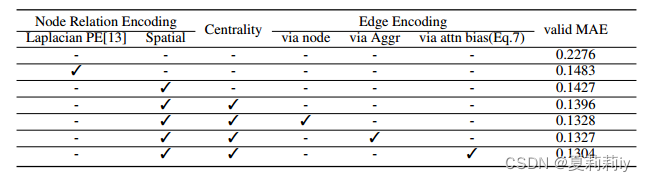

2.5.3. Ablation Studies

(1)Node Relation Encoding

①They compared traditional positional encoding (PE) with their spatial encoding

(2)Centrality Encoding

①Degree-based centrality encoding brings a significant performance improvement

(3)Edge Encoding

①They denote their edge encoding as via attn bias, and compare it with two traditional edge encoding methods, via node and via Aggr

②Comparison table:

2.6. Related Work

2.6.1. Graph Transformer

①One type of GT changes several parts of the Transformer layer, pre-trains the model on 10 million unlabelled molecules, and then fine-tunes it on downstream classification tasks

②Introducing different variants of GT

2.6.2. Structural Encodings in GNNs

(1)Path and Distance in GNNs

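①If you only say what method [9] proposed without naming the model, I really do not feel like taking notes. "Similar to [9], [56] uses path-based attention to model the influence between the center node and its higher-order neighbors; [59] proposes a distance-weighted aggregation scheme on graphs; [32] has shown that distance encoding (i.e., a one-hot feature of the distance as an extra node attribute) can achieve more expressive power than the 1-WL test."

(2)Positional Encoding in Transformer on Graph

①Introducing some models that use positional encoding techniques

(3)Edge Feature

①Introducing some models that contain special edge feature extraction methods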

2.7. Conclusion

Combining the three new encodings, Graphormer achieves excellent performance in competitions. However, the "quadratic complexity of the self-attention module restricts Graphormer’s application on large graphs"

2.8. Proofs

2.8.1. SPD can Be Used to Improve WL-Test

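⭐Graphs which cannot be distinguished by the 1-WL test:

The SPD sets of the two node types in the left graph are {0,1,1,2,2,3} (pink) and {0,1,1,1,2,2} (blue) (that is, the pink nodes share the same color and the same degree, so they form one class of nodes; from one of them the distances are: to itself 0, to the upper blue node 1, to the lower-left pink node 1, to the lower-middle blue node 2, to the upper-right pink node 2, and to the lower-right pink node 3. The others follow in the same way)

The SPD sets of the two node types in the right graph are {0,1,1,2,3,3} (pink) and {0,1,1,1,2,2} (blue)

1-WL fails here because it relies mostly on degrees: in the left and right graphs every pink node and every blue node has exactly the same degree, 2 for pink and 3 for blue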
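The comparison can be checked with a short script. Since the figure is not reproduced here, the two edge lists below are my own reconstruction of graphs that yield exactly the SPD sets listed above (two degree-3 "blue" nodes and four degree-2 "pink" nodes each); they are an assumption, not a copy of the paper's drawing.

```python
from collections import Counter, deque

def adjacency(n, edges):
    adj = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj

def wl_histogram(n, edges, rounds=3):
    """1-WL colour refinement; returns the multiset of final node colours."""
    adj = adjacency(n, edges)
    colours = [len(adj[v]) for v in range(n)]          # start from degrees
    for _ in range(rounds):
        # New colour = (own colour, sorted multiset of neighbour colours).
        colours = [(colours[v], tuple(sorted(colours[u] for u in adj[v])))
                   for v in range(n)]
    return Counter(colours)

def spd_multisets(n, edges):
    """Multiset over nodes of each node's sorted list of shortest path distances."""
    adj = adjacency(n, edges)
    result = []
    for s in range(n):
        dist = {s: 0}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        result.append(tuple(sorted(dist.values())))
    return Counter(result)

# Nodes 0, 1 have degree 3 ("blue"); nodes 2..5 have degree 2 ("pink").
left = [(0, 1), (0, 2), (0, 3), (1, 4), (1, 5), (2, 4), (3, 5)]    # 6-cycle with a chord
right = [(0, 1), (0, 2), (0, 3), (1, 4), (1, 5), (2, 3), (4, 5)]   # two triangles joined by an edge

same_under_wl = wl_histogram(6, left) == wl_histogram(6, right)
same_under_spd = spd_multisets(6, left) == spd_multisets(6, right)
```

In both graphs every blue node sees one blue and two pink neighbors and every pink node sees one blue and one pink neighbor, so color refinement never separates them, while the per-node distance lists differ for the pink nodes.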

2.8.2. Proof of Fact 1

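The proof is based on the attention function with the spatial encoding bias $b_\phi$

①MEAN AGGREGATE: if $\phi=1$: $b_\phi=0$, else $b_\phi=-\infty$, where $\phi$ denotes SPD. What is more, $W_Q=W_K=0$ and $W_V=I$. Then $\text{softmax}(A)V$ gives the average of the neighbors' representations

②SUM AGGREGATE: MEAN AGGREGATE × node degree (hehe, I feel like my intelligence is being insulted), where the degree can be extracted from Centrality Encoding by an additional head. Then the FFN multiplies each dimension of the averaged representation by the degree

③MAX AGGREGATE: for each dimension $t$, one more head is needed to select the max value in the $t$-th dimension. Likewise, if $\phi=1$: $b_\phi=0$, else $b_\phi=-\infty$, where $\phi$ denotes SPD. Secondly, define $W_K=e_t$ as the $t$-th standard basis, $W_Q=0$, the bias term of $Q$ is $T\mathbf{1}$ (bias terms were omitted in the text above), $W_V=e_t$, where "$T$ is the temperature, which can be chosen large enough (what on earth is that??) that the softmax function approximates hard max, and $\mathbf{1}$ is the vector whose elements are all 1"

④COMBINE: the input consists of the outputs of AGGREGATE and the previous representation of the current node (an additional head outputs the representation of the current node). If $\phi=0$: $b_\phi=0$, else $b_\phi=-\infty$, where $\phi$ denotes SPD. What is more, $W_Q=W_K=0$ and $W_V=I$. Then apply the FFN to approximate an arbitrary COMBINE function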
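The four constructions above can be tried numerically. In this sketch the bias is 0 for the kept distance and minus infinity otherwise, the value projection is the identity, and the max head uses scores equal to a temperature T times the t-th feature of each key. The graph, features, T = 50 and all function names are toy assumptions; every node in the example has at least one neighbor, so no softmax row is fully masked.

```python
import math

NEG_INF = float("-inf")

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]   # exp(-inf) is 0.0, so masked entries vanish
    total = sum(exps)
    return [e / total for e in exps]

def attend(dist, H, scores, keep):
    """softmax(scores + bias) applied to H, with bias 0 where dist == keep, -inf elsewhere."""
    n, d = len(H), len(H[0])
    out = []
    for i in range(n):
        row = [scores[i][j] + (0.0 if dist[i][j] == keep else NEG_INF) for j in range(n)]
        w = softmax(row)
        out.append([sum(w[j] * H[j][k] for j in range(n)) for k in range(d)])
    return out

# Path graph 0 - 1 - 2 with its SPD matrix and 2-dimensional node features.
dist = [[0, 1, 2], [1, 0, 1], [2, 1, 0]]
H = [[1.0, 5.0], [2.0, 0.0], [3.0, 1.0]]
zeros = [[0.0] * 3 for _ in range(3)]          # zero query/key weights give all-zero scores

mean_agg = attend(dist, H, zeros, keep=1)      # MEAN over 1-hop neighbours
degree = [sum(1 for v in row if v == 1) for row in dist]
sum_agg = [[degree[i] * a for a in mean_agg[i]] for i in range(3)]   # SUM = MEAN * degree
self_feat = attend(dist, H, zeros, keep=0)     # extra head for COMBINE: the node itself

# MAX over dimension t: scores T * H[j][t] with a large temperature T.
T, t = 50.0, 0
max_scores = [[T * H[j][t] for j in range(3)] for _ in range(3)]
max_agg_t = [row[t] for row in attend(dist, H, max_scores, keep=1)]
```

The temperature only scales the scores: the larger T is, the more the softmax weight concentrates on the neighbor with the largest t-th feature, which is why the head approximates a hard max.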

2.8.3. Proof of Fact 2

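①MEAN READOUT: $W_Q=W_K=0$, bias terms of $Q,K$ are all $0$, and $W_V$ is the identity matrix, so every node attends uniformly to all nodes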

2.9. Experiment Details

2.9.1. Details of Datasets

①Introduce 4 datasets

②Data of 4 datasets:

2.9.2. Details of Training Strategies

(1)PCQM4M-LSC

①The hyper-parameters on PCQM4M-LSC:

②They briefly explain the reasons, but I skip them here, hhh

(2)OGBG-MolPCBA

①They first pre-train a deep Graphormer with 18 Transformer layers on PCQM4M-LSC

②The hidden dimension and FFN inner-layer dimension: 1024

③Peak learning rate for deep Graphormer: 1e-4 (I don't know why the table and the text differ)

④The hyper-parameters on OGBG-MolPCBA after fine-tuning:

(3)OGBG-MolHIV

①The hyper-parameters on OGBG-MolHIV after fine-tuning:

(4)ZINC

①The hyper-parameters on ZINC:

2.9.3. Details of Hyper-parameters for Baseline Methods

(1)PCQM4M-LSC

①The official Github repository of OGB-LSC: https://github.com/snap-stanford/ogb/tree/master/examples/lsc/pcqm4m

②The provided hyper-parameters perform well on most of the GNN variants, except DeeperGCN-VN. So they train DeeperGCN with 12 layers, a hidden dimension of 600, a batch size of 256 and a learning rate of 1e-3 for 100 epochs, with the learning rate decayed every 30 epochs at a decay rate of 0.25.

③They change the dimension of the Laplacian PE of GT and GT-Wide to 4 and the learning rate to 1e-4

(2)OGBG-MolPCBA

①Default hyper-parameters for most settings, with a grid search over learning rate, dropout ratio and batch size

(3)OGBG-MolHIV

①Default hyper-parameters for most settings, with a grid search over learning rate, dropout ratio and batch size

2.10. More Experiments

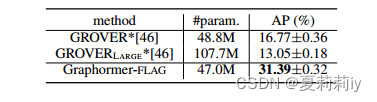

①Comparison of GROVER and Graphormer on MolHIV:

②Comparison of GROVER and Graphormer on MolPCBA:

2.11. Discussion & Future Work

①Complexity: the cost of self-attention grows quadratically with the number of nodes

②Choice of centrality and $\phi$: other choices are possible, for example L2 distance

③Node Representation: Graphormer could also be used for other tasks such as node representation

preclude v. to prevent, to hinder (preclude sb. from)

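3. Supplementary knowledge

3.1. Permutation invariant

Reference 1: [Neural Networks] Deep Sets: a general network architecture satisfying permutation invariance and permutation equivariance - Zhihu (zhihu.com)

3.2. Super node

ERNIE Bot (Wenxin):

In graph theory, a super node (or super vertex) is a node with an extremely large number of incident edges. These can be outgoing edges (from the node to other nodes) or incoming edges (pointing to the node). Because of their high connectivity, such nodes often play an important role in a network. Super nodes are especially common in networks with a power-law degree distribution, where a few nodes have a large number of connections while most nodes have only a few. For example, in a social network the accounts of influencers or celebrities with many followers can be regarded as super nodes, because they are connected to a large number of other accounts.

Note that the super node in section 2.4.3 is used in a different sense: it is an artificial node connected to all other nodes so that it can aggregate the information of the whole graph (the virtual node heuristic).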

3.3. Warm-up stage

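ERNIE Bot (Wenxin):

The warm-up stage in deep learning, also called learning rate warmup, is a strategy used early in training. Its main purpose is to start with a small learning rate and gradually increase it to the preset value, so that the model can adapt to training and stabilize.

In deep learning, model weights are randomly initialized. Using a large learning rate right at the start may make the model unstable or even cause it to oscillate. With a warm-up stage, a smaller learning rate is used for the first few epochs or steps, giving the model a chance to settle. The learning rate rises over this period and reaches the preset value by the end of the warm-up stage, which then speeds up convergence and improves results.

The length of the warm-up stage can be adjusted to the task and dataset. For example, the ResNet paper trains its 110-layer CIFAR-10 model with a learning rate of 0.01 until the training error falls below 80% (about 400 iterations) and then switches back to 0.1. The concrete implementation can also differ, e.g. linear warm-up or cosine warm-up.

Note that although warm-up can improve stability and training results, a low learning rate can also make training very slow. In practice, the length of the warm-up stage and the range of the learning rate need to be balanced case by case.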
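A linear warm-up can be written as a small function of the step count. The sketch below pairs it with a linear decay afterwards; the decay shape, `peak_lr`, `total_steps` and the function name `lr_at` are illustrative assumptions, and only the 60k warm-up length comes from section 2.5.1.

```python
def lr_at(step, peak_lr, warmup_steps, total_steps, end_lr=0.0):
    """Linear warm-up from 0 to peak_lr, then linear decay to end_lr."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    if step >= total_steps:
        return end_lr
    remaining = (total_steps - step) / (total_steps - warmup_steps)
    return end_lr + (peak_lr - end_lr) * remaining

# 60k warm-up steps as in section 2.5.1; peak_lr and total_steps are
# illustrative placeholders, not values taken from these notes.
schedule = [lr_at(s, peak_lr=1e-3, warmup_steps=60_000, total_steps=1_000_000)
            for s in (0, 30_000, 60_000, 530_000, 1_000_000)]
```

The learning rate starts at zero, reaches its peak exactly when the warm-up ends, and then falls back toward `end_lr` for the rest of training.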

3.4. Quadratic complexity

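ERNIE Bot (Wenxin):

In the self-attention mechanism, "quadratic complexity" means that both the time complexity and the space complexity of the attention computation are quadratic, i.e. proportional to the square of the input sequence length.

The core of self-attention is to compute, for each position in the input sequence, a weighted sum over the representations of all positions. This is done with queries, keys and values, which are all linear transformations of the input sequence. Concretely, a query vector, a key vector and a value vector are generated for every position. The query vector of each position is then dot-multiplied with the key vectors of all positions, a softmax turns the scores into weights, and the value vectors are summed with these weights to give the output representation of that position.

Because every query vector is dot-multiplied with every key vector, the time and space complexity are both quadratic. If the input sequence has length n, the time and space complexity of self-attention are both O(n^2). This can become a problem for long sequences, because compute and memory use grow quickly with the sequence length.

To address this, a number of works propose ways to reduce the computational cost and memory use of self-attention, such as approximation algorithms, local attention and sparse attention. The goal is to use less compute and memory while keeping model performance.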
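A quick back-of-the-envelope script makes the quadratic growth concrete. It counts the query-key scores for one attention head and estimates the memory of the score matrix; the 4 bytes per score (32-bit floats) and the function names are assumptions for illustration.

```python
def attention_matrix_entries(n):
    """Number of query-key scores for a sequence (or graph) with n positions."""
    return n * n

def attention_matrix_bytes(n, bytes_per_score=4):
    """Memory for one n x n score matrix, assuming 4-byte floats (assumption)."""
    return attention_matrix_entries(n) * bytes_per_score

for n in (100, 1_000, 10_000):
    mib = attention_matrix_bytes(n) / 2**20
    print(n, attention_matrix_entries(n), round(mib, 2), "MiB")
```

Doubling the number of nodes quadruples both the number of scores and the memory, which is the restriction on large graphs mentioned in the conclusion.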

4. Reference List

Ying C. et al. (2021) 'Do Transformers Really Perform Bad for Graph Representation?', NeurIPS 2021. doi: https://doi.org/10.48550/arXiv.2106.05234
