If this article is original, please credit the original source when reposting.

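This post follows an article by 山水无移. Using it, I deployed yolov8pose on an RK3568 board, and this post records the whole process.

Many thanks to everyone who shared their work. Their links are listed at the bottom.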

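I. Environment

1. Platform: RK3568

2. Development board: ALIENTEK (正点原子) ATK-RK3568

3. System: Buildroot

Note: the model in this example is trained on the coco8-pose dataset, which contains very few images, so its accuracy is not guaranteed. It is only meant for testing the deployment. If nothing is detected on a different image, that is normal.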

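II. YOLOv8-Pose Training

Set up the YOLOv8 environment yourself.

Reference for the process: Pose - Ultralytics YOLOv8 Docs

1. Download the source code

Option 1: download the archive and extract it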

GitHub - ultralytics/ultralytics: NEW - YOLOv8 🚀 in PyTorch > ONNX > OpenVINO > CoreML > TFLite

Option 2:

```shell
# Clone the ultralytics repository
git clone https://github.com/ultralytics/ultralytics
```

2. Install

```shell
# Navigate to the cloned directory
cd ultralytics
# Install the package in editable mode for development
pip install -e .
```

3. Change the activation function

SiLU is not yet supported on some edge chips, and on RKNN it cannot be fused with the convolution. For this reason, replace it with ReLU. Make this change before training so that the model is trained with ReLU.

```python
# ultralytics/nn/modules/conv.py (excerpt; autopad is defined earlier in this file)
class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

    # default_act = nn.SiLU()  # default activation
    default_act = nn.ReLU()  # changed: SiLU cannot be fused with conv on RKNN

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Apply convolution and activation (used after BN has been fused into the conv)."""
        return self.act(self.conv(x))
```

4. Training

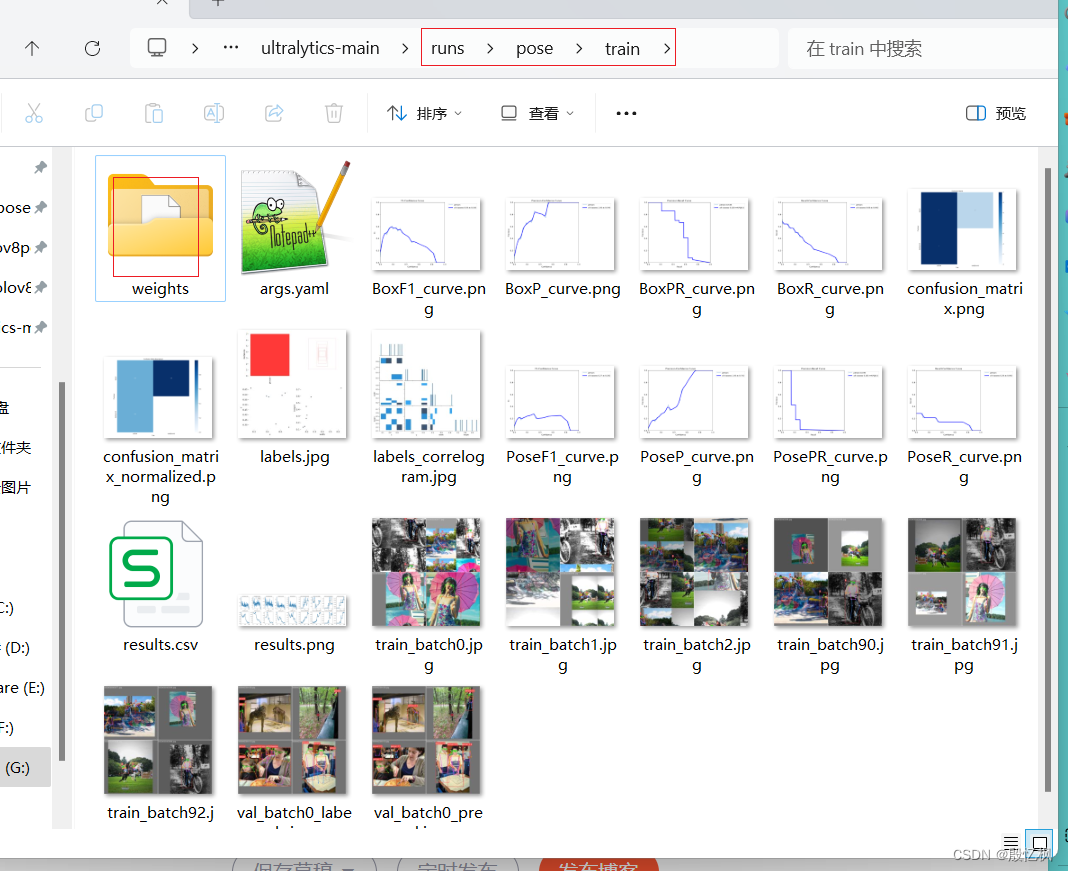

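Run the training:

```shell
yolo pose train data=coco8-pose.yaml model=yolov8n-pose.pt epochs=100 imgsz=640
```

When training finishes, the .pt files are written to the runs\pose\train\weights directory.

In the ultralytics directory, create a weights folder. Copy the trained best.pt model into it and rename it to yolov8pos_relu.pt.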
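If you prefer to script the copy-and-rename step, here is a minimal sketch using only the standard library. It assumes the default run directory `runs/pose/train/weights` mentioned above and that you call it from the ultralytics root. The helper name `copy_best_weights` is hypothetical. If you trained more than once, Ultralytics creates `train2`, `train3`, and so on, so adjust `run_dir` accordingly.

```python
import shutil
from pathlib import Path


def copy_best_weights(repo_root: str = ".",
                      run_dir: str = "runs/pose/train/weights",
                      dst_name: str = "yolov8pos_relu.pt") -> Path:
    """Copy best.pt from a training run into <repo_root>/weights under a new name (hypothetical helper)."""
    root = Path(repo_root)
    src = root / run_dir / "best.pt"
    if not src.is_file():
        raise FileNotFoundError(f"no trained weights found at {src}")
    weights_dir = root / "weights"
    weights_dir.mkdir(parents=True, exist_ok=True)  # create ./weights if it does not exist yet
    dst = weights_dir / dst_name
    shutil.copy2(src, dst)  # copy the file contents and metadata
    return dst
```

Calling `copy_best_weights()` from the repository root returns the path of the new `weights/yolov8pos_relu.pt`.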

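III. Exporting the yolov8pose ONNX model

Note: this ONNX export method only matches the code of the repository this example is based on. If you use an ONNX model exported the official way, you have to write the post-processing yourself (this warning comes from the original author). Also, the modifications below must be made strictly in the order given, and the code you run in the two steps is different. Pay close attention to this.

In other words, training and export use different code. Training uses the official code without these changes, while export requires the modified code.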

1. Re-export the .pt, keeping only the weights

Add the code marked below at the end of `Model._load`. In recent versions, this is in `ultralytics/engine/model.py`.

```python
    def _load(self, weights: str, task=None):
        """
        Initializes a new model and infers the task type from the model head.

        Args:
            weights (str): model checkpoint to be loaded
            task (str | None): model task
        """
        suffix = Path(weights).suffix
        if suffix == ".pt":
            self.model, self.ckpt = attempt_load_one_weight(weights)
            self.task = self.model.args["task"]
            self.overrides = self.model.args = self._reset_ckpt_args(self.model.args)
            self.ckpt_path = self.model.pt_path
        else:
            weights = checks.check_file(weights)
            self.model, self.ckpt = weights, None
            self.task = task or guess_model_task(weights)
            self.ckpt_path = weights
        self.overrides["model"] = weights
        self.overrides["task"] = self.task

        # Added: fuse Conv+BN, switch to eval mode and save only the weights (state_dict)
        import torch
        self.model.fuse()
        self.model.eval()
        torch.save(self.model.state_dict(), './weights/yolov8pos_relu_dict.pt')
```

After the change, create a test.py file in the ultralytics-main root directory with the following content:

```python
from ultralytics import YOLO

# Predict
model = YOLO('./weights/yolov8pos_relu.pt')
results = model(task='pose', mode='predict', source='./images/test.jpg', line_width=3, show=True, save=True, device='cpu')
```

Run it from a terminal. Loading the .pt goes through `_load`, so yolov8pos_relu_dict.pt is written to the weights folder.

2. Remove unneeded operators and export ONNX

Modify the following code: the `Detect` and `Pose` heads, which in recent versions are in `ultralytics/nn/modules/head.py`.

(1) Modify `Detect.__init__`

```python
    def __init__(self, nc=80, ch=()):
        """Initializes the YOLOv8 detection layer with specified number of classes and channels."""
        super().__init__()
        self.nc = nc  # number of classes
        self.nl = len(ch)  # number of detection layers
        self.reg_max = 16  # DFL channels (ch[0] // 16 to scale 4/8/12/16/20 for n/s/m/l/x)
        self.no = nc + self.reg_max * 4  # number of outputs per anchor
        self.stride = torch.zeros(self.nl)  # strides computed during build
        c2, c3 = max((16, ch[0] // 4, self.reg_max * 4)), max(ch[0], min(self.nc, 100))  # channels
        self.cv2 = nn.ModuleList(
            nn.Sequential(Conv(x, c2, 3), Conv(c2, c2, 3), nn.Conv2d(c2, 4 * self.reg_max, 1)) for x in ch
        )
        self.cv3 = nn.ModuleList(nn.Sequential(Conv(x, c3, 3), Conv(c3, c3, 3), nn.Conv2d(c3, self.nc, 1)) for x in ch)
        self.dfl = DFL(self.reg_max) if self.reg_max > 1 else nn.Identity()

        # Added for ONNX export: a fixed 1x1 conv with weights 0..15 that turns the
        # softmax over the 16 DFL bins into its expected value (replaces DFL)
        self.conv1x1 = nn.Conv2d(16, 1, 1, bias=False).requires_grad_(False)
        x = torch.arange(16, dtype=torch.float)
        self.conv1x1.weight.data[:] = nn.Parameter(x.view(1, 16, 1, 1))
```

(2) Modify `Detect.forward`

```python
    def forward(self, x):
        """Concatenates and returns predicted bounding boxes and class probabilities."""
        shape = x[0].shape  # BCHW

        # Added for ONNX export: return raw per-level outputs; decoding is done in post-processing
        y = []
        for i in range(self.nl):
            t1 = self.cv2[i](x[i])  # box branch: 4 * reg_max channels
            t2 = self.cv3[i](x[i])  # class branch: nc channels
            y.append(self.conv1x1(t1.view(t1.shape[0], 4, 16, -1).transpose(2, 1).softmax(1)))
            # y.append(t2.sigmoid())
            y.append(t2)
        return y

        # Original code below is no longer reached
        for i in range(self.nl):
            x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
        if self.training:  # Training path
            return x
        elif self.dynamic or self.shape != shape:
            self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))
            self.shape = shape

        # Inference path
        x_cat = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2)
        if self.export and self.format in ("saved_model", "pb", "tflite", "edgetpu", "tfjs"):  # avoid TF FlexSplitV ops
            box = x_cat[:, : self.reg_max * 4]
            cls = x_cat[:, self.reg_max * 4 :]
        else:
            box, cls = x_cat.split((self.reg_max * 4, self.nc), 1)
        dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides
        y = torch.cat((dbox, cls.sigmoid()), 1)
        return y if self.export else (y, x)
```

(3) Modify the Pose head

```python
class Pose(Detect):
    """YOLOv8 Pose head for keypoints models."""

    def __init__(self, nc=80, kpt_shape=(17, 3), ch=()):
        """Initialize YOLO network with default parameters and Convolutional Layers."""
        super().__init__(nc, ch)
        self.kpt_shape = kpt_shape  # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)
        self.nk = kpt_shape[0] * kpt_shape[1]  # number of keypoints total
        self.detect = Detect.forward

        c4 = max(ch[0] // 4, self.nk)
        self.cv4 = nn.ModuleList(nn.Sequential(Conv(x, c4, 3), Conv(c4, c4, 3), nn.Conv2d(c4, self.nk, 1)) for x in ch)

    def forward(self, x):
        """Perform forward pass through YOLO model and return predictions."""
        bs = x[0].shape[0]  # batch size
        # kpt = torch.cat([self.cv4[i](x[i]).view(bs, self.nk, -1) for i in range(self.nl)], -1)  # (bs, 17*3, h*w)

        # Added for ONNX export: raw keypoint maps per level, decoded in post-processing
        ps = []
        for i in range(self.nl):
            ps.append(self.cv4[i](x[i]))
        x = self.detect(self, x)
        return x, ps

        # Original code below is no longer reached
        if self.training:
            return x, kpt
        pred_kpt = self.kpts_decode(bs, kpt)
        return torch.cat([x, pred_kpt], 1) if self.export else (torch.cat([x[0], pred_kpt], 1), (x[1], kpt))
```

(4) Add the ONNX export code (in `Model._new`)

```python
    def _new(self, cfg: str, task=None, model=None, verbose=False):
        """
        Initializes a new model and infers the task type from the model definitions.

        Args:
            cfg (str): model configuration file
            task (str | None): model task
            model (BaseModel): Customized model.
            verbose (bool): display model info on load
        """
        cfg_dict = yaml_model_load(cfg)
        self.cfg = cfg
        self.task = task or guess_model_task(cfg_dict)
        self.model = (model or self._smart_load("model"))(cfg_dict, verbose=verbose and RANK == -1)  # build model
        self.overrides["model"] = self.cfg
        self.overrides["task"] = self.task

        # Below added to allow export from YAMLs
        self.model.args = {**DEFAULT_CFG_DICT, **self.overrides}  # combine default and model args (prefer model args)
        self.model.task = self.task

        # Added: build the fused model, load the saved weights and export to ONNX
        import torch
        self.model.fuse()
        self.model.eval()
        self.model.load_state_dict(torch.load('./weights/yolov8pos_relu_dict.pt', map_location='cpu'), strict=False)

        print("=========== onnx =========== ")
        dummy_input = torch.randn(1, 3, 640, 640)
        input_names = ["data"]
        # Names are assigned by position: in each pair the first tensor is the box (DFL) output and
        # the second the class output, which is the order the post-processing script reads them in
        output_names = ["cls1", "reg1", "cls2", "reg2", "cls3", "reg3", "ps1", "ps2", "ps3"]
        torch.onnx.export(self.model, dummy_input, "./weights/yolov8pos_relu.onnx", verbose=False,
                          input_names=input_names, output_names=output_names, opset_version=11)
        print("======================== convert onnx Finished! .... ")
```
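Why a 1x1 convolution can replace the DFL module: the `conv1x1` added in step (1) has fixed weights 0, 1, ..., 15 and no bias. After the softmax over the 16 bins in step (2), it therefore outputs the expected bin index, which is the distance from the cell center to one box side, in units of the stride. The pure-Python sketch below restates that computation for a single box side. The function names are illustrative and are not part of ultralytics.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def dfl_expectation(logits: Sequence[float]) -> float:
    """What conv1x1 (weights 0..15, no bias) computes after the softmax over 16 bins."""
    probs = softmax(logits)
    return sum(i * p for i, p in enumerate(probs))
```

Uniform logits give 7.5, the middle of the 0..15 range. A distribution concentrated on bins 3 and 4 gives about 3.5. Because this is just a weighted sum, it maps onto an ordinary convolution that the NPU can run, instead of the original DFL module.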

(5) Export ONNX

Modify test.py as follows:

```python
from ultralytics import YOLO

# Predict
# model = YOLO('./weights/yolov8pos_relu.pt')
# results = model(task='pose', mode='predict', source='./images/test.jpg', line_width=3, show=True, save=True, device='cpu')

# Export
model = YOLO('./ultralytics/cfg/models/v8/yolov8-pose.yaml')
```

Loading the model from the YAML goes through `_new`. Running test.py now therefore generates yolov8pos_relu.onnx in the weights folder.
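For a 640x640 input and one class (person), the modified heads should produce nine outputs in the order the post-processing script reads them. For each stride, the box (DFL) tensor comes first, then the class tensor, followed by the three keypoint tensors. Because `Detect.forward` appends the box branch first, the tensors named `cls1/cls2/cls3` actually hold box distances and `reg1/reg2/reg3` hold class logits. The script relies only on the order. The sketch below derives the expected shapes from the head code above. The helper `expected_output_shapes` is hypothetical. Comparing its result with the shapes shown for the exported file (for example in Netron) is a quick sanity check.

```python
from typing import List, Sequence, Tuple

Shape = Tuple[int, ...]


def expected_output_shapes(img_size: int = 640, strides: Sequence[int] = (8, 16, 32),
                           num_classes: int = 1, num_kpts: int = 17,
                           kpt_dims: int = 3) -> List[Tuple[str, Shape]]:
    """Expected (name, shape) of the nine ONNX outputs in export order, batch size 1."""
    names = ["cls1", "reg1", "cls2", "reg2", "cls3", "reg3", "ps1", "ps2", "ps3"]
    shapes: List[Shape] = []
    for s in strides:
        h = w = img_size // s
        shapes.append((1, 1, 4, h * w))        # conv1x1 output: expected distance per box side
        shapes.append((1, num_classes, h, w))  # raw class logits (sigmoid applied in post-processing)
    for s in strides:
        h = w = img_size // s
        shapes.append((1, num_kpts * kpt_dims, h, w))  # raw (x, y, visibility) per keypoint
    return list(zip(names, shapes))
```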

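IV. Converting ONNX to RKNN

The environment needed for RKNN model conversion was set up following the ALIENTEK (正点原子) manual.

Model conversion code:

yolov8pose deployment on Rockchip RKNN chips, Horizon chips and TensorRT (yolov8 rknn), CSDN blog

First, download the code.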

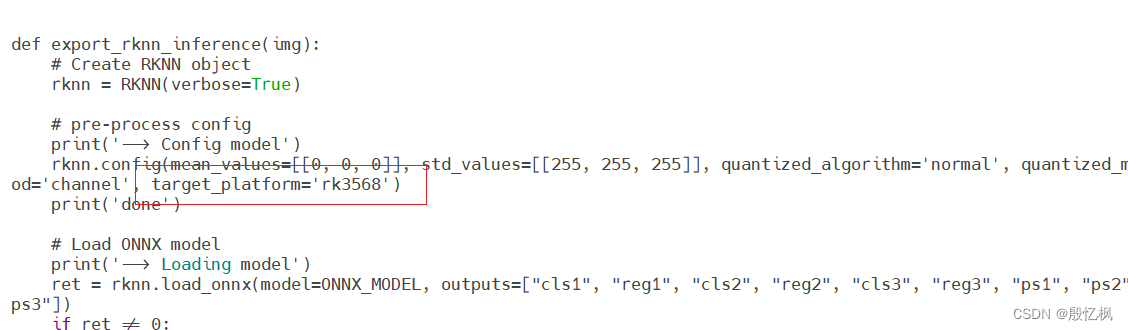

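The original code targets the RK3588. I use the ALIENTEK ATK-RK3568, so the code has to be modified. The main change is the target platform (`target_platform='rk3568'` in `rknn.config`); everything else is unchanged.

The modified code (onnx2rknn_demo_ZQ.py):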

```python
import sys
from math import exp

import cv2
import numpy as np
from rknn.api import RKNN

# ONNX_MODEL = './yolov8pos_relu_zq.onnx'
ONNX_MODEL = './yolov8pos_relu.onnx'
RKNN_MODEL = './yolov8pos_relu_zq.rknn'
DATASET = './dataset.txt'  # calibration image list used for quantization

QUANTIZE_ON = True

color_list = [(0, 0, 255), (0, 255, 0), (255, 0, 0)]
skeleton = [[16, 14], [14, 12], [17, 15], [15, 13], [12, 13], [6, 12], [7, 13], [6, 7], [6, 8], [7, 9],
            [8, 10], [9, 11], [2, 3], [1, 2], [1, 3], [2, 4], [3, 5], [4, 6], [5, 7]]  # 1-based keypoint pairs

CLASSES = ['person']

meshgrid = []

class_num = len(CLASSES)
headNum = 3
keypoint_num = 17

strides = [8, 16, 32]
mapSize = [[80, 80], [40, 40], [20, 20]]
nmsThresh = 0.55
objectThresh = 0.5

input_imgH = 640
input_imgW = 640


class DetectBox:
    def __init__(self, classId, score, xmin, ymin, xmax, ymax, pose):
        self.classId = classId
        self.score = score
        self.xmin = xmin
        self.ymin = ymin
        self.xmax = xmax
        self.ymax = ymax
        self.pose = pose


def GenerateMeshgrid():
    # Cell centers (x, y) for every cell of the three heads, in output order
    for index in range(headNum):
        for i in range(mapSize[index][0]):
            for j in range(mapSize[index][1]):
                meshgrid.append(j + 0.5)
                meshgrid.append(i + 0.5)


def IOU(xmin1, ymin1, xmax1, ymax1, xmin2, ymin2, xmax2, ymax2):
    innerWidth = max(min(xmax1, xmax2) - max(xmin1, xmin2), 0)
    innerHeight = max(min(ymax1, ymax2) - max(ymin1, ymin2), 0)
    innerArea = innerWidth * innerHeight

    area1 = (xmax1 - xmin1) * (ymax1 - ymin1)
    area2 = (xmax2 - xmin2) * (ymax2 - ymin2)
    total = area1 + area2 - innerArea

    return innerArea / total


def NMS(detectResult):
    predBoxs = []
    sort_detectboxs = sorted(detectResult, key=lambda x: x.score, reverse=True)

    for i in range(len(sort_detectboxs)):
        a = sort_detectboxs[i]
        if a.classId != -1:
            predBoxs.append(a)
            for j in range(i + 1, len(sort_detectboxs)):
                b = sort_detectboxs[j]
                if a.classId == b.classId:
                    iou = IOU(a.xmin, a.ymin, a.xmax, a.ymax, b.xmin, b.ymin, b.xmax, b.ymax)
                    if iou > nmsThresh:
                        b.classId = -1  # mark as suppressed
    return predBoxs


def sigmoid(x):
    return 1 / (1 + exp(-x))


def postprocess(out, img_h, img_w):
    print('postprocess ... ')

    detectResult = []
    output = [o.reshape((-1)) for o in out]

    # The input is resized (not letterboxed) to 640x640, so x and y are scaled independently
    scale_h = img_h / input_imgH
    scale_w = img_w / input_imgW

    gridIndex = -2

    for index in range(headNum):
        reg = output[index * 2 + 0]
        cls = output[index * 2 + 1]
        pose = output[headNum * 2 + index]
        map_h, map_w = mapSize[index]
        hw = map_h * map_w  # elements per channel in a flattened NCHW output

        for h in range(map_h):
            for w in range(map_w):
                gridIndex += 2
                pos = h * map_w + w  # offset of (h, w) inside one channel

                for cl in range(class_num):
                    cls_val = sigmoid(cls[cl * hw + pos])
                    if cls_val > objectThresh:
                        x1 = (meshgrid[gridIndex + 0] - reg[0 * hw + pos]) * strides[index]
                        y1 = (meshgrid[gridIndex + 1] - reg[1 * hw + pos]) * strides[index]
                        x2 = (meshgrid[gridIndex + 0] + reg[2 * hw + pos]) * strides[index]
                        y2 = (meshgrid[gridIndex + 1] + reg[3 * hw + pos]) * strides[index]

                        xmin = max(x1 * scale_w, 0)
                        ymin = max(y1 * scale_h, 0)
                        xmax = min(x2 * scale_w, img_w)
                        ymax = min(y2 * scale_h, img_h)

                        poseResult = []
                        for kc in range(keypoint_num):
                            px = pose[(kc * 3 + 0) * hw + pos]
                            py = pose[(kc * 3 + 1) * hw + pos]
                            vs = sigmoid(pose[(kc * 3 + 2) * hw + pos])
                            x = (px * 2.0 + (meshgrid[gridIndex + 0] - 0.5)) * strides[index] * scale_w
                            y = (py * 2.0 + (meshgrid[gridIndex + 1] - 0.5)) * strides[index] * scale_h
                            poseResult.extend([vs, x, y])  # (visibility, x, y) per keypoint

                        detectResult.append(DetectBox(cl, cls_val, xmin, ymin, xmax, ymax, poseResult))

    # NMS
    print('detectResult:', len(detectResult))
    return NMS(detectResult)


def export_rknn_inference(img):
    # Create RKNN object
    rknn = RKNN(verbose=True)

    # pre-process config
    print('--> Config model')
    rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], quantized_algorithm='normal',
                quantized_method='channel', target_platform='rk3568')  # changed from rk3588
    print('done')

    # Load ONNX model
    print('--> Loading model')
    ret = rknn.load_onnx(model=ONNX_MODEL, outputs=["cls1", "reg1", "cls2", "reg2", "cls3", "reg3", "ps1", "ps2", "ps3"])
    if ret != 0:
        print('Load model failed!')
        sys.exit(ret)
    print('done')

    # Build model
    print('--> Building model')
    ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=DATASET, rknn_batch_size=1)
    if ret != 0:
        print('Build model failed!')
        sys.exit(ret)
    print('done')

    # Export RKNN model
    print('--> Export rknn model')
    ret = rknn.export_rknn(RKNN_MODEL)
    if ret != 0:
        print('Export rknn model failed!')
        sys.exit(ret)
    print('done')

    # Init runtime environment (no target given: runs on the PC simulator)
    print('--> Init runtime environment')
    ret = rknn.init_runtime()
    # ret = rknn.init_runtime(target='rk3566')
    if ret != 0:
        print('Init runtime environment failed!')
        sys.exit(ret)
    print('done')

    # Inference
    print('--> Running model')
    outputs = rknn.inference(inputs=[img])
    rknn.release()
    print('done')

    return outputs


if __name__ == '__main__':
    print('This is main ...')
    GenerateMeshgrid()

    img_path = './test.jpg'
    orig_img = cv2.imread(img_path)
    if orig_img is None:
        sys.exit(f'cannot read {img_path}')
    img_h, img_w = orig_img.shape[:2]

    origimg = cv2.resize(orig_img, (input_imgW, input_imgH), interpolation=cv2.INTER_LINEAR)
    origimg = cv2.cvtColor(origimg, cv2.COLOR_BGR2RGB)
    img = np.expand_dims(origimg, 0)  # NHWC, batch of 1

    outputs = export_rknn_inference(img)
    predbox = postprocess(list(outputs), img_h, img_w)
    print('obj num is :', len(predbox))

    for box in predbox:
        xmin, ymin, xmax, ymax = int(box.xmin), int(box.ymin), int(box.xmax), int(box.ymax)
        cv2.rectangle(orig_img, (xmin, ymin), (xmax, ymax), (128, 128, 0), 2)
        title = CLASSES[box.classId] + "%.2f" % box.score
        cv2.putText(orig_img, title, (xmin, ymin), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2, cv2.LINE_AA)

        pose = box.pose
        # Keypoint colors: 0-4 head, 5-11 arms and shoulders, 12-16 hips and legs
        for k in range(keypoint_num):
            if pose[k * 3] > 0.5:
                color = color_list[0] if k < 5 else color_list[1] if k < 12 else color_list[2]
                cv2.circle(orig_img, (int(pose[k * 3 + 1]), int(pose[k * 3 + 2])), 2, color, 5)

        for sk in skeleton:
            a, b = sk[0] - 1, sk[1] - 1  # skeleton indices are 1-based
            # Draw a limb only when both of its endpoints are visible
            if pose[a * 3] > 0.5 and pose[b * 3] > 0.5:
                pos1 = (int(pose[a * 3 + 1]), int(pose[a * 3 + 2]))
                pos2 = (int(pose[b * 3 + 1]), int(pose[b * 3 + 2]))
                color = color_list[0] if a < 5 else color_list[1] if a < 12 else color_list[2]
                cv2.line(orig_img, pos1, pos2, color, thickness=2, lineType=cv2.LINE_AA)

    cv2.imwrite('./test_rknn_result.jpg', orig_img)
    # cv2.imshow("test", orig_img)
    # cv2.waitKey(0)
```
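The index arithmetic in `postprocess` is easiest to see on a single grid cell. Each output is flattened from NCHW, so channel `c` at row `h`, column `w` of an H×W map sits at `c*H*W + h*W + w`. Box sides are DFL distances, in stride units, measured from the cell center (`w + 0.5`, `h + 0.5`, as built by `GenerateMeshgrid`). Keypoints follow `(2*p + center - 0.5) * stride`. The sketch below restates this math without NumPy or RKNN. The function names and sample values are illustrative only. The real script additionally multiplies by `img_w / 640` and `img_h / 640`, because it resizes the image without letterboxing.

```python
from typing import Sequence, Tuple


def flat_index(c: int, h: int, w: int, H: int, W: int) -> int:
    """Offset of element (c, h, w) in a flattened (1, C, H, W) tensor."""
    return c * H * W + h * W + w


def decode_box(ltrb: Sequence[float], h: int, w: int, stride: int) -> Tuple[float, float, float, float]:
    """Box corners in 640x640 input pixels from left/top/right/bottom DFL distances."""
    cx, cy = w + 0.5, h + 0.5  # same cell center as GenerateMeshgrid
    l, t, r, b = ltrb
    return ((cx - l) * stride, (cy - t) * stride, (cx + r) * stride, (cy + b) * stride)


def decode_keypoint(px: float, py: float, h: int, w: int, stride: int) -> Tuple[float, float]:
    """Keypoint position in 640x640 input pixels, mirroring postprocess before scaling."""
    cx, cy = w + 0.5, h + 0.5
    return ((px * 2.0 + (cx - 0.5)) * stride, (py * 2.0 + (cy - 0.5)) * stride)
```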

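Copy the generated ONNX model into this directory (the script also reads ./dataset.txt and ./test.jpg), then run `python onnx2rknn_demo_ZQ.py`.

The result is correct, and the RKNN model file is generated.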
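Because `QUANTIZE_ON = True`, `rknn.build` reads `DATASET` (`./dataset.txt`), a plain text file that lists the calibration images, one path per line. The original does not show how this file was produced. Here is a minimal sketch, assuming your representative calibration images are in a hypothetical `./calib_images` folder. It writes absolute paths to avoid any ambiguity about how relative paths are resolved. The function name `write_dataset_txt` is illustrative.

```python
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def write_dataset_txt(image_dir: str = "./calib_images", out_file: str = "./dataset.txt") -> int:
    """Write one absolute image path per line for RKNN quantization; return the image count."""
    images = sorted(p for p in Path(image_dir).iterdir()
                    if p.is_file() and p.suffix.lower() in IMAGE_EXTS)
    if not images:
        raise ValueError(f"no images found in {image_dir}")
    with open(out_file, "w", encoding="utf-8") as f:
        for p in images:
            f.write(p.resolve().as_posix() + "\n")
    return len(images)
```

Run it once before the conversion script so that `./dataset.txt` exists next to `onnx2rknn_demo_ZQ.py`.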

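V. On-board C++ deployment

Download the code:

GitHub - cqu20160901/yolov8pose_rknn_Cplusplus: yolov8pose Rockchip rknn on-board C++ deployment (https://github.com/cqu20160901/yolov8pose_rknn_Cplusplus)

Modify the cross-compilation toolchain: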

```shell
vim build-linux_RK356X.sh
```

Contents after the change:

```shell
set -e

TARGET_SOC="rk356x"
GCC_COMPILER=/opt/atk-dlrk356x-toolchain/usr/bin/aarch64-buildroot-linux-gnu

# TOOL_CHAIN is not set in this script, so this only prepends /lib64
export LD_LIBRARY_PATH=${TOOL_CHAIN}/lib64:$LD_LIBRARY_PATH
export CC=${GCC_COMPILER}-gcc
export CXX=${GCC_COMPILER}-g++

ROOT_PWD=$( cd "$( dirname $0 )" && cd -P "$( dirname "$SOURCE" )" && pwd )

# build
BUILD_DIR=${ROOT_PWD}/build/build_linux_aarch64

if [[ ! -d "${BUILD_DIR}" ]]; then
  mkdir -p ${BUILD_DIR}
fi

cd ${BUILD_DIR}
cmake ../.. -DCMAKE_SYSTEM_NAME=Linux -DTARGET_SOC=${TARGET_SOC}
make -j4
make install
cd -
```

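Build:

Running the script creates an install directory under the current directory. Upload the whole rknn_yolov8pose_demo_Linux folder inside install to the board with adb and run it there. Remember to replace the model: 山水无移 built the bundled model for the RK3588, and it will not run on the RK3568. You must swap in an RK3568 model, such as the one generated in Section IV, or regenerate the RKNN model.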

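References:

yolov8pose deployment on Rockchip RKNN chips, Horizon chips and TensorRT (yolov8 rknn), CSDN blog

Exporting an ONNX model from YOLOV8 and then an RKNN model, CSDN blog

Model conversion code:

yolov8pose deployment on Rockchip RKNN chips, Horizon chips and TensorRT (yolov8 rknn), CSDN blog

On-board C++ deployment:

GitHub - cqu20160901/yolov8pose_rknn_Cplusplus: yolov8pose Rockchip rknn on-board C++ deployment

If any content infringes your rights, or if you need the complete code, please contact the blogger.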
