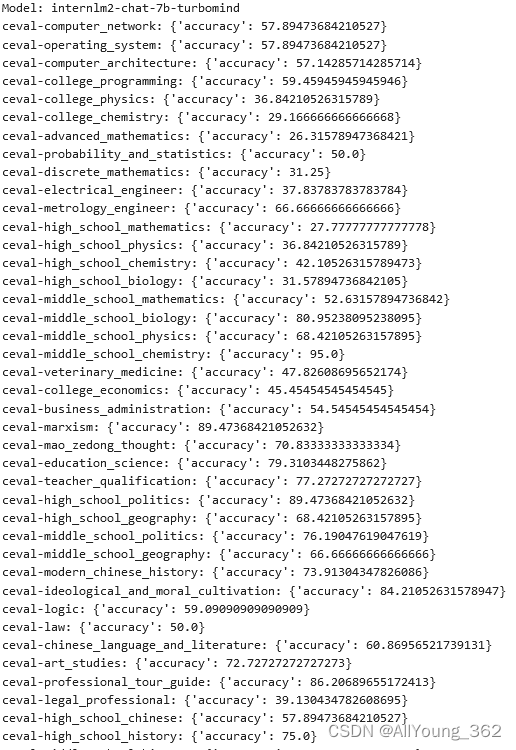

使用 OpenCompass 评测 InternLM2-Chat-7B 模型使用 LMDeploy 0.2.0 部署后在 C-Eval 数据集上的性能

步骤1:下载internLM2-Chat-7B 模型,并进行挂载

以下命令将internlm2-7b模型挂载到当前目录下:

ln -s /share/model_repos/internlm2-7b/ ./

步骤2:编译安装LMdeploy0.2.0

pip install 'lmdeploy[all]==v0.2.0'![]()

步骤3:使用LMdeploy 将模型internLM2-Chat-7B 进行转换

lmdeploy convert internlm2-chat-7b /root/model/Shanghai_AI_Laboratory/internlm2-chat-7b运行日志:

(internlm-demo) root@intern-studio:~/deploy# lmdeploy convert internlm2-chat-7b /root/model/Shanghai_AI_Laboratory/internlm2-chat-7b

create workspace in directory workspace

copy triton model templates from "/root/.conda/envs/internlm-demo/lib/python3.10/site-packages/lmdeploy/serve/turbomind/triton_models" to "workspace/triton_models"

copy service_docker_up.sh from "/root/.conda/envs/internlm-demo/lib/python3.10/site-packages/lmdeploy/serve/turbomind/service_docker_up.sh" to "workspace"

model_name internlm2-chat-7b

model_format None

inferred_model_format internlm2

model_path /root/model/Shanghai_AI_Laboratory/internlm2-chat-7b

tokenizer_path /root/model/Shanghai_AI_Laboratory/internlm2-chat-7b/tokenizer.model

output_format fp16

01/29 17:36:32 - lmdeploy - WARNING - Can not find tokenizer.json. It may take long time to initialize the tokenizer.

*** splitting layers.0.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.0.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.0.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.0.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.0.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.1.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.1.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.1.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.1.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.1.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.2.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.2.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.2.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.2.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.2.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.3.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.3.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.3.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.3.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.3.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.4.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.4.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.4.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.4.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.4.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.5.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.5.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.5.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.5.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.5.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.6.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.6.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.6.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.6.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.6.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.7.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.7.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.7.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.7.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.7.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.8.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.8.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.8.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.8.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.8.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.9.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.9.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.9.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.9.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.9.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.10.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.10.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.10.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.10.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.10.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.11.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.11.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.11.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.11.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.11.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.12.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.12.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.12.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.12.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.12.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.13.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.13.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.13.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.13.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.13.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.14.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.14.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.14.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.14.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.14.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.15.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.15.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.15.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.15.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.15.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.16.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.16.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.16.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.16.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.16.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.17.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.17.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.17.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.17.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.17.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.18.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.18.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.18.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.18.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.18.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.19.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.19.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.19.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.19.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.19.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.20.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.20.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.20.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.20.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.20.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.21.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.21.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.21.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.21.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.21.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.22.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.22.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.22.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.22.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.22.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.23.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.23.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.23.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.23.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.23.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.24.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.24.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.24.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.24.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.24.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.25.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.25.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.25.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.25.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.25.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.26.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.26.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.26.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.26.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.26.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.27.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.27.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.27.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.27.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.27.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.28.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.28.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.28.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.28.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.28.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.29.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.29.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.29.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.29.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.29.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.30.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.30.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.30.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.30.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.30.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

*** splitting layers.31.attention.w_qkv.weight, shape=torch.Size([4096, 6144]), split_dim=-1, tp=1

*** splitting layers.31.attention.wo.weight, shape=torch.Size([4096, 4096]), split_dim=0, tp=1

*** splitting layers.31.feed_forward.w1.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.31.feed_forward.w3.weight, shape=torch.Size([4096, 14336]), split_dim=-1, tp=1

*** splitting layers.31.feed_forward.w2.weight, shape=torch.Size([14336, 4096]), split_dim=0, tp=1

Convert to turbomind format: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 32/32 [00:27<00:00, 1.18it/s步骤4:模型结果测评

首先新建config文件,其中参数”/root/deploy/workspace/“表示LMdeploy转换后的模型地址。

from mmengine.config import read_base

from opencompass.models.turbomind import TurboMindModelwith read_base():# choose a list of datasets from .datasets.ceval.ceval_gen_5f30c7 import ceval_datasets # and output the results in a choosen formatfrom .summarizers.medium import summarizerdatasets = sum((v for k, v in locals().items() if k.endswith('_datasets')), [])internlm_meta_template = dict(round=[dict(role='HUMAN', begin='<|User|>:', end='\n'),dict(role='BOT', begin='<|Bot|>:', end='<eoa>\n', generate=True),

],eos_token_id=103028)# config for internlm-chat-7b

internlm2_chat_7b = dict(type=TurboMindModel,abbr='internlm2-chat-7b-turbomind',path='/root/deploy/workspace/',engine_config=dict(session_len=512,max_batch_size=2,rope_scaling_factor=1.0),gen_config=dict(top_k=1,top_p=0.8,temperature=1.0,max_new_tokens=100),max_out_len=100,max_seq_len=512,batch_size=2,concurrency=1,meta_template=internlm_meta_template,run_cfg=dict(num_gpus=1, num_procs=1),

)

models = [internlm2_chat_7b]在opencompass 目录下运行:

python run.py configs/eval_turbomind.py

同样可以添加--debug ,输出日志信息。

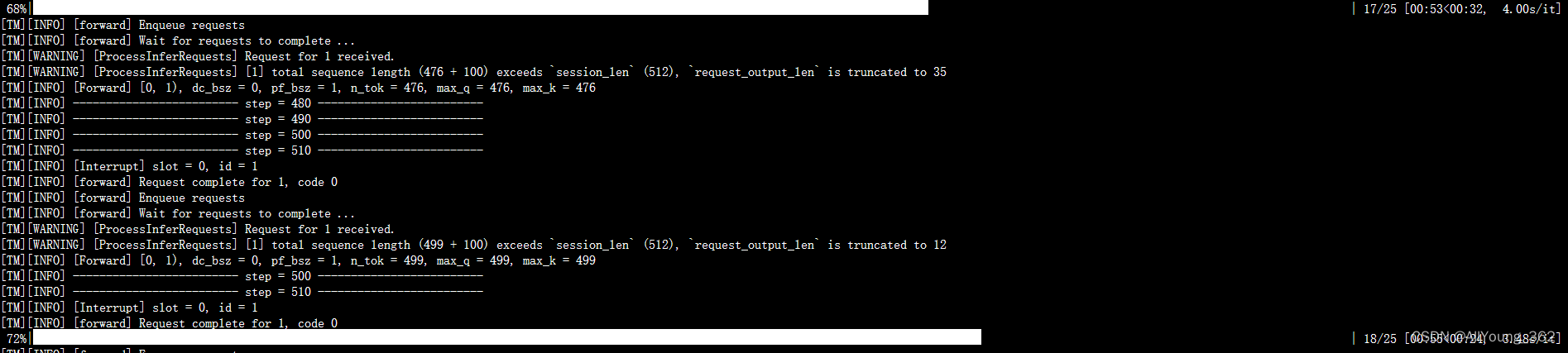

python run.py configs/eval_turbomind.py --debug过程日志如下:

组织成工程、并执行测试)

)