Tendermint:0.34.24

Docker:20.10.21

Docker-Compose:2.20.2

OS:Ubuntu 20.04

Go:1.19.2 Linux/amd64

1 Modify the Tendermint source code

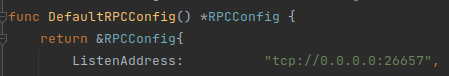

1.1 Change the listen IP

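Why change 127.0.0.1 to 0.0.0.0? If a service inside a container listens on 127.0.0.1, it cannot be reached from outside the container through Docker's port mapping.

127.0.0.1 is a special address called the loopback address. It can only be used for communication between processes on the same machine (for a container, inside that container's own network namespace). A service bound to 127.0.0.1 is therefore reachable only locally and not over any external network, whereas a service bound to 0.0.0.0 listens on all interfaces, including the one Docker forwards mapped ports to.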

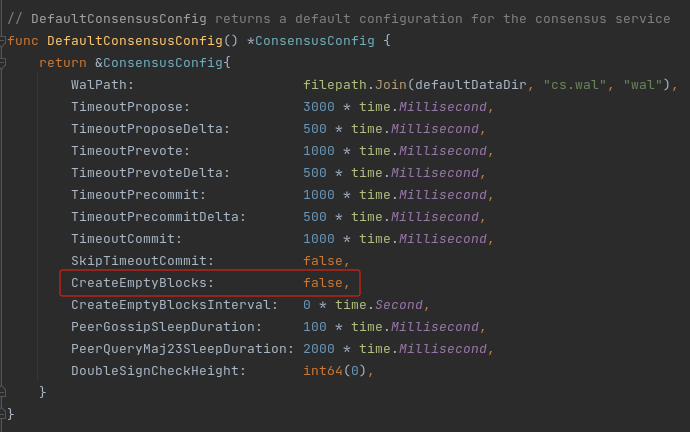
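The difference can be captured in a few lines. This minimal Python sketch uses a hypothetical helper name; it only inspects a listen address written in the `tcp://host:port` form used by Tendermint's `laddr` settings and reports whether a loopback bind would hide the service from Docker's port mapping. It does not open any sockets.

```python
import ipaddress
from urllib.parse import urlparse


def reachable_from_port_mapping(laddr: str) -> bool:
    """Hypothetical helper: can a service bound to `laddr` receive traffic
    that Docker forwards through a port mapping?

    Loopback binds (127.0.0.0/8) only accept connections from inside the
    container itself. 0.0.0.0 means "all interfaces", which includes the
    container interface that mapped ports are forwarded to.
    """
    host = urlparse(laddr).hostname  # "tcp://127.0.0.1:26657" -> "127.0.0.1"
    return not ipaddress.ip_address(host).is_loopback


if __name__ == "__main__":
    for laddr in ("tcp://127.0.0.1:26657", "tcp://0.0.0.0:26657"):
        print(laddr, "->", reachable_from_port_mapping(laddr))
```

It prints `False` for the loopback address and `True` for `0.0.0.0`.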

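1.2 Do not create empty blocks

By default Tendermint keeps committing blocks even when there are no transactions (controlled by the `create_empty_blocks` option in the `[consensus]` section of config.toml); turning this off keeps an idle test chain from filling up with empty blocks.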

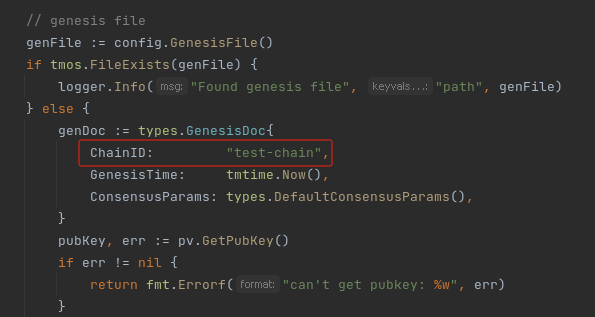

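1.3 Use a unified ChainID

By default, `tendermint init` generates a random chain ID of the form `test-chain-XXXXXX`, so nodes initialised independently would end up with different chain IDs. Fixing it in the source gives every node the same ID (`test-chain` in the genesis file below).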

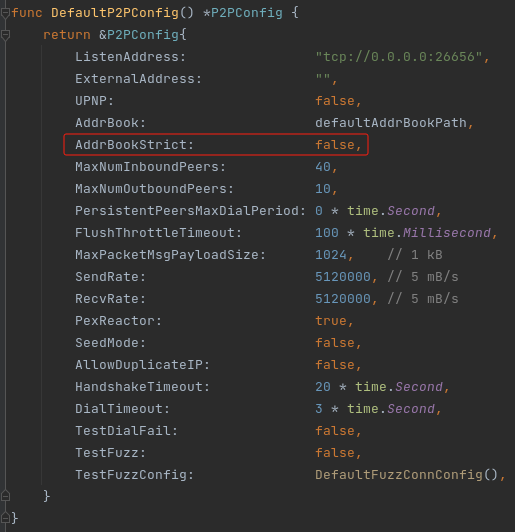

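1.4 Set AddrBookStrict to false to support LAN communication

With `AddrBookStrict` enabled (the default), the address book applies strict address routability rules, which are meant for public networks; the config documentation recommends setting it to false (`addr_book_strict` in config.toml) for private or local networks such as the Docker subnet used here.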
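Every node address in this setup lies in a private (RFC 1918) range, which is why strict routability rules are a poor fit. The following Python check, using only the standard `ipaddress` module, illustrates this for the container IPs assigned in the docker-compose file; it is a loose illustration of the idea with a hypothetical helper name, not Tendermint's own routability logic.

```python
import ipaddress

# Container IPs assigned in the docker-compose file (section 4).
NODE_IPS = ["172.20.20.0", "172.20.20.1", "172.20.20.2", "172.20.20.3"]


def non_public_ips(ips):
    """Return the addresses that a public-routability-only address book
    would refuse: private, loopback or otherwise non-global ones."""
    return [ip for ip in ips if not ipaddress.ip_address(ip).is_global]


if __name__ == "__main__":
    print(non_public_ips(NODE_IPS))  # all four node IPs are listed
```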

2 Build the binaries

```shell
make install
make install_abci
```

3 Write a Dockerfile for a custom image

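```dockerfile
FROM ubuntu

ENV MYPATH=/usr/local

WORKDIR $MYPATH

# Copy the binaries from step 2 (they must sit in the build context)
COPY ./tendermint $MYPATH/
COPY ./abci-cli $MYPATH/

# -y is required: docker build cannot answer apt's confirmation prompt
RUN apt-get update && apt-get install -y curl
```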

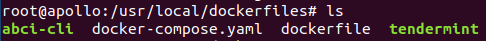

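Note: the tendermint and abci-cli binaries must be in the same directory as the Dockerfile, because `ADD`/`COPY` can only read files from the build context. `make install` and `make install_abci` install them into `$GOPATH/bin` by default, so copy them from there: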

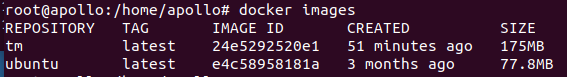

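Check the custom image, which must be tagged `tm` to match the `image:` entries in the docker-compose file below (for example with `docker images`).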

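4 Orchestrate the containers with docker-compose

The network uses bridge mode, and each node's RPC port 26657 is mapped to a different host port (26657, 36657, 46657 and 56657 for node0 to node3).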

```yaml
version: "3"

services:
  node0:
    image: tm
    container_name: node0
    ports:
      - "26657:26657"
    volumes:
      # The ubuntu image runs as root, so Tendermint's home is /root/.tendermint.
      # A literal path avoids compose substituting the *host's* $HOME here.
      - /usr/local/node0:/root/.tendermint
    networks:
      mynet:
        ipv4_address: 172.20.20.0
    command:
      - /bin/bash
      - -c
      - |
        ./tendermint init
        ./tendermint show-node-id > /root/.tendermint/nodeid.txt
        ./abci-cli kvstore
  node1:
    image: tm
    container_name: node1
    ports:
      - "36657:26657"
    volumes:
      - /usr/local/node1:/root/.tendermint
    networks:
      mynet:
        ipv4_address: 172.20.20.1
    command:
      - /bin/bash
      - -c
      - |
        ./tendermint init
        ./tendermint show-node-id > /root/.tendermint/nodeid.txt
        ./abci-cli kvstore
  node2:
    image: tm
    container_name: node2
    ports:
      - "46657:26657"
    volumes:
      - /usr/local/node2:/root/.tendermint
    networks:
      mynet:
        ipv4_address: 172.20.20.2
    command:
      - /bin/bash
      - -c
      - |
        ./tendermint init
        ./tendermint show-node-id > /root/.tendermint/nodeid.txt
        ./abci-cli kvstore
  node3:
    image: tm
    container_name: node3
    ports:
      - "56657:26657"
    volumes:
      - /usr/local/node3:/root/.tendermint
    networks:
      mynet:
        ipv4_address: 172.20.20.3
    command:
      - /bin/bash
      - -c
      - |
        ./tendermint init
        ./tendermint show-node-id > /root/.tendermint/nodeid.txt
        ./abci-cli kvstore

networks:
  mynet:
    ipam:
      driver: default
      config:
        - subnet: 172.20.0.0/16
```

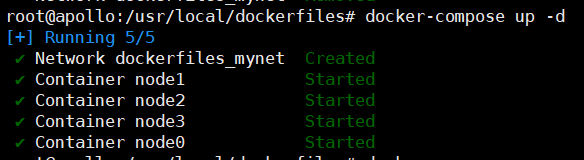

Start the containers:

```shell
docker-compose up -d
```

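5 Unify genesis.json

Thanks to the volume mounts, each node's config and data directories can be inspected directly on the host (under /usr/local/node0 to /usr/local/node3).

The cluster requires every node's genesis.json to be identical and to contain the validator entries of all nodes.

Here a simple Python script quickly builds the unified genesis.json: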

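```python
# Merge the validator entries of the four genesis files into one file.
# Assumes the line layout written by `tendermint init` (one validator):
#   lines 0-22  : header, up to and including "validators": [
#   lines 23-31 : the single validator object
#   lines 32-   : "]", "app_hash" and the closing brace
linesArr = []
for i in range(0, 4):
    with open("/usr/local/node%d/config/genesis.json" % i, 'r') as f:
        linesArr.append(f.readlines())

with open("./genesis.json", 'w') as f:
    for i in range(len(linesArr)):
        lines = linesArr[i]
        if i == 0:
            # Header, taken from node0 only
            for j in range(23):
                f.write(lines[j])
        for j in range(23, 32):
            if i == len(linesArr) - 1:
                # Last validator: copy unchanged (no trailing comma)
                f.write(lines[j])
            else:
                if j < 31:
                    f.write(lines[j])
                else:
                    # Closing "}" of a non-last validator: append a comma
                    f.write(lines[j][:len(lines[j]) - 1])
                    f.write(",\n")
    # Footer, taken from node0
    for i in range(32, len(linesArr[0])):
        f.write(linesArr[0][i])
```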

The resulting unified genesis file:

```json
{
  "genesis_time": "2024-01-06T02:17:15.806171802Z",
  "chain_id": "test-chain",
  "initial_height": "0",
  "consensus_params": {
    "block": {
      "max_bytes": "22020096",
      "max_gas": "50",
      "time_iota_ms": "1000"
    },
    "evidence": {
      "max_age_num_blocks": "100000",
      "max_age_duration": "172800000000000",
      "max_bytes": "1048576"
    },
    "validator": {
      "pub_key_types": [
        "ed25519"
      ]
    },
    "version": {}
  },
  "validators": [
    {
      "address": "6DE6C6F2CD5AD081A1B6C7A87FC915E04B1E2219",
      "pub_key": {
        "type": "tendermint/PubKeyEd25519",
        "value": "iKt2epWBuZHFayrS4qb7AJAwbfSlrJxOsLSbwwsUn9A="
      },
      "power": "10",
      "name": ""
    },
    {
      "address": "5993A76300771C1EF633D6801FF53D5B6527127A",
      "pub_key": {
        "type": "tendermint/PubKeyEd25519",
        "value": "uGc87MkuEtnMTcIQGUD22mNdTAQ7400FDqtIOkctbDg="
      },
      "power": "10",
      "name": ""
    },
    {
      "address": "039D64F5C23FD4CA0E4563B282B0B6F96CE278CB",
      "pub_key": {
        "type": "tendermint/PubKeyEd25519",
        "value": "FWFS6CuHP+iOk8tktKfMm1A8WJIldFP+chjDj9AbD78="
      },
      "power": "10",
      "name": ""
    },
    {
      "address": "73BFE884FB6C97F170FED9A9699EDB1B3E5341C4",
      "pub_key": {
        "type": "tendermint/PubKeyEd25519",
        "value": "xpPW7WNXZYJnUCOLT/Uv1czyfLEhpiDo7jN/DS/Ad2s="
      },
      "power": "10",
      "name": ""
    }
  ],
  "app_hash": ""
}
```

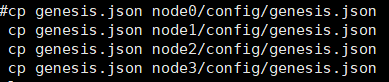
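The line-based script above depends on the exact layout of the generated files and breaks if that layout changes. A sketch of the same merge done with Python's `json` module (an alternative, not the author's method) is less fragile: it keeps node0's file as the template, checks that all chain IDs agree, and concatenates the `validators` arrays. The function names are hypothetical; the paths are the volume paths from the docker-compose file.

```python
import json

GENESIS_PATHS = ["/usr/local/node%d/config/genesis.json" % i for i in range(4)]


def merge_genesis(docs):
    """Merge parsed genesis documents into one.

    The first document supplies genesis_time, chain_id, consensus_params
    and app_hash; the validators of all documents are concatenated.
    """
    chain_ids = {d["chain_id"] for d in docs}
    if len(chain_ids) != 1:
        raise ValueError("chain_id differs between nodes: %s" % sorted(chain_ids))
    merged = dict(docs[0])  # shallow copy, so docs[0] itself is not modified
    merged["validators"] = [v for d in docs for v in d["validators"]]
    return merged


def write_unified_genesis(paths=GENESIS_PATHS, out="./genesis.json"):
    """Read every node's genesis.json and write the merged result to `out`."""
    docs = []
    for path in paths:
        with open(path) as f:
            docs.append(json.load(f))
    with open(out, "w") as f:
        json.dump(merge_genesis(docs), f, indent=2)
```

On the host, calling `write_unified_genesis()` produces `./genesis.json` like the original script, but a mismatched chain ID raises an error instead of silently producing an invalid file.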

Overwrite each node's original genesis.json with this file.

6 Start the cluster

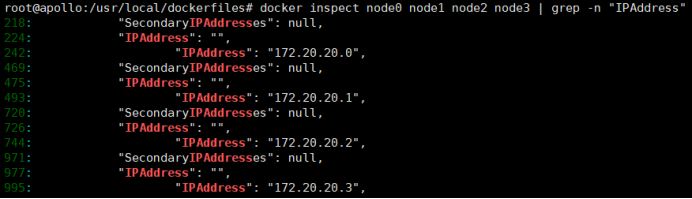

If you did not assign container IPs manually in docker-compose, look them up with docker inspect:

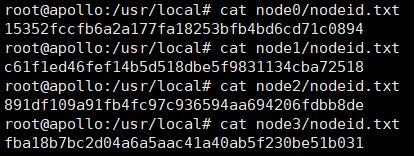

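Next, look up each node's node ID. Because of the volume mounts, and because each container redirects its node ID to nodeid.txt at startup, the IDs can be read directly on the host.

Finally, assemble one start command that is identical for every node, and run it inside each container:

```shell
./tendermint node --p2p.persistent_peers="15352fccfb6a2a177fa18253bfb4bd6cd71c0894@172.20.20.0:26656,c61f1ed46fef14b5d518dbe5f9831134cba72518@172.20.20.1:26656,891df109a91fb4fc97c936594aa694206fdbb8de@172.20.20.2:26656,fba18b7bc2d04a6a5aac41a40ab5f230be51b031@172.20.20.3:26656"
```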
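Writing the peer list by hand is error-prone. A small Python sketch that assembles it from the `nodeid.txt` files on the host (paths from the docker-compose volumes) and the static IPs assigned in section 4 could look like this. `build_persistent_peers` and `read_node_ids` are hypothetical helper names; 26656 is Tendermint's default P2P port, as used in the command above.

```python
NODE_IPS = ["172.20.20.%d" % i for i in range(4)]
P2P_PORT = 26656  # Tendermint's default P2P listen port


def build_persistent_peers(node_ids, ips, port=P2P_PORT):
    """Join node IDs and IPs into the id@ip:port,... format expected by
    --p2p.persistent_peers."""
    if len(node_ids) != len(ips):
        raise ValueError("need exactly one IP per node ID")
    return ",".join("%s@%s:%d" % (nid, ip, port) for nid, ip in zip(node_ids, ips))


def read_node_ids(n=4):
    """Read the node IDs that each container wrote to its nodeid.txt."""
    ids = []
    for i in range(n):
        with open("/usr/local/node%d/nodeid.txt" % i) as f:
            ids.append(f.read().strip())
    return ids
```

On the host, `'./tendermint node --p2p.persistent_peers="%s"' % build_persistent_peers(read_node_ids(), NODE_IPS)` yields a command of the same shape as the one above.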

7 Verify that the cluster is running

7.1 Method 1:

As shown, node2 has 3 peers.
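The peer count can also be read from each node's `/net_info` RPC endpoint. The Python sketch below assumes the host port mapping from the docker-compose file; `peer_count` and `fetch` are hypothetical helper names, and unreachable nodes are reported rather than aborting the check.

```python
import json
import urllib.request

# Host ports mapped to each node's RPC port 26657 in docker-compose.
RPC_PORTS = {"node0": 26657, "node1": 36657, "node2": 46657, "node3": 56657}


def peer_count(net_info):
    """Extract the peer count from a parsed /net_info JSON-RPC response."""
    # int() accepts both "3" and 3, whichever form the RPC returns.
    return int(net_info["result"]["n_peers"])


def fetch(port, path):
    url = "http://localhost:%d/%s" % (port, path)
    with urllib.request.urlopen(url, timeout=3) as resp:
        return json.load(resp)


if __name__ == "__main__":
    for name, port in RPC_PORTS.items():
        try:
            print(name, "peers:", peer_count(fetch(port, "net_info")))
        except OSError as err:  # connection refused, timeout, HTTP error
            print(name, "unreachable:", err)
```

In a healthy four-node cluster every node should report 3 peers.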

7.2 Method 2:

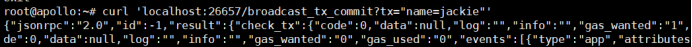

Send the transaction name=jackie to node0:

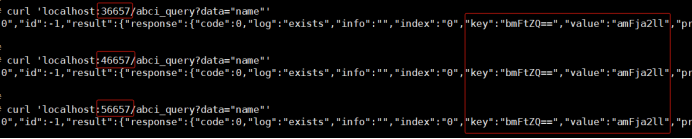

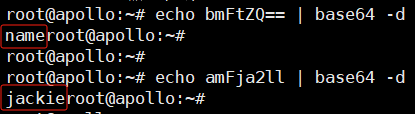

Query the transaction from node1, node2 and node3; the result comes back base64-encoded.

Decode the result from base64:

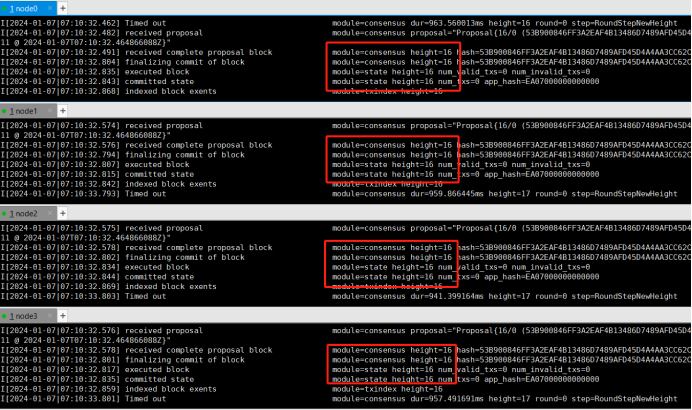
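The query-and-decode round trip can also be scripted. The sketch below assumes the kvstore example app and the host port mapping from the docker-compose file; `query` and `decode_query` are hypothetical helper names. It calls the `/abci_query` RPC endpoint with a quoted string argument, the same form one would use with curl (`/abci_query?data="name"`).

```python
import base64
import json
import urllib.parse
import urllib.request


def decode_query(resp):
    """Decode key and value of a parsed /abci_query JSON-RPC response.

    The RPC returns both fields base64-encoded; a missing field
    (e.g. a key that does not exist) decodes to an empty string.
    """
    r = resp["result"]["response"]
    key = base64.b64decode(r.get("key") or "").decode()
    value = base64.b64decode(r.get("value") or "").decode()
    return key, value


def query(port, key):
    data = urllib.parse.quote('"%s"' % key)  # -> %22name%22
    url = "http://localhost:%d/abci_query?data=%s" % (port, data)
    with urllib.request.urlopen(url, timeout=3) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # node1..node3 are mapped to host ports 36657, 46657 and 56657.
    for port in (36657, 46657, 56657):
        try:
            print(port, decode_query(query(port, "name")))
        except OSError as err:
            print(port, "unreachable:", err)
```

For the transaction above, `bmFtZQ==` and `amFja2ll` decode to `name` and `jackie`.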

Submitting transactions from the command line quickly gets tedious, so let's write a simple test program that submits 500 transactions to node0:

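```go
package main

import (
	"context"
	"strconv"

	// Tendermint's RPC client package (named http), not net/http.
	"github.com/tendermint/tendermint/rpc/client/http"
	"github.com/tendermint/tendermint/types"
)

func main() {
	cli, err := http.New("http://localhost:26657", "/websocket")
	if err != nil {
		panic(err)
	}
	// Submit txs "0".."499"; BroadcastTxAsync does not wait for CheckTx.
	for i := 0; i < 500; i++ {
		_, err = cli.BroadcastTxAsync(context.TODO(), types.Tx(strconv.Itoa(i)))
		if err != nil {
			panic(err)
		}
	}
}
```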

All four nodes end up synced to the same block height.
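One way to check this without screenshots is to compare `sync_info.latest_block_height` from each node's `/status` RPC endpoint. This Python sketch assumes the host port mapping from the docker-compose file and uses hypothetical helper names; since heights can briefly differ by one while a block is being committed, a single mismatch is worth re-checking before drawing conclusions.

```python
import json
import urllib.request

# Host ports mapped to each node's RPC port 26657 in docker-compose.
RPC_PORTS = {"node0": 26657, "node1": 36657, "node2": 46657, "node3": 56657}


def latest_height(status):
    """Latest block height from a parsed /status JSON-RPC response."""
    return int(status["result"]["sync_info"]["latest_block_height"])


def heights_in_sync(heights):
    """True if every reachable node reports the same latest height."""
    return len(set(heights.values())) == 1


if __name__ == "__main__":
    heights = {}
    for name, port in RPC_PORTS.items():
        url = "http://localhost:%d/status" % port
        try:
            with urllib.request.urlopen(url, timeout=3) as resp:
                heights[name] = latest_height(json.load(resp))
        except OSError as err:
            print(name, "unreachable:", err)
    print(heights, "in sync" if heights and heights_in_sync(heights) else "not in sync")
```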

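If we take node0 down, the other three nodes log messages reporting that node0 is down.

Finally, shut down all containers gracefully.

That completes the setup.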
