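- 🍨 This post is a study log from the 🔗365天深度学习训练营 (365-day deep learning training camp)

- 🍖 Original author: K同学啊 | tutoring and custom projects available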

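Contents

- Environment

- Steps

- Environment setup

- Data preparation

- Inspecting the images

- Model design

- ResidualBlock

- Stacking the blocks

- ResNet50v2 model

- Model training

- Model results

- Summary and takeaways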

Environment

- OS: Linux

- Language: Python 3.8.10

- Deep learning framework: PyTorch 2.0.0+cu118

Steps

Environment setup

Imports

import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, random_split
from torchvision import datasets, transforms
import copy, random, pathlib
import matplotlib.pyplot as plt
from PIL import Image
from torchinfo import summary
import numpy as np

Set a global device so that the model and the data are later placed on the same device

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

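Data preparation

Download the bird dataset from the cloud drive shared by K同学啊 and extract it into the data directory. The dataset is structured as follows:

Each subfolder of bird_photos holds the images of one bird class, so this layout can be loaded directly with torchvision.datasets.ImageFolder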
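To make the folder-to-label convention concrete, here is a minimal sketch of the mapping ImageFolder derives from such a layout: subfolder names are sorted and numbered from 0. The folder names below are hypothetical placeholders, not the real class names of this dataset, and the helper `folder_class_to_idx` exists only for this illustration.

```python
import pathlib
import tempfile


def folder_class_to_idx(root):
    """Mimic how ImageFolder labels classes: sorted subfolder names -> 0..N-1."""
    classes = sorted(p.name for p in pathlib.Path(root).iterdir() if p.is_dir())
    return {name: idx for idx, name in enumerate(classes)}


# Build a throwaway directory tree with hypothetical class folders.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["sparrow", "eagle", "owl"]:  # hypothetical class names
        (pathlib.Path(tmp) / name).mkdir()
    mapping = folder_class_to_idx(tmp)

print(mapping)
```

The label of each image is therefore determined by the alphabetical position of its folder, not by the order in which the folders were created.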

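Inspecting the images

1. Collect all image paths

root_dir = 'data/bird_photos'
root_directory = pathlib.Path(root_dir)
# glob() returns a generator; random.choice() needs a sequence, so build a list
image_list = list(root_directory.glob("*/*"))

2. Print the shape of 5 random images

for _ in range(5):
    print(np.array(Image.open(str(random.choice(image_list)))).shape)

The sampled images are all 224×224 with three channels, so the Resize step can be omitted during preprocessing. Alternatively, keep a Resize to 224 as a guard against outliers

3. Display 20 random images

plt.figure(figsize=(20, 4))
for i in range(20):
    plt.subplot(2, 10, i+1)
    plt.axis('off')
    image = random.choice(image_list)
    class_name = image.parts[-2]  # the parent folder name is the class name
    plt.title(class_name)
    plt.imshow(Image.open(str(image)))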

4. Create the dataset

First, define the image preprocessing

transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
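As a quick illustration of what this preprocessing does to a single pixel: ToTensor scales 8-bit values into [0, 1], and Normalize then computes (x - mean) / std per channel. The sketch below redoes that arithmetic in plain Python. The sample pixel and the helper name `normalize_pixel` are made up for the example.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb_uint8):
    """Apply the ToTensor scaling (divide by 255) and the per-channel Normalize."""
    scaled = [v / 255.0 for v in rgb_uint8]
    return [(x - m) / s for x, m, s in zip(scaled, MEAN, STD)]


pixel = (128, 128, 128)  # hypothetical mid-grey pixel
normalized = normalize_pixel(pixel)
print([round(v, 3) for v in normalized])
```

The same grey value ends up different in each channel because every channel has its own mean and standard deviation.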

Then load the folder with datasets.ImageFolder

dataset = datasets.ImageFolder(root_dir, transform=transform)

Extract the class names from the dataset

class_names = [x for x in dataset.class_to_idx]

Split into a training set and a validation set

train_size = int(len(dataset) * 0.8)
test_size = len(dataset) - train_size
train_dataset, test_dataset = random_split(dataset, [train_size, test_size])

Finally, wrap the datasets in batched loaders

batch_size = 8
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
test_loader = DataLoader(test_dataset, batch_size=batch_size)
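The split and batching arithmetic can be checked on a stand-in dataset. The sketch below uses a TensorDataset of 565 dummy items (a size chosen for the example, not stated in the post) and passes a seeded generator to random_split. The seed is an addition for repeatability and is not part of the original code.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split

# Stand-in dataset: 565 dummy items (a hypothetical size for this example).
toy_dataset = TensorDataset(torch.arange(565))

train_size = int(len(toy_dataset) * 0.8)
test_size = len(toy_dataset) - train_size

# A seeded generator makes the random split repeatable between runs.
generator = torch.Generator().manual_seed(42)
train_ds, test_ds = random_split(toy_dataset, [train_size, test_size], generator=generator)

train_loader = DataLoader(train_ds, shuffle=True, batch_size=8)
test_loader = DataLoader(test_ds, batch_size=8)

# len(loader) is the number of batches; the last batch may hold fewer than 8 items.
print(len(train_ds), len(test_ds), len(train_loader), len(test_loader))
```

Computing test_size as the remainder, as the post does, guarantees the two parts always add up to the full dataset even when the 80% figure is not a whole number.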

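Model design

Building on the ResNet-50 from the previous post, v2 mainly changes the order of BatchNormalization and the activation function: both are now applied before the convolution (pre-activation).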
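The difference in ordering can be sketched with two tiny units. This is only an illustration of the layer order, not the blocks used in this post: the channel count is arbitrary, and the names `post_act` and `pre_act` are made up for the example.

```python
import torch
import torch.nn as nn

channels = 8  # hypothetical channel count for the demo

# v1-style unit: convolution first, then BN and ReLU.
post_act = nn.Sequential(
    nn.Conv2d(channels, channels, 3, padding=1, bias=False),
    nn.BatchNorm2d(channels),
    nn.ReLU(),
)

# v2-style unit: BN and ReLU first, then the convolution.
pre_act = nn.Sequential(
    nn.BatchNorm2d(channels),
    nn.ReLU(),
    nn.Conv2d(channels, channels, 3, padding=1, bias=False),
)

x = torch.randn(2, channels, 16, 16)
y_post = post_act(x)
y_pre = pre_act(x)

# Both units keep the tensor shape; only the position of BN/ReLU differs.
print(y_post.shape, y_pre.shape)
# The v1 unit ends in ReLU, so its output is never negative;
# the v2 unit ends in a convolution, so its output can be.
print(bool((y_post >= 0).all()))
```

Because the v2 unit does not end in a ReLU, the value added to the shortcut is not clipped at zero, which is the property the pre-activation design relies on.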

ResidualBlock

class ResidualBlock(nn.Module):
    def __init__(self, input_size, filters, kernel_size=3, stride=1, conv_shortcut=False):
        super().__init__()
        # Pre-activation: BN and ReLU are applied before the convolutions
        self.preact = nn.Sequential(nn.BatchNorm2d(input_size), nn.ReLU())
        self.conv_shortcut = conv_shortcut
        if conv_shortcut:
            # Projection shortcut: 1x1 convolution to match the output channels
            self.shortcut = nn.Conv2d(input_size, 4*filters, 1, stride=stride, bias=False)
        elif stride > 1:
            # Parameter-free downsampling shortcut
            self.shortcut = nn.MaxPool2d(1, stride=stride)
        else:
            self.shortcut = nn.Identity()
        self.conv1 = nn.Sequential(
            nn.Conv2d(input_size, filters, 1, stride=1, bias=False),
            nn.BatchNorm2d(filters),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(filters, filters, kernel_size, padding=1, stride=stride, bias=False),
            nn.BatchNorm2d(filters),
            nn.ReLU()
        )
        self.conv3 = nn.Conv2d(filters, 4*filters, 1, bias=False)

    def forward(self, x):
        pre = self.preact(x)
        # The projection shortcut takes the pre-activated input, the others take the raw input
        if self.conv_shortcut:
            shortcut = self.shortcut(pre)
        else:
            shortcut = self.shortcut(x)
        x = self.conv1(pre)
        x = self.conv2(x)
        x = self.conv3(x)
        x = x + shortcut
        return x
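The stride > 1 branch uses nn.MaxPool2d(1, stride=stride) as a shortcut. A small check, run on an arbitrary toy tensor, shows that with a 1x1 window this is plain subsampling: it keeps every second row and column and has no learnable parameters.

```python
import torch
import torch.nn as nn

# A 1x1 max-pool window sees exactly one value, so with stride 2 it
# simply keeps every second row and column.
shortcut = nn.MaxPool2d(1, stride=2)

x = torch.arange(2 * 3 * 6 * 6, dtype=torch.float32).reshape(2, 3, 6, 6)
y = shortcut(x)

print(y.shape)
print(torch.equal(y, x[:, :, ::2, ::2]))
```

This keeps the shortcut path at the same spatial size as the strided main path, so the two can be added.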

Stacking the blocks

class ResidualStack(nn.Module):
    def __init__(self, input_size, filters, blocks, stride=2):
        super().__init__()
        # First block: projection shortcut, changes the channel count
        self.first = ResidualBlock(input_size, filters, conv_shortcut=True)
        # Middle blocks: identity shortcut
        self.module_list = nn.ModuleList([])
        for i in range(2, blocks):
            self.module_list.append(ResidualBlock(filters*4, filters))
        # Last block: applies the stride (downsampling)
        self.last = ResidualBlock(filters*4, filters, stride=stride)

    def forward(self, x):
        x = self.first(x)
        for layer in self.module_list:
            x = layer(x)
        x = self.last(x)
        return x
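To see which block lands where, the constructor logic above can be replayed without building any layers. The helper `stack_layout` below is a hypothetical illustration: it lists, for each block, the input channels, output channels, stride and whether the shortcut is a convolution. The four configurations are the ones passed to ResidualStack by the ResNet50v2 class in the next section.

```python
def stack_layout(input_size, filters, blocks, stride=2):
    """List (in_channels, out_channels, stride, conv_shortcut) per block,
    in the order ResidualStack creates them."""
    layout = [(input_size, 4 * filters, 1, True)]             # first: projection shortcut
    for _ in range(2, blocks):                                  # middle: identity shortcut
        layout.append((4 * filters, 4 * filters, 1, False))
    layout.append((4 * filters, 4 * filters, stride, False))  # last: applies the stride
    return layout


for row in stack_layout(64, 64, 3):
    print(row)

# Stack configurations used by the model: (input_size, filters, blocks, stride).
configs = [(64, 64, 3, 2), (256, 128, 4, 2), (512, 256, 6, 2), (1024, 512, 3, 1)]
total_blocks = sum(len(stack_layout(*cfg)) for cfg in configs)
print(total_blocks)
```

Each stack always has one first and one last block, so `blocks` must be at least 2 for the count to match.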

ResNet50v2 model

class ResNet50v2(nn.Module):
    def __init__(self, include_top=True, preact=True, use_bias=True, input_size=None, pooling=None, classes=1000, classifier_activation='softmax'):
        super().__init__()
        self.input_conv = nn.Conv2d(3, 64, 7, padding=3, stride=2, bias=use_bias)
        if not preact:
            self.pre = nn.Sequential(nn.BatchNorm2d(64), nn.ReLU())
        else:
            self.pre = nn.Identity()
        self.pool = nn.MaxPool2d(3, padding=1, stride=2)
        self.stack1 = ResidualStack(64, 64, 3)
        self.stack2 = ResidualStack(256, 128, 4)
        self.stack3 = ResidualStack(512, 256, 6)
        self.stack4 = ResidualStack(1024, 512, 3, stride=1)
        if preact:
            # With pre-activation blocks, the last block output still needs BN + ReLU
            self.post = nn.Sequential(nn.BatchNorm2d(2048), nn.ReLU())
        else:
            self.post = nn.Identity()
        if include_top:
            self.final = nn.Sequential(
                nn.AdaptiveAvgPool2d(1),
                nn.Flatten(),
                nn.Linear(2048, classes),
                # dim=1 is stated explicitly to avoid the implicit-dimension warning
                nn.Softmax(dim=1) if 'softmax' == classifier_activation else nn.Identity()
            )
        elif 'avg' == pooling:
            self.final = nn.AdaptiveAvgPool2d(1)
        elif 'max' == pooling:
            self.final = nn.AdaptiveMaxPool2d(1)
        else:
            # No head and no pooling: return the feature map unchanged
            self.final = nn.Identity()

    def forward(self, x):
        x = self.input_conv(x)
        x = self.pre(x)
        x = self.pool(x)
        x = self.stack1(x)
        x = self.stack2(x)
        x = self.stack3(x)
        x = self.stack4(x)
        x = self.post(x)
        x = self.final(x)
        return x

Create the model and print its summary

model = ResNet50v2(classes=len(class_names)).to(device)

summary(model, input_size=(8, 3, 224, 224))

===============================================================================================

Layer (type:depth-idx) Output Shape Param #

===============================================================================================

ResNet50v2 [8, 4] --

├─Conv2d: 1-1 [8, 64, 112, 112] 9,472

├─Identity: 1-2 [8, 64, 112, 112] --

├─MaxPool2d: 1-3 [8, 64, 56, 56] --

├─ResidualStack: 1-4 [8, 256, 28, 28] --

│ └─ResidualBlock: 2-1 [8, 256, 56, 56] --

│ │ └─Sequential: 3-1 [8, 64, 56, 56] 128

│ │ └─Conv2d: 3-2 [8, 256, 56, 56] 16,384

│ │ └─Sequential: 3-3 [8, 64, 56, 56] 4,224

│ │ └─Sequential: 3-4 [8, 64, 56, 56] 36,992

│ │ └─Conv2d: 3-5 [8, 256, 56, 56] 16,384

│ └─ModuleList: 2-2 -- --

│ │ └─ResidualBlock: 3-6 [8, 256, 56, 56] 70,400

│ └─ResidualBlock: 2-3 [8, 256, 28, 28] --

│ │ └─Sequential: 3-7 [8, 256, 56, 56] 512

│ │ └─MaxPool2d: 3-8 [8, 256, 28, 28] --

│ │ └─Sequential: 3-9 [8, 64, 56, 56] 16,512

│ │ └─Sequential: 3-10 [8, 64, 28, 28] 36,992

│ │ └─Conv2d: 3-11 [8, 256, 28, 28] 16,384

├─ResidualStack: 1-5 [8, 512, 14, 14] --

│ └─ResidualBlock: 2-4 [8, 512, 28, 28] --

│ │ └─Sequential: 3-12 [8, 256, 28, 28] 512

│ │ └─Conv2d: 3-13 [8, 512, 28, 28] 131,072

│ │ └─Sequential: 3-14 [8, 128, 28, 28] 33,024

│ │ └─Sequential: 3-15 [8, 128, 28, 28] 147,712

│ │ └─Conv2d: 3-16 [8, 512, 28, 28] 65,536

│ └─ModuleList: 2-5 -- --

│ │ └─ResidualBlock: 3-17 [8, 512, 28, 28] 280,064

│ │ └─ResidualBlock: 3-18 [8, 512, 28, 28] 280,064

│ └─ResidualBlock: 2-6 [8, 512, 14, 14] --

│ │ └─Sequential: 3-19 [8, 512, 28, 28] 1,024

│ │ └─MaxPool2d: 3-20 [8, 512, 14, 14] --

│ │ └─Sequential: 3-21 [8, 128, 28, 28] 65,792

│ │ └─Sequential: 3-22 [8, 128, 14, 14] 147,712

│ │ └─Conv2d: 3-23 [8, 512, 14, 14] 65,536

├─ResidualStack: 1-6 [8, 1024, 7, 7] --

│ └─ResidualBlock: 2-7 [8, 1024, 14, 14] --

│ │ └─Sequential: 3-24 [8, 512, 14, 14] 1,024

│ │ └─Conv2d: 3-25 [8, 1024, 14, 14] 524,288

│ │ └─Sequential: 3-26 [8, 256, 14, 14] 131,584

│ │ └─Sequential: 3-27 [8, 256, 14, 14] 590,336

│ │ └─Conv2d: 3-28 [8, 1024, 14, 14] 262,144

│ └─ModuleList: 2-8 -- --

│ │ └─ResidualBlock: 3-29 [8, 1024, 14, 14] 1,117,184

│ │ └─ResidualBlock: 3-30 [8, 1024, 14, 14] 1,117,184

│ │ └─ResidualBlock: 3-31 [8, 1024, 14, 14] 1,117,184

│ │ └─ResidualBlock: 3-32 [8, 1024, 14, 14] 1,117,184

│ └─ResidualBlock: 2-9 [8, 1024, 7, 7] --

│ │ └─Sequential: 3-33 [8, 1024, 14, 14] 2,048

│ │ └─MaxPool2d: 3-34 [8, 1024, 7, 7] --

│ │ └─Sequential: 3-35 [8, 256, 14, 14] 262,656

│ │ └─Sequential: 3-36 [8, 256, 7, 7] 590,336

│ │ └─Conv2d: 3-37 [8, 1024, 7, 7] 262,144

├─ResidualStack: 1-7 [8, 2048, 7, 7] --

│ └─ResidualBlock: 2-10 [8, 2048, 7, 7] --

│ │ └─Sequential: 3-38 [8, 1024, 7, 7] 2,048

│ │ └─Conv2d: 3-39 [8, 2048, 7, 7] 2,097,152

│ │ └─Sequential: 3-40 [8, 512, 7, 7] 525,312

│ │ └─Sequential: 3-41 [8, 512, 7, 7] 2,360,320

│ │ └─Conv2d: 3-42 [8, 2048, 7, 7] 1,048,576

│ └─ModuleList: 2-11 -- --

│ │ └─ResidualBlock: 3-43 [8, 2048, 7, 7] 4,462,592

│ └─ResidualBlock: 2-12 [8, 2048, 7, 7] --

│ │ └─Sequential: 3-44 [8, 2048, 7, 7] 4,096

│ │ └─Identity: 3-45 [8, 2048, 7, 7] --

│ │ └─Sequential: 3-46 [8, 512, 7, 7] 1,049,600

│ │ └─Sequential: 3-47 [8, 512, 7, 7] 2,360,320

│ │ └─Conv2d: 3-48 [8, 2048, 7, 7] 1,048,576

├─Sequential: 1-8 [8, 2048, 7, 7] --

│ └─BatchNorm2d: 2-13 [8, 2048, 7, 7] 4,096

│ └─ReLU: 2-14 [8, 2048, 7, 7] --

├─Sequential: 1-9 [8, 4] --

│ └─AdaptiveAvgPool2d: 2-15 [8, 2048, 1, 1] --

│ └─Flatten: 2-16 [8, 2048] --

│ └─Linear: 2-17 [8, 4] 8,196

│ └─Softmax: 2-18 [8, 4] --

===============================================================================================

Total params: 23,508,612

Trainable params: 23,508,612

Non-trainable params: 0

Total mult-adds (G): 27.85

===============================================================================================

Input size (MB): 4.82

Forward/backward pass size (MB): 1051.69

Params size (MB): 94.03

Estimated Total Size (MB): 1150.54

===============================================================================================
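The shapes and a couple of the parameter counts in the summary can be cross-checked by hand. The sketch below applies the standard output-size formula for convolution and pooling to the layers that change the resolution, and recomputes the parameter counts of the input convolution and the final Linear layer. The helper `conv_out` is written for this illustration.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
trace = [size]
size = conv_out(size, kernel=7, stride=2, padding=3)   # input_conv
trace.append(size)
size = conv_out(size, kernel=3, stride=2, padding=1)   # pool
trace.append(size)
for stride in (2, 2, 2, 1):                            # last block of stack1..stack4
    size = conv_out(size, kernel=3, stride=stride, padding=1)
    trace.append(size)
print(trace)

# Parameter counts: weights = in * out * k * k, plus one bias per output if present.
input_conv_params = 3 * 64 * 7 * 7 + 64   # use_bias=True
linear_params = 2048 * 4 + 4              # 4 classes in this dataset
print(input_conv_params, linear_params)
```

The trace 224, 112, 56, 28, 14, 7, 7 and the counts 9,472 and 8,196 agree with the corresponding rows of the summary above.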

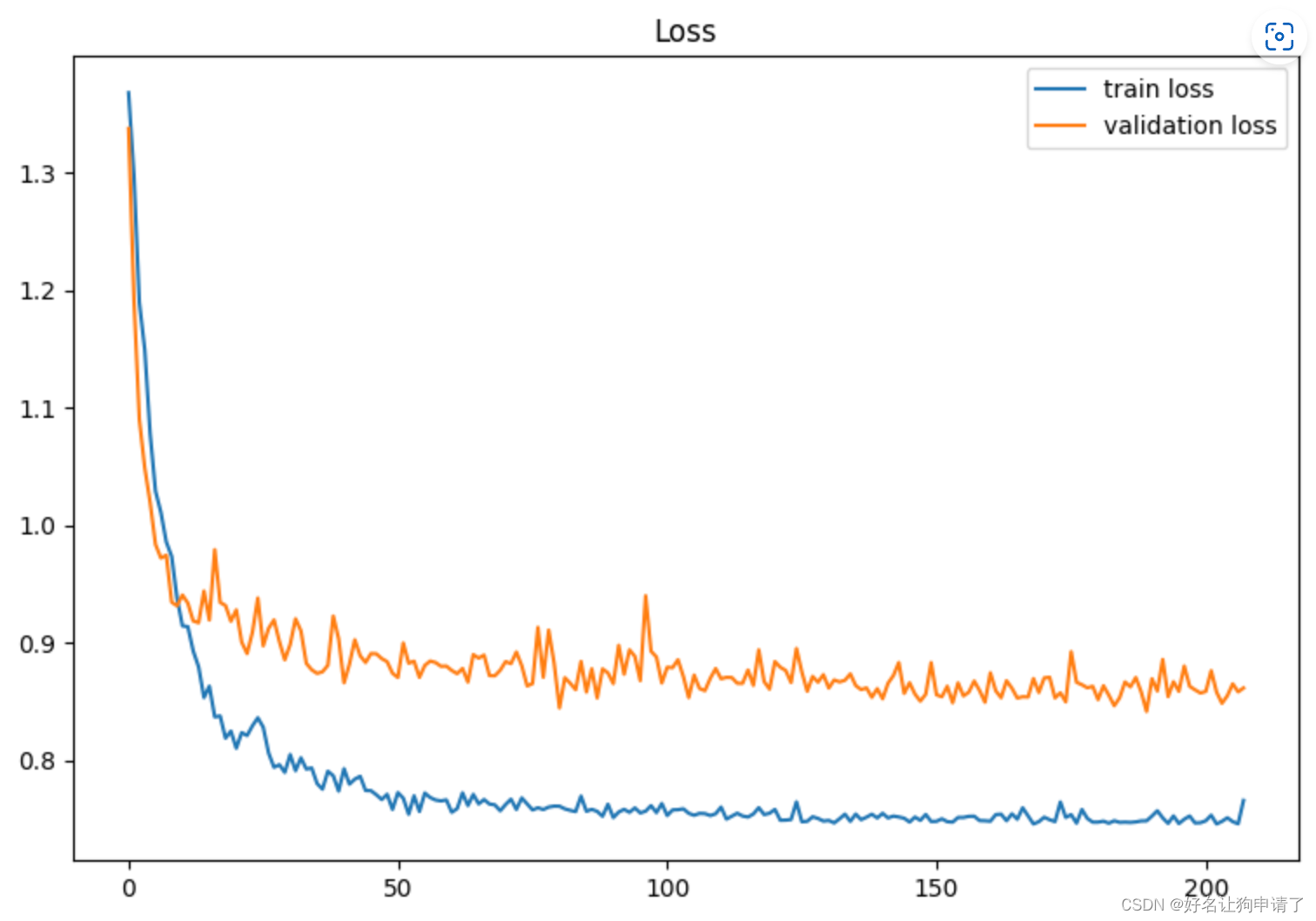

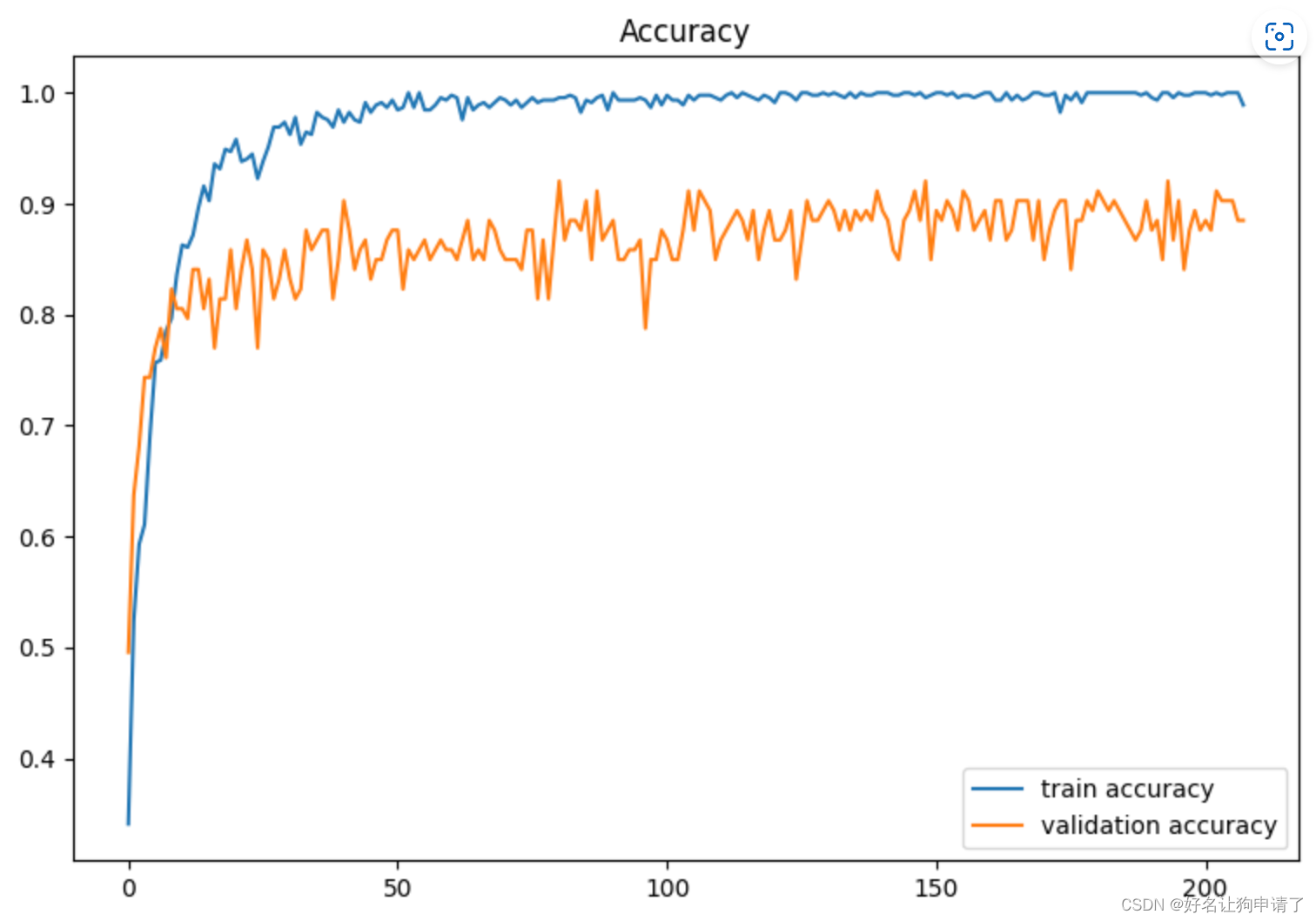

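Model training

The training code is identical to the previous post, so it is not repeated here. The training results are as follows: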

Epoch: 1, Lr:1e-05, TrainAcc: 34.1, TrainLoss: 1.368, TestAcc: 49.6, TestLoss: 1.338

Epoch: 2, Lr:1e-05, TrainAcc: 52.4, TrainLoss: 1.297, TestAcc: 63.7, TestLoss: 1.192

Epoch: 3, Lr:1e-05, TrainAcc: 59.3, TrainLoss: 1.190, TestAcc: 68.1, TestLoss: 1.090

Epoch: 4, Lr:1e-05, TrainAcc: 61.1, TrainLoss: 1.150, TestAcc: 74.3, TestLoss: 1.049

Epoch: 5, Lr:9.5e-06, TrainAcc: 69.0, TrainLoss: 1.078, TestAcc: 74.3, TestLoss: 1.019

Epoch: 6, Lr:9.5e-06, TrainAcc: 75.7, TrainLoss: 1.029, TestAcc: 77.0, TestLoss: 0.984

Epoch: 7, Lr:9.5e-06, TrainAcc: 75.9, TrainLoss: 1.011, TestAcc: 78.8, TestLoss: 0.972

Epoch: 8, Lr:9.5e-06, TrainAcc: 78.5, TrainLoss: 0.986, TestAcc: 76.1, TestLoss: 0.974

Epoch: 9, Lr:9.5e-06, TrainAcc: 79.6, TrainLoss: 0.973, TestAcc: 82.3, TestLoss: 0.934

Epoch: 10, Lr:9.025e-06, TrainAcc: 83.6, TrainLoss: 0.938, TestAcc: 80.5, TestLoss: 0.931

Epoch: 11, Lr:9.025e-06, TrainAcc: 86.3, TrainLoss: 0.914, TestAcc: 80.5, TestLoss: 0.940

Epoch: 12, Lr:9.025e-06, TrainAcc: 86.1, TrainLoss: 0.914, TestAcc: 79.6, TestLoss: 0.934

Epoch: 13, Lr:9.025e-06, TrainAcc: 87.2, TrainLoss: 0.893, TestAcc: 84.1, TestLoss: 0.918

Epoch: 14, Lr:9.025e-06, TrainAcc: 89.6, TrainLoss: 0.879, TestAcc: 84.1, TestLoss: 0.917

Epoch: 15, Lr:8.573749999999999e-06, TrainAcc: 91.6, TrainLoss: 0.853, TestAcc: 80.5, TestLoss: 0.944

Epoch: 16, Lr:8.573749999999999e-06, TrainAcc: 90.3, TrainLoss: 0.863, TestAcc: 83.2, TestLoss: 0.919

Epoch: 17, Lr:8.573749999999999e-06, TrainAcc: 93.6, TrainLoss: 0.837, TestAcc: 77.0, TestLoss: 0.979

Epoch: 18, Lr:8.573749999999999e-06, TrainAcc: 93.1, TrainLoss: 0.838, TestAcc: 81.4, TestLoss: 0.934

Epoch: 19, Lr:8.573749999999999e-06, TrainAcc: 94.9, TrainLoss: 0.819, TestAcc: 81.4, TestLoss: 0.932

Epoch: 20, Lr:8.1450625e-06, TrainAcc: 94.7, TrainLoss: 0.825, TestAcc: 85.8, TestLoss: 0.918

Epoch: 21, Lr:8.1450625e-06, TrainAcc: 95.8, TrainLoss: 0.810, TestAcc: 80.5, TestLoss: 0.928

Epoch: 22, Lr:8.1450625e-06, TrainAcc: 93.8, TrainLoss: 0.823, TestAcc: 84.1, TestLoss: 0.900

Epoch: 23, Lr:8.1450625e-06, TrainAcc: 94.0, TrainLoss: 0.821, TestAcc: 86.7, TestLoss: 0.891

Epoch: 24, Lr:8.1450625e-06, TrainAcc: 94.5, TrainLoss: 0.829, TestAcc: 84.1, TestLoss: 0.909

Epoch: 25, Lr:7.737809374999999e-06, TrainAcc: 92.3, TrainLoss: 0.836, TestAcc: 77.0, TestLoss: 0.938

Epoch: 26, Lr:7.737809374999999e-06, TrainAcc: 93.8, TrainLoss: 0.828, TestAcc: 85.8, TestLoss: 0.897

Epoch: 27, Lr:7.737809374999999e-06, TrainAcc: 95.1, TrainLoss: 0.806, TestAcc: 85.0, TestLoss: 0.912

Epoch: 28, Lr:7.737809374999999e-06, TrainAcc: 96.9, TrainLoss: 0.794, TestAcc: 81.4, TestLoss: 0.919

Epoch: 29, Lr:7.737809374999999e-06, TrainAcc: 96.9, TrainLoss: 0.796, TestAcc: 83.2, TestLoss: 0.901

Epoch: 30, Lr:7.350918906249998e-06, TrainAcc: 97.3, TrainLoss: 0.789, TestAcc: 85.8, TestLoss: 0.885

Epoch: 31, Lr:7.350918906249998e-06, TrainAcc: 96.2, TrainLoss: 0.805, TestAcc: 83.2, TestLoss: 0.898

Epoch: 32, Lr:7.350918906249998e-06, TrainAcc: 97.8, TrainLoss: 0.791, TestAcc: 81.4, TestLoss: 0.920

Epoch: 33, Lr:7.350918906249998e-06, TrainAcc: 95.4, TrainLoss: 0.802, TestAcc: 82.3, TestLoss: 0.910

Epoch: 34, Lr:7.350918906249998e-06, TrainAcc: 96.5, TrainLoss: 0.792, TestAcc: 87.6, TestLoss: 0.882

Epoch: 35, Lr:6.983372960937498e-06, TrainAcc: 96.2, TrainLoss: 0.793, TestAcc: 85.8, TestLoss: 0.877

Epoch: 36, Lr:6.983372960937498e-06, TrainAcc: 98.2, TrainLoss: 0.780, TestAcc: 86.7, TestLoss: 0.874

Epoch: 37, Lr:6.983372960937498e-06, TrainAcc: 97.8, TrainLoss: 0.775, TestAcc: 87.6, TestLoss: 0.875

Epoch: 38, Lr:6.983372960937498e-06, TrainAcc: 97.6, TrainLoss: 0.790, TestAcc: 87.6, TestLoss: 0.881

Epoch: 39, Lr:6.983372960937498e-06, TrainAcc: 96.9, TrainLoss: 0.787, TestAcc: 81.4, TestLoss: 0.923

Epoch: 40, Lr:6.634204312890623e-06, TrainAcc: 98.5, TrainLoss: 0.774, TestAcc: 85.0, TestLoss: 0.903

Epoch: 41, Lr:6.634204312890623e-06, TrainAcc: 97.3, TrainLoss: 0.793, TestAcc: 90.3, TestLoss: 0.866

Epoch: 42, Lr:6.634204312890623e-06, TrainAcc: 98.2, TrainLoss: 0.779, TestAcc: 87.6, TestLoss: 0.882

Epoch: 43, Lr:6.634204312890623e-06, TrainAcc: 97.6, TrainLoss: 0.784, TestAcc: 84.1, TestLoss: 0.902

Epoch: 44, Lr:6.634204312890623e-06, TrainAcc: 97.3, TrainLoss: 0.786, TestAcc: 85.8, TestLoss: 0.889

Epoch: 45, Lr:6.302494097246091e-06, TrainAcc: 99.1, TrainLoss: 0.774, TestAcc: 86.7, TestLoss: 0.883

Epoch: 46, Lr:6.302494097246091e-06, TrainAcc: 98.2, TrainLoss: 0.774, TestAcc: 83.2, TestLoss: 0.891

Epoch: 47, Lr:6.302494097246091e-06, TrainAcc: 98.9, TrainLoss: 0.770, TestAcc: 85.0, TestLoss: 0.890

Epoch: 48, Lr:6.302494097246091e-06, TrainAcc: 99.1, TrainLoss: 0.766, TestAcc: 85.0, TestLoss: 0.886

Epoch: 49, Lr:6.302494097246091e-06, TrainAcc: 98.7, TrainLoss: 0.771, TestAcc: 86.7, TestLoss: 0.884

Epoch: 50, Lr:5.987369392383788e-06, TrainAcc: 99.3, TrainLoss: 0.758, TestAcc: 87.6, TestLoss: 0.874

Epoch: 51, Lr:5.987369392383788e-06, TrainAcc: 98.5, TrainLoss: 0.772, TestAcc: 87.6, TestLoss: 0.870

Epoch: 52, Lr:5.987369392383788e-06, TrainAcc: 98.7, TrainLoss: 0.767, TestAcc: 82.3, TestLoss: 0.900

Epoch: 53, Lr:5.987369392383788e-06, TrainAcc: 100.0, TrainLoss: 0.754, TestAcc: 85.8, TestLoss: 0.882

Epoch: 54, Lr:5.987369392383788e-06, TrainAcc: 98.7, TrainLoss: 0.770, TestAcc: 85.0, TestLoss: 0.884

Epoch: 55, Lr:5.688000922764597e-06, TrainAcc: 100.0, TrainLoss: 0.756, TestAcc: 85.8, TestLoss: 0.870

Epoch: 56, Lr:5.688000922764597e-06, TrainAcc: 98.5, TrainLoss: 0.772, TestAcc: 86.7, TestLoss: 0.881

Epoch: 57, Lr:5.688000922764597e-06, TrainAcc: 98.5, TrainLoss: 0.768, TestAcc: 85.0, TestLoss: 0.884

Epoch: 58, Lr:5.688000922764597e-06, TrainAcc: 98.9, TrainLoss: 0.766, TestAcc: 85.8, TestLoss: 0.883

Epoch: 59, Lr:5.688000922764597e-06, TrainAcc: 99.6, TrainLoss: 0.765, TestAcc: 86.7, TestLoss: 0.880

Epoch: 60, Lr:5.403600876626367e-06, TrainAcc: 99.3, TrainLoss: 0.766, TestAcc: 85.8, TestLoss: 0.880

Epoch: 61, Lr:5.403600876626367e-06, TrainAcc: 99.8, TrainLoss: 0.755, TestAcc: 85.8, TestLoss: 0.876

Epoch: 62, Lr:5.403600876626367e-06, TrainAcc: 99.6, TrainLoss: 0.759, TestAcc: 85.0, TestLoss: 0.874

Epoch: 63, Lr:5.403600876626367e-06, TrainAcc: 97.6, TrainLoss: 0.772, TestAcc: 86.7, TestLoss: 0.878

Epoch: 64, Lr:5.403600876626367e-06, TrainAcc: 99.6, TrainLoss: 0.761, TestAcc: 88.5, TestLoss: 0.866

Epoch: 65, Lr:5.133420832795049e-06, TrainAcc: 98.5, TrainLoss: 0.771, TestAcc: 85.0, TestLoss: 0.890

Epoch: 66, Lr:5.133420832795049e-06, TrainAcc: 98.9, TrainLoss: 0.763, TestAcc: 85.8, TestLoss: 0.887

Epoch: 67, Lr:5.133420832795049e-06, TrainAcc: 99.1, TrainLoss: 0.766, TestAcc: 85.0, TestLoss: 0.889

Epoch: 68, Lr:5.133420832795049e-06, TrainAcc: 98.7, TrainLoss: 0.763, TestAcc: 88.5, TestLoss: 0.872

Epoch: 69, Lr:5.133420832795049e-06, TrainAcc: 99.1, TrainLoss: 0.762, TestAcc: 87.6, TestLoss: 0.872

Epoch: 70, Lr:4.876749791155296e-06, TrainAcc: 99.6, TrainLoss: 0.757, TestAcc: 85.8, TestLoss: 0.876

Epoch: 71, Lr:4.876749791155296e-06, TrainAcc: 99.3, TrainLoss: 0.762, TestAcc: 85.0, TestLoss: 0.884

Epoch: 72, Lr:4.876749791155296e-06, TrainAcc: 98.9, TrainLoss: 0.767, TestAcc: 85.0, TestLoss: 0.882

Epoch: 73, Lr:4.876749791155296e-06, TrainAcc: 99.3, TrainLoss: 0.758, TestAcc: 85.0, TestLoss: 0.892

Epoch: 74, Lr:4.876749791155296e-06, TrainAcc: 98.7, TrainLoss: 0.768, TestAcc: 84.1, TestLoss: 0.880

Epoch: 75, Lr:4.632912301597531e-06, TrainAcc: 99.1, TrainLoss: 0.763, TestAcc: 87.6, TestLoss: 0.863

Epoch: 76, Lr:4.632912301597531e-06, TrainAcc: 99.6, TrainLoss: 0.757, TestAcc: 87.6, TestLoss: 0.865

Epoch: 77, Lr:4.632912301597531e-06, TrainAcc: 99.1, TrainLoss: 0.759, TestAcc: 81.4, TestLoss: 0.913

Epoch: 78, Lr:4.632912301597531e-06, TrainAcc: 99.3, TrainLoss: 0.758, TestAcc: 86.7, TestLoss: 0.870

Epoch: 79, Lr:4.632912301597531e-06, TrainAcc: 99.3, TrainLoss: 0.760, TestAcc: 81.4, TestLoss: 0.911

Epoch: 80, Lr:4.401266686517654e-06, TrainAcc: 99.3, TrainLoss: 0.761, TestAcc: 86.7, TestLoss: 0.883

Epoch: 81, Lr:4.401266686517654e-06, TrainAcc: 99.6, TrainLoss: 0.761, TestAcc: 92.0, TestLoss: 0.844

Epoch: 82, Lr:4.401266686517654e-06, TrainAcc: 99.6, TrainLoss: 0.758, TestAcc: 86.7, TestLoss: 0.870

Epoch: 83, Lr:4.401266686517654e-06, TrainAcc: 99.8, TrainLoss: 0.757, TestAcc: 88.5, TestLoss: 0.865

Epoch: 84, Lr:4.401266686517654e-06, TrainAcc: 99.6, TrainLoss: 0.756, TestAcc: 88.5, TestLoss: 0.860

Epoch: 85, Lr:4.181203352191771e-06, TrainAcc: 98.2, TrainLoss: 0.769, TestAcc: 87.6, TestLoss: 0.884

Epoch: 86, Lr:4.181203352191771e-06, TrainAcc: 99.3, TrainLoss: 0.756, TestAcc: 90.3, TestLoss: 0.858

Epoch: 87, Lr:4.181203352191771e-06, TrainAcc: 99.1, TrainLoss: 0.758, TestAcc: 85.0, TestLoss: 0.878

Epoch: 88, Lr:4.181203352191771e-06, TrainAcc: 99.6, TrainLoss: 0.756, TestAcc: 91.2, TestLoss: 0.853

Epoch: 89, Lr:4.181203352191771e-06, TrainAcc: 99.8, TrainLoss: 0.752, TestAcc: 86.7, TestLoss: 0.878

Epoch: 90, Lr:3.972143184582182e-06, TrainAcc: 98.5, TrainLoss: 0.762, TestAcc: 87.6, TestLoss: 0.874

Epoch: 91, Lr:3.972143184582182e-06, TrainAcc: 100.0, TrainLoss: 0.751, TestAcc: 88.5, TestLoss: 0.865

Epoch: 92, Lr:3.972143184582182e-06, TrainAcc: 99.3, TrainLoss: 0.756, TestAcc: 85.0, TestLoss: 0.898

Epoch: 93, Lr:3.972143184582182e-06, TrainAcc: 99.3, TrainLoss: 0.758, TestAcc: 85.0, TestLoss: 0.873

Epoch: 94, Lr:3.972143184582182e-06, TrainAcc: 99.3, TrainLoss: 0.755, TestAcc: 85.8, TestLoss: 0.894

Epoch: 95, Lr:3.7735360253530726e-06, TrainAcc: 99.3, TrainLoss: 0.759, TestAcc: 85.8, TestLoss: 0.888

Epoch: 96, Lr:3.7735360253530726e-06, TrainAcc: 99.6, TrainLoss: 0.755, TestAcc: 86.7, TestLoss: 0.868

Epoch: 97, Lr:3.7735360253530726e-06, TrainAcc: 99.3, TrainLoss: 0.756, TestAcc: 78.8, TestLoss: 0.940

Epoch: 98, Lr:3.7735360253530726e-06, TrainAcc: 98.7, TrainLoss: 0.761, TestAcc: 85.0, TestLoss: 0.893

Epoch: 99, Lr:3.7735360253530726e-06, TrainAcc: 99.8, TrainLoss: 0.755, TestAcc: 85.0, TestLoss: 0.888

Epoch: 100, Lr:3.584859224085419e-06, TrainAcc: 98.9, TrainLoss: 0.763, TestAcc: 87.6, TestLoss: 0.866

Epoch: 101, Lr:3.584859224085419e-06, TrainAcc: 99.8, TrainLoss: 0.753, TestAcc: 86.7, TestLoss: 0.879

Epoch: 102, Lr:3.584859224085419e-06, TrainAcc: 99.3, TrainLoss: 0.758, TestAcc: 85.0, TestLoss: 0.879

Epoch: 103, Lr:3.584859224085419e-06, TrainAcc: 99.3, TrainLoss: 0.758, TestAcc: 85.0, TestLoss: 0.885

Epoch: 104, Lr:3.584859224085419e-06, TrainAcc: 98.9, TrainLoss: 0.758, TestAcc: 87.6, TestLoss: 0.871

Epoch: 105, Lr:3.4056162628811484e-06, TrainAcc: 99.8, TrainLoss: 0.755, TestAcc: 91.2, TestLoss: 0.853

Epoch: 106, Lr:3.4056162628811484e-06, TrainAcc: 99.3, TrainLoss: 0.753, TestAcc: 87.6, TestLoss: 0.872

Epoch: 107, Lr:3.4056162628811484e-06, TrainAcc: 99.8, TrainLoss: 0.755, TestAcc: 91.2, TestLoss: 0.861

Epoch: 108, Lr:3.4056162628811484e-06, TrainAcc: 99.8, TrainLoss: 0.754, TestAcc: 90.3, TestLoss: 0.859

Epoch: 109, Lr:3.4056162628811484e-06, TrainAcc: 99.8, TrainLoss: 0.753, TestAcc: 89.4, TestLoss: 0.870

Epoch: 110, Lr:3.2353354497370905e-06, TrainAcc: 99.6, TrainLoss: 0.754, TestAcc: 85.0, TestLoss: 0.878

Epoch: 111, Lr:3.2353354497370905e-06, TrainAcc: 99.3, TrainLoss: 0.760, TestAcc: 86.7, TestLoss: 0.869

Epoch: 112, Lr:3.2353354497370905e-06, TrainAcc: 99.8, TrainLoss: 0.750, TestAcc: 87.6, TestLoss: 0.870

Epoch: 113, Lr:3.2353354497370905e-06, TrainAcc: 100.0, TrainLoss: 0.752, TestAcc: 88.5, TestLoss: 0.870

Epoch: 114, Lr:3.2353354497370905e-06, TrainAcc: 99.6, TrainLoss: 0.755, TestAcc: 89.4, TestLoss: 0.865

Epoch: 115, Lr:3.073568677250236e-06, TrainAcc: 100.0, TrainLoss: 0.752, TestAcc: 88.5, TestLoss: 0.865

Epoch: 116, Lr:3.073568677250236e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 86.7, TestLoss: 0.877

Epoch: 117, Lr:3.073568677250236e-06, TrainAcc: 99.6, TrainLoss: 0.754, TestAcc: 89.4, TestLoss: 0.864

Epoch: 118, Lr:3.073568677250236e-06, TrainAcc: 99.3, TrainLoss: 0.759, TestAcc: 85.0, TestLoss: 0.894

Epoch: 119, Lr:3.073568677250236e-06, TrainAcc: 99.8, TrainLoss: 0.754, TestAcc: 87.6, TestLoss: 0.867

Epoch: 120, Lr:2.919890243387724e-06, TrainAcc: 99.6, TrainLoss: 0.755, TestAcc: 89.4, TestLoss: 0.860

Epoch: 121, Lr:2.919890243387724e-06, TrainAcc: 99.1, TrainLoss: 0.758, TestAcc: 86.7, TestLoss: 0.884

Epoch: 122, Lr:2.919890243387724e-06, TrainAcc: 100.0, TrainLoss: 0.749, TestAcc: 86.7, TestLoss: 0.879

Epoch: 123, Lr:2.919890243387724e-06, TrainAcc: 100.0, TrainLoss: 0.749, TestAcc: 87.6, TestLoss: 0.876

Epoch: 124, Lr:2.919890243387724e-06, TrainAcc: 99.8, TrainLoss: 0.749, TestAcc: 89.4, TestLoss: 0.866

Epoch: 125, Lr:2.7738957312183377e-06, TrainAcc: 99.3, TrainLoss: 0.764, TestAcc: 83.2, TestLoss: 0.895

Epoch: 126, Lr:2.7738957312183377e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 86.7, TestLoss: 0.875

Epoch: 127, Lr:2.7738957312183377e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 90.3, TestLoss: 0.859

Epoch: 128, Lr:2.7738957312183377e-06, TrainAcc: 99.8, TrainLoss: 0.752, TestAcc: 88.5, TestLoss: 0.871

Epoch: 129, Lr:2.7738957312183377e-06, TrainAcc: 99.8, TrainLoss: 0.750, TestAcc: 88.5, TestLoss: 0.866

Epoch: 130, Lr:2.6352009446574206e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 89.4, TestLoss: 0.873

Epoch: 131, Lr:2.6352009446574206e-06, TrainAcc: 99.8, TrainLoss: 0.749, TestAcc: 90.3, TestLoss: 0.861

Epoch: 132, Lr:2.6352009446574206e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 89.4, TestLoss: 0.868

Epoch: 133, Lr:2.6352009446574206e-06, TrainAcc: 99.8, TrainLoss: 0.750, TestAcc: 87.6, TestLoss: 0.867

Epoch: 134, Lr:2.6352009446574206e-06, TrainAcc: 99.6, TrainLoss: 0.754, TestAcc: 89.4, TestLoss: 0.868

Epoch: 135, Lr:2.5034408974245495e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 87.6, TestLoss: 0.873

Epoch: 136, Lr:2.5034408974245495e-06, TrainAcc: 99.6, TrainLoss: 0.754, TestAcc: 89.4, TestLoss: 0.863

Epoch: 137, Lr:2.5034408974245495e-06, TrainAcc: 100.0, TrainLoss: 0.749, TestAcc: 88.5, TestLoss: 0.860

Epoch: 138, Lr:2.5034408974245495e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 89.4, TestLoss: 0.861

Epoch: 139, Lr:2.5034408974245495e-06, TrainAcc: 99.8, TrainLoss: 0.754, TestAcc: 88.5, TestLoss: 0.853

Epoch: 140, Lr:2.378268852553322e-06, TrainAcc: 100.0, TrainLoss: 0.751, TestAcc: 91.2, TestLoss: 0.861

Epoch: 141, Lr:2.378268852553322e-06, TrainAcc: 100.0, TrainLoss: 0.755, TestAcc: 89.4, TestLoss: 0.852

Epoch: 142, Lr:2.378268852553322e-06, TrainAcc: 100.0, TrainLoss: 0.751, TestAcc: 88.5, TestLoss: 0.865

Epoch: 143, Lr:2.378268852553322e-06, TrainAcc: 99.8, TrainLoss: 0.752, TestAcc: 85.8, TestLoss: 0.872

Epoch: 144, Lr:2.378268852553322e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 85.0, TestLoss: 0.883

Epoch: 145, Lr:2.2593554099256557e-06, TrainAcc: 100.0, TrainLoss: 0.750, TestAcc: 88.5, TestLoss: 0.857

Epoch: 146, Lr:2.2593554099256557e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 89.4, TestLoss: 0.866

Epoch: 147, Lr:2.2593554099256557e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 91.2, TestLoss: 0.856

Epoch: 148, Lr:2.2593554099256557e-06, TrainAcc: 100.0, TrainLoss: 0.749, TestAcc: 88.5, TestLoss: 0.850

Epoch: 149, Lr:2.2593554099256557e-06, TrainAcc: 99.6, TrainLoss: 0.754, TestAcc: 92.0, TestLoss: 0.856

Epoch: 150, Lr:2.146387639429373e-06, TrainAcc: 99.8, TrainLoss: 0.748, TestAcc: 85.0, TestLoss: 0.883

Epoch: 151, Lr:2.146387639429373e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 89.4, TestLoss: 0.856

Epoch: 152, Lr:2.146387639429373e-06, TrainAcc: 100.0, TrainLoss: 0.750, TestAcc: 88.5, TestLoss: 0.854

Epoch: 153, Lr:2.146387639429373e-06, TrainAcc: 99.8, TrainLoss: 0.747, TestAcc: 90.3, TestLoss: 0.863

Epoch: 154, Lr:2.146387639429373e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 89.4, TestLoss: 0.849

Epoch: 155, Lr:2.039068257457904e-06, TrainAcc: 99.6, TrainLoss: 0.751, TestAcc: 87.6, TestLoss: 0.866

Epoch: 156, Lr:2.039068257457904e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 91.2, TestLoss: 0.855

Epoch: 157, Lr:2.039068257457904e-06, TrainAcc: 99.8, TrainLoss: 0.752, TestAcc: 90.3, TestLoss: 0.858

Epoch: 158, Lr:2.039068257457904e-06, TrainAcc: 99.6, TrainLoss: 0.752, TestAcc: 87.6, TestLoss: 0.867

Epoch: 159, Lr:2.039068257457904e-06, TrainAcc: 99.8, TrainLoss: 0.748, TestAcc: 88.5, TestLoss: 0.859

Epoch: 160, Lr:1.937114844585009e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 89.4, TestLoss: 0.849

Epoch: 161, Lr:1.937114844585009e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 86.7, TestLoss: 0.874

Epoch: 162, Lr:1.937114844585009e-06, TrainAcc: 99.3, TrainLoss: 0.754, TestAcc: 90.3, TestLoss: 0.859

Epoch: 163, Lr:1.937114844585009e-06, TrainAcc: 99.3, TrainLoss: 0.754, TestAcc: 90.3, TestLoss: 0.853

Epoch: 164, Lr:1.937114844585009e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 86.7, TestLoss: 0.868

Epoch: 165, Lr:1.8402591023557584e-06, TrainAcc: 99.3, TrainLoss: 0.754, TestAcc: 87.6, TestLoss: 0.861

Epoch: 166, Lr:1.8402591023557584e-06, TrainAcc: 99.8, TrainLoss: 0.750, TestAcc: 90.3, TestLoss: 0.853

Epoch: 167, Lr:1.8402591023557584e-06, TrainAcc: 99.3, TrainLoss: 0.759, TestAcc: 90.3, TestLoss: 0.854

Epoch: 168, Lr:1.8402591023557584e-06, TrainAcc: 99.6, TrainLoss: 0.753, TestAcc: 90.3, TestLoss: 0.854

Epoch: 169, Lr:1.8402591023557584e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 86.7, TestLoss: 0.869

Epoch: 170, Lr:1.7482461472379704e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 90.3, TestLoss: 0.858

Epoch: 171, Lr:1.7482461472379704e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 85.0, TestLoss: 0.870

Epoch: 172, Lr:1.7482461472379704e-06, TrainAcc: 99.8, TrainLoss: 0.749, TestAcc: 87.6, TestLoss: 0.870

Epoch: 173, Lr:1.7482461472379704e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 89.4, TestLoss: 0.853

Epoch: 174, Lr:1.7482461472379704e-06, TrainAcc: 98.2, TrainLoss: 0.764, TestAcc: 90.3, TestLoss: 0.857

Epoch: 175, Lr:1.6608338398760719e-06, TrainAcc: 99.8, TrainLoss: 0.751, TestAcc: 90.3, TestLoss: 0.849

Epoch: 176, Lr:1.6608338398760719e-06, TrainAcc: 99.3, TrainLoss: 0.753, TestAcc: 84.1, TestLoss: 0.892

Epoch: 177, Lr:1.6608338398760719e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 88.5, TestLoss: 0.866

Epoch: 178, Lr:1.6608338398760719e-06, TrainAcc: 99.1, TrainLoss: 0.758, TestAcc: 88.5, TestLoss: 0.864

Epoch: 179, Lr:1.6608338398760719e-06, TrainAcc: 100.0, TrainLoss: 0.750, TestAcc: 90.3, TestLoss: 0.861

Epoch: 180, Lr:1.577792147882268e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 89.4, TestLoss: 0.863

Epoch: 181, Lr:1.577792147882268e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 91.2, TestLoss: 0.851

Epoch: 182, Lr:1.577792147882268e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 90.3, TestLoss: 0.863

Epoch: 183, Lr:1.577792147882268e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 89.4, TestLoss: 0.855

Epoch: 184, Lr:1.577792147882268e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 90.3, TestLoss: 0.846

Epoch: 185, Lr:1.4989025404881547e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 89.4, TestLoss: 0.853

Epoch: 186, Lr:1.4989025404881547e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 88.5, TestLoss: 0.866

Epoch: 187, Lr:1.4989025404881547e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 87.6, TestLoss: 0.862

Epoch: 188, Lr:1.4989025404881547e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 86.7, TestLoss: 0.870

Epoch: 189, Lr:1.4989025404881547e-06, TrainAcc: 99.8, TrainLoss: 0.748, TestAcc: 87.6, TestLoss: 0.858

Epoch: 190, Lr:1.4239574134637468e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 90.3, TestLoss: 0.841

Epoch: 191, Lr:1.4239574134637468e-06, TrainAcc: 99.6, TrainLoss: 0.752, TestAcc: 87.6, TestLoss: 0.869

Epoch: 192, Lr:1.4239574134637468e-06, TrainAcc: 99.3, TrainLoss: 0.757, TestAcc: 88.5, TestLoss: 0.859

Epoch: 193, Lr:1.4239574134637468e-06, TrainAcc: 100.0, TrainLoss: 0.751, TestAcc: 85.0, TestLoss: 0.886

Epoch: 194, Lr:1.4239574134637468e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 92.0, TestLoss: 0.854

Epoch: 195, Lr:1.3527595427905593e-06, TrainAcc: 99.6, TrainLoss: 0.752, TestAcc: 86.7, TestLoss: 0.867

Epoch: 196, Lr:1.3527595427905593e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 90.3, TestLoss: 0.858

Epoch: 197, Lr:1.3527595427905593e-06, TrainAcc: 99.8, TrainLoss: 0.750, TestAcc: 84.1, TestLoss: 0.880

Epoch: 198, Lr:1.3527595427905593e-06, TrainAcc: 99.8, TrainLoss: 0.752, TestAcc: 87.6, TestLoss: 0.863

Epoch: 199, Lr:1.3527595427905593e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 89.4, TestLoss: 0.860

Epoch: 200, Lr:1.2851215656510314e-06, TrainAcc: 100.0, TrainLoss: 0.747, TestAcc: 87.6, TestLoss: 0.857

Epoch: 201, Lr:1.2851215656510314e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 88.5, TestLoss: 0.858

Epoch: 202, Lr:1.2851215656510314e-06, TrainAcc: 99.8, TrainLoss: 0.753, TestAcc: 87.6, TestLoss: 0.876

Epoch: 203, Lr:1.2851215656510314e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 91.2, TestLoss: 0.858

Epoch: 204, Lr:1.2851215656510314e-06, TrainAcc: 99.8, TrainLoss: 0.748, TestAcc: 90.3, TestLoss: 0.848

Epoch: 205, Lr:1.2208654873684798e-06, TrainAcc: 100.0, TrainLoss: 0.751, TestAcc: 90.3, TestLoss: 0.855

Epoch: 206, Lr:1.2208654873684798e-06, TrainAcc: 100.0, TrainLoss: 0.748, TestAcc: 90.3, TestLoss: 0.865

Epoch: 207, Lr:1.2208654873684798e-06, TrainAcc: 100.0, TrainLoss: 0.746, TestAcc: 88.5, TestLoss: 0.858

Epoch: 208, Lr:1.2208654873684798e-06, TrainAcc: 98.9, TrainLoss: 0.766, TestAcc: 88.5, TestLoss: 0.861
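The training code is not shown, but the Lr column of the log is consistent with starting at 1e-5 and multiplying by 0.95 every 5 epochs. The sketch below reproduces the printed values under that reading. It is inferred from the log only, and the function name and parameters are made up for the illustration rather than taken from the author's training loop.

```python
def lr_at(epoch, base_lr=1e-5, gamma=0.95, step=5):
    """Learning rate the log prints for a 1-based epoch number (inferred from the log)."""
    return base_lr * gamma ** (epoch // step)


for epoch in (1, 5, 10, 100, 200):
    print(epoch, lr_at(epoch))
```

By epoch 200 the rate has decayed to roughly 1.3e-6, about an eighth of the starting value, which fits the very small epoch-to-epoch changes in the later part of the log.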

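Model results

Loss

Accuracy

Summary and takeaways

After moving BN and ReLU in front of the convolutions, the model converges faster and also performs better on the test set. Compared with the original author's results, the improvement in my run is even more pronounced. My reading is that the author's model was trained with more layers, so the change mainly raised the model's upper limit, whereas my model had probably not yet converged. This suggests that the reordered BN and ReLU extract features more effectively and also give some gain in expressive power.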
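One observation about the numbers in the log: the model ends in Softmax, and TrainLoss levels off near 0.75 instead of approaching 0. If the training loop (not shown in this post) feeds the model output to nn.CrossEntropyLoss, which applies its own log-softmax, such a plateau is expected, because probabilities in [0, 1] used as logits cannot push the loss below log(1 + (C - 1) / e) for C classes. The sketch below computes that floor under this assumption. The helper names are made up for the illustration.

```python
import math


def ce_floor_on_probabilities(num_classes):
    """Lowest cross-entropy reachable when the 'logits' are softmax outputs.

    Best case: the model outputs probability 1 for the true class and 0 for
    the others, so the loss's log-softmax sees the logits (1, 0, ..., 0).
    """
    return -math.log(math.e / (math.e + (num_classes - 1)))


def ce_uniform(num_classes):
    """Cross-entropy when every class receives the same score."""
    return math.log(num_classes)


floor_4 = ce_floor_on_probabilities(4)
uniform_4 = ce_uniform(4)
print(round(floor_4, 3))    # floor for the 4 classes of this dataset
print(round(uniform_4, 3))  # value for an untrained, uniform output
```

For 4 classes the floor is about 0.744, close to the TrainLoss of roughly 0.746 in the final epochs, and the uniform value of about 1.386 is near the first-epoch loss of 1.368. If the assumption holds, creating the model with classifier_activation=None, which swaps the Softmax for Identity, would let the loss go lower when training with CrossEntropyLoss.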
