1 Basic setup

Open cmd.

Run conda env list.

Run conda activate py38.

Check nvidia-smi.

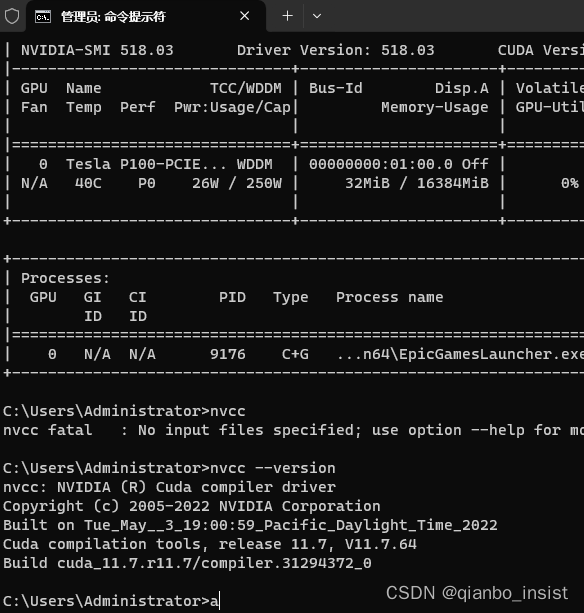

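Check nvcc. As the screenshot below shows, the CUDA version here is 11.7. To double-check, look at the CUDA installation under Program Files; seeing 11.7 there confirms it. Readers should check the version on their own machine.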

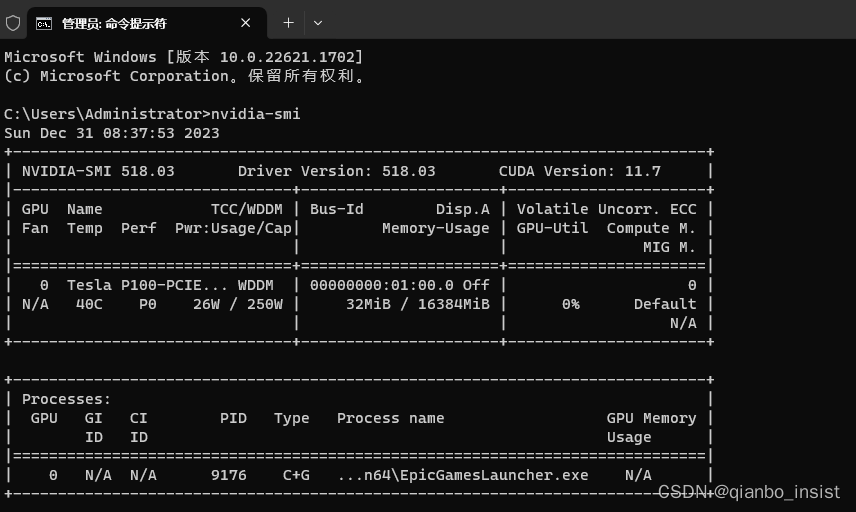
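To read the version from a script rather than from the screenshot, here is a minimal sketch. It assumes the usual nvcc --version banner, which contains a line such as "Cuda compilation tools, release 11.7, V11.7.64"; the helper names parse_cuda_release and installed_cuda_release are hypothetical.

```python
import re
import subprocess


def parse_cuda_release(nvcc_output: str):
    """Return the CUDA release (e.g. '11.7') found in nvcc's banner, or None."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release():
    """Run `nvcc --version` and parse it; returns None if nvcc is not on PATH."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, check=False
        )
    except FileNotFoundError:
        return None
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    print(installed_cuda_release())
```

If nvcc is not installed or not on PATH, the function returns None instead of raising, so it can be called safely before deciding which PyTorch build to install.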

Check nvidia-smi:

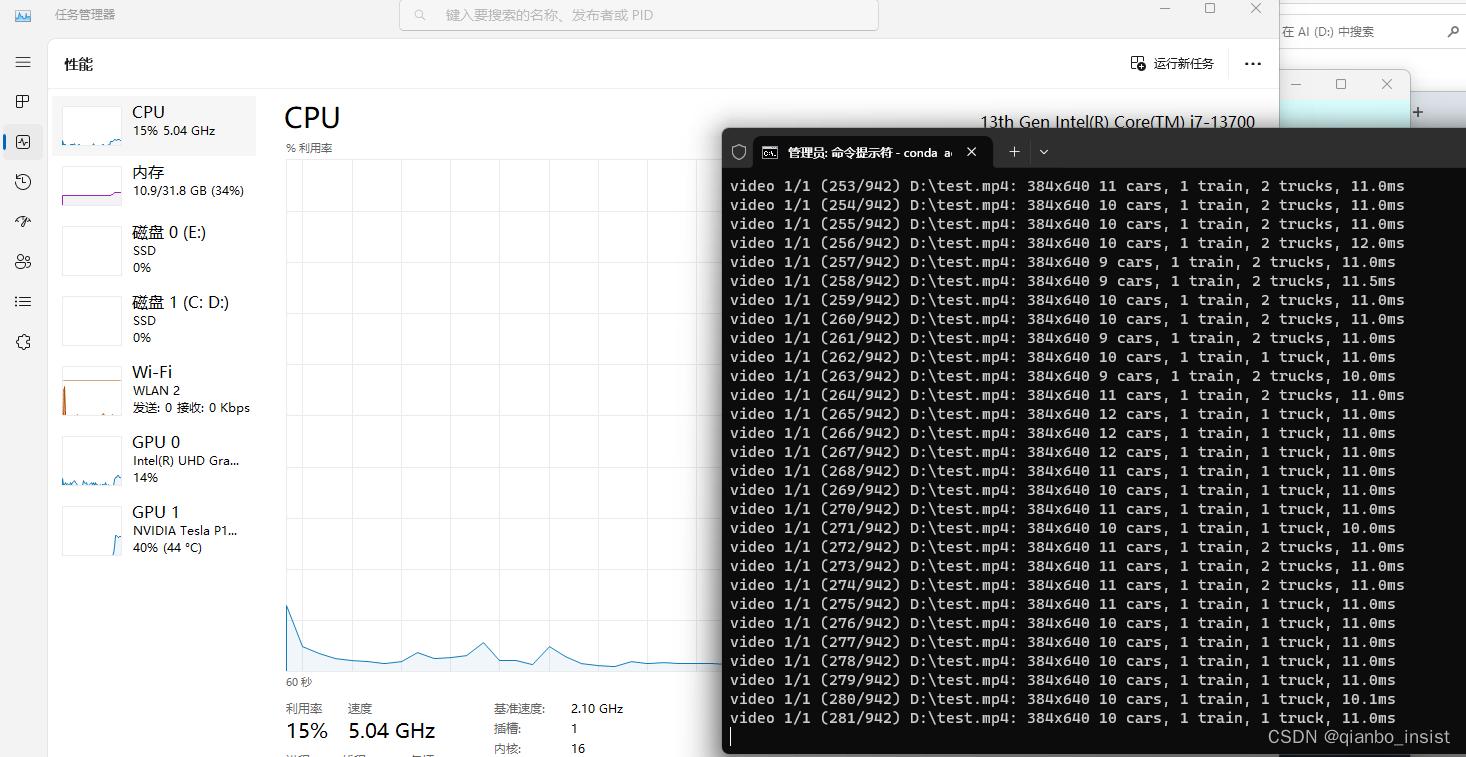

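After starting the YOLOv8 tracker, I found it extremely slow: CPU usage was very high and GPU usage was zero. Open cmd, start Python, and run:

    import torch
    torch.cuda.is_available()

The result is False.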

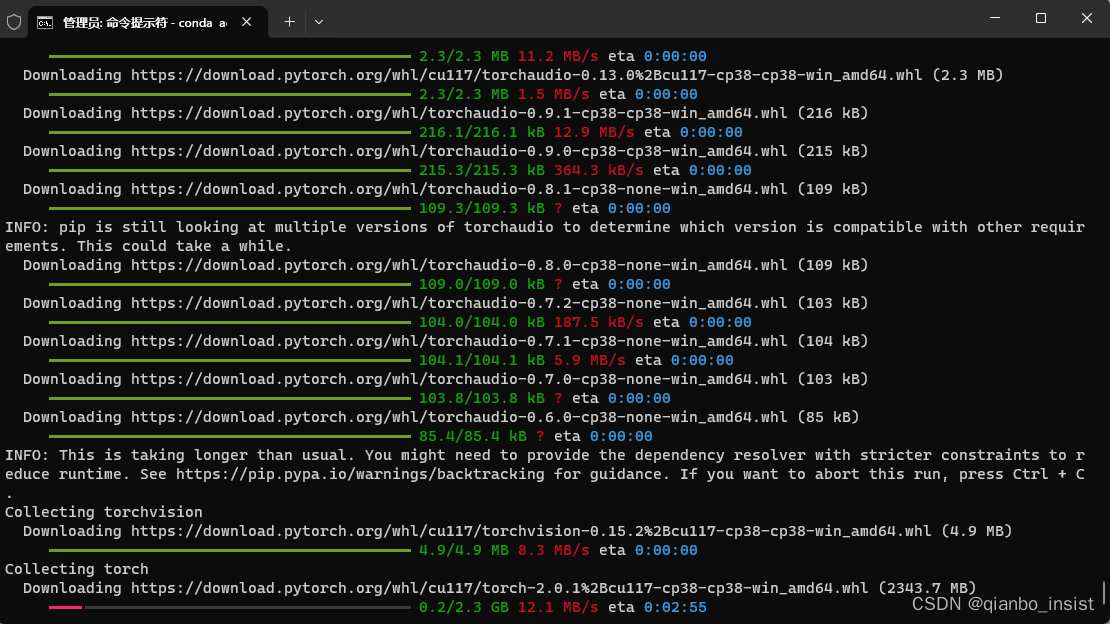

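This confirms that the installed PyTorch is the CPU-only build. Reinstall the CUDA build of PyTorch: go to the PyTorch website, copy the install command it shows, and change the CUDA version in it to the one on your machine.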
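As an illustration of what such a command typically looks like, the sketch below builds a pip command from a CUDA version string. It assumes the wheel index layout used by the commands on the PyTorch website (https://download.pytorch.org/whl/cuXYZ). The helper name pytorch_install_command is hypothetical, and not every CUDA release has a matching wheel index, so the command copied from the website remains the reference.

```python
def pytorch_install_command(cuda_release: str) -> str:
    """Build a pip command for a CUDA build of PyTorch, e.g. '11.7' -> .../whl/cu117."""
    major, minor = cuda_release.split(".")[:2]
    tag = f"cu{int(major)}{int(minor)}"
    # Assumption: a wheel index exists for this CUDA tag; verify on the PyTorch site.
    index_url = f"https://download.pytorch.org/whl/{tag}"
    return f"pip install torch torchvision --index-url {index_url}"


print(pytorch_install_command("11.7"))
```

After reinstalling, torch.cuda.is_available() should return True if the driver and the chosen build are compatible.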

Enter the command:

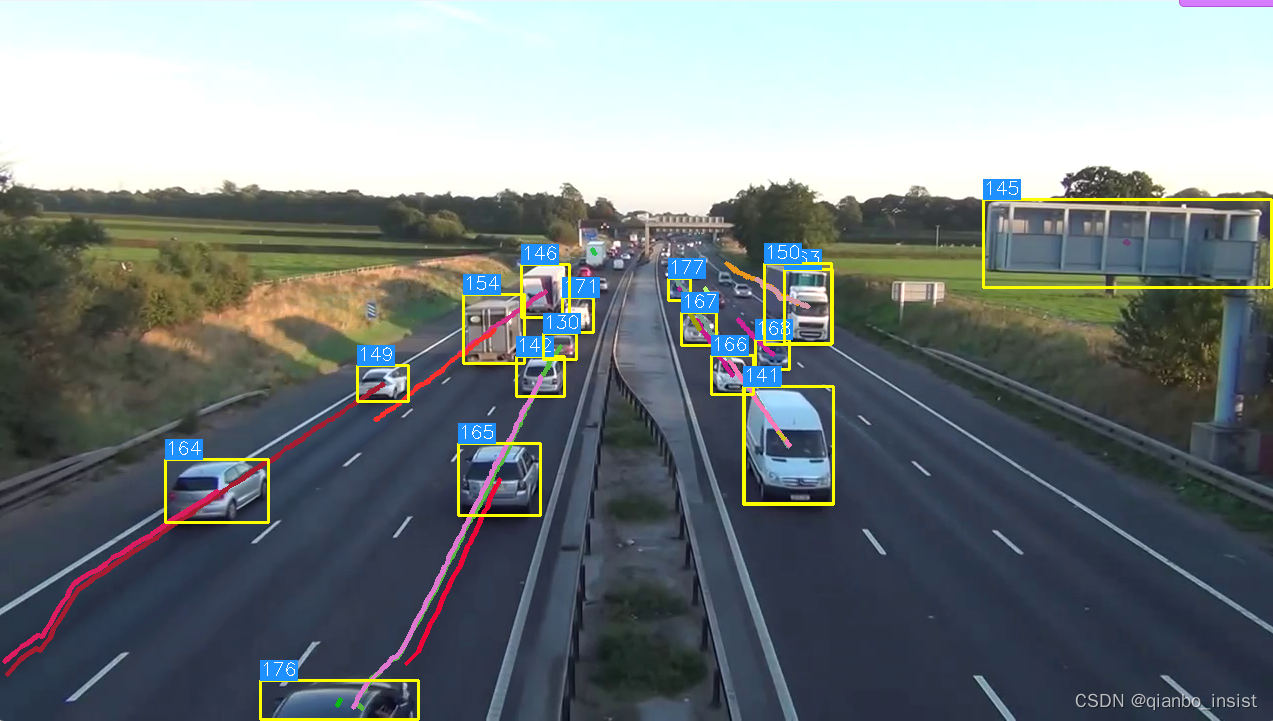

    python yolo\v8\detect\detect_and_trk.py model=yolov8s.pt source="d:\test.mp4" show=True

GPU usage rises to about 40%.

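2 Output to the web

The next task is encoding and publishing through an RTSP server. So that other machines can receive the results, we need to obtain the annotated frames in our own code, then use a real-time transport protocol to turn them into an RTP stream, making this machine an RTSP server. Tools such as VLC can then pull the encoded stream directly.

Modify the code to publish the results. First change the command to

    python yolo\v8\detect\detect_and_trk.py model=yolov8s.pt source="d:\test.mp4"

Then display the frames ourselves with OpenCV inside the code, which means adding two lines:

    cv2.imshow("qbshow", im0)
    cv2.waitKey(3)

This works and the window appears, which tells us that im0 is the frame with the boxes already drawn on it. Building on the display code, all that remains is to send the image out. We choose WebSocket for sending, although a raw socket would work just as well. That raises the question of whether Python should be the client or the server. We make Python the server so that it never needs to handle reconnecting after a dropped connection. Making Python the server has several advantages:

1. It can send images directly to the web page.

2. It can send directly to ...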

    def write_results(self, idx, preds, batch):
        p, im, im0 = batch
        log_string = ""
        if len(im.shape) == 3:
            im = im[None]  # expand for batch dim
        self.seen += 1
        im0 = im0.copy()
        if self.webcam:  # batch_size >= 1
            log_string += f'{idx}: '
            frame = self.dataset.count
        else:
            frame = getattr(self.dataset, 'frame', 0)
        # tracker
        self.data_path = p
        save_path = str(self.save_dir / p.name)  # im.jpg
        self.txt_path = str(self.save_dir / 'labels' / p.stem) + ('' if self.dataset.mode == 'image' else f'_{frame}')
        log_string += '%gx%g ' % im.shape[2:]  # print string
        self.annotator = self.get_annotator(im0)

        det = preds[idx]
        self.all_outputs.append(det)
        if len(det) == 0:
            return log_string
        for c in det[:, 5].unique():
            n = (det[:, 5] == c).sum()  # detections per class
            log_string += f"{n} {self.model.names[int(c)]}{'s' * (n > 1)}, "

        # #..................USE TRACK FUNCTION....................
        dets_to_sort = np.empty((0, 6))
        for x1, y1, x2, y2, conf, detclass in det.cpu().detach().numpy():
            dets_to_sort = np.vstack((dets_to_sort, np.array([x1, y1, x2, y2, conf, detclass])))

        tracked_dets = tracker.update(dets_to_sort)
        tracks = tracker.getTrackers()

        for track in tracks:
            [cv2.line(im0,
                      (int(track.centroidarr[i][0]), int(track.centroidarr[i][1])),
                      (int(track.centroidarr[i+1][0]), int(track.centroidarr[i+1][1])),
                      rand_color_list[track.id], thickness=3)
             for i, _ in enumerate(track.centroidarr)
             if i < len(track.centroidarr)-1]

        if len(tracked_dets) > 0:
            bbox_xyxy = tracked_dets[:, :4]
            identities = tracked_dets[:, 8]
            categories = tracked_dets[:, 4]
            draw_boxes(im0, bbox_xyxy, identities, categories, self.model.names)

        gn = torch.tensor(im0.shape)[[1, 0, 1, 0]]  # normalization gain whwh

        cv2.imshow("qbshow", im0)
        cv2.waitKey(3)
        return log_string

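2.1 Displaying in the web page

Add WebSocket server code on the Python side. The following is sample code that readers can test on their own, but applying it to the Python image-processing server requires a few changes.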

    import asyncio
    import threading
    import time

    import websockets

    CONNECTIONS = set()


    async def server_recv(websocket):
        while True:
            try:
                recv_text = await websocket.recv()
                print("recv:", recv_text)
            except websockets.ConnectionClosed as e:
                # The client closed the connection: stop reading from it and end the function
                print(e.code)
                await asyncio.sleep(0.01)
                break


    async def server_hands(websocket):
        CONNECTIONS.add(websocket)
        print(CONNECTIONS)
        try:
            await websocket.wait_closed()
        finally:
            CONNECTIONS.remove(websocket)


    def message_all(message):
        websockets.broadcast(CONNECTIONS, message)


    async def handler(websocket, path):
        # Handle a new WebSocket connection
        print("New WebSocket route is ", path)
        try:
            await server_hands(websocket)  # handshake: add the client to the connection set
            await server_recv(websocket)   # receive and process client messages
        except websockets.exceptions.ConnectionClosedError as e:
            print(f"Connection closed unexpectedly: {e}")
        finally:
            pass
        # Finished: the WebSocket connection is closed
        print("WebSocket connection closed")


    async def sockrun():
        async with websockets.serve(handler, "", 9090):
            await asyncio.Future()  # run forever


    def main_thread():
        print("main")
        asyncio.run(sockrun())

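2.2 Modifications

The required change is that the WebSocket server must not block the main thread. Although websockets is coroutine-based, it still has to run in its own thread.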

    def predict(cfg):
        init_tracker()
        random_color_list()
        cfg.model = cfg.model or "yolov8n.pt"
        cfg.imgsz = check_imgsz(cfg.imgsz, min_dim=2)  # check image size
        cfg.source = cfg.source if cfg.source is not None else ROOT / "assets"
        predictor = DetectionPredictor(cfg)
        predictor()


    if __name__ == "__main__":
        thread = threading.Thread(target=main_thread)
        thread.start()
        predict()
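With this layout the detection code runs on the main thread while the event loop runs on another. asyncio objects are generally not thread-safe, and loop.call_soon_threadsafe is the documented way to schedule work on a loop from a different thread. The sketch below shows that hand-off using only the standard library. The class LoopThread and its _deliver method are hypothetical stand-ins; in the real server, _deliver is where a call such as message_all would go.

```python
import asyncio
import threading


class LoopThread:
    """Runs an asyncio event loop in a background thread (illustrative sketch)."""

    def __init__(self):
        self.loop = asyncio.new_event_loop()
        self.received = []  # stands in for "frames broadcast to clients"
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        asyncio.set_event_loop(self.loop)
        self.loop.run_forever()

    def start(self):
        self._thread.start()

    def _deliver(self, payload: bytes):
        # Executes on the loop thread; a real server would broadcast here.
        self.received.append(payload)

    def publish(self, payload: bytes):
        # Safe to call from any thread: schedules _deliver on the loop thread.
        self.loop.call_soon_threadsafe(self._deliver, payload)

    def stop(self):
        # Callbacks run in FIFO order, so pending deliveries finish before stop.
        self.loop.call_soon_threadsafe(self.loop.stop)
        self._thread.join()
        self.loop.close()


if __name__ == "__main__":
    worker = LoopThread()
    worker.start()
    for i in range(3):
        worker.publish(b"frame%d" % i)  # called from the main (detection) thread
    worker.stop()
    print(worker.received)
```

The main thread never touches loop-owned state directly; it only schedules callbacks, which keeps the detection loop from blocking on network work.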

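Worth noting:

Many people like to encode the image as base64. This is unnecessary: converting binary data to base64 in the server costs CPU, and the payload that gets sent is considerably larger. In practice we can send the binary data directly. When sending:

    cv2.waitKey(3)
    _, encimg = cv2.imencode('.jpg', im0)
    bys = np.array(encimg).tobytes()
    message_all(bys)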

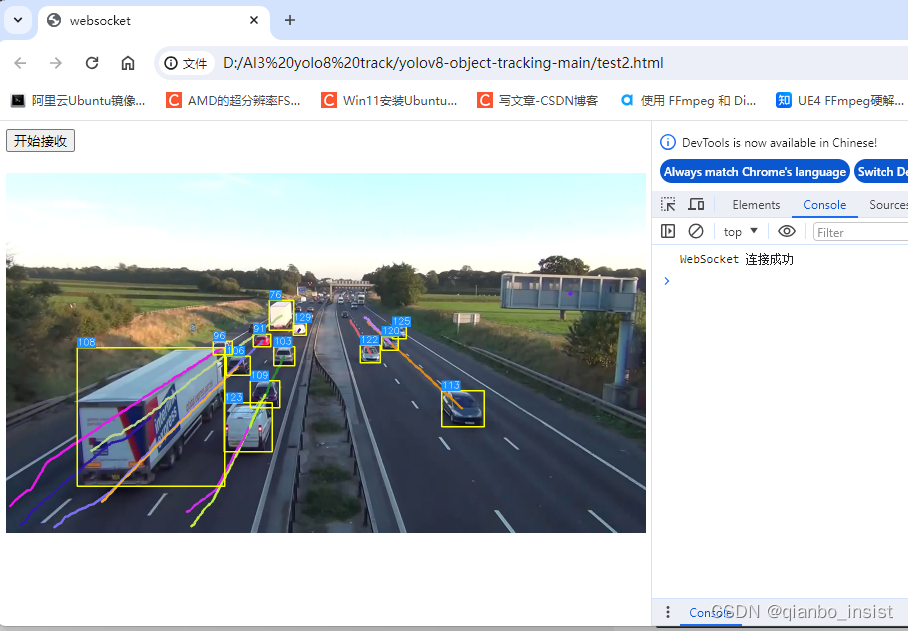
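To put a number on the base64 overhead, here is a small standard-library sketch. The 30,000-byte random payload is only a stand-in for a JPEG frame (a hypothetical size), and base64_overhead is a hypothetical helper name.

```python
import base64
import os


def base64_overhead(payload: bytes) -> float:
    """Return encoded_size / raw_size for a binary payload."""
    encoded = base64.b64encode(payload)
    return len(encoded) / len(payload)


# Stand-in for one JPEG frame: 30,000 random bytes (hypothetical size).
fake_jpeg = os.urandom(30_000)

print(f"raw:    {len(fake_jpeg)} bytes")                      # 30000
print(f"base64: {len(base64.b64encode(fake_jpeg))} bytes")    # 40000
print(f"ratio:  {base64_overhead(fake_jpeg):.3f}")            # 1.333
```

Base64 emits 4 output characters for every 3 input bytes, so every frame grows by about one third on the wire, in addition to the encoding and decoding work on both ends.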

Web test code:

    <!DOCTYPE html>
    <html lang="en">
    <head>
        <meta charset="UTF-8">
        <meta http-equiv="X-UA-Compatible" content="IE=edge">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <title>websocket</title>
    </head>
    <body>
        <div>
            <button onclick="connecteClient()">Start receiving</button>
        </div>
        <br>
        <div>
            <img src="" id="python_img" alt="websock" style="text-align:left; width: 640px; height: 360px;">
        </div>
        <script>
            function connecteClient() {
                var ws = new WebSocket("ws://127.0.0.1:9090");
                ws.onopen = function () {
                    console.log("WebSocket connected");
                };
                ws.onmessage = function (evt) {
                    var received_msg = evt.data;
                    blobToDataURI(received_msg, function (result) {
                        document.getElementById("python_img").src = result;
                    })
                };
                ws.onclose = function () {
                    console.log("Connection closed...");
                };
            }

            function blobToDataURI(blob, callback) {
                var reader = new FileReader();
                reader.readAsDataURL(blob);
                reader.onload = function (e) {
                    callback(e.target.result);
                }
            }
        </script>
    </body>
    </html>

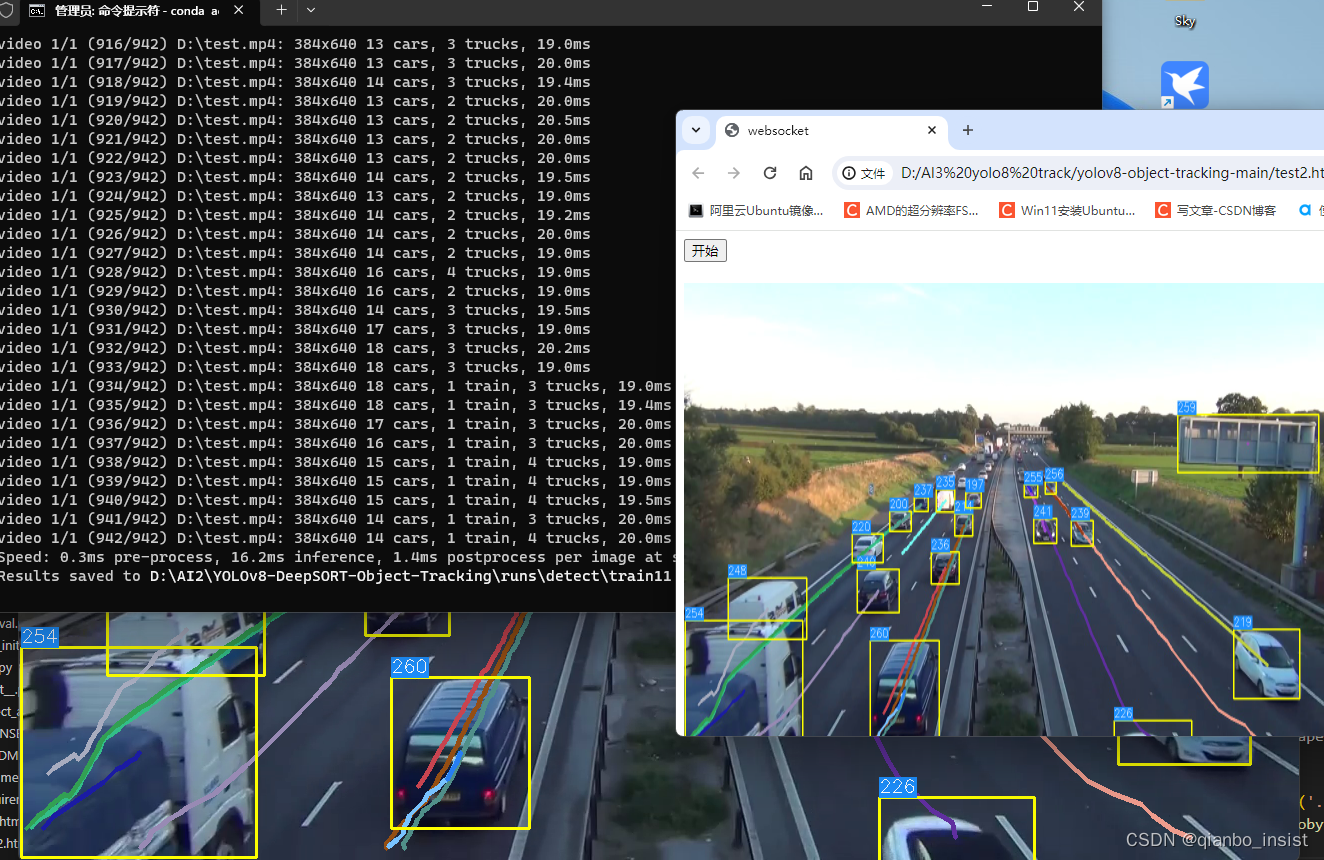

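Once the binary data has been received it is displayed directly, with no base64 encoding on the server. The screenshot shows that the web page receives the JPEG images and displays them correctly.

message_all is a broadcast: every WebSocket client that connects gets a copy. This means the frames can be sent not only to the web page but also to an RTSP server. The RTSP server connects, takes each JPEG, decodes it to RGB24, and then encodes it as H.264, so that VLC can connect to the RTSP server and pull the RTSP stream.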

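3 RTSP server: encoding and outputting H.264

Our RTSP server is written in C++. To speed things up it uses shared memory instead of a WebSocket client, although a WebSocket client would also work. Since this step involves decoding and then encoding for output, and may need hardware encoding, we did it this way for efficiency, and it is also simpler. The drawback is that the RTSP server can only run on the same machine. We start by writing an H264Frame class. H.265 works the same way and can be swapped in later. For now the code runs on Windows and will be revised once it is complete. Note that the following code runs only on Windows.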

    class H264Frame : public TThreadRunable
    {
        const wchar_t* sharedMemoryMutex = L"shared_memory_mutex";
        const wchar_t* sharedMemoryName = L"shared_memory";
        LPVOID v_lpBase = NULL;
        HANDLE v_hMapFile = NULL;
        HANDLE m_shareMutex = NULL;
        std::shared_ptr<xop::RtspServer> v_server;
        std::string v_livename;
        std::wstring v_pathname;
        std::wstring v_pathmutex_name;
        int v_fps = 10;
        Encoder v_encoder;
        xop::MediaSessionId v_id;
        int v_init = 0;
        int v_quality = 28;

    public:
        H264Frame(std::shared_ptr<xop::RtspServer> s, const char* livename,
                  const wchar_t* pathname, xop::MediaSessionId session_id)
        {
            v_server = s;
            v_livename = livename;
            v_pathname = pathname;
            if (pathname != NULL)
            {
                v_pathmutex_name = pathname;
                v_pathmutex_name += L"_mutex";
            }
            v_id = session_id;
        }

        ~H264Frame() {}

        bool Open()
        {
            if (v_pathname.empty())
                v_hMapFile = OpenFileMappingW(FILE_MAP_ALL_ACCESS, NULL, sharedMemoryName);
            else
                v_hMapFile = OpenFileMappingW(FILE_MAP_ALL_ACCESS, NULL, v_pathname.c_str());
            if (v_hMapFile == NULL)
            {
                //printf(" Waiting shared memory creation......\n");
                return false;
            }
            return true;
        }

        void Close()
        {
            if (m_shareMutex != NULL)
                ReleaseMutex(m_shareMutex);
            if (v_hMapFile != NULL)
                CloseHandle(v_hMapFile);
        }

        bool IsOpened() const
        {
            return (v_hMapFile != NULL);
        }

        void sendFrame(uint8_t* data, int size, int sessionid)
        {
            xop::AVFrame videoFrame = { 0 };
            videoFrame.type = 0;
            videoFrame.size = size; // sizeofdata; // frame_size;
            videoFrame.timestamp = xop::H264Source::GetTimestamp();
            //videoFrame.notcopy = data;
            videoFrame.buffer.reset(new uint8_t[videoFrame.size]);
            std::memcpy(videoFrame.buffer.get(), data, videoFrame.size);
            v_server->PushFrame(sessionid, xop::channel_0, videoFrame);
        }

        int ReadFrame(int sessionid)
        {
            if (v_hMapFile)
            {
                v_lpBase = MapViewOfFile(v_hMapFile, FILE_MAP_ALL_ACCESS, 0, 0, 0);
                unsigned char* pMemory = (unsigned char*)v_lpBase;
                // Header layout: width (4 bytes), height (4 bytes), frame size (4 bytes)
                int w = 0, h = 0;
                int frame_size = 0;
                std::memcpy(&w, pMemory, 4);
                std::memcpy(&h, pMemory + 4, 4);
                std::memcpy(&frame_size, pMemory + 8, 4);
                //printf("read the width %d height %d framesize %d\n", imageWidth,imageHeight, frame_size);
                uint8_t* rgb = pMemory + 12;
                if (v_init == 0)
                {
                    v_encoder.Encoder_Open(v_fps, w, h, w, h, v_quality);
                    v_init = 1;
                }
                v_encoder.RGB2YUV(rgb, w, h);
                AVPacket* pkt = v_encoder.EncodeVideo();
                //while (!*framebuf++);
                if (pkt != NULL) {
                    uint8_t* framebuf = pkt->data;
                    frame_size = pkt->size;
                    uint8_t* spsStart = NULL;
                    int spsLen = 0;
                    uint8_t* ppsStart = NULL;
                    int ppsLen = 0;
                    uint8_t* seStart = NULL;
                    int seLen = 0;
                    uint8_t* frameStart = 0; // address of the key frame or non-key frame
                    int frameLen = 0;
                    AnalyseNalu_no0x((const uint8_t*)framebuf, frame_size,
                                     &spsStart, spsLen, &ppsStart, ppsLen,
                                     &seStart, seLen, &frameStart, frameLen);
                    if (spsStart != NULL && ppsStart != NULL)
                    {
                        sendFrame(spsStart, spsLen, sessionid);
                        sendFrame(ppsStart, ppsLen, sessionid);
                    }
                    if (frameStart != NULL)
                    {
                        sendFrame(frameStart, frameLen, sessionid);
                    }
                    av_packet_free(&pkt);
                }
                UnmapViewOfFile(pMemory);
            }
            return -1;
        }

        void Run()
        {
            m_shareMutex = CreateMutexW(NULL, false, sharedMemoryMutex/*v_pathmutex_name.c_str()*/);
            v_encoder.Encoder_Open(10, 4096, 1080, 4096, 1080, 27);
            while (1)
            {
                if (Open() == true)
                {
                    break;
                }
                else
                {
                    printf("can not open share mem error\n");
                    Sleep(3000);
                }
            }
            printf("wait ok\n");
            // check whether the parameters have changed
            // restart
            int64_t m_start_clock = 0;
            float delay = 1000000.0f / (float)(v_fps); // microseconds per frame
            // merged rgb
            uint8_t* rgb = NULL;
            uint8_t* cam = NULL;
            int64_t start_timestamp = av_gettime_relative(); // GetTimestamp32();
            while (1)
            {
                if (IsStop())
                    break;
                // fetch one frame
                //rgb =
                if (v_hMapFile)
                {
                    //printf("start to lock sharemutex\n");
                    WaitForSingleObject(m_shareMutex, INFINITE);
                    //printf("read frame\n");
                    ReadFrame(v_id);
                    ReleaseMutex(m_shareMutex);
                }
                else
                {
                    printf("Shared memory handle error\n");
                    break;
                }
                int64_t tnow = av_gettime_relative(); // GetTimestamp32();
                int64_t total = tnow - start_timestamp; // total elapsed time
                int64_t fix_consume = ++m_start_clock * delay; // scheduled time for this frame
                if (total < fix_consume) {
                    int64_t diff = fix_consume - total;
                    if (diff > 0) { // ahead of schedule: sleep for the difference
                        std::this_thread::sleep_for(std::chrono::microseconds(diff));
                    }
                }
            }
            if (v_init == 0)
            {
                v_encoder.Encoder_Close();
                v_init = 1;
            }
        }
    };

    int main(int argc, char **argv)
    {
        std::string suffix = "live";
        std::string ip = "127.0.0.1";
        std::string port = "8554";
        std::string rtsp_url = "rtsp://" + ip + ":" + port + "/" + suffix;

        std::shared_ptr<xop::EventLoop> event_loop(new xop::EventLoop());
        std::shared_ptr<xop::RtspServer> server = xop::RtspServer::Create(event_loop.get());
        if (!server->Start("0.0.0.0", atoi(port.c_str()))) {
            printf("RTSP Server listen on %s failed.\n", port.c_str());
            return 0;
        }

    #ifdef AUTH_CONFIG
        server->SetAuthConfig("-_-", "admin", "12345");
    #endif

        xop::MediaSession *session = xop::MediaSession::CreateNew("live");
        session->AddSource(xop::channel_0, xop::H264Source::CreateNew());
        //session->StartMulticast();
        session->AddNotifyConnectedCallback([] (xop::MediaSessionId sessionId, std::string peer_ip, uint16_t peer_port) {
            printf("RTSP client connect, ip=%s, port=%hu \n", peer_ip.c_str(), peer_port);
        });
        session->AddNotifyDisconnectedCallback([](xop::MediaSessionId sessionId, std::string peer_ip, uint16_t peer_port) {
            printf("RTSP client disconnect, ip=%s, port=%hu \n", peer_ip.c_str(), peer_port);
        });

        xop::MediaSessionId session_id = server->AddSession(session);
        H264Frame h264frame(server, suffix.c_str(), L"shared_memory", session_id);
        //std::thread t1(SendFrameThread, server.get(), session_id, &h264_file);
        //t1.detach();

        std::cout << "Play URL: " << rtsp_url << std::endl;
        h264frame.Start();
        while (1) {
            xop::Timer::Sleep(100);
        }
        h264frame.Join();
        getchar();
        return 0;
    }
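The source of AnalyseNalu_no0x is not shown, but from how its outputs are used it appears to locate the SPS, PPS, and frame NAL units inside the encoder's packet and return them without start codes. As a rough Python sketch of that idea (not the author's implementation): an H.264 Annex B stream separates NAL units with 00 00 01 or 00 00 00 01 start codes, and the low 5 bits of the first byte give the unit type (7 = SPS, 8 = PPS, 5 = IDR slice, 1 = non-IDR slice). The function names below are hypothetical.

```python
import re

# Annex B start codes: try the 4-byte form first so its leading zero is consumed too.
START_CODE = re.compile(rb"\x00\x00\x00\x01|\x00\x00\x01")

NAL_SLICE, NAL_IDR, NAL_SPS, NAL_PPS = 1, 5, 7, 8


def split_annexb(stream: bytes):
    """Split an Annex B byte stream into NAL units with start codes removed."""
    return [nal for nal in START_CODE.split(stream) if nal]


def nal_type(nal: bytes) -> int:
    """The NAL unit type is the low 5 bits of the first header byte."""
    return nal[0] & 0x1F


def classify(stream: bytes):
    """Pick out SPS, PPS, and slice NAL units from one encoded packet."""
    found = {"sps": None, "pps": None, "frames": []}
    for nal in split_annexb(stream):
        kind = nal_type(nal)
        if kind == NAL_SPS:
            found["sps"] = nal
        elif kind == NAL_PPS:
            found["pps"] = nal
        elif kind in (NAL_SLICE, NAL_IDR):
            found["frames"].append(nal)
    return found


# Tiny hand-made packet: SPS, PPS, then an IDR slice (payload bytes are dummies).
packet = (b"\x00\x00\x00\x01\x67\x42\x00\x1f"
          b"\x00\x00\x00\x01\x68\xce\x3c\x80"
          b"\x00\x00\x01\x65\x88\x84")
print(classify(packet))
```

This sketch does not handle trailing zero padding before a start code; the sample packet is chosen to avoid that case.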

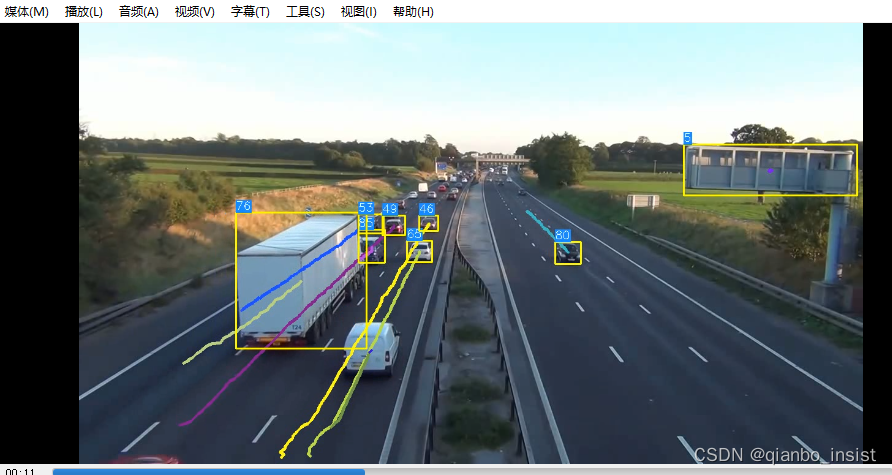
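The Run loop above paces its output against an absolute schedule: frame n is due at start + n / fps, and the loop sleeps only for the time remaining until that deadline, so per-frame processing time does not accumulate as drift. A minimal Python sketch of the same pacing idea follows; the names sleep_needed and run_paced are hypothetical.

```python
import time


def sleep_needed(start: float, frames_done: int, fps: float, now: float) -> float:
    """Seconds to sleep so that frame number `frames_done` ends on schedule."""
    deadline = start + frames_done / fps
    return max(0.0, deadline - now)


def run_paced(process_frame, fps: float, frame_count: int) -> float:
    """Call process_frame frame_count times at roughly fps; return elapsed seconds."""
    start = time.monotonic()
    for done in range(1, frame_count + 1):
        process_frame()
        # Sleep only for the remainder of this frame's slot; if we are late, do not sleep.
        time.sleep(sleep_needed(start, done, fps, time.monotonic()))
    return time.monotonic() - start


if __name__ == "__main__":
    elapsed = run_paced(lambda: None, fps=10, frame_count=5)
    print(f"5 frames at 10 fps took about {elapsed:.2f} s")
```

Sleeping a fixed 1 / fps after every frame would instead add the processing time to each period and slowly fall behind the target rate.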

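Once this is done, start the Python server, start the RTSP server, and finally open VLC to view the stream.

This completes sharing memory from the Python side, which uses PyTorch, YOLO, and so on, to C++.

An example of using shared memory on the Python side: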

    import mmap
    import contextlib
    import time

    while True:
        with contextlib.closing(mmap.mmap(-1, 25, tagname='test', access=mmap.ACCESS_READ)) as m:
            s = m.read(1024)  # .replace('\x00', '')
            print(s)
        time.sleep(1)

Readers can complete the remaining code on their own.
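As a starting point for that remaining code, here is a sketch of the buffer layout that ReadFrame in the C++ class expects: three 32-bit integers (width, height, frame size) followed by the pixel data at offset 12. It assumes little-endian integers, as on x86 Windows, and 3 bytes per pixel. The function names are hypothetical, and neither the channel order the encoder expects nor the named mutex is handled here.

```python
import struct

# Header read by the C++ side: width, height, frame_size as 32-bit little-endian ints.
HEADER = struct.Struct("<iii")


def pack_frame(width: int, height: int, rgb: bytes) -> bytes:
    """Build the shared-memory payload: 12-byte header followed by raw pixels."""
    expected = width * height * 3  # assumption: 3 bytes per pixel (24-bit)
    if len(rgb) != expected:
        raise ValueError(f"expected {expected} bytes of pixel data, got {len(rgb)}")
    return HEADER.pack(width, height, len(rgb)) + rgb


def unpack_frame(buf: bytes):
    """Inverse of pack_frame, mirroring the three memcpy calls in ReadFrame."""
    width, height, size = HEADER.unpack_from(buf, 0)
    pixels = bytes(buf[HEADER.size:HEADER.size + size])
    return width, height, pixels


if __name__ == "__main__":
    payload = pack_frame(2, 1, bytes(range(6)))  # a 2x1 dummy image
    print(len(payload), unpack_frame(payload))
    # On Windows the payload could then be written into a named mapping, e.g.
    #   m = mmap.mmap(-1, len(payload), tagname="shared_memory"); m.write(payload)
    # (the tag name here is assumed to match the name the C++ side opens).
```

With OpenCV, the pixel bytes for a frame such as im0 can be obtained with im0.tobytes(); whether a color conversion is needed first depends on what the encoder's RGB2YUV expects.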
